[Hardware][Nvidia] Enable support for Pascal GPUs #4290

|

Looks reasonable to me! It would be worth noting how this affects the size of the wheel. FYI the last release's wheel is already reaching ~100MB https://pypi.org/project/vllm/#files |

|

It will definitely enlarge wheel size, so we will not officially support it. We are limited by pypi package size of 100MB: pypi/support#3792 . |

|

Hey @youkaichao, I wasn't aware of this pypi package size limit - I know some packages on pypi that are much larger than 100MB. For instance, how does torch have wheels larger than 700MB? https://pypi.org/project/torch/#files

|

|

pytorch has approval from pypi. our request on vllm is not approved. actually no response yet. |

|

@cduk Thanks for sharing this. I know Pascal is on the last rung of the CUDA ladder, but it would be good to offer this up as an option for older Pascal GPUs. Until the wheel size issue is fixed and this PR can be reconsidered, here is a script that adds Pascal support:

# Download and add Pascal Support

git clone https://github.com/vllm-project/vllm.git

cd vllm

./pascal.sh

# You can now build from source with Pascal GPU support:

pip install -e .

# or build the Docker image with:

DOCKER_BUILDKIT=1 docker build . --target vllm-openai --tag vllm/vllm-openai

pascal.sh

#!/bin/bash

#

# This script adds Pascal GPU support to vLLM by adding 6.0, 6.1 and 6.2

# GPU architectures to the build files CMakeLists.txt and Dockerfile

#

# Ask user for confirmation

read -p "This script will add Pascal GPU support to vLLM. Continue? [y/N] " -n 1 -r

echo

if [[ ! $REPLY =~ ^[Yy]$ ]]; then

echo "Exiting..."

exit 1

fi

echo

echo "Adding Pascal GPU support..."

# Update CMakeLists.txt and Dockerfile

echo " - Updating CMakeLists.txt"

cuda_supported_archs="6.0;6.1;6.2;7.0;7.5;8.0;8.6;8.9;9.0"

sed -i.orig "s/set(CUDA_SUPPORTED_ARCHS \"7.0;7.5;8.0;8.6;8.9;9.0\")/set(CUDA_SUPPORTED_ARCHS \"$cuda_supported_archs\")/g" CMakeLists.txt

echo " - Updating Dockerfile"

torch_cuda_arch_list="6.0 6.1 6.2 7.0 7.5 8.0 8.6 8.9 9.0+PTX"

sed -i.orig "s/ARG torch_cuda_arch_list='7.0 7.5 8.0 8.6 8.9 9.0+PTX'/ARG torch_cuda_arch_list='$torch_cuda_arch_list'/g" Dockerfile

cat <<EOF

You can now build from source with Pascal GPU support:

pip install -e .

Or build the Docker image with:

DOCKER_BUILDKIT=1 docker build . --target vllm-openai --tag vllm/vllm-openai

EOF
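One caveat with the `sed` calls in pascal.sh: if the upstream arch string has changed, `sed` matches nothing and exits quietly, leaving the build files untouched. A minimal Python sketch of the same CMakeLists.txt substitution that reports whether it matched might look like this. The name `add_pascal_archs` is hypothetical, and the two arch strings are copied from the script; 6.2 is kept only to mirror it.

```python
from __future__ import annotations

# Hypothetical helper mirroring the CMakeLists.txt edit in pascal.sh.
OLD_ARCHS = 'set(CUDA_SUPPORTED_ARCHS "7.0;7.5;8.0;8.6;8.9;9.0")'
NEW_ARCHS = 'set(CUDA_SUPPORTED_ARCHS "6.0;6.1;6.2;7.0;7.5;8.0;8.6;8.9;9.0")'


def add_pascal_archs(cmake_text: str) -> tuple[str, bool]:
    """Return (patched_text, changed).

    changed is False when the expected arch line was not found, which is
    the case that sed would silently ignore.
    """
    if OLD_ARCHS not in cmake_text:
        return cmake_text, False
    return cmake_text.replace(OLD_ARCHS, NEW_ARCHS), True


if __name__ == "__main__":
    sample = "project(vllm)\n" + OLD_ARCHS + "\n"
    patched, changed = add_pascal_archs(sample)
    print("patched" if changed else "arch line not found, nothing changed")
```

Checking the `changed` flag before building avoids compiling for a long time only to find that the Pascal architectures were never added.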

|

Thanks for explaining the wheelsize issue. |

|

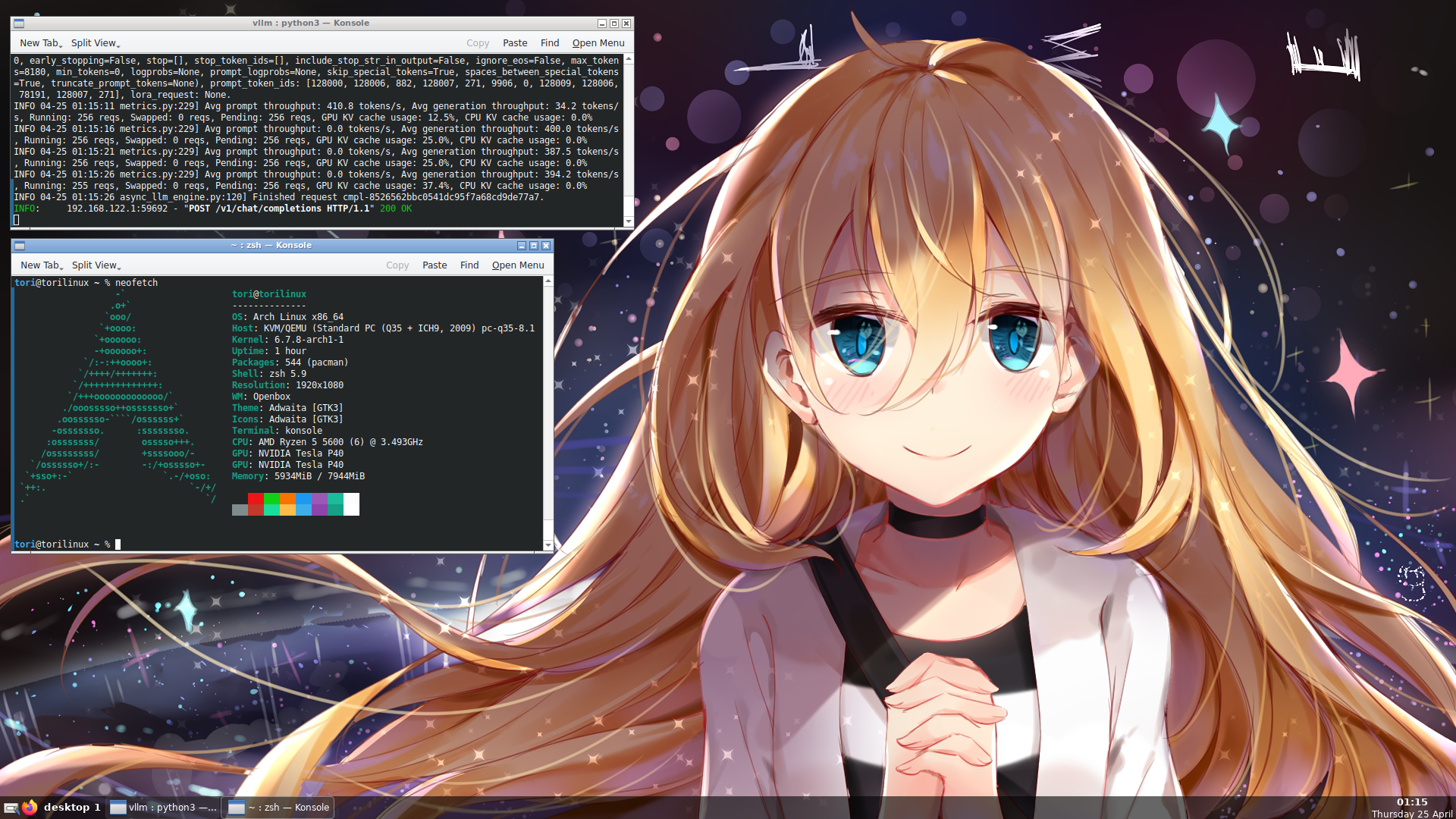

I really appreciate the effort to add Pascal support to the vLLM main branch. As someone who uses vLLM with Pascal GPUs, I want to add a few comments on this.

First, I think the 6.2 compute capability can be excluded to reduce the wheel size a bit, since it's a Tegra/Jetson/DRIVE CC. Other Tegra/Jetson/DRIVE CCs like 7.2 and 8.7 are not supported, so 6.2 support can be excluded too.

Support table

Secondly, triton (used by pytorch) needs a patch as well.

Crash log

P40 performance with vLLM

As a side note, I get ~500 t/s when batching 256 simultaneous requests on a single P40 with Llama 3 8B. (My P40s are usually power-limited to 125W, so the screenshot shows ~400 t/s.)
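The suggestion to drop 6.2 can be sketched as a small filter over the arch list. This is an illustration only: `build_arch_list` and `is_buildable` are hypothetical names, and the base list is the one from the pascal.sh script earlier in the thread.

```python
from __future__ import annotations

# Arch list from pascal.sh (CMake form, without the +PTX suffix).
SCRIPT_ARCHS = ["6.0", "6.1", "6.2", "7.0", "7.5", "8.0", "8.6", "8.9", "9.0"]

# Compute capabilities named in the comment as Tegra/Jetson/DRIVE parts.
EMBEDDED_ARCHS = {"6.2", "7.2", "8.7"}


def build_arch_list(archs: list[str]) -> list[str]:
    """Drop embedded-only compute capabilities, keeping the original order."""
    return [a for a in archs if a not in EMBEDDED_ARCHS]


def is_buildable(capability: tuple[int, int], archs: list[str]) -> bool:
    """Check whether a (major, minor) capability has an exact match in archs."""
    major, minor = capability
    return f"{major}.{minor}" in archs


if __name__ == "__main__":
    archs = build_arch_list(SCRIPT_ARCHS)
    print(";".join(archs))              # CMake-style list
    print(is_buildable((6, 1), archs))  # the P40 is compute capability 6.1
    print(is_buildable((6, 2), archs))
```

On a machine with PyTorch installed, `torch.cuda.get_device_capability()` returns a `(major, minor)` tuple that could be passed as `capability`.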

What quantization are you using? I get 15 t/s for 7B at both FP16 and Q8 (llama.cpp Q8 performance is higher at 25 tok/s). vLLM Q4 performance is bad on the P40 due to FP16 code paths and the P40's terrible FP16 performance: I get maybe 3 tok/s with a 14B Q4 model with vLLM, compared to llama.cpp, which can get 19 tok/s. I need to check the code to see if performance can be increased by avoiding FP16 calculations (upscaling to FP32). EDIT: all performance figures are for a P40 @ 125W power limit.

|

@cduk All of the following is with powerlimit = 125W unless otherwise noted.

vLLM float16 commandline

vLLM float16 performance: 400 t/s

vLLM float16 commandline, tp=2

vLLM float16 performance, tp=2: 650! t/s

vLLM float16 performance, tp=2, powerlimit = 250W: 700! t/s

vLLM float32 commandline, tp=2

vLLM float32 performance, tp=2: 500 t/s

Client

Speaking of llama.cpp, fp16 (right after conversion) and Q8_0. For example, Q8_0:

llama.cpp Q8_0 performance

|

I found that

Backtrace of second error (not very useful, but it shows that it's related to triton): sasha0552/triton#1

Also @cduk, in case you didn't notice, you closed the PR with that force push because the branches diverged. You should create a new PR, I think (as far as I know, GitHub doesn't allow you to reopen a PR in this case, even if you force push it again with the correct base branch).

|

I can open a new PR as a placeholder in the hopes that the >100MB request is granted. I'll only add 6.0 and 6.1, as you mention @cduk . Also, I do see that pytorch is now only supporting sm_60. Pascal Architecture

@sasha0552 I'm running on 4 x P100's on CUDA 12.2 with context prompts up to 24k tokens without issue (could get closer to 32k but haven't tried).

Average TPS across concurrent 10 threads: 208.3 - Individual Threads: Min TPS: 20.8, Max TPS: 20.8 (#963)

I'm using docker images and running with:

# build

DOCKER_BUILDKIT=1 docker build . --target vllm-openai --tag vllm-openai --no-cache

# run

docker run -d \

--shm-size=10.24gb \

--gpus '"device=0,1,2,3"' \

-v /data/models:/root/.cache/huggingface \

--env "HF_TOKEN=xyz" \

-p 8000:8000 \

--restart unless-stopped \

--name vllm-openai \

vllm-openai \

--host 0.0.0.0 \

--model=mistralai/Mistral-7B-Instruct-v0.1 \

--enforce-eager \

--dtype=float \

--gpu-memory-utilization 0.95 \

--tensor-parallel-size=4

|

@jasonacox you don't run with the |

You're right! I missed that, I'm sorry about that. I have limited memory headroom on these P100's so I wasn't optimizing for cache, just concurrent sessions. Also, I'm running Mistral vs Llama-3. I modified your script to try to get the tokens per second without having to look at the vLLM logs/stats and iterate through different concurrent threads. Please check my math.

EDIT: Updated the docker run and started the load test with 1 thread:

docker run -d \

--gpus all \

--shm-size=10.24gb \

-v /data/models:/root/.cache/huggingface \

-p 8000:8000 \

--restart unless-stopped \

--name $CONTAINER \

vllm-openai \

--host 0.0.0.0 \

--model=mistralai/Mistral-7B-Instruct-v0.1 \

--dtype=float \

--worker-use-ray \

--gpu-memory-utilization 0.95 \

--disable-log-stats \

--tensor-parallel-size=4

loadtest.py

On the 4 x Tesla P100 (250W) system (no cache):

Threads: 1 - Total TPS: 28.76 - Average Thread TPS: 28.77
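Since the loadtest.py source is not shown here, the following is only a sketch of how the two reported figures could be computed. It assumes that total TPS is all generated tokens divided by the wall-clock time of the run, and that average thread TPS is the mean of each thread's own tokens divided by its own duration. `ThreadResult` and `summarize` are hypothetical names, not taken from the actual script.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ThreadResult:
    tokens: int      # completion tokens generated for this thread
    seconds: float   # time this thread spent waiting on its requests


def summarize(results: list[ThreadResult], wall_seconds: float) -> tuple[float, float]:
    """Return (total_tps, average_thread_tps).

    total_tps uses wall-clock time for the whole run, so it reflects
    aggregate throughput; average_thread_tps is what one client sees.
    """
    if not results or wall_seconds <= 0:
        raise ValueError("need at least one result and a positive wall time")
    if any(r.seconds <= 0 for r in results):
        raise ValueError("every thread needs a positive duration")
    total_tps = sum(r.tokens for r in results) / wall_seconds
    per_thread = [r.tokens / r.seconds for r in results]
    return total_tps, sum(per_thread) / len(per_thread)


if __name__ == "__main__":
    # Two threads, each producing 100 tokens in 10 s, running concurrently.
    demo = [ThreadResult(100, 10.0), ThreadResult(100, 10.0)]
    total, average = summarize(demo, wall_seconds=10.0)
    print(f"Total TPS: {total:.2f} - Average Thread TPS: {average:.2f}")
```

Under these definitions the two numbers should nearly coincide for a single thread, which is consistent with the 1-thread result above (28.76 vs 28.77).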

|

@jasonacox As for your code, it looks good. I downloaded mistral to test it on the P40.

1x Tesla P40 (250W, `--dtype float16`):
Threads: 8 - Total TPS: 25.63 - Average Thread TPS: 4.96

2x Tesla P40 (250W, `--dtype float32 --tensor-parallel-size 2`):
Threads: 8 - Total TPS: 41.81 - Average Thread TPS: 7.81

2x Tesla P40 (250W, `--dtype float16 --tensor-parallel-size 2`):
Threads: 1 - Total TPS: 17.15 - Average Thread TPS: 17.16

Not too bad, I guess (considering you have twice as much computing power). vLLM (or maybe pytorch) definitely considers the P40 capabilities.

|

I'm getting an error trying to activate It seems to be an issue with mistral: #3781 - Are you using Llama-3? |

|

Yes, I am using Llama-3. Can you test it or mistralai/Mistral-7B-Instruct-v0.2? In v0.2, there is no sliding window, instead there is native 32k context. |

|

@sasha0552 Does mistralai/Mistral-7B-Instruct-v0.2 need a chat template or a different stop token in vLLM? I'm getting non-stop generation for each test.

|

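There is a slight difference in the chat template between versions, but I don't think it matters.

from openai import OpenAI

# Assumed endpoint: the vLLM OpenAI-compatible server published on port 8000 above.
client = OpenAI(base_url="http://localhost:8000/v1", api_key="not-needed")  # placeholder key

response = client.chat.completions.create(
    model="mistralai/Mistral-7B-Instruct-v0.1",
    messages=[
        {"role": "user", "content": "Hello!"}
    ],
    max_tokens=128,  # caps the length of the reply
)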

|

You're right. It is working as it should, just incredibly chatty! Holy smokes is that annoying!! 😂 The max_tokens make it tolerable.

docker command

LLM=mistralai/Mistral-7B-Instruct-v0.2

MODEL=mistralai/Mistral-7B-Instruct-v0.2

docker run -d \

--gpus all \

--shm-size=10.24gb \

-v /data/models:/root/.cache/huggingface \

-p 8000:8000 \

--env "HF_TOKEN=x" \

--restart unless-stopped \

--name vllm-openai \

vllm-openai \

--host 0.0.0.0 \

--model=$MODEL \

--served-model-name $LLM \

--dtype=float16 \

--enable-prefix-caching \

--gpu-memory-utilization 0.95 \

--disable-log-stats \

--worker-use-ray \

--tensor-parallel-size=4

|

|

Can you try a long prompt (2000+ tokens)? No need for batch processing or performance measurement, just send a same long prompt two times (w/o parallelism, just one after the other). In my case it causes a crash. And thank you for patience and testing. |
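The reproduction described here could be scripted roughly as follows. This is a sketch, not a confirmed repro: it assumes the server from the docker command above is reachable at `http://localhost:8000/v1`, that the `openai` Python package (v1 client) is installed, and that about 3000 repeated words is enough to exceed 2000 tokens. `build_long_prompt`, `send_twice`, and `make_sender` are hypothetical names.

```python
from __future__ import annotations

from typing import Callable


def build_long_prompt(words: int = 3000) -> str:
    """Build a deterministic long prompt; word count is a rough token proxy."""
    filler = " ".join(f"item{i}" for i in range(words))
    return f"Summarize the following list in one sentence: {filler}"


def send_twice(send: Callable[[str], str], prompt: str) -> list[str]:
    """Send the identical prompt two times, sequentially, with no parallelism."""
    return [send(prompt), send(prompt)]


def make_sender(base_url: str, model: str) -> Callable[[str], str]:
    """Create a sender backed by an OpenAI-compatible endpoint (assumed)."""
    from openai import OpenAI  # imported lazily so the helpers work without it

    client = OpenAI(base_url=base_url, api_key="not-needed")  # placeholder key

    def send(prompt: str) -> str:
        response = client.chat.completions.create(
            model=model,
            messages=[{"role": "user", "content": prompt}],
            max_tokens=32,  # keep replies short; only the prefill matters here
        )
        return response.choices[0].message.content or ""

    return send
```

For example, `send_twice(make_sender("http://localhost:8000/v1", "mistralai/Mistral-7B-Instruct-v0.2"), build_long_prompt())` sends the same prompt back to back; a failure on the second call would match the report.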

|

I accidentally got a stack trace from python, it's happening there: vllm/vllm/attention/ops/prefix_prefill.py Line 708 in 4ea1f96

Something wrong with this function: vllm/vllm/attention/ops/prefix_prefill.py Lines 10 to 11 in 4ea1f96

I'll look into this tomorrow and send a PR if I fix it (I have no experience with pytorch/triton, so this should be a good exercise for me). First of all, I will find the exact LoC that generates this intrinsic. Stacktrace |

|

Wow! Nice @sasha0552 - You may want to open a new Issue for this instead of us working it in this poor zombie PR. 😉 |

|

@jasonacox I've created an issue - #4438. |

|

What ended up happening with Pascal support, does anyone know? Is it still deemed not worthy? I'm just making that assumption given that it's not working in 0.4.3.

|

@elabz It is not yet merged, but hopefully with the PyPI decision, it can be merged soon. Until then, I have a Pascal enabled repo here which supports it: https://github.com/cduk/vllm-pascal |

[Hardware][Nvidia] This PR enables support for Pascal generation cards. This is tested as working with P100 and P40 cards.