Jingru Tan, Changbao Wang, Buyu Li, Quanquan Li, Wanli Ouyang, Changqing Yin, Junjie Yan

⚠️ We recommend using the EQLv2 repository, which is based on mmdetection. It also includes EQL and other algorithms, such as cRT (classifier re-training) and BAGS (Balanced Group Softmax).

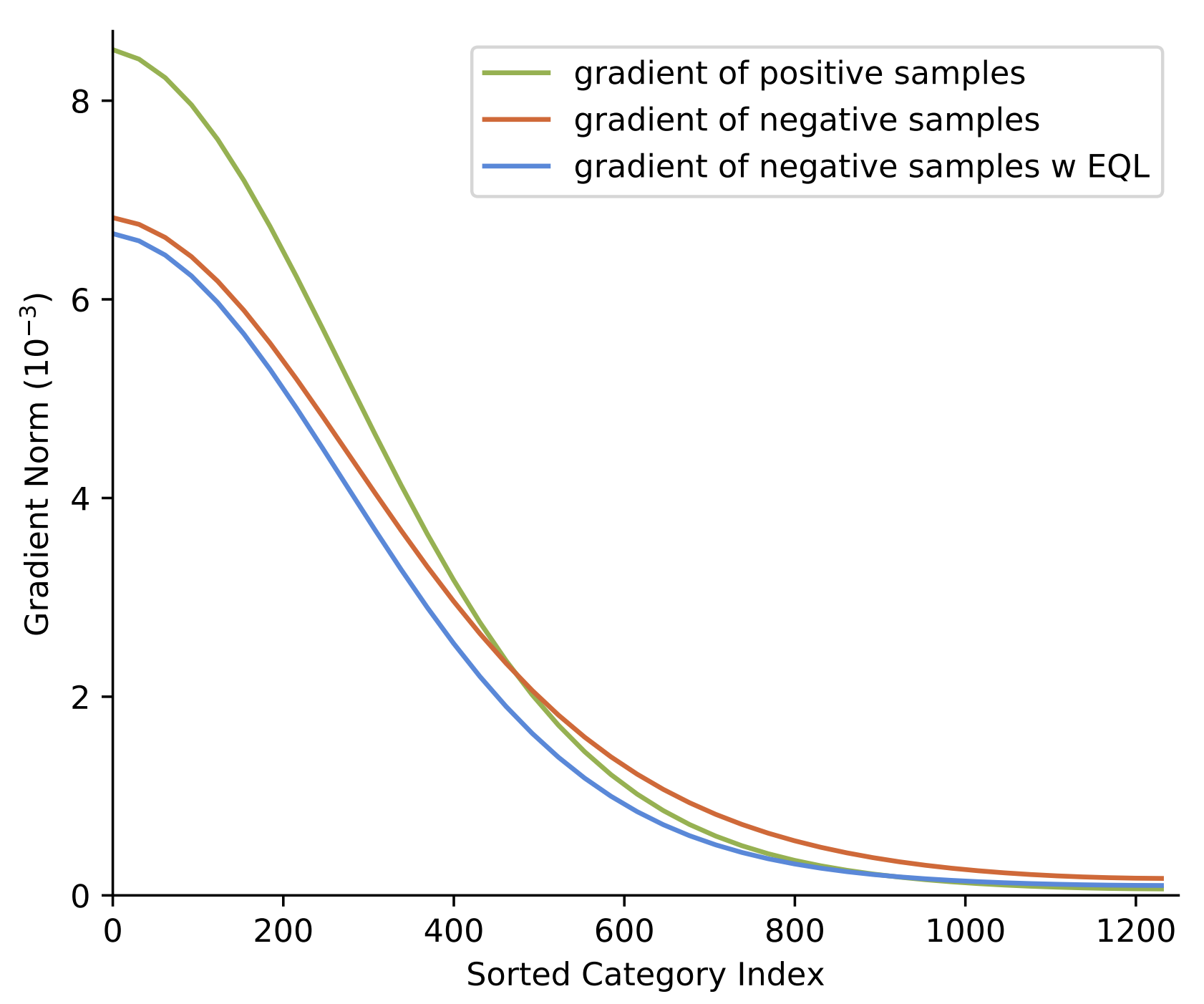

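This repository releases the code for Equalization Loss (EQL) in Detectron2. Under a long-tailed distribution, the positive samples of frequent categories also act as negative samples for rare categories, so rare categories are overwhelmed by discouraging gradients during network parameter updates. EQL protects the learning of rare categories from this disadvantage by ignoring those discouraging gradients for them.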
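As a rough illustration of the idea, here is a minimal sketch of a sigmoid-based equalization loss for a single region proposal, following our reading of the paper's formulation rather than this repository's code (which remains the reference implementation). Each category j gets a weight w_j = 1 - E(r) T_λ(f_j) (1 - y_j), where E(r) is 1 for a foreground proposal and 0 for background, f_j is the frequency of category j in the training set, T_λ(f_j) is 1 when f_j < λ, and y_j is the ground-truth label. The names `eql_loss` and `softplus`, the frequencies, and the threshold value in the example are hypothetical.

```python
import math
from typing import Sequence


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def eql_loss(logits: Sequence[float], labels: Sequence[int],
             freqs: Sequence[float], is_foreground: bool,
             lam: float) -> float:
    """Sigmoid-based equalization loss for one region proposal (sketch).

    logits:        per-category logits z_j (C entries, no background class)
    labels:        one-hot targets y_j (all zeros for a background proposal)
    freqs:         frequency f_j of each category in the training set
    is_foreground: E(r), True if the proposal is a foreground region
    lam:           frequency threshold lambda used by T_lambda
    """
    e_r = 1.0 if is_foreground else 0.0
    total = 0.0
    for z, y, f in zip(logits, labels, freqs):
        t = 1.0 if f < lam else 0.0   # T_lambda(f_j): 1 for rare categories
        w = 1.0 - e_r * t * (1 - y)   # w_j = 0 drops a rare category's negative term
        # -log(sigmoid(z)) = softplus(-z); -log(1 - sigmoid(z)) = softplus(z)
        bce = softplus(-z) if y == 1 else softplus(z)
        total += w * bce
    return total


if __name__ == "__main__":
    logits = [2.0, -1.0, 3.0]
    labels = [1, 0, 0]          # the proposal belongs to category 0
    freqs = [0.5, 0.1, 1e-4]    # made-up frequencies: category 2 is rare
    print(eql_loss(logits, labels, freqs, True, 1e-3))  # rare negative term ignored
    print(eql_loss(logits, labels, freqs, True, 0.0))   # lam=0: plain sigmoid BCE
```

Because E(r) is 0 for background proposals, every weight stays at 1 there, so background proposals still suppress the scores of all categories, including rare ones.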

Install Detectron2 by following INSTALL.md. You are ready to go!

Follow the instructions in README.md to set up the LVIS dataset.

To train a model with 8 GPUs, run:

```bash
cd /path/to/detectron2/projects/EQL
python train_net.py --config-file configs/eql_mask_rcnn_R_50_FPN_1x.yaml --num-gpus 8
```

Model evaluation can be done similarly:

```bash
cd /path/to/detectron2/projects/EQL
python train_net.py --config-file configs/eql_mask_rcnn_R_50_FPN_1x.yaml --eval-only MODEL.WEIGHTS /path/to/model_checkpoint
```

| Backbone | Method | AP | AP.r | AP.c | AP.f | AP.bbox | download |
|---|---|---|---|---|---|---|---|
| R50-FPN | MaskRCNN | 21.2 | 3.2 | 21.1 | 28.7 | 20.8 | model \| metrics |
| R50-FPN | MaskRCNN-EQL | 24.0 | 9.4 | 25.2 | 28.4 | 23.6 | model \| metrics |
| R50-FPN | MaskRCNN-EQL-Resampling | 26.1 | 17.2 | 27.3 | 28.2 | 25.4 | model \| metrics |
| R101-FPN | MaskRCNN | 22.8 | 4.3 | 22.7 | 30.2 | 22.3 | model \| metrics |
| R101-FPN | MaskRCNN-EQL | 25.9 | 10.0 | 27.9 | 29.8 | 25.9 | model \| metrics |
| R101-FPN | MaskRCNN-EQL-Resampling | 27.4 | 17.3 | 29.0 | 29.4 | 27.1 | model \| metrics |

The AP reported in this repository is higher than in the original paper because all of these models use:

- Scale jitter

- Class-specific mask head

- Better ImageNet-pretrained models (Caffe rather than PyTorch)
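To make "scale jitter" concrete, here is a minimal pure-Python sketch of multi-scale shortest-edge resizing, modeled loosely on the way Detectron2 can sample a training short-edge length from a set of choices. The function `jittered_size`, the candidate edge lengths, and the `max_size` cap in the example are hypothetical and are not the values used by this repository's configs.

```python
import random
from typing import Sequence, Tuple


def jittered_size(h: int, w: int, short_edges: Sequence[int],
                  max_size: int, rng: random.Random) -> Tuple[int, int]:
    """Return a randomly rescaled (height, width) for one training image.

    A target length for the shorter side is drawn from `short_edges`; if the
    longer side would then exceed `max_size`, the scale is reduced so that the
    longer side equals `max_size` instead. Aspect ratio is preserved.
    """
    short = rng.choice(list(short_edges))  # random scale per image
    scale = short / min(h, w)
    if max(h, w) * scale > max_size:       # cap the longer side
        scale = max_size / max(h, w)
    return int(round(h * scale)), int(round(w * scale))


if __name__ == "__main__":
    rng = random.Random(0)
    edges = (640, 672, 704, 736, 768, 800)  # illustrative values only
    for _ in range(3):
        print(jittered_size(480, 640, edges, 1333, rng))
```

Drawing a new scale for every training image exposes the model to objects at more sizes than a single fixed resize would.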

Note that the final results of these configs have large variance across different runs.

If you use EQL in your research, please cite it with the following BibTeX entry.

```bibtex
@InProceedings{tan2020eql,
  title={Equalization Loss for Long-Tailed Object Recognition},
  author={Jingru Tan and Changbao Wang and Buyu Li and Quanquan Li and
          Wanli Ouyang and Changqing Yin and Junjie Yan},
  journal={ArXiv:2003.05176},
  year={2020}
}
```