I am aiming to implement several semantic segmentation models from scratch, each with different pretrained backbones.

Paper(DeepLabV3plus): Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation

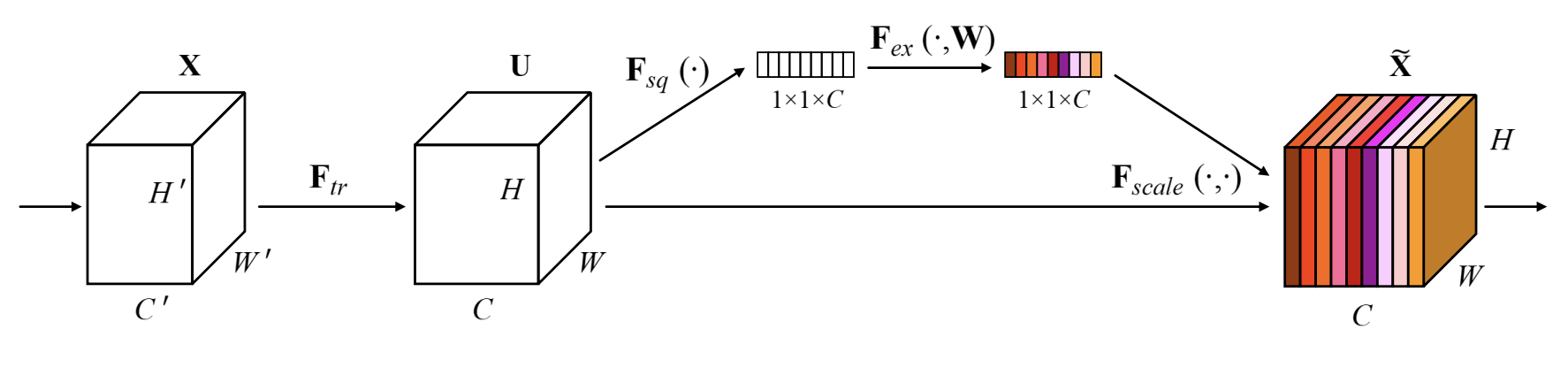

Paper(SqueezeAndExcitation): https://arxiv.org/pdf/1709.01507.pdf

- DeepLabV3plus with EfficientNet as a backbone

- DeepLabV3plus_SqueezeExcitation with EfficientNet as a backbone

- DeepLabV3plus with ResNet as a backbone

- DeepLabV3plus with DenseNet as a backbone

- DeepLabV3plus with SqueezeNet as a backbone

- DeepLabV3plus with VGG16 as a backbone

- DeepLabV3plus with ResNext101 as a backbone. This requires cloning qubvel's classification_models repository, since the pretrained model used here comes from it.

Paper link: GCN

Paper link: Pyramid Scene Parsing Network

This model tests whether DeepLabV3plus improves when combined with an added PSPNet module for deeper semantic feature extraction and Squeeze-and-Excitation modules for channel-wise attention. I will share the results if this works out well.
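To make the channel-wise attention idea concrete, here is a minimal NumPy sketch of the forward pass of a Squeeze-and-Excitation gate as described in the paper. It is not the code used in this repository: the function name `squeeze_excite`, the random weights, and the sizes (including the reduction ratio `r = 4`) are assumptions chosen only for illustration.

```python
import numpy as np

def squeeze_excite(x, w1, b1, w2, b2):
    """Apply a Squeeze-and-Excitation gate to a feature map x of shape (H, W, C)."""
    # Squeeze: global average pooling collapses each channel to one scalar.
    z = x.mean(axis=(0, 1))                      # shape (C,)
    # Excitation: bottleneck MLP (C -> C // r -> C), ReLU then sigmoid.
    h = np.maximum(z @ w1 + b1, 0.0)             # shape (C // r,)
    s = 1.0 / (1.0 + np.exp(-(h @ w2 + b2)))     # shape (C,), values in (0, 1)
    # Scale: reweight every channel of the input by its gate value.
    return x * s, s

# Hypothetical sizes and random (untrained) weights, for illustration only.
rng = np.random.default_rng(0)
H, W, C, r = 8, 8, 16, 4                         # r is the reduction ratio
x = rng.standard_normal((H, W, C))
w1 = rng.standard_normal((C, C // r)) * 0.1
b1 = np.zeros(C // r)
w2 = rng.standard_normal((C // r, C)) * 0.1
b2 = np.zeros(C)

y, gates = squeeze_excite(x, w1, b1, w2, b2)
print(y.shape, gates.shape)
```

The output keeps the input shape, so a gate like this can be placed after a convolutional block without changing the rest of the network. In a trained model the two weight matrices are learned, and the gates then emphasise informative channels and suppress less useful ones.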

- Unet with MobileNetV2 as a backbone

- Unet with EfficientNet as a backbone (coming soon)

- Unet with ResNet50 as a backbone (coming soon)

Note: For Unet, the segmentation_models package can be used directly (GitHub: http://github.com/qubvel/segmentation_models).