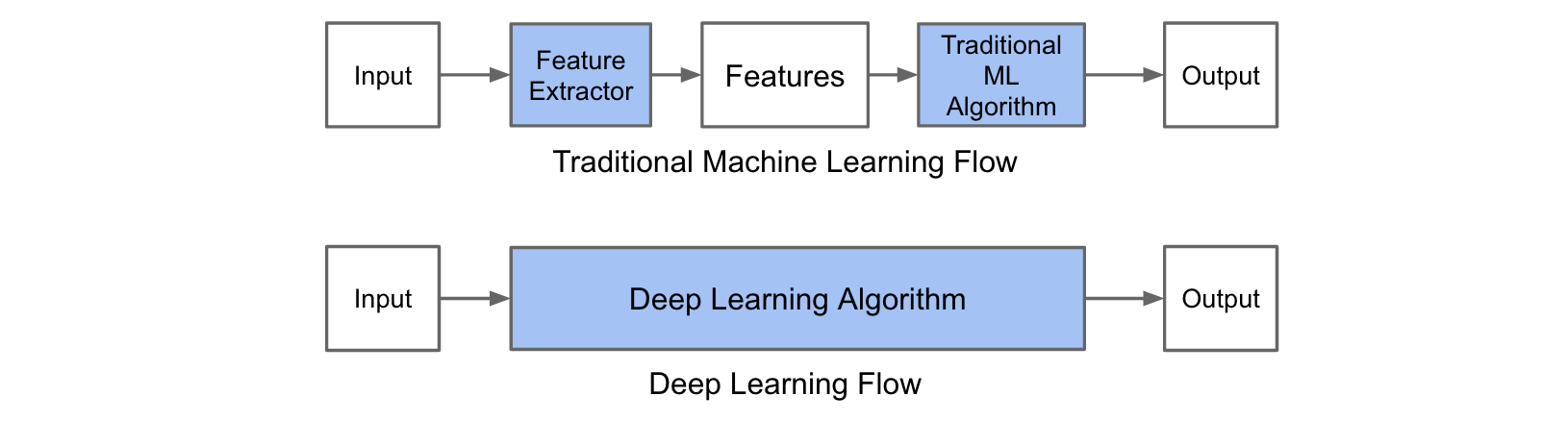

The main difference between traditional machine learning and deep learning algorithms lies in feature engineering. In traditional machine learning, we need to hand-craft the features. By contrast, deep learning algorithms learn the features automatically from the data. Feature engineering is difficult and time-consuming, and it requires domain expertise. The promise of deep learning is more accurate models than traditional machine learning, with little or no feature engineering.
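To make "hand-crafting features" concrete, here is a minimal sketch of the kind of feature a traditional pipeline might compute for an image: a normalized per-channel color histogram. The function name `color_histogram` and the choice of 8 bins are illustrative assumptions, not taken from any particular method.

```python
import numpy as np


def color_histogram(image: np.ndarray, bins: int = 8) -> np.ndarray:
    """Hand-crafted feature: one normalized intensity histogram per color channel.

    `image` is assumed to be an H x W x C array of uint8 pixel values (0..255).
    Returns a vector of length C * bins.
    """
    features = []
    for channel in range(image.shape[-1]):
        # Count how many pixels of this channel fall into each intensity bin.
        hist, _ = np.histogram(image[..., channel], bins=bins, range=(0, 256))
        # Normalize so images of different sizes give comparable features.
        features.append(hist / hist.sum())
    return np.concatenate(features)
```

Every choice here, such as using color at all and how many bins to use, is a human guess about what separates the classes. The resulting vectors would then be handed to a classic classifier (an SVM, for example). A deep network instead learns its own filters directly from the labeled pixels.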

The paragraph above should clear up the distinction between ML and DL. The next question is how to implement deep learning in practice. Two popular options are Caffe, a deep learning framework, and Keras, a high-level API that simplifies working with TensorFlow.
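As a rough idea of what implementing a model in Keras looks like, here is a small CNN for a two-class image problem, written against the `tf.keras` API that ships with TensorFlow 2. The function name, the 150×150 input size, the layer widths and the Adam optimizer are illustrative assumptions, not tuned values.

```python
import tensorflow as tf
from tensorflow.keras import layers


def build_small_cnn(input_shape=(150, 150, 3)) -> tf.keras.Model:
    """Hypothetical small CNN for binary image classification (a sketch, not a tuned model)."""
    model = tf.keras.Sequential([
        layers.Input(shape=input_shape),
        layers.Rescaling(1.0 / 255),              # map pixel values from [0, 255] to [0, 1]
        layers.Conv2D(32, 3, activation="relu"),  # filters are learned, not hand-crafted
        layers.MaxPooling2D(),
        layers.Conv2D(64, 3, activation="relu"),
        layers.MaxPooling2D(),
        layers.Conv2D(128, 3, activation="relu"),
        layers.MaxPooling2D(),
        layers.Flatten(),
        layers.Dense(128, activation="relu"),
        layers.Dense(1, activation="sigmoid"),    # probability of the positive class
    ])
    model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
    return model
```

Training would then be a call such as `model.fit(train_ds, validation_data=val_ds, epochs=...)`, where `train_ds` and `val_ds` are whatever image pipeline you build for your data.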

A classic example of a deep learning application is using a convolutional neural network (CNN) to tell dogs from cats in a given picture. It can be implemented with either Caffe or Keras, and the training data is a Kaggle dataset of 25,000 labeled images of cats and dogs.
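In the Kaggle "Dogs vs. Cats" training set, the label is encoded in each filename (for example `cat.0.jpg` or `dog.12499.jpg`) rather than stored in a separate file. Assuming that naming convention, a minimal sketch for turning filenames into labels could look like this. The 0/1 encoding and the helper names are hypothetical choices.

```python
from pathlib import Path

# Hypothetical label encoding for a binary classifier: cat -> 0, dog -> 1.
LABELS = {"cat": 0, "dog": 1}


def label_from_filename(path: str) -> int:
    """Infer the label from a filename such as 'cat.123.jpg' or 'dog.456.jpg'.

    Assumes the class name is the text before the first '.' in the filename.
    """
    prefix = Path(path).name.split(".", 1)[0].lower()
    if prefix not in LABELS:
        raise ValueError(f"cannot infer a label from {path!r}")
    return LABELS[prefix]


def labeled_files(directory: str) -> list[tuple[str, int]]:
    """Pair every .jpg file in `directory` with its inferred label, in sorted order."""
    return [
        (str(p), label_from_filename(p.name))
        for p in sorted(Path(directory).glob("*.jpg"))
    ]
```

Raising on an unknown prefix, rather than silently skipping the file, makes stray files in the training folder easy to spot.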

Convolutional neural networks require large datasets and a lot of computation time to train; some networks take up to two or three weeks to train across multiple GPUs. Transfer learning is a useful technique that addresses both problems. Instead of training the network from scratch, transfer learning takes a model already trained on a different dataset and adapts it to the problem we are trying to solve.
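One common form of transfer learning keeps a pretrained convolutional base frozen and trains only a new classification head on the new data. The sketch below assumes Keras with MobileNetV2 pretrained on ImageNet as the base. That model choice, the 160×160 input and the function name are assumptions for illustration; any pretrained backbone in `tf.keras.applications` could play the same role.

```python
import tensorflow as tf
from tensorflow.keras import layers


def build_transfer_model(input_shape=(160, 160, 3), weights="imagenet") -> tf.keras.Model:
    """Hypothetical cat-vs-dog classifier built on a frozen, pretrained MobileNetV2 base."""
    # Pretrained convolutional base without its original ImageNet classifier head.
    # weights="imagenet" downloads the pretrained weights on first use.
    base = tf.keras.applications.MobileNetV2(
        input_shape=input_shape, include_top=False, weights=weights
    )
    base.trainable = False  # freeze: reuse the features learned on the other dataset

    inputs = tf.keras.Input(shape=input_shape)
    x = layers.Rescaling(1.0 / 127.5, offset=-1.0)(inputs)  # MobileNetV2 expects inputs in [-1, 1]
    x = base(x, training=False)  # keep BatchNorm layers in inference mode
    x = layers.GlobalAveragePooling2D()(x)
    outputs = layers.Dense(1, activation="sigmoid")(x)  # new head, the only trainable part

    model = tf.keras.Model(inputs, outputs)
    model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
    return model
```

During `fit`, only the Dense head's weights are updated, so training is far cheaper than training the whole network from scratch. A common follow-up is fine-tuning: unfreeze some of the top layers of the base and continue training with a small learning rate.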