
Fix merge conflicts [skip ci] #3892


Commits on May 19, 2021


Remove Base.enable_rmm_pool method as it is no longer needed (#3875)

This should resolve the confusion caused in the issue rapidsai/raft#228. Tagging @dantegd for review.

Authors:
- Thejaswi. N. S (https://github.com/teju85)

Approvers:
- Dante Gama Dessavre (https://github.com/dantegd)

URL: #3875

Commit SHA: 3be1545

Commits on May 20, 2021


Deterministic UMAP with floating point rounding. (#3848)

Use floating-point rounding to make UMAP optimization deterministic. This is a breaking change because the batch size parameter is removed.

- Add a procedure for rounding the gradient updates.
- Add a buffer for gradient updates.
- Add an internal parameter `deterministic`, which should be set to `true` when `random_state` is set.

The test file is removed due to #3849.

Authors:
- Jiaming Yuan (https://github.com/trivialfis)

Approvers:
- Corey J. Nolet (https://github.com/cjnolet)

URL: #3848
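The commit message does not describe the rounding procedure itself. As a rough illustration of why rounding gradient updates can make parallel accumulation deterministic, here is a plain-Python sketch of the general pre-rounding idea: every value is snapped to a common power-of-two grid chosen from the largest magnitude and the number of terms, so every partial sum is exactly representable and the summation order no longer matters. This is an assumption about the technique, not cuML's CUDA implementation (which works on GPU buffers in float32); `rounding_step`, `round_to_grid`, and `order_independent_sum` are hypothetical names, and underflow/overflow edge cases are ignored.

```python
import math
import random
from typing import List


def rounding_step(values: List[float]) -> float:
    """Pick a power-of-two grid 2**k (hypothetical helper).

    With n * max_abs < 2**e and k = e - 52, every partial sum of the rounded
    values is an integer multiple of 2**k with magnitude below 2**(k + 53),
    so it is exactly representable as a float64.
    """
    max_abs = max((abs(v) for v in values), default=0.0)
    if max_abs == 0.0:
        return 1.0
    _, e = math.frexp(len(values) * max_abs)  # n * max_abs < 2**e
    return math.ldexp(1.0, e - 52)


def round_to_grid(values: List[float], step: float) -> List[float]:
    # v / step is exact (division by a power of two); round() snaps to an integer grid point
    return [round(v / step) * step for v in values]


def order_independent_sum(values: List[float]) -> float:
    step = rounding_step(values)
    total = 0.0
    for v in round_to_grid(values, step):
        total += v  # every addition is exact, so the visiting order cannot change the result
    return total


if __name__ == "__main__":
    random.seed(0)
    grads = [random.uniform(-1, 1) * 10 ** random.randint(-8, 8) for _ in range(1000)]
    shuffled = grads[:]
    random.shuffle(shuffled)
    print(order_independent_sum(grads) == order_independent_sum(shuffled))
```

The trade-off is a small, bounded loss of precision (at most half a grid step per term) in exchange for bit-identical results regardless of how threads interleave their updates.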

Commit SHA: 99a80c8

Update `CHANGELOG.md` links for calver (#3883)

This PR updates the `0.20` references in `CHANGELOG.md` to be `21.06`.

Authors:
- AJ Schmidt (https://github.com/ajschmidt8)

Approvers:
- Dillon Cullinan (https://github.com/dillon-cullinan)

URL: #3883

Commit SHA: 0b33f9d

Make sure `__init__` is called in graph callback. (#3881)

I made this mistake and got a segmentation fault. A `ValueError` might be nicer.

Authors:
- Jiaming Yuan (https://github.com/trivialfis)

Approvers:
- Corey J. Nolet (https://github.com/cjnolet)

URL: #3881
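The failure mode described above is a subclass that overrides `__init__` without calling the base initializer, leaving the base class's state unset. A minimal pure-Python sketch of the guard: the base `__init__` records that it ran, and the entry point checks that record and raises `ValueError` instead of touching uninitialized state. cuML's real callback base is a Cython class wrapping native state; `GraphCallbackBase`, `invoke`, and the `on_epoch_end` hook shown here are hypothetical stand-ins.

```python
from typing import Sequence


class GraphCallbackBase:
    """Hypothetical stand-in for a callback base class that owns native state."""

    def __init__(self) -> None:
        # In a Cython class, this is where a native handle would be set up.
        self._initialized = True

    def _check_initialized(self) -> None:
        # getattr with a default avoids AttributeError when __init__ never ran
        if not getattr(self, "_initialized", False):
            raise ValueError(
                f"{type(self).__name__}.__init__ must call super().__init__()"
            )

    def on_epoch_end(self, embeddings: Sequence[float]) -> None:  # hypothetical hook
        raise NotImplementedError

    def invoke(self, embeddings: Sequence[float]) -> None:
        """Entry point a (hypothetical) optimizer would call once per epoch."""
        self._check_initialized()  # fail loudly instead of using unset state
        self.on_epoch_end(embeddings)


class GoodCallback(GraphCallbackBase):
    def __init__(self) -> None:
        super().__init__()
        self.calls = 0

    def on_epoch_end(self, embeddings: Sequence[float]) -> None:
        self.calls += 1


class ForgetfulCallback(GraphCallbackBase):
    def __init__(self) -> None:
        self.calls = 0  # forgot super().__init__()

    def on_epoch_end(self, embeddings: Sequence[float]) -> None:
        self.calls += 1
```

Calling `ForgetfulCallback().invoke([0.0])` raises a `ValueError` naming the offending class, while `GoodCallback` runs normally.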

Commit SHA: b7a634a

AgglomerativeClustering support single cluster and ignore only zero distances from self-loops (#3824)

Closes #3801
Closes #3802

Corresponding RAFT PR: rapidsai/raft#217

Authors:
- Corey J. Nolet (https://github.com/cjnolet)

Approvers:
- Dante Gama Dessavre (https://github.com/dantegd)

URL: #3824
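To illustrate why only self-loops should be discarded, consider duplicate points: their pairwise distance is zero, and dropping every zero-distance edge would disconnect them in the linkage graph. The plain-Python sketch below builds single-linkage clusters Kruskal-style from all pairwise distances, skipping only `i == j` edges, and also handles `n_clusters == 1`. It is an assumption-labeled illustration, not cuML's GPU implementation (which goes through RAFT); `single_linkage_labels` is a hypothetical name.

```python
import math
from typing import Dict, List, Sequence, Tuple


def single_linkage_labels(points: Sequence[Sequence[float]], n_clusters: int) -> List[int]:
    """Cut a single-linkage hierarchy into n_clusters flat clusters (hypothetical helper)."""
    n = len(points)
    if not 1 <= n_clusters <= n:
        raise ValueError("n_clusters must be between 1 and the number of points")

    edges: List[Tuple[float, int, int]] = []
    for i in range(n):
        for j in range(n):
            if i == j:
                continue  # drop self-loops only; zero-distance edges between duplicates are kept
            edges.append((math.dist(points[i], points[j]), i, j))
    edges.sort()

    parent = list(range(n))

    def find(x: int) -> int:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    components = n
    for _, i, j in edges:
        if components == n_clusters:
            break  # with n_clusters == 1 this merges everything into one component
        ri, rj = find(i), find(j)
        if ri != rj:
            parent[ri] = rj
            components -= 1

    # Relabel roots as 0, 1, 2, ... in order of first appearance
    labels: Dict[int, int] = {}
    return [labels.setdefault(find(i), len(labels)) for i in range(n)]
```

For `[[0, 0], [0, 0], [5, 5]]` with two clusters, the duplicates are merged first through their zero-distance edge and the distant point stays on its own.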

Commit SHA: 05124c4

Commits on May 21, 2021

-

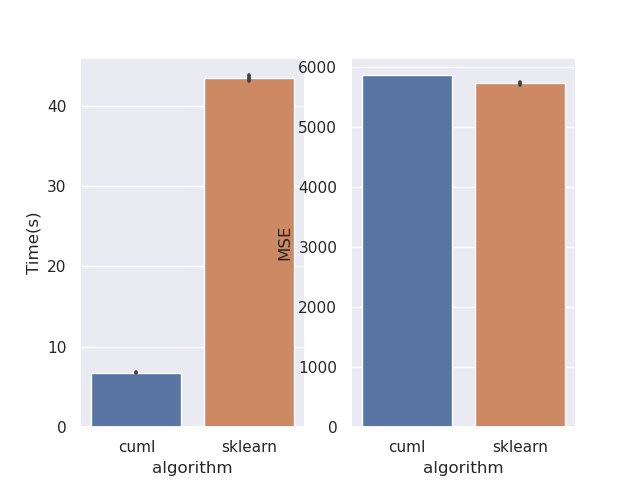

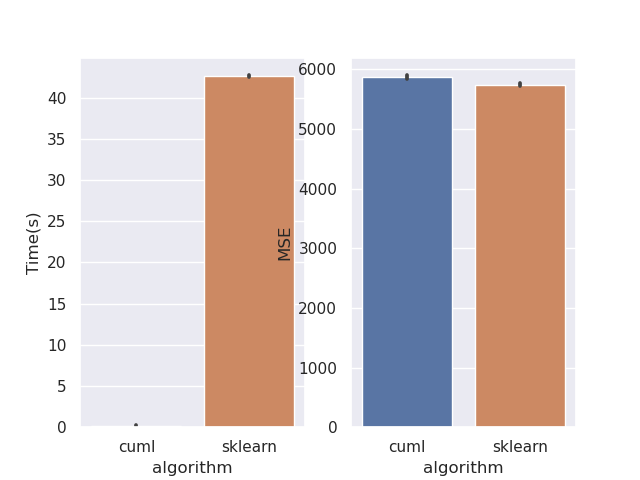

Fix RF regression performance (#3845)

This PR rewrites the mean squared error objective. Mean squared error is much easier to compute when factored mathematically into a slightly different form. This should bring regression performance in line with classification.

I've also removed the MAE objective, as it's not correct. This can be seen from the fact that leaf predictions with MAE use the mean, whereas the correct minimiser is the median. See also scikit-learn's implementation, where streaming median calculations are required: https://github.com/scikit-learn/scikit-learn/blob/de1262c35e2aa4ee062d050281ee576ce9e35c94/sklearn/tree/_criterion.pyx#L976. Implementing this correctly for GPU would be very challenging.

Performance before and after: [plots not preserved]

Script:

```python
from cuml import RandomForestRegressor as cuRF
from sklearn.ensemble import RandomForestRegressor as sklRF
from sklearn.datasets import make_regression
from sklearn.metrics import mean_squared_error
import numpy as np
import pandas as pd
import matplotlib

matplotlib.use("Agg")  # select a non-interactive backend before importing pyplot
import matplotlib.pyplot as plt
import seaborn as sns
import time

sns.set()

X, y = make_regression(n_samples=100000, random_state=0)
X = X.astype(np.float32)
y = y.astype(np.float32)
rs = np.random.RandomState(92)

rows = []  # one record per (algorithm, repeat)
d = 10
n_repeats = 5
bootstrap = False
max_samples = 1.0
max_features = 0.5
n_estimators = 10
n_bins = min(X.shape[0], 128)

for _ in range(n_repeats):
    clf = sklRF(
        n_estimators=n_estimators,
        max_depth=d,
        random_state=rs,
        max_features=max_features,
        bootstrap=bootstrap,
        max_samples=max_samples if max_samples < 1.0 else None,
    )
    start = time.perf_counter()
    clf.fit(X, y)
    skl_time = time.perf_counter() - start
    pred = clf.predict(X)

    cu_clf = cuRF(
        n_estimators=n_estimators,
        max_depth=d,
        random_state=rs.randint(0, 1 << 32),
        n_bins=n_bins,
        max_features=max_features,
        bootstrap=bootstrap,
        max_samples=max_samples,
        use_experimental_backend=True,
    )
    start = time.perf_counter()
    cu_clf.fit(X, y)
    cu_time = time.perf_counter() - start
    cu_pred = cu_clf.predict(X, predict_model="CPU")

    rows.append({"algorithm": "cuml", "Time(s)": cu_time, "MSE": mean_squared_error(y, cu_pred)})
    rows.append({"algorithm": "sklearn", "Time(s)": skl_time, "MSE": mean_squared_error(y, pred)})

# DataFrame.append was removed in pandas 2.0, so build the frame from the collected rows
df = pd.DataFrame(rows, columns=["algorithm", "Time(s)", "MSE"])
print(df)
fig, ax = plt.subplots(1, 2)
sns.barplot(data=df, x="algorithm", y="Time(s)", ax=ax[0])
sns.barplot(data=df, x="algorithm", y="MSE", ax=ax[1])
plt.savefig("rf_regression_perf_fix.png")
```

Authors:
- Rory Mitchell (https://github.com/RAMitchell)

Approvers:
- Philip Hyunsu Cho (https://github.com/hcho3)
- Thejaswi. N. S (https://github.com/teju85)
- John Zedlewski (https://github.com/JohnZed)

URL: #3845
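The PR does not spell out the factored form of the MSE objective. The sketch below assumes the standard identity Σ(y − ȳ)² = Σy² − (Σy)²/n, under which the Σy² terms cancel and the SSE reduction of a split becomes S_L²/n_L + S_R²/n_R − S²/n, where S, S_L, S_R are the sums of y in the parent, left, and right nodes. That lets one scan over sorted feature values with a running prefix sum. It is a single-feature, CPU-only illustration; `sse_direct` and `best_split_factored` are hypothetical names, not cuML functions.

```python
import math
from typing import List, Sequence, Tuple


def sse_direct(ys: Sequence[float]) -> float:
    """Sum of squared errors around the mean, computed the naive way."""
    if not ys:
        return 0.0
    mean = sum(ys) / len(ys)
    return sum((y - mean) ** 2 for y in ys)


def best_split_factored(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Return (threshold, gain) maximising the SSE reduction for one feature.

    Uses SSE = sum(y^2) - (sum y)^2 / n, so the gain only needs the running
    left sum, the total sum, and the child counts.
    """
    n = len(ys)
    order: List[int] = sorted(range(n), key=lambda i: xs[i])
    total_sum = sum(ys)
    parent_term = total_sum ** 2 / n
    best = (math.nan, -math.inf)
    left_sum = 0.0
    for k in range(1, n):  # k = number of samples in the left child
        left_sum += ys[order[k - 1]]
        if xs[order[k - 1]] == xs[order[k]]:
            continue  # cannot split between equal feature values
        right_sum = total_sum - left_sum
        gain = left_sum ** 2 / k + right_sum ** 2 / (n - k) - parent_term
        if gain > best[1]:
            best = ((xs[order[k - 1]] + xs[order[k]]) / 2, gain)
    return best
```

For `xs = [1, 2, 3, 4]` and `ys = [1, 1, 5, 5]`, the best threshold is 2.5 and the gain matches the direct computation `sse_direct(ys) - sse_direct([1, 1]) - sse_direct([5, 5])`.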

Commit SHA: cb6ef52

Commits on May 22, 2021


Fix for MNMG test_rf_classification_dask_fil_predict_proba (#3831)

See #3820

Authors:
- Micka (https://github.com/lowener)

Approvers:
- Victor Lafargue (https://github.com/viclafargue)
- Dante Gama Dessavre (https://github.com/dantegd)

URL: #3831

Commit SHA: ea662e8

Fix MNMG test test_rf_regression_dask_fil (#3830)

See #3820

Authors:
- Philip Hyunsu Cho (https://github.com/hcho3)

Approvers:
- Victor Lafargue (https://github.com/viclafargue)
- Dante Gama Dessavre (https://github.com/dantegd)

URL: #3830

Commit SHA: 3e89f04

Commits on May 24, 2021

Commit SHA: b52db0b