
[subsystem-benchmarks] Save results to json #3829


@@ -82,6 +82,25 @@ impl BenchmarkUsage {
            _ => None,
        }
    }

    pub fn to_json(&self) -> color_eyre::eyre::Result<String> {


This is more like `to_chart_items`, because you also have Serialize/Deserialize derived for this structure, which can already produce JSON.

@@ -151,3 +170,10 @@ impl ResourceUsage {
}

type ResourceUsageCheck<'a> = (&'a str, f64, f64);

#[derive(Debug, Serialize)]
pub struct ChartItem {


Is this a format our other tooling is expecting?


Yes, added to the PR description.


What is missing is documentation about this charting feature and how to use it.

Also, would be great to share some screenshots of it in action 😁

Co-authored-by: ordian <[email protected]>

Added PR description.

The CI pipeline was cancelled due to the failure of one of the required jobs.

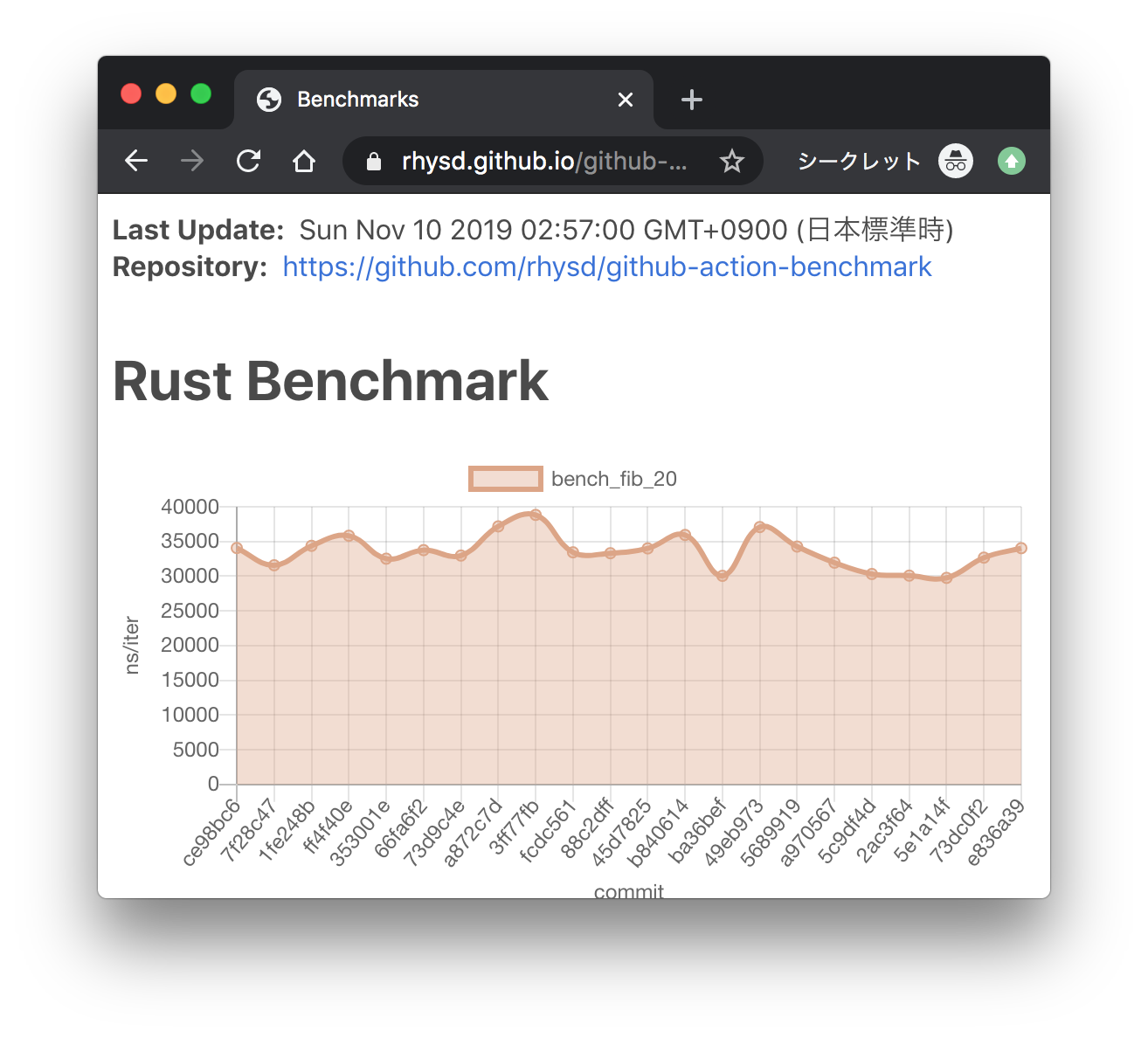

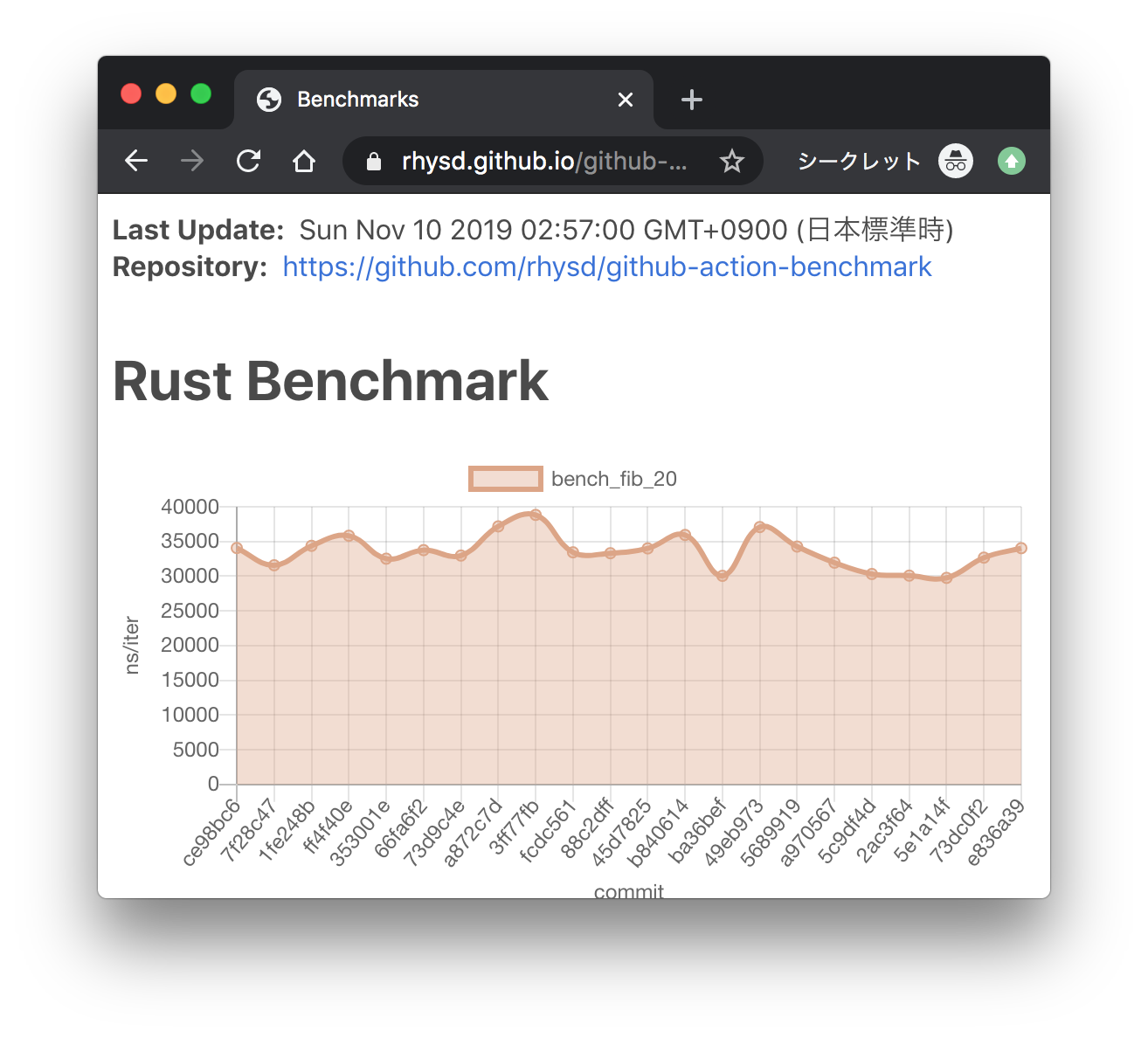

Here we add the ability to save subsystem benchmark results in JSON format to display them as graphs.

To draw graphs, the CI team will use [github-action-benchmark](https://github.com/benchmark-action/github-action-benchmark). Since we are using custom benchmarks, we need to prepare [a specific data type](https://github.com/benchmark-action/github-action-benchmark?tab=readme-ov-file#examples):

```
[
  {
    "name": "CPU Load",
    "unit": "Percent",
    "value": 50
  }
]
```

Then we'll get graphs like this:

[A live page with graphs](https://benchmark-action.github.io/github-action-benchmark/dev/bench/)

Co-authored-by: ordian <[email protected]>
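For readers who want to see how a record of that shape can be produced, here is a minimal std-only sketch. It is not the code from this PR: the PR derives `Serialize` on `ChartItem` and exposes `to_json`, while the field types, the `escape_json` helper and the `chart_items_to_json` function below are hypothetical names chosen for illustration, and the JSON is built by hand only to keep the example dependency-free.

```rust
/// One data point in the shape shown above: name, unit, value.
/// The field types are assumptions; the diff only shows the struct name.
#[derive(Debug, Clone, PartialEq)]
pub struct ChartItem {
    pub name: String,
    pub unit: String,
    pub value: f64,
}

/// Escapes a string so it can sit inside a JSON string literal.
fn escape_json(s: &str) -> String {
    let mut out = String::with_capacity(s.len());
    for c in s.chars() {
        match c {
            '"' => out.push_str("\\\""),
            '\\' => out.push_str("\\\\"),
            '\n' => out.push_str("\\n"),
            '\r' => out.push_str("\\r"),
            '\t' => out.push_str("\\t"),
            // Remaining control characters use the \uXXXX form.
            c if (c as u32) < 0x20 => out.push_str(&format!("\\u{:04x}", c as u32)),
            c => out.push(c),
        }
    }
    out
}

/// Renders the items as a compact JSON array.
/// Returns None if any value is NaN or infinite, because JSON
/// has no representation for those numbers.
pub fn chart_items_to_json(items: &[ChartItem]) -> Option<String> {
    let mut parts = Vec::with_capacity(items.len());
    for item in items {
        if !item.value.is_finite() {
            return None;
        }
        parts.push(format!(
            "{{\"name\":\"{}\",\"unit\":\"{}\",\"value\":{}}}",
            escape_json(&item.name),
            escape_json(&item.unit),
            item.value
        ));
    }
    Some(format!("[{}]", parts.join(",")))
}
```

Calling `chart_items_to_json` with a single "CPU Load" item yields the same record as the example above, just without whitespace. In a crate that already depends on serde, deriving `Serialize` and calling `serde_json::to_string` is likely the simpler route, which seems to be what the `#[derive(Debug, Serialize)]` in the diff is for.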
