OpenTelemetry Proposal: Introduce semantic conventions for CI/CD observability #223

Initial OTEP definition for CI/CD Observability

some typos and formatting

…P.md per pull request id open-telemetry#223

What I'm missing from this proposal is the current state of other CI/CD integrations that already support OTEL.

| - Which receivers are needed beyond OTLP to support the use cases and workflows? | ||

| - Which exporters are needed to support common backends? | ||

| - Which processors are needed to support the defined workflows? | ||

| - How the trace context should be propagated? | |

Some tools already implement basic context propagation, such as the mentioned Jenkins plugin or OTEL-CLI, which use environment variables for that use case.

I don't have an exhaustive list of tool integrations, and I'm glad to collect one with other contributors here.

However, I think the path here is analyzing the needed semantic conventions.

In this context, I should flag a subsequent PR opened to propose semantic conventions for deployments:

open-telemetry/opentelemetry-specification#3169

@thisthat how do you see the alignment of these proposals?

Sorry for the late answer @horovits

I'd like to align the two proposals 👍 My PR focuses only on which attributes we should emit on a trace/log that describes a CI/CD pipeline. I think as part of this OTEP we should address @secustor's point and try to agree on how the trace context can be propagated. This way, different tools can be used together, each contributing spans to the trace, so there won't be blind spots.

@horovits I agree that shared semantics will help. For a tool to propagate context, it needs to know where to look for it in any incoming trigger or data/policy that is used to decide to perform an action. It also needs to know how to enrich the context and where to send it to propagate it further. Shared semantics definitely help here, which is very much aligned with the mission of CDEvents.

CDEvents has a simple model for deployment-related events today - it would be great to have shared semantics here as well. /cc @AloisReitbauer I think this would be of interest to the App Delivery Tag as well.

@thschue FYI ^^

I would like to retrigger this discussion as I'm facing some decisions which will have an effect on how we are tackling things.

I see 3 basic ways we should support to propagate context.

HTTP headers

Basically what is already in place: the W3C Trace Context standard.

The use case here would be systems that get triggered by a webhook, e.g. VCS to CI system.

Environment variables

Here I'm imagining one process handing over context to another, e.g. a pipeline run handing over context to a tool used inside the run.

Other

Other ways are possible too, though I would add them to the specification as optional, e.g. CLI parameters or files containing the necessary information.
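To make the environment-variable option concrete, here is a minimal sketch of how a pipeline run could hand its trace context to a child tool. It assumes the context is serialized in the W3C `traceparent` format (version `00` only) and stored under an environment variable named `TRACEPARENT`; that variable name and the helper names (`TraceContext`, `inject_env`, `extract_env`) are assumptions for illustration, not something this OTEP defines.

```python
import os
import re
from typing import Dict, Mapping, NamedTuple, Optional

# Assumed variable name; the OTEP discussion does not fix one.
ENV_KEY = "TRACEPARENT"

# W3C traceparent, version 00: version-traceid-parentid-flags (lowercase hex).
_TRACEPARENT = re.compile(r"00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})")


class TraceContext(NamedTuple):
    trace_id: str
    span_id: str
    sampled: bool


def to_traceparent(ctx: TraceContext) -> str:
    """Serialize a context into a version-00 traceparent string."""
    flags = "01" if ctx.sampled else "00"
    return f"00-{ctx.trace_id}-{ctx.span_id}-{flags}"


def from_traceparent(value: str) -> Optional[TraceContext]:
    """Parse a traceparent string; return None if it is malformed."""
    match = _TRACEPARENT.fullmatch(value.strip())
    if match is None:
        return None
    trace_id, span_id, flags = match.groups()
    # All-zero IDs are invalid per the W3C Trace Context specification.
    if trace_id == "0" * 32 or span_id == "0" * 16:
        return None
    return TraceContext(trace_id, span_id, bool(int(flags, 16) & 1))


def inject_env(ctx: TraceContext,
               env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Return a copy of env (default: os.environ) carrying the context."""
    child_env = dict(os.environ if env is None else env)
    child_env[ENV_KEY] = to_traceparent(ctx)
    return child_env


def extract_env(env: Optional[Mapping[str, str]] = None) -> Optional[TraceContext]:
    """Read the context a parent process left in the environment, if any."""
    source = os.environ if env is None else env
    value = source.get(ENV_KEY)
    return from_traceparent(value) if value else None
```

A pipeline runner would pass `inject_env(ctx)` as the `env` argument when spawning a tool (for example via `subprocess.run`), and the tool would call `extract_env()` at startup to continue the same trace instead of starting a disjoint one.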

@secustor - not sure if you've seen it but there's another OTEP that is specifically related to context propagation at the environment levels that's in progress. open-telemetry/opentelemetry-specification#740

@deejgregor has a working branch for that addition as well, which was brought up in today's SIG alongside this OTEP.

This is one player in the space https://github.com/inception-health/otel-export-trace-action |

|

@horovits I have a clarification question regarding the goal here, is this about "OpenTelemetry should define some semantic convention for CI/CD observability" or "CI/CD should use OpenTelemetry"? The latter sounds like a good issue/proposal for CI/CD systems rather than OpenTelemetry. |

in the context of an OpenTelemetry extension proposal OTEP, the point is of course to extend OTel to support CI/CD use cases. I believe that once there's an open specification in place, tool vendors/projects will follow and adopt it. |

Sorry, this is still not clear to me - what exactly does "extend OTel to support CI/CD use cases" mean? What is needed/missing (e.g. do we need extra API? do we need specific semantic conventions) from OTel? |

Sorry, I might have missed your question, but I tried to elaborate on that in the 'Internal details' section of the proposal. Can you elaborate on what you find missing in the above, so I can try to answer?

Now I understand, thanks! I was trying to see how the TC could help, since this PR seems to be stuck / not receiving much attention. I personally would suggest:

|

|

|

||

| OpenSearch dashboard for monitoring Jenkins pipelines: | ||

|  | ||

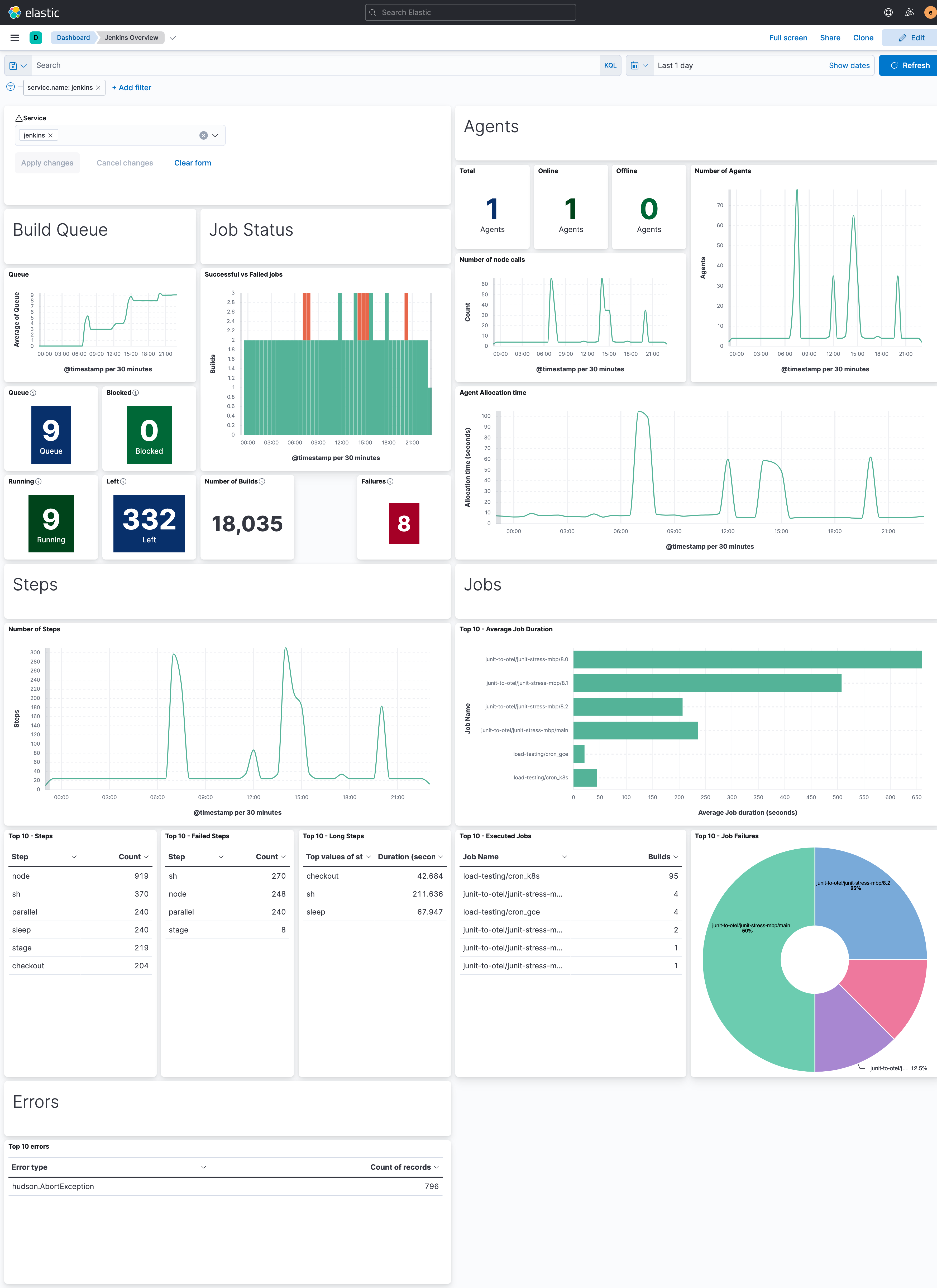

Also worth mentioning is the Elastic Stack, as the primary integration of the Jenkins OpenTelemetry plugin.

| Elastic Stack dashboard for monitoring Jenkins pipelines: | |

|  | |

| OpenSearch dashboard for monitoring Jenkins pipelines: | ||

|  | ||

|

|

||

| For more examples, see [this article](https://logz.io/learn/cicd-observability-jenkins/) on CI/CD observability using currently available open source tools. |

Could we add more articles and presentations here? There have been a bunch in the last three years.

- Improve your software delivery with CI/CD observability and OpenTelemetry

- DevOpsWorld 2021 - Embracing Observability in Jenkins with OpenTelemetry

- DevOpsWorld 2021 - Who Observes the Watchers? An Observability Journey

- Embracing Observability in CI/CD with OpenTelemetry

- FOSDEM 2022 - OpenTelemetry and CI/CD

- cdCon Austin 2022 - Making your CI/CD Pipelines Speaking in Tongues with OpenTelemetry

- Observability Guide - Elastic Stack 8.7

| OpenTelemetry instrumentation should then support in collecting and emitting the new data. | ||

|

|

||

| OpenTelemetry Collector can then offer designated processors for these payloads, as well as new exporters for designated backend analytics tools, as such prove useful for release engineering needs beyond existing ecosystem. | ||

Not only can the CI/CD system send OpenTelemetry data; the tools used as part of the CI/CD pipeline can also send their own OpenTelemetry data. Distributed tracing allows combining all these spans into a single stream of spans. This gives you more fine-grained details about your pipeline and process.

These are some of the tools that, when integrated into your pipelines, give you more details:

| - Which entity model should be supported to best represent CI/CD domain and pipelines? | ||

| - What are the common CI/CD workflows we aim to support? | ||

| - What are the primary tools that should be supported with instrumentation in order to gain critical mass on CI/CD coverage? | ||

| - Is CDEvents a good fit of a specification to integrate with? What are the alignment, overlap and gaps? And if so, how to establish the cross-foundation and cross-group collaboration in an effective manner? |

OpenTelemetry is more general purpose. It has spans, metrics, and logs. Overall you can replace CDEvents with OpenTelemetry but not the other way around.

Thanks @horovits for this proposal and for mentioning CDEvents here!

CDEvents aims to define shared semantics for interoperability in the CI/CD space. Interoperability includes the observability space too - common semantics in the events generated by the different tools enable things like visualization, metrics and more across tools. The transport layer we use today for such events is CloudEvents; the specification is, however, decoupled from the underlying transport by design, and we were planning indeed to reach out to this community to discuss collaboration.

So, I definitely agree we should collaborate, I think it would be really valuable for the ecosystem.

Many tools are adopting OpenTelemetry and many are adopting CDEvents (or both) and having common semantics would be very beneficial.

I'd be happy to join one of the OpenTelemetry community meetings to present CDEvents, if that is helpful.

CDEvents could be attached to spans as span events, and thus give the best of both worlds.
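As a rough illustration of that idea, the sketch below flattens a CDEvent-shaped dictionary into a span-event-shaped dictionary (a name, a timestamp, and flat attributes). The `context`/`subject` layout reflects my understanding of the CDEvents format, and the `cdevents.*` attribute keys, the sample values, and the plain-dict span are all assumptions made for this example; nothing here is an agreed convention.

```python
from typing import Any, Dict


def cdevent_to_span_event(cdevent: Dict[str, Any]) -> Dict[str, Any]:
    """Flatten a CDEvent-shaped dict into a span-event-shaped dict."""
    context = cdevent.get("context", {})
    subject = cdevent.get("subject", {})

    # Hypothetical attribute keys, chosen only for this sketch.
    attributes: Dict[str, Any] = {
        "cdevents.id": context.get("id"),
        "cdevents.source": context.get("source"),
        "cdevents.subject.id": subject.get("id"),
    }
    # Copy primitive subject content one level deep; nested objects are skipped.
    for key, value in subject.get("content", {}).items():
        if isinstance(value, (str, bool, int, float)):
            attributes[f"cdevents.subject.content.{key}"] = value

    return {
        "name": context.get("type", "cdevent"),
        "timestamp": context.get("timestamp"),
        "attributes": {k: v for k, v in attributes.items() if v is not None},
    }


# Sample event with made-up values.
event = {
    "context": {
        "id": "evt-1",
        "source": "/ci/example",
        "type": "dev.cdevents.pipelinerun.finished.0.1.1",
        "timestamp": "2023-03-20T14:27:05Z",
    },
    "subject": {
        "id": "run-42",
        "content": {"pipelineName": "build", "outcome": "success"},
    },
}

# A span modelled as a plain dict; a real SDK span would expose an add-event call.
span = {"name": "pipeline run", "events": []}
span["events"].append(cdevent_to_span_event(event))
```

The span keeps its timing and parent/child structure, while the event carries the CDEvents payload, so consumers of either model can still find what they expect.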

| ## Open questions | ||

|

|

||

| Open questions include: | ||

| - Which entity model should be supported to best represent CI/CD domain and pipelines? |

There is some work done in the Jenkins OpenTelemetry plugin to try to have a general model for naming conventions

Also in junit2otlp, for the testing naming conventions.

|

|

||

| CI/CD tools today emit various telemetry data, whether logs, metrics or trace data to report on the release pipeline state, to help pinpoint flakiness, and accelerate root cause analysis of failures, whether stemming from the application code, a configuration, or from the CI/CD environment. However, these tools do not follow any particular standard, specification, or semantic conventions. This makes it hard to use observability tools for monitoring these pipelines. Some of these tools provide some observability visualization and analytics capabilities out of the box, but in addition to the tight coupling the offered capabilities are oftentimes not enough, especially when one wishes to monitor aggregated information across different tools and different stages of the release process. | ||

|

|

||

| Some tools have started adopting OpenTelemetry, which is an important step in creating standardization. A good example is [Jenkins](https://github.com/jenkinsci/jenkins), a popular CI OSS project, which offers the [Jenkins OpenTelemetry plugin](https://plugins.jenkins.io/opentelemetry/) for emitting telemetry data in order to: |

GitHub Actions also supports sending OpenTelemetry data, using the action otel-export-trace-action.

|

|

||

| Open questions include: | ||

| - Which entity model should be supported to best represent CI/CD domain and pipelines? | ||

| - What are the common CI/CD workflows we aim to support? |

The major CI/CD tools

- Jenkins

- GitLab

- GitHub Actions

- CircleCI

- TeamCity

- ArgoCD

- TravisCI

- Azure DevOps

- ...

And build systems/tools:

- Maven (Java)

- Gradle (Java)

- npm/Yarn (Node.js)

- Mage (Go)

- CMake (C/C++)

- Make

- ...

Test frameworks:

- JUnit (Java, C, C++, ...)

- Pytest (Python)

- Jest (Node.js)

- ...

Deploy/DevOps tools:

- Ansible

- Terraform

- Puppet

- Chef

- ...

While it isn't mentioned here, I am happy to advocate this internally to be added to Atlassian's Bitbucket Pipelines.

good listing, thanks for putting it up.

I would first focus on CI/CD tools. build and test frameworks etc. can come as a separate phase, as it brings in new domains.

+Flux

+Tekton

From my understanding of this OTEP, it feels like there are two areas of concern:

- Defining a semantic convention on how to represent build status

- Understanding how long changes take from inception to production

Please let me know if that isn't correct.

The reason why I think these should be decoupled is that the VCS system and the CI/CD system should be treated as separate entities: we could further expand the insights we gain from a VCS system without forcing the implementation details onto the CI/CD system. For example, you wouldn't want your CI/CD system to track PR size, contributors, and time to merge; while it can, it does mean each CI/CD system needs to support interactions with each VCS, which can be vastly different. The same can be said vice versa.

I think we should drop the references to the collector and instrumentation, since I would consider them out of scope: they sidestep the conversation about standardising the actual data being sent from these systems. At the least, instrumentation should be moved to its own section.

|

|

||

| Building CI/CD observability involves four stages: Collect → Store → Visualize → Alert. OpenTelemetry provides a unified way for the first step, namely collecting and ingesting the telemetry data in an open and uniform manner. | ||

|

|

||

| If you are a CI/CD tool builder, the specification and instrumentation will enable you to properly structure your telemetry, package and emit it over OTLP. OpenTelemetry specification will determine which data to collect, the semantic convention of the data, and how different signal types can be correlated based on that, to support downstream analytics of that data by various tools. |

I think there is another potential spec here considering a lot of CI/CD is a combination of tooling in a repeatable fashion.

For example, if you're able to generate a trace of your pipeline, the existing tooling is unaware of what is being used as your trace context. This means that your tools could be generating their own trace contexts that are disjoint from the CI/CD trace context, making it extremely difficult to combine the two.

VCS and the use cases you mention, such as "track PR size, contributors, and time to merge", are not in the scope of this OTEP. This proposal is about the release pipelines. It can carry metadata such as the build number being deployed and perhaps the commit SHA, but without getting into the internals of the build process. Monitoring VCS may be a valid use case for a separate proposal.

| ## Internal details | ||

|

|

||

| OpenTelemetry specification should be enhanced to cover semantics relevant to pipelines, such as the branch, build, step (ID, duration, status), commit SHA (or other UUID), run (type, status, duration). These should be geared for observability into issues in the released application code. | ||

| In addition, oftentimes release issues are not code-based but rather environmental, stemming from issues in the build machines, garbage collection issues of the tool or even a malstructured pipeline step. In order to provide observability into the CI/CD environment, especially one with a distributed execution mechanism, there is a need to monitor various entities such as nodes, queues, jobs and executors (using the Jenkins terms, other tools having respective equivalents, which the specification should abstract with the semantic convention). |
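The pipeline semantics listed in the quoted section above (branch, build, step with ID, duration and status, commit SHA, run with type, status and duration) could be modelled roughly as follows. This is only a sketch: the class names and every attribute key (`pipeline.run.id`, `pipeline.step.status`, and so on) are hypothetical placeholders, since the proposal does not yet define a naming scheme.

```python
from dataclasses import dataclass
from typing import Dict, Union

AttributeValue = Union[str, int, float, bool]


@dataclass(frozen=True)
class PipelineRun:
    run_id: str
    run_type: str      # e.g. "push" or "scheduled" (illustrative values)
    status: str        # e.g. "success" or "failed"
    branch: str
    commit_sha: str
    duration_ms: int

    def attributes(self) -> Dict[str, AttributeValue]:
        """Attributes for the span (or log record) describing the whole run."""
        return {
            "pipeline.run.id": self.run_id,
            "pipeline.run.type": self.run_type,
            "pipeline.run.status": self.status,
            "pipeline.run.duration_ms": self.duration_ms,
            "pipeline.branch": self.branch,
            "pipeline.commit.sha": self.commit_sha,
        }


@dataclass(frozen=True)
class PipelineStep:
    step_id: str
    status: str
    duration_ms: int

    def attributes(self, run: PipelineRun) -> Dict[str, AttributeValue]:
        """Step attributes, plus the run ID so steps can be joined to their run."""
        return {
            "pipeline.run.id": run.run_id,
            "pipeline.step.id": self.step_id,
            "pipeline.step.status": self.status,
            "pipeline.step.duration_ms": self.duration_ms,
        }
```

Repeating the run identifier on every step is one possible design choice: it lets a backend correlate step-level signals with the run even when they arrive as separate logs or metrics rather than as child spans.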

This feels like another area that is conflating what the goal of this OTEP is.

Internal operations of Jenkins (or any build system) should be separated out into their own section.

If it is within your control (i.e. self-hosted runners), you can use the OTel Collector and auto-instrumentation agents (where possible and not provided by the vendor) on build nodes to surface this information.

Many times pipeline runs fail due to environmental issues rather than ones related to the deployed code.

I see discerning these two cases as a core value that CI/CD observability brings.

And while you can still use OTEL to monitor your individual tools, the purpose of this OTEP is to standardize on this,

same as we've done with the client-side instrumentation WG.

Indeed, issues with the environment in a distributed build system can be very difficult to track down, even if both the CI and the nodes are instrumented to emit OTEL data.

For example, the CI could use https://plugins.jenkins.io/opentelemetry/ to send traces of the job executions, and build agents could use https://github.com/prometheus/node_exporter and/or https://github.com/open-telemetry/opentelemetry-collector to export host metrics. Even in this scenario it can be difficult to correlate a failing pipeline run with the metrics of the host(s) that executed the build.

The plugin https://plugins.jenkins.io/opentelemetry-agent-metrics/ builds on https://plugins.jenkins.io/opentelemetry/ to solve this issue by running dedicated otel collectors on each build agent and adding attributes to the metrics identifying the run and main CI controller (https://github.com/jenkinsci/opentelemetry-agent-metrics-plugin/blob/main/src/main/resources/io/jenkins/plugins/onmonit/otel.yaml.tmpl)
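The correlation idea described above (stamping host metrics with attributes that identify the run and the controller) can be sketched as below. This is not the plugin's implementation; the data-point shape and the attribute keys `ci.run.id` and `ci.controller` are hypothetical and chosen only to show why shared identifying attributes make the join possible.

```python
from typing import Any, Dict, Iterable, List

DataPoint = Dict[str, Any]


def tag_with_run(points: Iterable[DataPoint], run_id: str,
                 controller: str) -> List[DataPoint]:
    """Return copies of host metric points enriched with run-identifying attributes."""
    return [
        {
            **point,
            "attributes": {
                **point.get("attributes", {}),
                "ci.run.id": run_id,          # hypothetical key
                "ci.controller": controller,  # hypothetical key
            },
        }
        for point in points
    ]


def metrics_for_run(points: Iterable[DataPoint], run_id: str) -> List[DataPoint]:
    """Select the host metric points recorded while a given run was executing."""
    return [
        point for point in points
        if point.get("attributes", {}).get("ci.run.id") == run_id
    ]
```

With both the pipeline trace and the host metrics carrying the same run identifier, a failing run can be looked up directly against the CPU, memory, or disk metrics of the agent that executed it.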

|

|

||

| The CDF (Continuous Delivery Foundation) has the Events Special Interest Group ([SIG Events](https://github.com/cdfoundation/sig-events)), which explores standardizing on CI/CD events to facilitate interoperability (it is a work-stream within the CDF SIG Interoperability). The group is working on [CDEvents](https://cdevents.dev/), a standardized event protocol that caters for technology-agnostic machine-to-machine communication in CI/CD systems. It makes sense to evaluate alignment between the standards. | ||

|

|

||

| OpenTelemetry instrumentation should then support in collecting and emitting the new data. |

This is more a matter of wording, but the instrumentation provided by OpenTelemetry doesn't need to be updated; rather, our definitions of the events that should be captured within this domain of interest do.

| OpenTelemetry instrumentation should then support in collecting and emitting the new data. | |

| These defined events from the Continuous Delivery Foundation (CDF) should be merged into the semantic convention for these systems to implement. |

|

|

||

| OpenTelemetry instrumentation should then support in collecting and emitting the new data. | ||

|

|

||

| OpenTelemetry Collector can then offer designated processors for these payloads, as well as new exporters for designated backend analytics tools, as such prove useful for release engineering needs beyond existing ecosystem. |

I would suggest moving this to a stretch goal, to be honest, mostly because contributing components to the collector requires someone (hopefully contributors from that company) to help maintain that component. Gathering interest from vendors to develop and contribute components may take a bit of effort, and I wouldn't want it to block this OTEP.

| Open questions include: | ||

| - Which entity model should be supported to best represent CI/CD domain and pipelines? | ||

| - What are the common CI/CD workflows we aim to support? | ||

| - What are the primary tools that should be supported with instrumentation in order to gain critical mass on CI/CD coverage? |

This should be made its own area of interest, mostly because there is a lot of scope, including how we would standardise tooling to work and link back to the build pipeline natively.

| - Which receivers are needed beyond OTLP to support the use cases and workflows? | ||

| - Which exporters are needed to support common backends? | ||

| - Which processors are needed to support the defined workflows? |

I would consider these out of scope, considering that we are not looking to bring in additional instrumentation from tooling and vendors, but rather to have an agreed-upon convention that each implements.

+1 to this one.

|

👋 Hello all! |

|

A similar effort focused on measuring deliveries in particular: |

|

Looking to revive this OTEP. It looks to have been a while since there's been any traction (though I just found a new comment from a couple weeks ago). I'd like to know what needs to be done to get this moved forward & in. I brought this up at the specification SIG meeting today, and the overall thought was to bring the discussion back here & potentially into the WG.

|

Hey @adrielp, I am also interested in this OTEP and would like to help move this forward :) |

|

Awesome @thisthat! Per the last SIG WG meeting, I've started working on a project proposal to create a CI/CD Observability Semantic Conventions working group, focused on driving this OTEP as well as the Environment Variables as trace propagators OTEP (due to how necessary it is for distributed tracing in batch systems like CI/CD). Part of the project proposal requires figuring out staffing needs and getting folks together for the working group, so I'm definitely looking for folks there. I also synced with @horovits yesterday, and we'll be syncing up again on Monday about this OTEP in particular.

|

Please, keep me posted @adrielp I am more than happy to join and help the WG :) |

|

@adrielp same here! |

|

@adrielp please include me as well, happy to be a part of it! |

|

Count me in! |

|

Thanks Adriel,

Count me in for the working group. I definitely hope we can collaborate with the CDEvents (https://cdevents.dev) project as well.

Andrea

+## Open questions

+

+Open questions include:

+- Which entity model should be supported to best represent CI/CD domain and pipelines?

+- What are the common CI/CD workflows we aim to support?

+Tekton

+1 (I'm a bit biased as a Tekton maintainer :D)

On Tekton side we have a few relevant features:

- emit open telemetry metrics

- generate open telemetry traces for distributed tracing via annotations on Tekton resources

- emit CloudEvents as well as CDEvents (experimental)

So, we definitely care about observability in Tekton

Andrea

Would love to help with this, as I'm now finalising the work on New component: Github Actions Receiver and https://github.com/krzko/run-with-telemetry to provide telemetry for GitHub Actions.

@afrittoli yes we should carry on the discussion we started with the CDEvents as to potential collaboration between the projects. some concerns have been raised on your team last time, so we should map carefully if there's an overlap between OTel and CDEvents and the fit. |

@krzko Congrats on completing the work on GitHub Actions! the insights of your experimentation will be valuable here. |

we'd like to hold a call to share with everyone the work to formalize a working group, and to see who's interested in getting involved as we figure out staffing requirements. |

|

The PR has been opened to create the CI/CD Observability Working Group. Without a doubt, it's rough, but it's ready to be read, commented on, and discussed in the upcoming meeting. Staffing of course is one of those hot topics. 😄 🚀

|

@mhausenblas @afrittoli - I just realized coming back here that I missed your names in the working group PR. Sorry about that 😞 I've added you now, please feel free to check it out and make sure I got it right! Thanks! https://github.com/open-telemetry/community/pull/1822/files#diff-41c277076e06d5ea84d2e8bc9eded2bc97e7f0888502f4f8d691b6c5c3639e57 |

|

Important update: we got approval of the TC to establish the CI/CD Observability SIG. |

|

@horovits Any chance you could address/discuss/answer the comments on the PR? I will do another full review once that is done.

|

@carlosalberto @horovits - just to provide an update on this. We discussed this OTEP on the SemConv meeting last week. We actually might not need to proceed directly with this OTEP. Based on current direction, OTEPs are for SPEC changes, and right now our focus is the Semantic Conventions changes. We're reviewing the CDEvents work right now and coming up with a data model. Once we do that we'll be directly contributing to the Semantic Conventions through pull requests. If we need to make any future specification changes, we may leverage this OTEP or make new ones (that are smaller) to account for those changes. But as of now, this OTEP doesn't directly need to be moved forward; it just provides larger visibility into the efforts and context until there are spec changes. cc. @jsuereth

|

Thanks for the follow up! Should we then close this OTEP? We can always find it later on if/as needed. |

|

@carlosalberto I'm fine with closing it if that's how y'all want to handle it. Based on the conversations, I think it makes logical sense given the direction we're headed. @horovits - any objections? |

|

Hey @horovits Any concern? |

@carlosalberto @adrielp sure let's follow the process of the Semantic Conventions team, if this OTEP is no longer required then let's close it. |

|

closing the OTEP PR per the feedback from @carlosalberto @adrielp @jsuereth and the OpenTelemetry Semantic Conventions WG.

|

This PR is a new OTEP for CI/CD Observability