The scope of this project was limited due to the GCP free trial constraints.

- Set the following environment variables:

  - `WEATHER_API`: Visual Crossing API key
  - `GCP_GCS_BUCKET`: the name of your GCS bucket (shown in the GCP Console)
  - `GCP_PROJECT_ID`: your GCP project ID (shown in the GCP Console)
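As a minimal sketch, the three variables can be exported in the shell that will later start the containers. The values below are placeholders, and `check_env` is a hypothetical helper that is not part of this project. How the variables reach the Airflow containers depends on your docker-compose file, which is not shown here.

```shell
# Placeholder values (assumptions): replace each with your own.
export WEATHER_API="your-visual-crossing-api-key"
export GCP_GCS_BUCKET="your-gcs-bucket-name"
export GCP_PROJECT_ID="your-gcp-project-id"

# check_env is a hypothetical helper, not part of this project.
# It returns non-zero if any named variable is unset or empty.
check_env() {
  missing=0
  for name in "$@"; do
    # Indirectly read the variable whose name is stored in $name.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing environment variable: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

check_env WEATHER_API GCP_GCS_BUCKET GCP_PROJECT_ID && echo "all variables set"
```

Running the check before `docker-compose up` catches a missing key early, before a DAG run fails on it.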

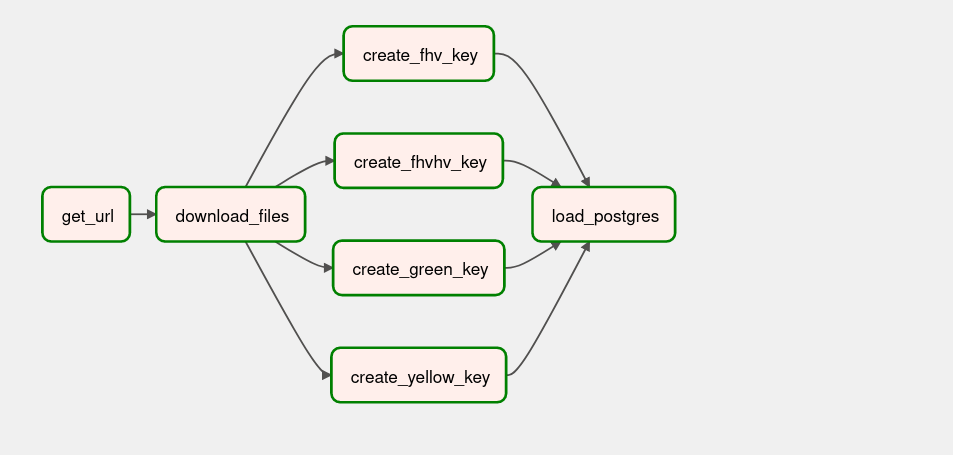

Airflow setup with Docker, following the official guidelines:

- Build the image (only needed the first time, or whenever the Dockerfile changes; the first build takes ~15 minutes) with `docker-compose build`, then start the services with `docker-compose up`.

- In another terminal, run `docker-compose ps` to see which containers are up and running (there should be 7, matching the services in your docker-compose file).

- Log in to the Airflow web UI at `localhost:8080` with the default credentials: `airflow`/`airflow`.

- Run your DAG from the web console.

- When finished, or to shut down the containers, run `docker-compose down`.

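To stop and delete the containers, delete the volumes holding database data, and remove the downloaded images, run `docker-compose down --volumes --rmi all`. To remove the volumes and any orphaned containers while keeping the images, run `docker-compose down --volumes --remove-orphans` instead.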
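The `docker-compose ps` check from the setup steps can be scripted as a rough sketch. `running_services` and `check_services` are hypothetical helper names, and the sketch assumes your docker-compose version supports the `--services` and `--filter` options of `ps`. Run it from the directory that contains your docker-compose file.

```shell
# Hypothetical helpers, not part of this project.

# Print the number of services that docker-compose reports as running.
running_services() {
  docker-compose ps --services --filter "status=running" | wc -l | tr -d '[:space:]'
}

# Compare the running count against an expected number of services.
check_services() {
  expected="$1"
  actual="$(running_services)"
  if [ "$actual" -eq "$expected" ]; then
    echo "ok: $actual services running"
  else
    echo "expected $expected running services, found $actual" >&2
    return 1
  fi
}
```

After `docker-compose up` has settled, `check_services 7` should succeed if all 7 services mentioned above are running. Adjust the number if your docker-compose file defines a different set of services.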