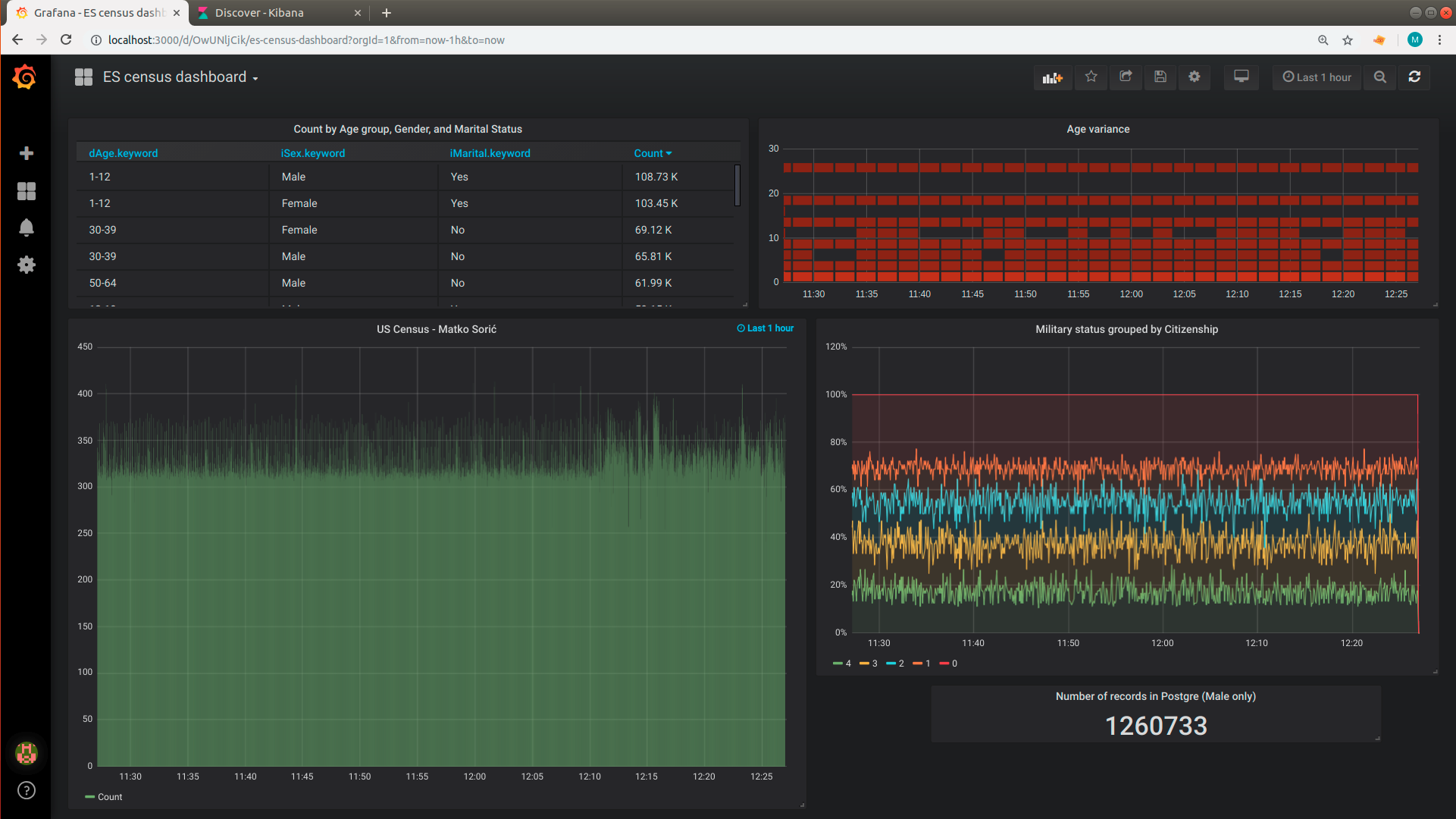

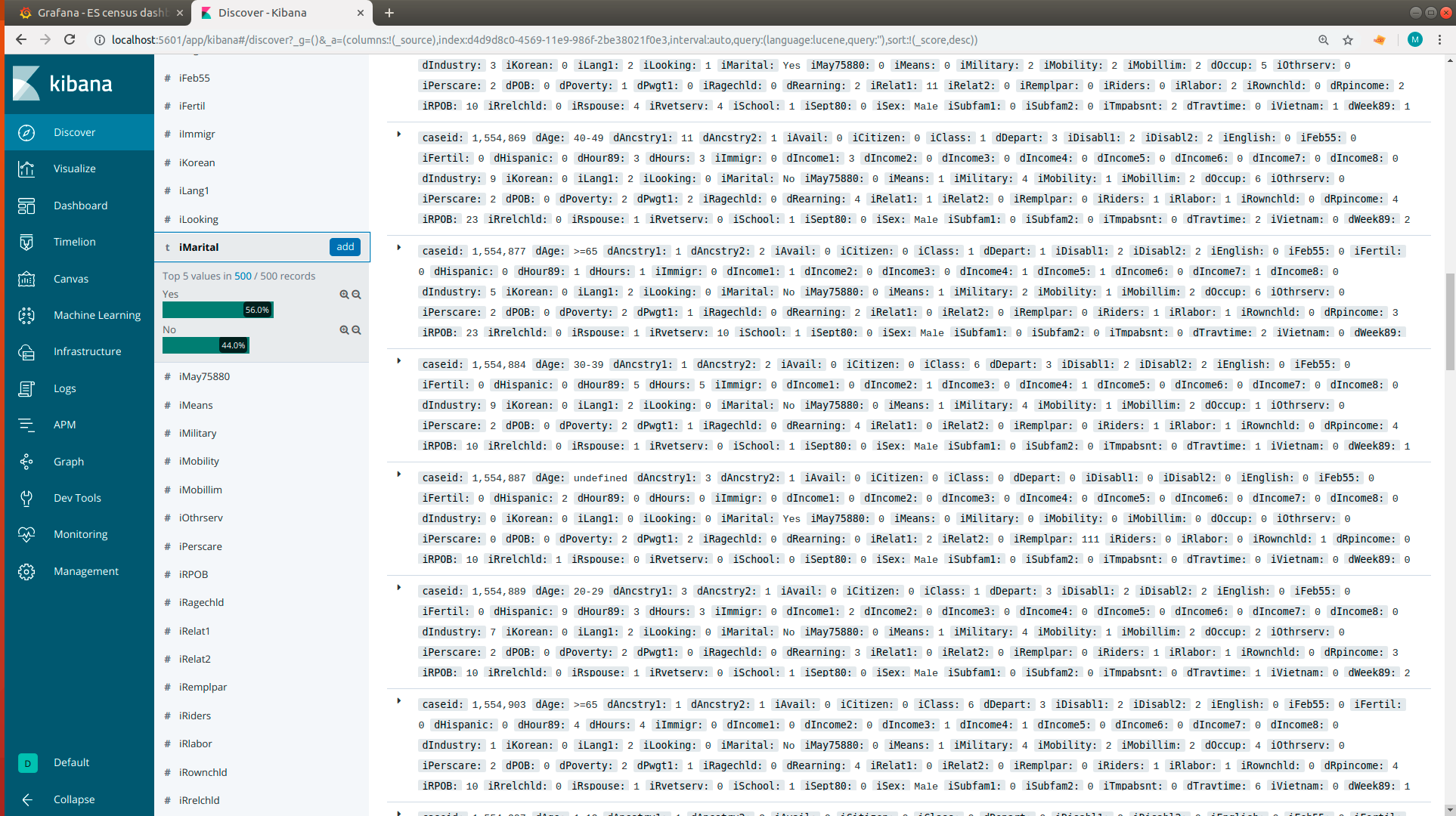

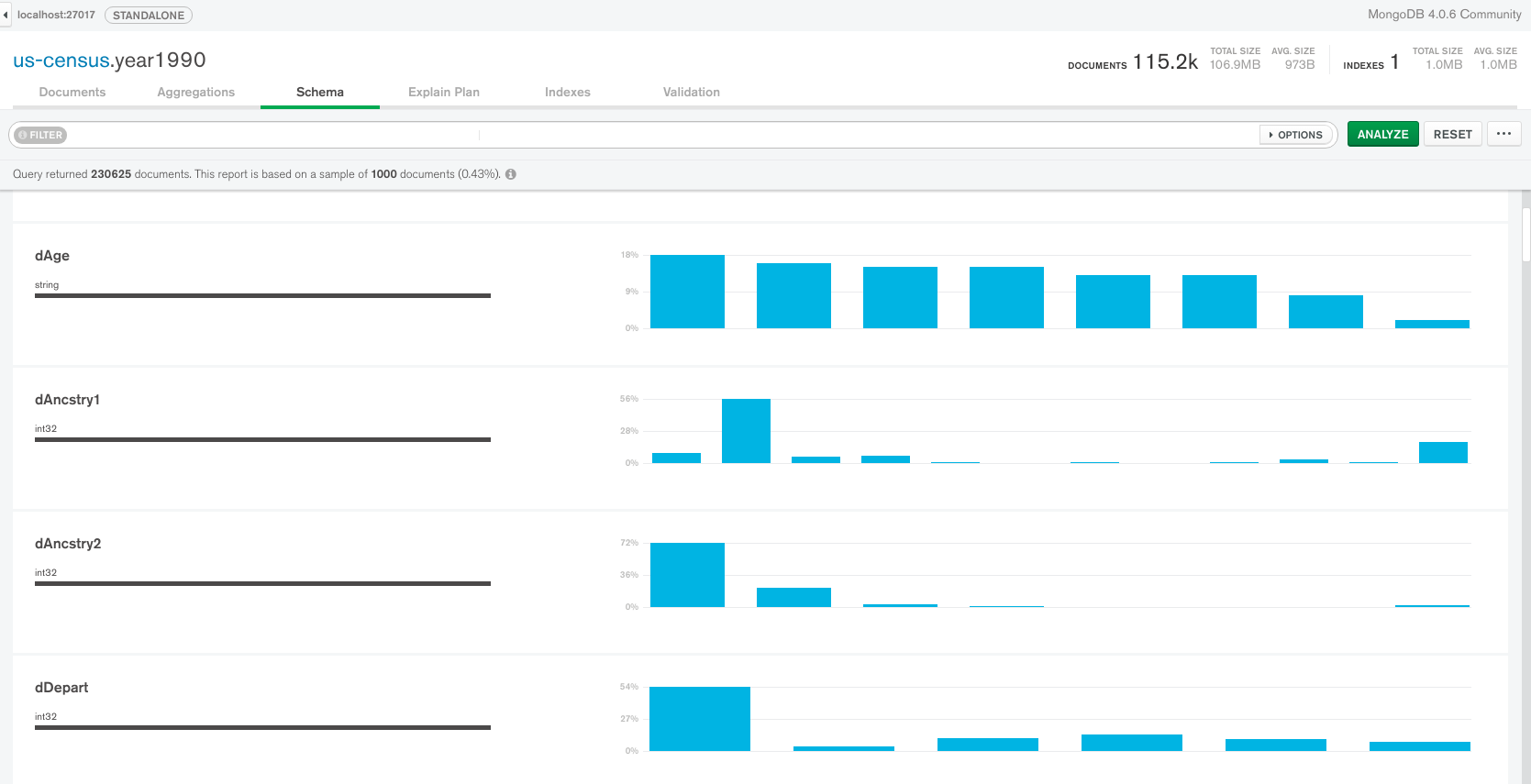

This is my data streaming demonstration, built on census information collected in the United States in 1990. The initial data is in a CSV file. A Kafka producer reads it, adds a timestamp in epoch time to enable Grafana monitoring, and sends each record to a local Kafka instance, writing to one of two topics (us-census-male or us-census-female) depending on the gender code. A Spark Streaming application subscribes to both topics and is configured to send data to PostgreSQL, MongoDB, and ElasticSearch. To demonstrate ETL and data enrichment, some columns (gender, age, marital status, etc.) are transformed from codes to their original values before being passed along to MongoDB and ElasticSearch. MongoDB and ElasticSearch contain records from both topics, male and female. PostgreSQL receives only raw data from the male topic. Grafana is connected to ElasticSearch and PostgreSQL for monitoring.
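The producer step described above can be sketched roughly as follows. This is a minimal illustration, not the actual producer from this project: the column name `iSex`, the 0 = male / 1 = female coding, the `timestamp` field name, the use of epoch seconds, and the JSON payload format are all assumptions. The Kafka client is abstracted behind a `send(topic, payload)` callable so the routing logic can be run without a broker.

```python
import csv
import io
import json
import time

# Topic names come from the description above; the column name "iSex" and
# the 0 = male / 1 = female coding are assumptions about the CSV layout.
TOPIC_BY_GENDER_CODE = {"0": "us-census-male", "1": "us-census-female"}


def route_record(row, now=None):
    """Return (topic, payload_bytes) for one CSV row, or None if the gender code is unknown."""
    topic = TOPIC_BY_GENDER_CODE.get((row.get("iSex") or "").strip())
    if topic is None:
        return None
    record = dict(row)
    # Epoch seconds; "timestamp" is a hypothetical field name.
    record["timestamp"] = int(time.time() if now is None else now)
    return topic, json.dumps(record).encode("utf-8")


def produce(csv_lines, send):
    """Read CSV rows and hand each routed record to send(topic, payload)."""
    sent = 0
    for row in csv.DictReader(csv_lines):
        routed = route_record(row)
        if routed is not None:
            send(*routed)
            sent += 1
    return sent


if __name__ == "__main__":
    # Tiny in-memory sample standing in for the real CSV file.
    sample = io.StringIO("caseid,dAge,iSex\n10000,5,0\n10001,6,1\n")
    produce(sample, lambda topic, payload: print(topic, payload.decode()))
```

With the kafka-python package installed, the `send` argument could be the bound method `KafkaProducer(bootstrap_servers="localhost:9092").send`, which accepts the topic and the value bytes as its first two arguments. The broker address here is also an assumption.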

I downloaded the dataset from the UCI Machine Learning Repository.

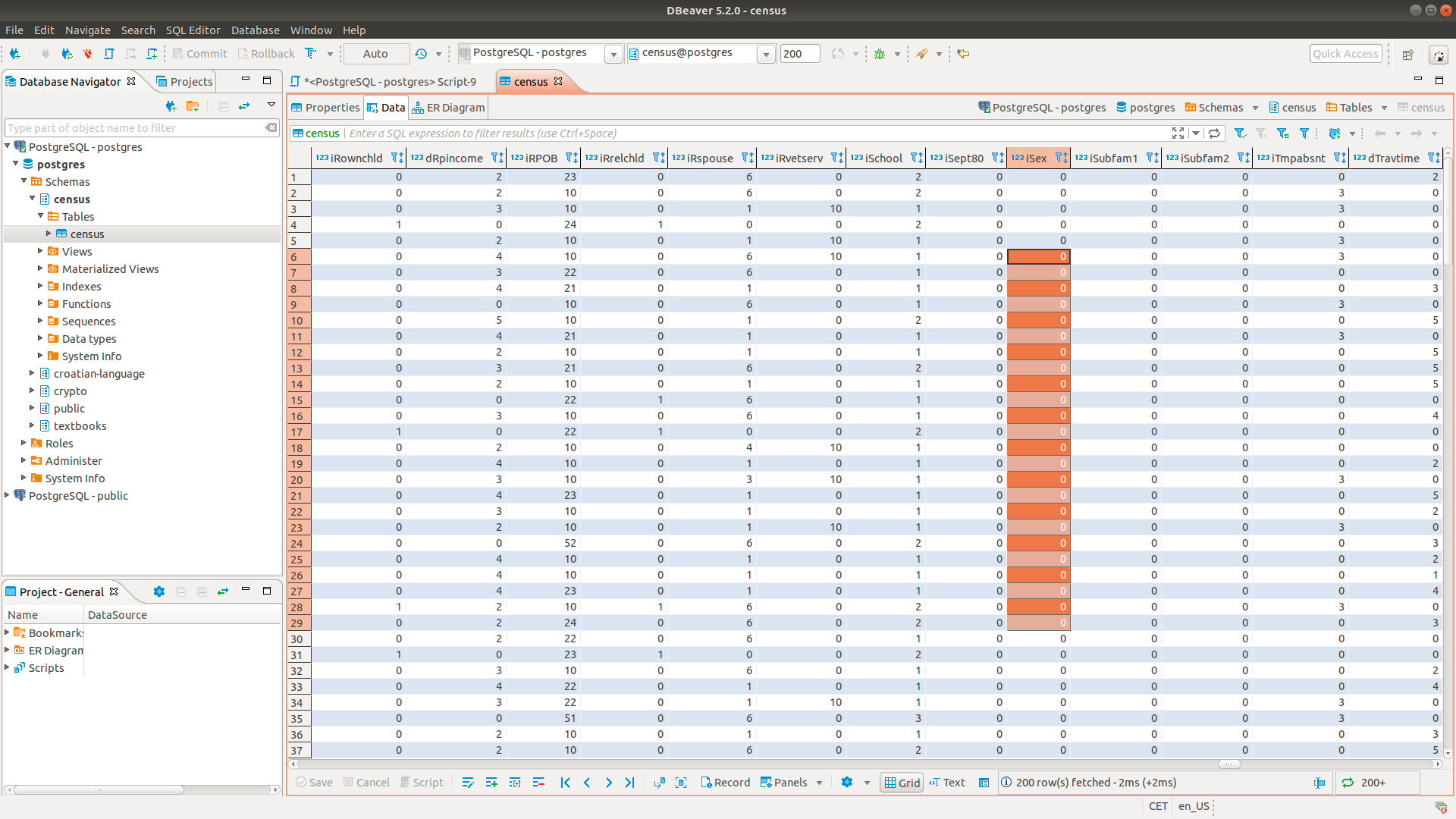

The original data, before enrichment, contains only codes, so every column is numeric.
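To make the code-to-value enrichment concrete, here is a small sketch in plain Python. It is not the Spark Streaming code from this project, and the column names and labels in `MAPPINGS` are illustrative placeholders; the real code-to-label pairs come from the dataset's mappings file.

```python
# Illustrative lookup tables; the real code-to-label pairs live in the
# dataset's mappings file, so treat these values as placeholders.
MAPPINGS = {
    "iSex": {0: "Male", 1: "Female"},
    "iMarital": {0: "Now married", 4: "Never married"},
}


def enrich(record, mappings=MAPPINGS):
    """Return a copy of record with mapped codes replaced by labels.

    Columns without a mapping, and codes missing from a mapping, pass
    through unchanged so no data is silently dropped.
    """
    enriched = dict(record)
    for column, labels in mappings.items():
        if column in enriched:
            enriched[column] = labels.get(enriched[column], enriched[column])
    return enriched


if __name__ == "__main__":
    print(enrich({"caseid": 10000, "iSex": 0, "iMarital": 4}))
```

Leaving unknown codes untouched is a deliberate choice in this sketch: the raw value still reaches MongoDB and ElasticSearch, where an unmapped code is easy to spot.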

The uncompressed CSV file is about 360 MB.

US Census Data (1990)

Code mappings are here:

Mappings

Spark Streaming 2.4.0

Kafka 2.1

PostgreSQL 10.6

MongoDB 4.0.5

ElasticSearch 6.6.3

Grafana 5.4.3