Introduction: This repository applies the Temporal Relation Network (TRN) to the ASLLRP dataset. The goal is to recognize sign labels, restricted to lexical signs. In addition to the TRN framework, it includes keypoint detection, hand detection, and left/right hand recognition.

Note: always clone this project with git clone --recursive https://github.com/metalbubble/TRN-pytorch so that the submodules are fetched; otherwise you will not be able to use the Inception-series CNN architectures.

- PyTorch 1.3

- Weights & Biases

- Detectron2

- Extract frames.

python extract_frame.py

- Cut videos to utterance clips.

python cut_video_to_clip.py

- Delete blank frames.

python delete_unvalid_frame.py

- Generate vocabulary and handshape list.

python generate_vocabulary.py

- Parse the annotation XML file and generate a JSON file for each utterance video. Note: handshape annotations can also be included in this step, although I do that in process_dataset_dai_hand.py.

python parse_sign.py

- Cut the utterance videos into sign videos and reorganize them by label. Note: since a label name might include '/', it is necessary to replace '/' with 'or'.

python GenerateClassificationFolders.py
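The label clean-up described above can be sketched as follows. This is a minimal illustration, not the code in GenerateClassificationFolders.py; the function names and the example label are hypothetical.

```python
from pathlib import Path


def label_to_folder_name(label: str) -> str:
    """Replace '/' with 'or' so the label can be used as one directory name."""
    return label.replace("/", "or")


def sign_clip_dir(root: str, label: str) -> Path:
    """Build the per-label folder path (hypothetical layout: <root>/<label>)."""
    return Path(root) / label_to_folder_name(label)


# "A/B" is a made-up label used only for illustration.
print(label_to_folder_name("A/B"))  # AorB
```

Without the replacement, a label containing '/' would be interpreted as a nested path and split the clips across two directory levels.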

- Generate the category list and the train/test lists. Each row in a train/test list is [video_id, num_frames, class_idx].

python process_dataset_dai.py
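A small reader for rows of this form might look like the sketch below. It assumes the three fields are separated by whitespace, which is an assumption rather than something stated above; VideoRecord, parse_list_lines, and the sample rows are hypothetical.

```python
from typing import Iterable, List, NamedTuple


class VideoRecord(NamedTuple):
    video_id: str
    num_frames: int
    class_idx: int


def parse_list_lines(lines: Iterable[str]) -> List[VideoRecord]:
    """Parse rows of the form 'video_id num_frames class_idx'.

    Assumes whitespace-separated fields; blank lines are skipped.
    """
    records = []
    for line in lines:
        parts = line.split()
        if not parts:
            continue
        video_id, num_frames, class_idx = parts[:3]
        records.append(VideoRecord(video_id, int(num_frames), int(class_idx)))
    return records


# Made-up rows for illustration only.
sample = ["clip_0001 42 7", "", "clip_0002 18 3"]
records = parse_list_lines(sample)
print(records[0].num_frames)  # 42
```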

To compute the optical flow image of each frame, run

python extract_of.py

- Pretrain on Egohands dataset.

python Hand/train_detectron2_ego.py

- Fine-tune on my annotated images from the ASLLRP dataset.

python Hand/findtune_asl_detectron2.py

python Hand/train_detectron2_keypoint.py

Simply associate the left and right wrist keypoints with the detected bounding boxes. The results are saved in root/dai_lexical_handbox; each file holds the bounding boxes in [left, right] order.

python Hand/classify_leftright_asl_detectron2.py
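One way to associate wrist keypoints with hand boxes is a greedy nearest-centre match, sketched below. This is an assumption about how such an association could work, not the logic of classify_leftright_asl_detectron2.py; the (x1, y1, x2, y2) box format, the function names, and the coordinates are all hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

Box = Tuple[float, float, float, float]  # assumed (x1, y1, x2, y2)
Point = Tuple[float, float]


def box_center(box: Box) -> Point:
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2.0, (y1 + y2) / 2.0)


def sq_dist(a: Point, b: Point) -> float:
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2


def assign_left_right(
    left_wrist: Point, right_wrist: Point, boxes: Sequence[Box]
) -> List[Optional[Box]]:
    """Return [left_box, right_box]; an entry is None if no box is left for it."""
    wrists = [left_wrist, right_wrist]
    # All (distance, wrist index, box index) pairs, closest first.
    pairs = sorted(
        (sq_dist(w, box_center(b)), wi, bi)
        for wi, w in enumerate(wrists)
        for bi, b in enumerate(boxes)
    )
    result: List[Optional[Box]] = [None, None]
    used = set()
    for _, wi, bi in pairs:
        # Each wrist gets at most one box, and each box is used at most once.
        if result[wi] is None and bi not in used:
            result[wi] = boxes[bi]
            used.add(bi)
    return result


# Made-up coordinates for illustration only.
boxes = [(280.0, 180.0, 340.0, 240.0), (80.0, 170.0, 130.0, 230.0)]
left_box, right_box = assign_left_right((100.0, 200.0), (300.0, 210.0), boxes)
print(left_box)  # (80.0, 170.0, 130.0, 230.0)
```

Matching the closest pair first keeps a single detected box from being assigned to both hands when only one hand is visible.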

We can then generate train/test lists in which each row is [video_id, num_frames, class_idx, right_start_hs, left_start_hs, right_end_hs, left_end_hs].

python process_dataset_dai_hand.py

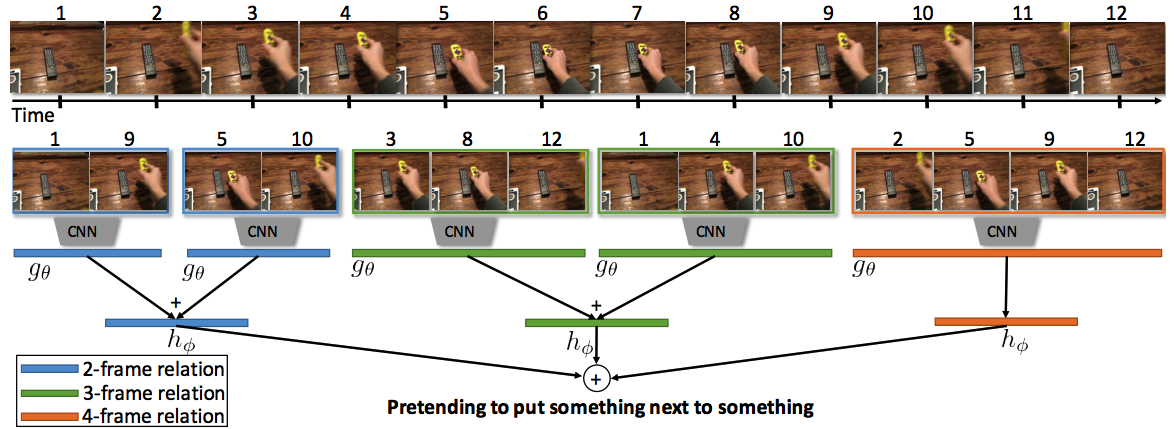

The core code implementing the Temporal Relation Network module is TRNmodule. It is plug-and-play on top of TSN.
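As described in the TRN paper, a relation at scale d is computed over temporally ordered d-frame tuples, and multi-scale TRN combines scales 2 through the number of sampled frames. The sketch below only enumerates those frame-index tuples; it leaves out the networks that score each tuple and is not the code in TRNmodule. The function names are hypothetical.

```python
from itertools import combinations
from typing import Dict, List, Tuple


def relation_tuples(num_segments: int, scale: int) -> List[Tuple[int, ...]]:
    """All temporally ordered frame-index tuples of the given size."""
    return list(combinations(range(num_segments), scale))


def multiscale_relations(num_segments: int) -> Dict[int, List[Tuple[int, ...]]]:
    """Frame-index tuples for every scale from 2 up to num_segments."""
    return {
        scale: relation_tuples(num_segments, scale)
        for scale in range(2, num_segments + 1)
    }


relations = multiscale_relations(3)
print(relations)  # {2: [(0, 1), (0, 2), (1, 2)], 3: [(0, 1, 2)]}
```

With 3 segments there are three 2-frame relations and one 3-frame relation; the number of tuples grows quickly with more segments, which is why implementations typically subsample them.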

- The command to train single-scale TRN on full frames is shown below. To train on hand images, use main-hand.py; to train jointly, use main-handjoint.py.

CUDA_VISIBLE_DEVICES=0 python main.py \

--dataset dai --modality RGB \

--arch BNInception --num_segments 3 \

--consensus_type TRN --batch_size 64

- The command to train multi-scale TRN

CUDA_VISIBLE_DEVICES=0 python main.py \

--dataset dai --modality RGB \

--arch BNInception --num_segments 3 \

--consensus_type TRNmultiscale --batch_size 64

- The command to test the single-scale TRN

python test_video_dai.py \

--frame_folder $FRAME_FOLDER \

--test_segments 3 \

--consensus_type TRN \

--weight $WEIGHT_PATH \

--arch BNInception \

--dataset dai

- The command to test the single-scale two-stream TRN

python test-dai-2stream.py \

--dataset rachel --modality RGB \

--arch BNInception --num_segments 3 \

--resume $RGB_WEIGHTPATH \

--resume_of $OF_WEIGHTPATH \

--consensus_type TRN --batch_size 64 \

--data_length 3 \

--evaluate

B. Zhou, A. Andonian, A. Oliva, and A. Torralba. Temporal Relational Reasoning in Videos. European Conference on Computer Vision (ECCV), 2018.

@article{zhou2017temporalrelation,

title = {Temporal Relational Reasoning in Videos},

author = {Zhou, Bolei and Andonian, Alex and Oliva, Aude and Torralba, Antonio},

journal={European Conference on Computer Vision},

year={2018}

}