
Unstable Kubernetes v1.6.6 cluster created with Kops 1.6.2 #2928

Comments

|

@bboreham I'm wondering if you could weigh in on the second issue. I'm inclined to think that weave is somehow involved here because of the errors we're getting in syslog from kubelet i.e. CNI errors. And before you ask, we're using weave 1.9.4. |

|

I'm using kops 1.6.2 and upgraded a cluster from k8s 1.6.2 to 1.6.7. The cluster is using Calico. |

I see exactly one error mentioning CNI: This is a message from kubelet basically saying the container process was dead when it tried to check on it. And all the other messages are along the same lines. Looks to me like your Docker is very unhappy, but I have no idea why. If there are other messages you wanted me to look at please clarify. |

|

This is no longer an issue for me ... I believe the steps I've taken in #2982 (comment) have alleviated the problem. |

|

This, unfortunately, is still an issue. 😰 Re-opening. |

|

Did the changes with kubelet help at all? |

|

@chrislovecnm to clarify:

To summarise ... these are the issues I'm trying to solve (I don't know if they are related to my cluster's instability):

At the moment ... I'm investigating a kernel panic and trying to set up kernel dumps using |

|

@chrislovecnm another reason I haven't used the flags is because I'm still on kops 1.6.2 and those features will probably be in kops 1.7.x. |

|

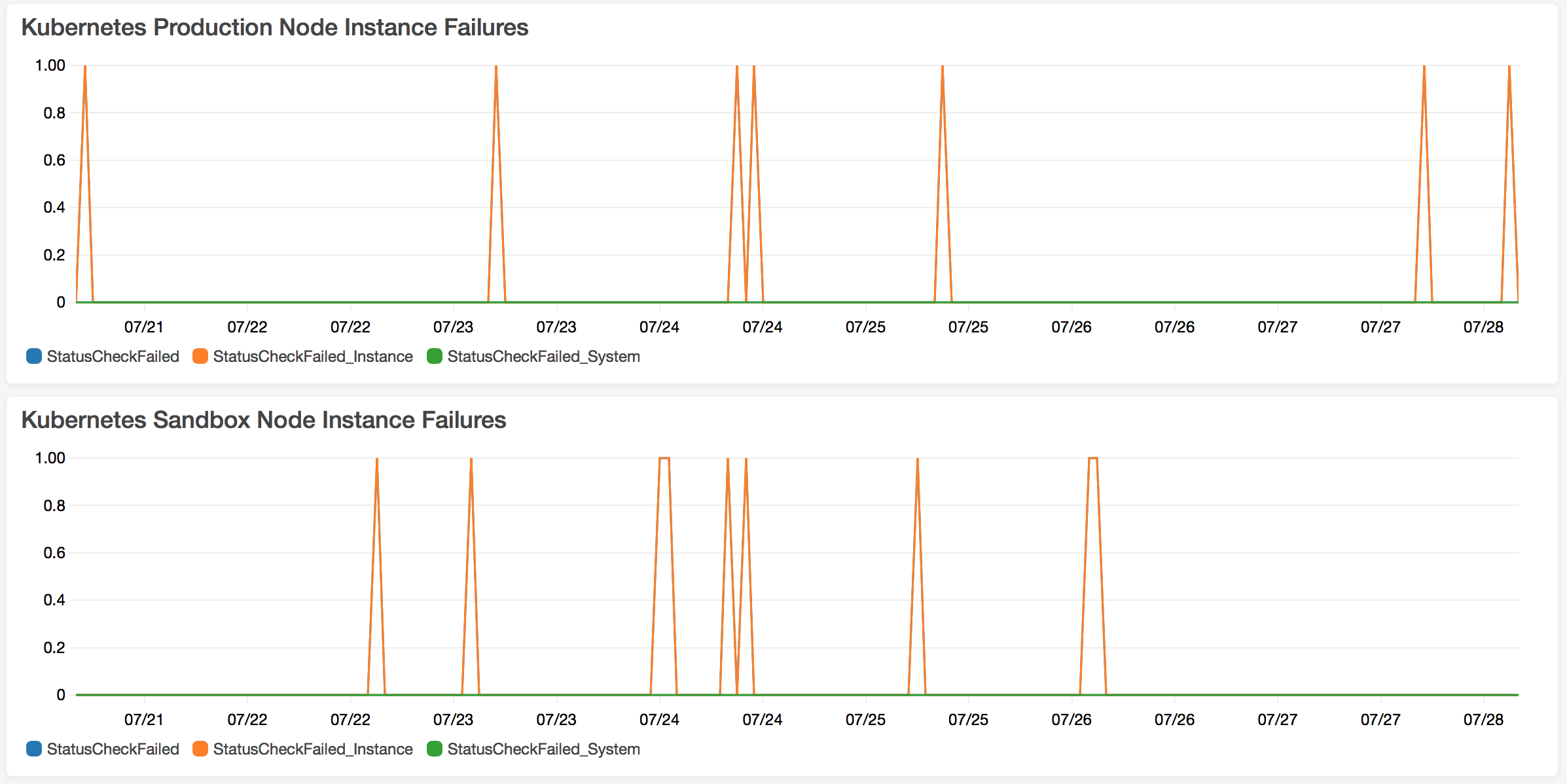

@chrislovecnm reporting back ... using an AMI with kernel 4.4.78 has solved no. 2 and no. 3 listed in #2928 (comment). I changed the AMI on the sandbox cluster early yesterday morning. You can see I used to get 1 termination per day on average. No more node terminations 👇 And the same with the "unregister_netdevice: waiting for lo to become free" issue ... 👇 |
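For anyone wanting to check whether the "unregister_netdevice" message has really stopped after a kernel change, a minimal sketch along these lines could count its occurrences per day. It assumes traditional syslog lines that begin with a `Mon DD HH:MM:SS` timestamp; the function name `count_by_day` is hypothetical and not part of kops or Kubernetes.

```python
from collections import Counter

# Kernel message quoted in this thread.
MESSAGE = "unregister_netdevice: waiting for lo to become free"


def count_by_day(lines, needle=MESSAGE):
    """Count log lines containing `needle`, grouped by day.

    Assumption: each line starts with a traditional syslog timestamp
    such as "Jul 24 06:01:02", so the first two fields identify the day.
    """
    counts = Counter()
    for line in lines:
        if needle in line:
            day = " ".join(line.split()[:2])
            counts[day] += 1
    return dict(counts)
```

It can be fed an open file, for example `count_by_day(open("/var/log/syslog", errors="replace"))`, and a day with no matches simply does not appear in the result.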

|

Is headroom still not fixed? |

|

@chrislovecnm I'll be doing that next week. Have been busy investigating/addressing cluster instability issues. Will keep you posted. |

|

I have experienced a similar issue: We don't yet know why the containers were dead. What should we look at? Our cluster has plenty of unused CPU and memory. |

|

You need to be using kops 1.7.1 with k8s 1.6.6 - please report on how it works! |

|

@chrislovecnm Thanks for the tip. Is it somewhere in the kops docs? |

|

Closing this because a solution was found even though it's not quite explainable.

Versions of kops:

Version of kubernetes

Problem

I have used kops+kubernetes since 1.4.7 without issues. I'm struggling with an unstable cluster and the exhibited behaviours simply do not make sense.

The first issue

I'm experiencing cases where a node is terminated and replaced. Sometimes a master, sometimes a minion (but mostly a minion). We don't have a fix for this nor do we know why the node is being rotated.

If a master is rotated we get a lot of `Unknown` status pods and everything just goes berserk across the cluster.

These issues may be related:

The second issue

I'm experiencing cases where pods stay locked in a state of transition. By state of transition I mean that if a pod was `Terminating`, it stays stuck at that ... and if a pod was `ContainerCreating` it stays stuck at that.

I noticed that pods that are stuck in this way have dead containers. When we have dead containers `/var/log/syslog` is full of these:

If I clean them (dead containers) out with the below command ... they are able to proceed (`/var/log/syslog` is not devoid of the aforementioned errors).

To get by I've created a cronjob to do this for me every minute ...
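The cleanup command itself is not preserved in this thread, so the following is only a sketch of the general idea, not the command the author ran. It assumes the Docker CLI is on the node's `PATH` and uses `docker ps --filter status=dead` to find dead containers and `docker rm` to remove them. The function names and the injectable `run` parameter are hypothetical, added so the logic can be exercised without Docker.

```python
import subprocess


def list_dead_containers(run=subprocess.run):
    """Return the IDs of containers Docker reports with status 'dead'."""
    result = run(
        ["docker", "ps", "--all", "--quiet", "--filter", "status=dead"],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout.split()


def remove_containers(container_ids, run=subprocess.run):
    """Try to remove each container; return the IDs that failed."""
    failed = []
    for cid in container_ids:
        result = run(["docker", "rm", cid], capture_output=True, text=True)
        if result.returncode != 0:
            failed.append(cid)
    return failed
```

Calling `remove_containers(list_dead_containers())` from a scheduled job would approximate the every-minute cronjob described above. Returning the failures instead of raising keeps one stubborn container from blocking the rest.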

These issues may be related:

Way Forward

This is happening very often ... and I'm willing to help get to the bottom of it. Unsure what I need to provide to give more context so just let me know what I need to check and what logs I need to provide.
