Learning to Select the Guidance Frame in Video Object Segmentation by Deep Sorting Frames

Contact: Brent Griffin (griffb at umich dot edu)

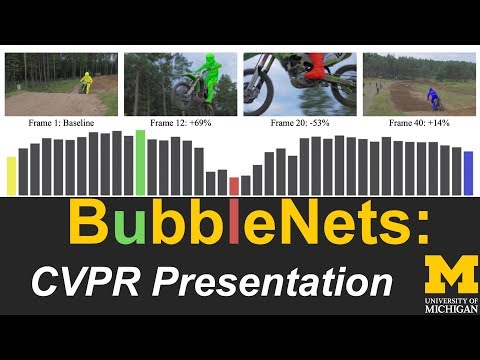

BubbleNets: Learning to Select the Guidance Frame in Video Object Segmentation by Deep Sorting Frames

Brent A. Griffin and Jason J. Corso

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2019

Please cite our paper if you find it useful for your research.

@inproceedings{GrCoCVPR19,
  author = {Griffin, Brent A. and Corso, Jason J.},
  booktitle = {The IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  title = {BubbleNets: Learning to Select the Guidance Frame in Video Object Segmentation by Deep Sorting Frames},
  month = {June},
  year = {2019}
}

CVPR 2019 Oral Presentation: https://youtu.be/XBEMuFVC2lg

Qualitative Comparison on DAVIS 2017 Validation Set: Segmentations from different annotation frame selection strategies.

BubbleNets Framework: Deep sorting compares and swaps adjacent frames using their predicted relative performance.
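To make the caption above concrete, here is a rough illustration of deep sorting as a plain bubble sort over candidate frames. It is a sketch under stated assumptions, not the code in this repository: the comparator `predict_relative(a, b)` is a hypothetical stand-in for the BubbleNets network and should return a positive value when frame `a` is predicted to be a better annotation (guidance) frame than frame `b`. After sorting, the last frame is the one predicted to perform best.

```python
from typing import Callable, List, Sequence, TypeVar

Frame = TypeVar("Frame")


def deep_sort(frames: Sequence[Frame],
              predict_relative: Callable[[Frame, Frame], float]) -> List[Frame]:
    """Bubble-sort frames so the one predicted to perform best ends up last.

    predict_relative(a, b) is a hypothetical stand-in for the learned model:
    it returns a positive value when frame a is predicted to be a better
    guidance frame than frame b.
    """
    order = list(frames)
    for end in range(len(order) - 1, 0, -1):
        swapped = False
        for i in range(end):
            # Compare adjacent frames; move the predicted-better one right.
            if predict_relative(order[i], order[i + 1]) > 0:
                order[i], order[i + 1] = order[i + 1], order[i]
                swapped = True
        if not swapped:  # no swaps: already sorted under this comparator
            break
    return order


def select_guidance_frame(frames: Sequence[Frame],
                          predict_relative: Callable[[Frame, Frame], float]) -> Frame:
    """Return the frame predicted to be the best one to annotate."""
    return deep_sort(frames, predict_relative)[-1]


if __name__ == "__main__":
    # Made-up per-frame quality scores standing in for network predictions.
    scores = {0: 0.5, 1: 0.9, 2: 0.3, 3: 0.7}
    print(select_guidance_frame([0, 1, 2, 3], lambda a, b: scores[a] - scores[b]))  # prints 1
```

With a consistent (transitive) comparator, one left-to-right pass already moves the best frame to the end. A learned comparator need not be transitive, so in that case the result can depend on the starting order of the frames.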

Download resnet_v2_50.ckpt and place it in ./methods/annotate_suggest/ResNet/.

Add new data to the ./data/rawData/ folder, following the examples provided.

scooter-black is an example folder with BubbleNets frame selection and annotation already complete; soapbox is an example of a completely unprocessed folder.

Each folder in rawData will be used to train a separate segmentation model using the corresponding annotated training data.

Remove folders from rawData if you do not need to train a new model for them.
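Before running the scripts, a quick stdlib-only check can confirm the setup steps above. The helper below is hypothetical, not part of this repository: `check_setup` assumes it is given the repository root, looks for the checkpoint under ./methods/annotate_suggest/ResNet/ (accepting any file whose name starts with resnet_v2_50.ckpt, since TensorFlow checkpoints may be split into several files), and lists the subfolders of ./data/rawData/, each of which would get its own segmentation model.

```python
from pathlib import Path
from typing import List

# Paths taken from this README, relative to the repository root.
CKPT_DIR = Path("methods/annotate_suggest/ResNet")
RAW_DATA_DIR = Path("data/rawData")


def check_setup(repo_root: Path) -> List[str]:
    """Return the rawData folder names that will each train a separate model.

    Hypothetical helper. Raises FileNotFoundError if the ResNet checkpoint
    does not appear to be in place.
    """
    ckpt_dir = repo_root / CKPT_DIR
    # Match a single-file checkpoint or index/data shards (assumption).
    if not ckpt_dir.is_dir() or not any(ckpt_dir.glob("resnet_v2_50.ckpt*")):
        raise FileNotFoundError(f"resnet_v2_50.ckpt not found in {ckpt_dir}")

    raw_dir = repo_root / RAW_DATA_DIR
    if not raw_dir.is_dir():
        return []
    # Only directories count; stray files in rawData are ignored.
    return sorted(p.name for p in raw_dir.iterdir() if p.is_dir())


if __name__ == "__main__":
    print(check_setup(Path(".")))
```

If the list contains folders you do not need new models for, remove them from rawData as described above.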

Run ./bubblenets_select_frame.py

Selects the annotation frame automatically with BubbleNets and collects the user annotation with a GrabCut-based tool. BubbleNets selections are stored in a text file (e.g., ./data/rawData/scooter-black/frame_selection/BN0.txt), so another annotation tool can also be used.

[native Python, has scikit dependency, requires TensorFlow]
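If you annotate with a different tool, you need the frame BubbleNets chose for each video. The helper below is a hypothetical sketch that reads frame_selection/BN0.txt inside one video folder under ./data/rawData/. It assumes, without confirmation from this README, that the file holds the selected frame's identifier on its first non-empty line; inspect a file produced by bubblenets_select_frame.py (for example, the one in scooter-black) before relying on that.

```python
from pathlib import Path


def read_bn_selection(video_dir: Path) -> str:
    """Return the BubbleNets-selected frame recorded for one video folder.

    ASSUMPTION: BN0.txt stores the selected frame's identifier on its first
    non-empty line. Verify against a real BN0.txt before using this.
    """
    selection_file = video_dir / "frame_selection" / "BN0.txt"
    for line in selection_file.read_text().splitlines():
        if line.strip():
            return line.strip()
    raise ValueError(f"{selection_file} is empty")


if __name__ == "__main__":
    print(read_bn_selection(Path("data/rawData/scooter-black")))
```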

Run ./osvos_segment_video.py

Runs OSVOS segmentation given user-provided annotated training frames. Trained OSVOS models are stored in ./data/models/. Results are timestamped and will appear in the ./results/ folder.

[native Python, requires TensorFlow]
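After several runs, a sketch like the following can locate the newest output in ./results/. It is illustrative only: the helper name is hypothetical, and it compares filesystem modification times instead of parsing the timestamp in each name, because this README does not spell out the naming format.

```python
from pathlib import Path
from typing import Optional


def latest_result(results_dir: Path = Path("results")) -> Optional[Path]:
    """Return the most recently modified entry in results_dir, or None."""
    if not results_dir.is_dir():
        return None
    entries = list(results_dir.iterdir())
    if not entries:
        return None
    # Modification time is used rather than the timestamp in the name,
    # since the naming scheme is not documented here.
    return max(entries, key=lambda p: p.stat().st_mtime)


if __name__ == "__main__":
    print(latest_result())
```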

Code for generating new BubbleNets training labels is located in the ./generate_labels folder.

This code builds on the following work:

S. Caelles*, K.K. Maninis*, J. Pont-Tuset, L. Leal-Taixé, D. Cremers, and L. Van Gool. One-Shot Video Object Segmentation. Computer Vision and Pattern Recognition (CVPR), 2017.

Used for video object segmentation: https://github.com/scaelles/OSVOS-TensorFlow

K. He, X. Zhang, S. Ren, and J. Sun. Deep Residual Learning for Image Recognition. Computer Vision and Pattern Recognition (CVPR), 2016.

Used for image preprocessing: https://github.com/tensorflow/tensorflow/blob/master/tensorflow/contrib/slim/python/slim/nets/resnet_v2.py

This code is available for non-commercial research purposes only.

Interview with Robin.ly discussing BubbleNets: https://youtu.be/v5FbjQfl5u4