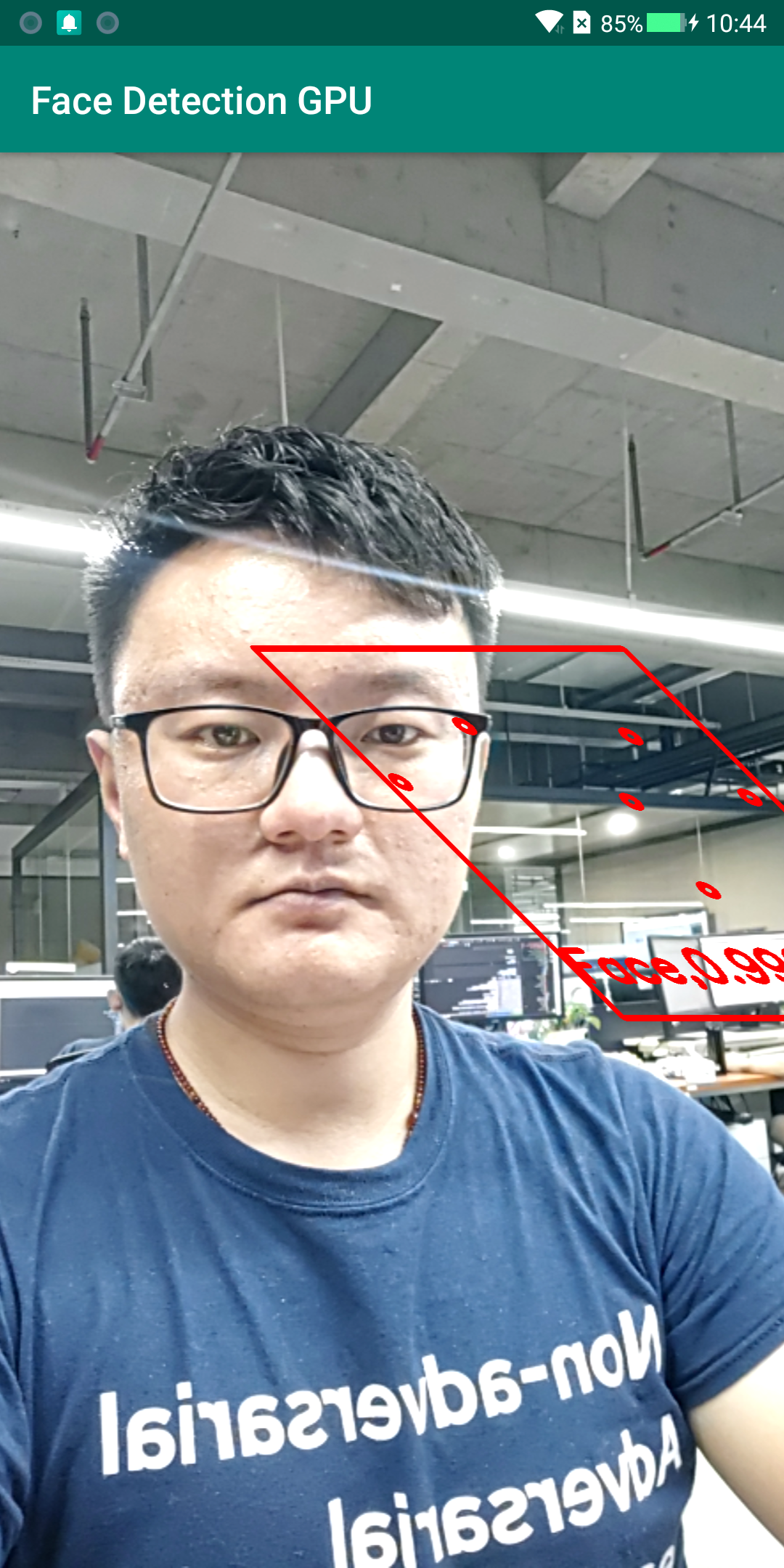

Strange result for face detection gpu demo #9

Comments

The results of the Face Detection CPU demo are fine.

@xuguozhi Why did the first screenshot show the bounding box positioned incorrectly? Was this only temporary?

Thanks for reporting. We are aware of this rendering issue. Another user has already hit the same issue on a Xiaomi Mix 2S. We believe it's a GPU rendering issue inside the AnnotationOverlayCalculator on certain types of Android phones. Unfortunately, we have failed to reproduce it on our test devices. To help us reproduce this issue, please let us know your device type if possible. Thanks.

@mgyong It's not temporary; it always shows up like that. My test phone is a Xiaomi Black Shark: https://www.mi.com/blackshark-game2/

@jiuqiant Xiaomi Black Shark: https://www.mi.com/blackshark-game2/

@xuguozhi, we are able to reproduce the problem on a Redmi Note 4. We will be working on a fix. Thanks.

Hi @xuguozhi, I think the root cause has been found: some Xiaomi phones default to an unusual camera image resolution (1269x1692, where 1269 is not a multiple of 4), and MediaPipe's GpuBuffer assumes all texture sizes are evenly divisible by 4. I can provide a temporary workaround until a proper solution is found.

The first fix (num coords) addresses a genuine bug, larger than this one, in memory allocation. The second fix (Java) is a way to request a different camera texture size. You can experiment with the requested size, but the goal is to have the camera deliver an image whose dimensions are multiples of 4. In my experiments, the size given here results in a 1080x1920 image. Hope that helps, and thanks for working with us to help make MediaPipe better.
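The multiple-of-4 condition described above can be checked with a few lines of arithmetic. The following is a minimal sketch in Python, not MediaPipe code: the helper names `is_aligned`, `round_down`, and `round_up` are hypothetical, and the only values taken from this thread are the two resolutions and the alignment of 4.

```python
# Hypothetical helpers illustrating the "divisible by 4" condition from this thread.
ALIGNMENT = 4  # alignment the comment above says GpuBuffer assumes


def is_aligned(size, alignment=ALIGNMENT):
    """Return True if size is an exact multiple of alignment."""
    return size % alignment == 0


def round_down(size, alignment=ALIGNMENT):
    """Largest multiple of alignment that is <= size."""
    return size - size % alignment


def round_up(size, alignment=ALIGNMENT):
    """Smallest multiple of alignment that is >= size."""
    return round_down(size + alignment - 1, alignment)


# Resolutions mentioned in the thread: the problematic default and the workaround result.
for width, height in [(1269, 1692), (1080, 1920)]:
    ok = is_aligned(width) and is_aligned(height)
    print(f"{width}x{height}: both dimensions multiples of {ALIGNMENT}? {ok}")
```

Under this check, 1269x1692 fails because of the 1269 dimension, while 1080x1920 passes. Whether a camera actually honors a requested size is device-dependent, so the resulting resolution still needs to be verified on the phone.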

Sorry, in line 624, should it be

@xuguozhi, sorry for the confusion.

It doesn't work. The boxes in the face detection GPU and object detection GPU demos are now red, but they are not proper rectangles; they look like rectangles with an incorrect affine transformation applied. However, the CPU versions of both face detection and object detection work fine.

Ah, sorry about the line number mix-up; fixed. Seeing red squares is progress! Did you also modify the camera size? Can you verify the new resolution? Another screenshot may also help.

Hi @mcclanahoochie,

What is the resolution of the camera frames (before and after the Java edit)? Another option, instead of the Java edit, is to insert an ImageTransformationCalculator at the beginning of the graph to resize the image to a known size. This is to test my theory about the %4 size issue. This graph fixes the skew on the Xiaomi phone here.
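The thread does not spell out why a size that is not a multiple of 4 produces skewed boxes. One plausible mechanism, offered here only as an assumption and not as a description of MediaPipe's internals, is a row-stride mismatch: rows are stored padded to a multiple of 4 but read back as if tightly packed. The Python sketch below illustrates that generic effect with a tiny 5x4 image; all names are hypothetical.

```python
# Generic illustration of a row-stride mismatch (assumed mechanism, not MediaPipe code).

def write_rows(width, height, stride):
    """Store an image whose pixels equal their row index into a flat buffer.

    Each row occupies `stride` slots; slots beyond `width` are padding (-1).
    """
    buf = [-1] * (stride * height)
    for y in range(height):
        for x in range(width):
            buf[y * stride + x] = y
    return buf


def read_rows(buf, width, height, stride):
    """Read `height` rows of `width` pixels, assuming the given row stride."""
    return [buf[y * stride : y * stride + width] for y in range(height)]


WIDTH, HEIGHT = 5, 4   # width is deliberately not a multiple of 4
PADDED_STRIDE = 8      # width rounded up to the next multiple of 4

buf = write_rows(WIDTH, HEIGHT, PADDED_STRIDE)
correct = read_rows(buf, WIDTH, HEIGHT, PADDED_STRIDE)  # reader uses the real stride
skewed = read_rows(buf, WIDTH, HEIGHT, WIDTH)           # reader assumes packed rows

for label, rows in (("correct", correct), ("skewed", skewed)):
    print(label)
    for row in rows:
        print("  ", row)
```

With the wrong stride, each row starts a few slots earlier than it should, so content drifts sideways by a growing amount from row to row, which looks like a shear. Resizing to a known multiple-of-4 size, as suggested above, makes the padded and packed strides coincide.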

@xuguozhi Did you try @mcclanahoochie's ImageTransformationCalculator suggestion, and did it work? If it did, please let us know.

Hi, the same issue appears on OPPO Find X and Xiaomi MAX2 phones.

@xuguozhi What @mcclanahoochie gave you is a new MediaPipe graph. You can visualize it at http://viz.mediapipe.dev. Please manually replace the content of the face detection GPU graph with the code snippet in @mcclanahoochie's comment.

@mgyong @jiuqiant @mcclanahoochie Cool~, it works! Thanks, you guys are great!

Awesome!

Hi, I have encountered strange face detection results in the face detection GPU demo. I have uploaded the screenshot image above. Any suggestions? BTW, my phone runs Android 8.0.0.
