
Django channels + ASGI leaks memory #419

Comments

|

Identifying a memory leak makes me a contributor? :/ |

|

Could you try a different wsgi server? I cannot reproduce any of this with uwsgi or django's devserver. |

|

That might be the thing then, I'm actually using ASGI, tried the three options available, Daphne, Hypercorn and Gunicorn+Uvicorn. |

|

Oh you're using Django from a development branch and run on ASGI? That might be not the same issue @reupen is seeing at all then. |

|

I'm absolutely not using Django from a development branch, I'm using Django Channels. |

|

got it. we never tested with channels, but have support/testing on our roadmap. the memory leak still shouldn't happen. we'll investigate but it could take some time. |

|

Feel free to reach me for any further clarifications, I'm happy to help and appreciate your efforts nonetheless 👍 |

|

Yeah, I can repro it with a random channels app that just serves regular routes via ASGI + hypercorn (not even any websockets configured). So far no luck with uvicorn though. Did you observe faster/slower leaking when comparing servers? |

|

I understand the problem now, and can see how we leak memory. I have no idea why this issue doesn't show with uvicorn but I also don't care.

It's very simple: For resource cleanup we expect every Django request to go through

I think you will see more of the same issue if you try to set tags with

This issue should not be new in 0.10; it should have been there since forever. @reupen if you saw a problem when upgrading to 0.10 then this is an entirely separate bug, and I would need more information about the setup you're running (please in a new issue though).

I think this becomes a duplicate of #162 then (or it will get #162 as a dependency at least). cc @tomchristie. The issue is that the last time I looked into this I saw a not-quite-stable specification, which is why I was holding off on it.

You might be able to find a workaround by installing https://github.com/encode/sentry-asgi in addition to the Django integration. |

|

I haven’t parsed all this, but I’ll comment on this one aspect...

ASGI 3 is baked and done. You’re good to go with whatever was blocked there. |

|

I don't think this helps in this particular situation because the latest version of channels still appears to use a prev version (ran into encode/sentry-asgi#13 when using latest hypercorn and latest channels) |

|

@tomchristie I want to pull sentry-asgi into sentry. Do you think it would be possible to make a middleware that behaves as polyglot ASGI 2 and ASGI 3? |

|

Something like this would be a "wrap ASGI 2 or ASGI 3, and return an ASGI 3 interface" middleware...

    import inspect

    def asgi_2_or_3_middleware(app):
        if len(inspect.signature(app).parameters) == 1:
            # ASGI 2: app(scope) returns an instance that takes (receive, send)
            async def compat(scope, receive, send):
                nonlocal app
                instance = app(scope)
                await instance(receive, send)
            return compat
        else:
            # ASGI 3: already a single (scope, receive, send) callable
            return app

Alternatively, you might want to test if the app is ASGI 2 or 3 first, and just wrap it in a 2->3 middleware if needed.

    def asgi_2_to_3_middleware(app):
        async def compat(scope, receive, send):
            nonlocal app
            instance = app(scope)
            await instance(receive, send)
        return compat

Or equivalently, this class-based implementation, which Uvicorn uses: https://github.com/encode/uvicorn/blob/master/uvicorn/middleware/asgi2.py |
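For anyone who wants to try the idea without a server, here is a minimal, self-contained sketch of the class-based variant driven by hand with `asyncio`. It is not copied from Uvicorn; the names `Asgi2To3`, `ensure_asgi3`, `LegacyApp`, `modern_app` and `drive` are made up for illustration, and the single-parameter check is the same heuristic as in the comment above.

```python
import asyncio
import inspect


class Asgi2To3:
    """Expose an ASGI 2 application through the ASGI 3 single-callable interface."""

    def __init__(self, app):
        self.app = app

    async def __call__(self, scope, receive, send):
        # ASGI 2 is two-step: app(scope) returns an instance,
        # which is then awaited with (receive, send).
        instance = self.app(scope)
        await instance(receive, send)


def ensure_asgi3(app):
    """Wrap apps that take a single argument (assumed ASGI 2); pass others through."""
    if len(inspect.signature(app).parameters) == 1:
        return Asgi2To3(app)
    return app


class LegacyApp:
    """Hypothetical ASGI 2 style application."""

    def __init__(self, scope):
        self.scope = scope

    async def __call__(self, receive, send):
        await send({"type": "http.response.start", "status": 200, "headers": []})
        await send({"type": "http.response.body", "body": b"legacy"})


async def modern_app(scope, receive, send):
    """Hypothetical ASGI 3 style application."""
    await send({"type": "http.response.start", "status": 200, "headers": []})
    await send({"type": "http.response.body", "body": b"modern"})


async def drive(app):
    """Call an ASGI 3 app once with stub receive/send and collect what it sends."""
    sent = []

    async def receive():
        return {"type": "http.request", "body": b"", "more_body": False}

    async def send(message):
        sent.append(message)

    await app({"type": "http", "method": "GET", "path": "/"}, receive, send)
    return sent


legacy_messages = asyncio.run(drive(ensure_asgi3(LegacyApp)))
modern_messages = asyncio.run(drive(ensure_asgi3(modern_app)))
print(legacy_messages[-1]["body"], modern_messages[-1]["body"])
```

Counting parameters is only a heuristic: an ASGI 3 callable hidden behind something like `functools.partial` with arguments pre-bound could be misdetected, so an explicit 2->3 wrapper may be the safer choice when you already know which style the app uses.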

The main problem I observed was an increase in memory usage over time of a long-running (tens of minutes) Celery task (which has lots of Django ORM usage). (Although there did seem to be an increase for web processes as well.) I haven't had a chance to do any traces, but I'll create a new issue when I do. |

|

@astutejoe When 0.10.2 comes out, you can do this to fix your leaks:

|

|

Hey! that sounds great @untitaker I will try it eventually, thanks for your time!!! |

|

0.10.2 is released with the new ASGI Middleware! No documentation yet, will write tomorrow


|

|

Nice one. 👍 |

|

Please watch this PR, when it is merged the docs are automatically live: getsentry/sentry-docs#1118


|

|

docs are deployed, this is basically fixed. New docs for ASGI are live on https://docs.sentry.io/platforms/python/asgi/ |

|

@untitaker I'm currently using channels==1.1.8 and raven==6.10.0 and experiencing memory leak issues very similar to this exact issue. I wanted to inquire whether there is a solution for Django Channels 1.X? I see that Django Channels 2.0 and sentry-sdk offer a solution via SentryAsgiMiddleware. I wanted to see if this also works with Django Channels 1.0? |

|

I'm not aware of any issues on 1.x, and for that version sentry-sdk does not do anything differently, but I believe you would have to try for yourself.


|

Hopefully this stops the memory leak getsentry/sentry-python#419

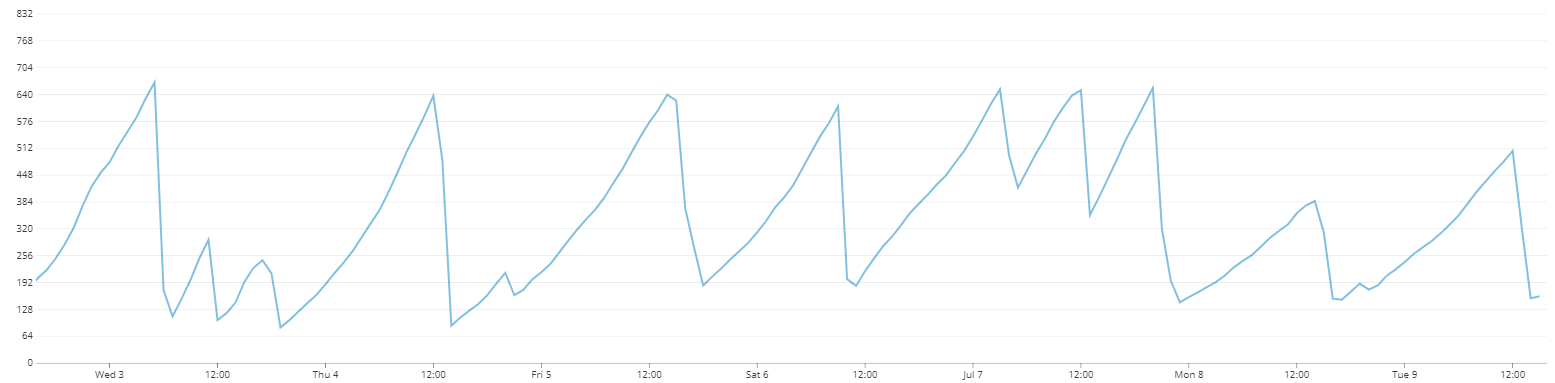

I spent around 3 days trying to figure out what was leaking in my Django app, and I was only able to fix it by disabling the Sentry Django integration (in a very isolated test using memory profiler, tracemalloc and Docker). To give more context before the profiling information, this is how my memory usage graph looked on a production server (killing the app and/or a worker after a certain threshold):

Now the data I gathered:

By performing 100,000 requests on this endpoint:

A tracemalloc snapshot, grouped by filename, showed the Sentry Django integration using 9MB of memory after a 217-second test at 459 requests per second (using NGINX and Hypercorn with 3 workers):

tracemalloc probe endpoint:

I have performed longer tests, and the Sentry Django integration's memory usage only grows and is never released. This is just a scaled-down version of the tests I've been performing to identify this leak.
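A minimal sketch of the measurement technique described above, using only the standard library. This is not the probe endpoint from the tests; the helper names `top_files` and `growth_by_file` are invented for illustration, and the list of bytearrays is just a stand-in for whatever stays referenced between requests.

```python
import tracemalloc


def top_files(snapshot, limit=5):
    """Return (filename, size_in_bytes) pairs, largest allocators first."""
    stats = snapshot.statistics("filename")
    return [(stat.traceback[0].filename, stat.size) for stat in stats[:limit]]


def growth_by_file(before, after, limit=5):
    """Return (filename, size_diff_in_bytes) pairs, largest absolute change first."""
    diffs = after.compare_to(before, "filename")
    return [(diff.traceback[0].filename, diff.size_diff) for diff in diffs[:limit]]


tracemalloc.start()
before = tracemalloc.take_snapshot()

# Stand-in for a leak: objects that remain referenced after the work is done.
leaked = [bytearray(1024) for _ in range(2000)]

after = tracemalloc.take_snapshot()
tracemalloc.stop()

report = top_files(after)
growth = growth_by_file(before, after)
for filename, size_diff in growth:
    print(f"{filename}: {size_diff / 1024:+.1f} KiB")
```

Comparing two snapshots taken some time apart is usually more telling than a single one: a file whose `size_diff` keeps growing across repeated comparisons under steady load is a good leak candidate.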

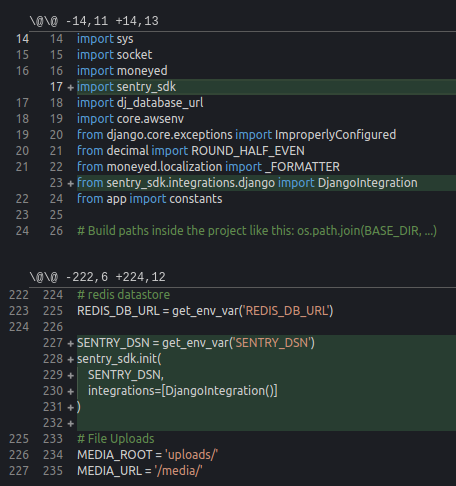

This is what my Sentry settings look like in settings.py:

Memory profile after disabling the Django integration (same test and endpoint), with no sentry-sdk among the top 5 most memory-consuming files:

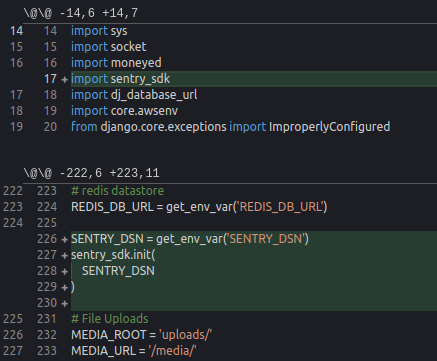

settings.py for the above profile:

Memory profile grouped by line number (more verbose):

My pip freeze output:

I used the official Python Docker image tagged 3.7, meaning the latest 3.7 version.

I hope you can figure out the problem with this data. I'm not sure if I'll have the time to contribute myself!

Bonus, memory profiling after 1,000,000 requests (Django Integration using 44MB):
