
Make Ccr recovery file chunk size configurable #38370


Pinging @elastic/es-distributed

I don't know if our plan is to actually merge this, but I pushed it up here. It also removes the AwaitsFix from a test that only failed because a different test was failing (I use that test here). If we do not actually merge this PR, I will make that change elsewhere. @dliappis - You can use this branch for benchmarking. The setting is

If we do want to add this setting to production, I will probably need to add a max size (since I do the

Using the geopoint track and

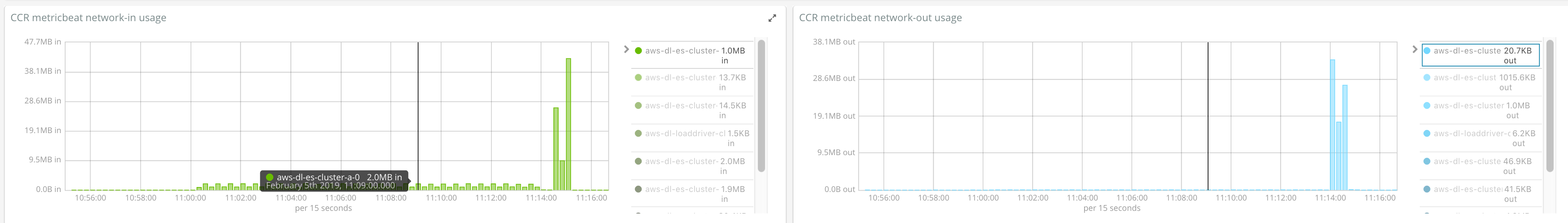

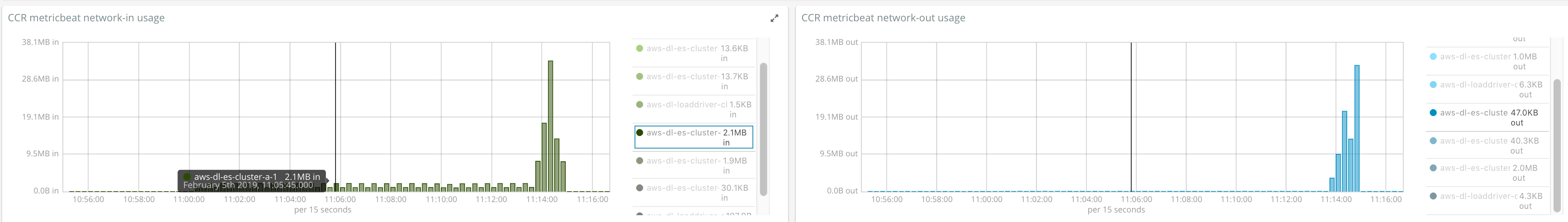

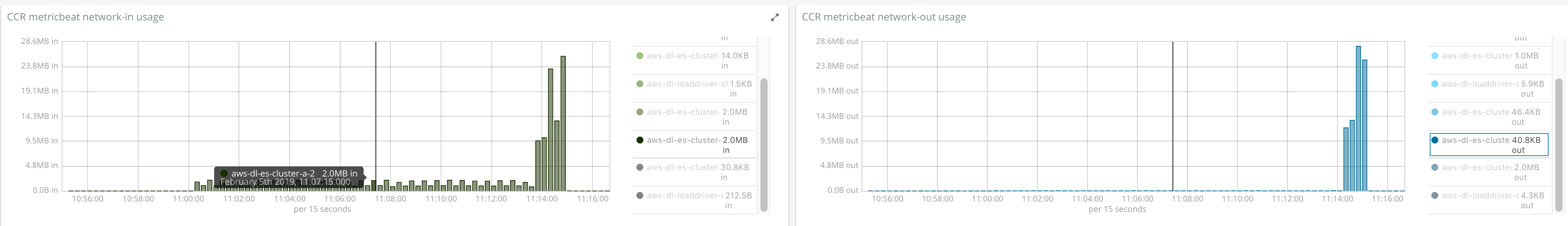

Earlier with the default

The latency of the connection is ~100-130ms. The amount of docs/store size can be seen below:

Network stats on follower: Node 0, Node 1, Node 2

Rally summary

One clarification: the small peak towards the end of the network graphs is due to peer recovery (within the followers' network) and is not related to recovery from remote or CCR.

Using


LGTM (also see the benchmarking results).

@elasticmachine run elasticsearch-ci/default-distro

* master: (23 commits)
  Lift retention lease expiration to index shard (elastic#38380)
  Make Ccr recovery file chunk size configurable (elastic#38370)
  Prevent CCR recovery from missing documents (elastic#38237)
  re-enables awaitsfixed datemath tests (elastic#38376)
  Types removal fix FullClusterRestartIT warnings (elastic#38445)
  Make sure to reject mappings with type _doc when include_type_name is false. (elastic#38270)
  Updates the grok patterns to be consistent with logstash (elastic#27181)
  Ignore type-removal warnings in XPackRestTestHelper (elastic#38431)
  testHlrcFromXContent() should respect assertToXContentEquivalence() (elastic#38232)
  add basic REST test for geohash_grid (elastic#37996)
  Remove DiscoveryPlugin#getDiscoveryTypes (elastic#38414)
  Fix the clock resolution to millis in GetWatchResponseTests (elastic#38405)
  Throw AssertionError when no master (elastic#38432)
  `if_seq_no` and `if_primary_term` parameters aren't wired correctly in REST Client's CRUD API (elastic#38411)
  Enable CronEvalToolTest.testEnsureDateIsShownInRootLocale (elastic#38394)
  Fix failures in BulkProcessorIT#testGlobalParametersAndBulkProcessor. (elastic#38129)
  SQL: Implement CURRENT_DATE (elastic#38175)
  Mute testReadRequestsReturnLatestMappingVersion (elastic#38438)
  [ML] Report index unavailable instead of waiting for lazy node (elastic#38423)
  Update Rollup Caps to allow unknown fields (elastic#38339)
  ...

This commit adds a byte-size setting, `ccr.indices.recovery.chunk_size`. It configures the size of the file chunks requested while recovering from remote.
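To illustrate what a configurable chunk size means for a file-based recovery, here is a minimal Python sketch. It is not the Elasticsearch implementation: `parse_byte_size` and `chunk_requests` are hypothetical helpers, and the supported unit suffixes are a simplified assumption. It only shows how a file of a given length is split into (offset, length) requests of at most the configured size.

```python
from typing import Iterator, Tuple


def parse_byte_size(value: str) -> int:
    """Parse a simple byte-size string such as '64kb' or '1mb' into bytes.

    Hypothetical helper: only integer values with b/kb/mb/gb suffixes
    (powers of 1024) are handled here.
    """
    units = {"kb": 1024, "mb": 1024 ** 2, "gb": 1024 ** 3, "b": 1}
    text = value.strip().lower()
    # Check the two-letter suffixes before the bare "b" suffix.
    for suffix in ("kb", "mb", "gb", "b"):
        if text.endswith(suffix):
            return int(text[: -len(suffix)]) * units[suffix]
    raise ValueError(f"unrecognized byte size: {value!r}")


def chunk_requests(file_length: int, chunk_size: int) -> Iterator[Tuple[int, int]]:
    """Yield (offset, length) pairs covering a file of file_length bytes.

    Every request asks for at most chunk_size bytes; the last one may be shorter.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    offset = 0
    while offset < file_length:
        length = min(chunk_size, file_length - offset)
        yield offset, length
        offset += length


if __name__ == "__main__":
    # Hypothetical values: a 5mb file fetched with a 1mb chunk size.
    chunk_size = parse_byte_size("1mb")
    requests = list(chunk_requests(parse_byte_size("5mb"), chunk_size))
    print(len(requests), "chunk requests; first:", requests[0])
```

In this sketch a larger chunk size means fewer requests for the same file, which is the knob the benchmarks in this thread vary.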
