-

Notifications

You must be signed in to change notification settings - Fork 120

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

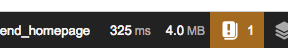

Docker for Mac + Symfony too slow #2707

Comments

|

@antondachauer thanks for your report. I tried to reproduce the build but I think the config files are missing: |

|

It needs some nginx config files, please see the Dockerfile part. |

|

Your problem has been commonly known for more than a year ... it's because bind mounts are terribly slow. |

|

That sounds like Docker missed its mission on Mac. Will the problem eventually be solved with a native solution? |

|

Use the |

|

still no native solution? |

|

I recommend following @alpharder's advice -- first try with If Please note:

If you try the experimental build I'd love to know

|

|

@djs55 |

|

I've tested the edge version (in combination with some docker volumes and some delegated volumes), and it seems to work fine, and perhaps makes a slight improvement to performance, but again, nothing like running the services natively 😞 |

could you advise any links to read about why it is more performant and about "inside the VM"? |

|

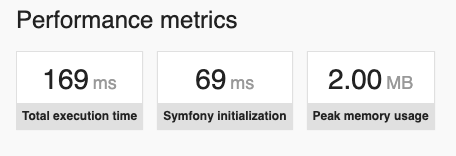

You can observe the 'why' part yourself. Open Activity Monitor, run your dockerized SF app, and access it. Now watch the OSXFS process consuming most of the CPU time, together with the hypervisor running your Docker host VM. The hypervisor asks OSXFS for a file, OSXFS looks it up in the host filesystem and then sends it to the VM: for each and every read, each and every write, and each and every attribute check like ctime or atime. The write scenarios are somewhat better thanks to the delegated and cached behaviors, but the really big problem is the reads, which (afaik always) go through the OSXFS layer in a bind-mount setup; that is the real bottleneck. (Correct me if I am wrong; these are behaviors actually observed in huge SF applications running on Docker for Mac for development.)

With SF and Composer, this means several thousand files being accessed through that combination, resulting in page load times of 30+ seconds. (You will see in the dev toolbar that the pre-framework initialisation time is a huge part of that.) A volume, by contrast, does not have to go through that layer, which results in blazingly fast, almost native performance. That's why projects like docker-sync exist: they use that mechanism to get a nearly native performance setup (with some delay after editing files locally until they are synced).

PS: Small SF applications can be made a bit faster by volume-mounting the 'vendor', 'node_modules' and 'var' folders, so that at least all vendors and the cache do not go through the OSXFS layer. At some point this will no longer suffice, though, as the reads on 'src' will kill performance again at a certain project size. (Small Hello World SF applications will run relatively fast though.) |
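To get a rough feel for how read-heavy a project tree is, the "many small reads" effect described above can be approximated with a small shell sketch. The function names `count_files` and `read_all_files` are hypothetical helpers, not part of Docker or Symfony, and the `/app/vendor` path in the usage comment is only an assumption about where the code is mounted.

```shell
#!/bin/sh
# Hypothetical helpers (not from the thread) to approximate a framework
# bootstrap that touches every file under a directory once.

# Print the number of regular files below the given directory.
count_files() {
    find "$1" -type f | wc -l | tr -d ' '
}

# Read every regular file below the given directory once and discard the
# contents; every read has to pass through whatever mount backs the path.
read_all_files() {
    find "$1" -type f -exec cat {} + > /dev/null
}

# Usage sketch inside a container (paths are assumptions):
#   count_files /app/vendor
#   read_all_files /app/vendor   # wrap this in your shell's timing facility
```

Running the same calls once against a bind-mounted path and once against a path backed by a volume gives a crude comparison of the two layers; it is not a substitute for profiling the real application.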

|

Today I tried again to get Symfony running for development with the current Docker version. It seems to be working quite well now. You can find the configuration here: https://github.com/webworksnbg/sfdocker My Docker version is |

|

Issues go stale after 90d of inactivity. Prevent issues from auto-closing with an If this issue is safe to close now please do so. Send feedback to Docker Community Slack channels #docker-for-mac or #docker-for-windows. |

|

@antondachauer thanks a lot for sharing :) with that same build docker for mac + symfony are fast now, no need for docker-sync anymore |

|

Just until the application reaches a complexity threshold and everything is slow again... I guess that if you only have 4GB of RAM for Docker (because 8GB is installed, the IDE takes 2GB, the browser 1GB, and the rest of the system the last 1GB, because Mac), you will hit the same degraded performance again, because as far as I understand it (or would guess), the osxfs delegated caching only works "until full". I have not debugged that so far, though. But as far as I can observe at work, delegated only fixed the problem for 16GB+ systems with at least 6-8GB for Docker. After a fresh reboot, and sometimes only after a full reset of the Docker environment, you will get decent speed in small bootstrapped applications. Load a really complex "live business level with hundreds of tests" one and performance will quickly degrade back to multiple seconds of wait time per request. I could provide anything (as long as it is anonymized) on the diagnostic side, if needed to find the culprit. But in some cases it is currently unusable for some of my colleagues in everyday development, as it slows them down more than just using the central SFTP development machine running the needed LAMP stack. /remove-lifecycle stale |

|

I ran MAMP on my local macOS machine for years and now I wanted to take the step to Docker containers. But it's incredibly slow. Every request takes 3-10 seconds. I am not used to that. Feels like shit. Can this be a step into the future? I am not sure if I can deploy this to the production server... |

|

@Slowwie the question is whether it is only slow on macOS with bind mounts. The overall overhead on a real Linux machine (which is what production scenarios often use) is significantly lower and almost native, give or take milliseconds or nanoseconds. You can test near-Linux performance by not using bind mounts: pack your application into the container image you build and run it without any volume mounts. You may also try the ':delegated' option on your bind mounts and see if your problem goes away with that (in fact that's more or less what this ticket is all about). I guess, if you use Symfony, that the web toolbar shows you a lot of time spent on initialization, which is a problem of "I need to read all those Composer vendors", i.e. many tiny accesses through the bind-mount layer (osxfs). In theory the mentioned delegated option (or the cached option; look up the difference in the documentation) should mitigate those problems, as most reads will get cached and, in the case of delegated, writes back to the host will get delayed. But if possible, let's get the ticket back on track about WHY the delegated and/or cached option seems to not always work and/or gets slow over time. |
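As a rough illustration of the two suggestions above (a `:delegated` bind mount for the source tree, plus volumes for directories that never need to reach the host), here is a minimal sketch. The helper name `print_dev_run_command`, the `/app` target path, the volume names `app_vendor` and `app_var`, and the `php:7.1-fpm` image are all assumptions for illustration. The helper only prints the command so it can be reviewed before anything is run.

```shell
#!/bin/sh
# Hypothetical helper: prints a docker run command for a dev container.
# Assumptions: code lives in /app inside the container; 'app_vendor' and
# 'app_var' are named volumes that stay inside the Docker VM.
print_dev_run_command() {
    project_dir="$1"
    # Source tree: bind mount with the relaxed 'delegated' consistency mode.
    # vendor/ and var/: named volumes layered over the bind mount, so their
    # many small files are not served through the host file-sharing layer.
    printf 'docker run --rm -v "%s:/app:delegated" -v app_vendor:/app/vendor -v app_var:/app/var php:7.1-fpm\n' "$project_dir"
}

# Show the command for the current directory.
print_dev_run_command "$PWD"
```

With this layout the vendor directory has to be populated inside the container (for example by running Composer there), because the named volume hides whatever the host has in that folder.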

|

I had the same issue. This is not only related to bind-mount volumes or the Docker version. |

|

As it's somehow related I also want to link awareness to that: #3455 -- Especially in context of xdebug. There are just so many things which may slow down a Docker for Mac instance, seemingly at random. Like using it for a docker with fine speed and then the performance degrades until docker reset or machine restart. At work we also kept an eye on the timer thingy, which did not seem to have a big impact for our performance when it happened. Nonetheless the linked article about the php/php-xdebug/nginx proxy is at least worth something ;) -- It won't however help to reduce impact on local testing, which are most of the cases we run into performance bottlenecks, as there you will need to have xdebug for coverage and stuff... |

|

tl;dr: best way to use docker on a mac: <rant> i'm not even flexing about linux or something. i'm sorry but steve would probably be pretty sad if he knew about the current state of macos. every decent project is either objective c only and unavailable on other platforms or completely broken on macos because apple still thinks they are special and "workarounds" are acceptable.. i could rant all day about this "incompetence" and "design". back to docker: just wait for wayland to be decent and switch as soon as it can handle multiple screens with different dpi as well as macos. in terms of windows.. it's getting there. they are adding more and more linux features into windows itself. (don't even try to install kaspersky / mcafee or anything else. it will break it. microsoft seems to be quite competent in adding useful developer features. and snake oil companies cannot keep up with the "rapid" changes) </rant> |

|

last week, my boss gave me a new mac, with no usb, no display interface. this morning, i got my new adapter with 3usb + 1 hdmi + 1 rj45+1 charge. install docker, build my image for dev. |

|

since docker on mac is so slow |

|

@abdrmdn Not really the best solution. It may work for a limited number of developers, but as stated in the article it's remote-only. Besides, you will have to locally modify your project files for each developer (yeah, I know, docker-compose.override.yml, but it's pretty limiting for complex projects) just to bind all the specific ports. What if a developer just uses the range of another one and then it conflicts... We also evaluated such an idea but scrapped it because of all that "what I have to know to properly use it".

The fun thing about Docker is that I can carry my dev infrastructure with me, for every single project, and work on it on the go (besides the point of stating my application requirements explicitly and so on).

Note that this post is specifically about PHP development on Docker for Mac and mid-range to large projects with a decent number of vendors, or PHP Composer vendor bundling in general, which causes a high initialization time because all those vendor files have to be loaded, even with the current delegation implementation of OSXFS, which in general eats too much CPU time in my opinion and slows the whole system down.

In our team we just dropped Docker for Mac, as the drawbacks did not justify the gain in simplicity (sorry, Docker for Mac folks!), and switched back to a VirtualBox VM provisioned through a simple Vagrantfile. This way we can ensure that it does not run slowly, and we can use vagrant rsync-auto to keep the files in sync (although mutagen.io or unison is a better option). For our application we just use PHPStorm's Auto-Deploy feature after an initial rsync of the project directory (as PHPStorm stomps symlinks and file permissions if used for the initial sync).

It's as fast as native if you have no pending IO from within the VM to your HDD/SSD, but that's true for every system. You can simply forward your standard application ports through Vagrant host binding/forwarding so you can just use localhost, and you can use the docker CLI on your host system just as if you were using Docker for Mac (just keep the file systems mapped 1:1 and in sync), provided you set the DOCKER_HOST environment variable to the VM.

Setup

```ruby
Vagrant.configure("2") do |config|
  config.vm.box = "centos/7"
  config.vm.hostname = "docker-dev-vm"
  config.vm.network "private_network", ip: "10.1.1.10"
  config.vm.provision "shell", path: "setup.sh"
  config.vagrant.plugins = ["vagrant-hostsupdater"]

  # HTTP/HTTPS Ports
  config.vm.network "forwarded_port", guest: 8443, host: 8443
  config.vm.network "forwarded_port", guest: 8080, host: 8080

  # Utility Service Ports
  config.vm.network "forwarded_port", guest: 8090, host: 8090
  config.vm.network "forwarded_port", guest: 8091, host: 8091
  config.vm.network "forwarded_port", guest: 8092, host: 8092
  config.vm.network "forwarded_port", guest: 8093, host: 8093
  config.vm.network "forwarded_port", guest: 8094, host: 8094
  config.vm.network "forwarded_port", guest: 8095, host: 8095

  config.vm.provider "virtualbox" do |v|
    v.memory = 2048
    v.cpus = 2
    # Enable nested virt to speed up docker
    v.customize ["modifyvm", :id, "--nested-hw-virt", "on"]
  end

  # Windows will have to live and let live with the difference in filesystems
  # So it will have to use the /home/vagrant space to deploy applications and
  # will have to specify absolute paths within its dockerfiles or just ssh onto the VM
  unless Vagrant::Util::Platform.windows?
    # Add host user to docker-vm and copy vagrant key accordingly
    # This will make it easier to map local paths to VM paths and have
    # transparent directory mappings especially for docker-compose related stuff
    config.vm.provision "shell", inline: "mkdir -p "+ENV["HOME"]
    config.vm.provision "shell", inline: "chown -R 1000:1000 "+ENV["HOME"]
    # ultimately we could also create that user
    # or chmod the current user through editing passwd and groups file
    #config.vm.provision "shell", inline: "adduser -m -G docker,wheel -d "+ENV["HOME"]+" "+ENV["USER"]
  end

  # Auto-Sync 1:1
  # comment this if you are using alternative sync strategies
  # change it if you want to sync another file-tree it defaults to ~/docker-dev-vm
  if ARGV[0] == 'rsync' or ARGV[0] == 'rsync-auto'
    config.vm.synced_folder ENV["HOME"]+"/docker-dev-vm", ENV["HOME"]+"/docker-dev-vm", type: "rsync", rsync__exclude: [".svn/", ".git/"], rsync__verbose: true
  end
end
```

```bash
#!/bin/bash

# Install ElRepo Centos LTS Kernel
rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org
yum -y install https://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm
yum -y --enablerepo=elrepo-kernel install kernel-lt

# Enable LTS kernel
grub2-set-default 0
grub2-mkconfig -o /boot/grub2/grub.cfg

# Install Docker
yum -y install yum-utils device-mapper-persistent-data lvm2
yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
yum -y install docker-ce docker-ce-cli containerd.io

mkdir -p /etc/systemd/system/docker.service.d
echo "[Service]" > /etc/systemd/system/docker.service.d/override.conf
echo "ExecStart=" >> /etc/systemd/system/docker.service.d/override.conf
echo "ExecStart=/usr/bin/dockerd -H fd:// -H tcp://0.0.0.0:2375" >> /etc/systemd/system/docker.service.d/override.conf

systemctl daemon-reload
systemctl enable docker

# Make docker accessible to default user
usermod -aG docker vagrant

# Enable IP4 forwarding
echo "net.ipv4.ip_forward = 1" > /etc/sysctl.d/40-docker.conf
```

After bootup you just set your environment variable for Docker (the DOCKER_HOST mentioned above). For PHPStorm (or any other SSH-related use) you may use the Vagrant-generated, passwordless private key to access the VM as the vagrant user for deployment sync. At least for our workflow it's much better than the slow bind-mount performance of Docker for Mac, and the productivity of using Docker on a Mac is now at a usable level for our team.

CAVEATS

Yes, the setup shown has time-drift issues on hibernate, because it installs neither the virtualbox-guest-agent nor an ntpd with an auto-sync timer. This could easily be fixed by adding it to the setup script and redeploying the machine.

Because the VM uses a host-only network, we don't need Docker authentication, and thus the daemon is configured insecurely! Just NEVER host-bind/forward port 2375 through the Vagrantfile and you are good to go. The moment you forward a port from the VM to your host, it will be accessible on all interfaces; this can be limited, just look up the directives in the Vagrant documentation.

The setup implies the user ID 1000. Sadly, it cannot easily be adjusted automatically to your host user ID, as you always log in as the vagrant user and usermod then won't work because of the active user session. (And no, we don't want to sed the passwd 😉) So your Dockerfiles should, if applicable, always run as user |
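The DOCKER_HOST step mentioned in this comment could look roughly like the sketch below. The address is an assumption, assembled from the `private_network` IP in the Vagrantfile (10.1.1.10) and the TCP port that the setup script makes `dockerd` listen on (2375); the variable names `VM_IP` and `DOCKER_PORT` are illustrative only.

```shell
#!/bin/sh
# Assumed values, taken from the Vagrantfile and setup script in this comment.
VM_IP="10.1.1.10"     # private_network ip of the VM
DOCKER_PORT="2375"    # unauthenticated dockerd TCP port; keep it host-only

# Point the docker CLI on the host at the daemon inside the VM.
export DOCKER_HOST="tcp://${VM_IP}:${DOCKER_PORT}"
echo "DOCKER_HOST=${DOCKER_HOST}"

# With the VM running, host-side commands such as 'docker info' or
# 'docker ps' now talk to the daemon in the VM.
```

Putting the export into a shell profile or a per-project env file keeps the host CLI pointed at the VM across sessions; unsetting the variable switches back to the local default.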

|

@Blackskyliner I agree with you that our setup had some dependencies and that it defeated some of the purposes of Docker. The one thing that was mostly annoying was working from home, for example, because we didn't set up access to the server from outside the office for obvious reasons. |

|

@djs55 @nebuk89 I think I have a problem with permissions/user-group. In my Docker image there is a non-root user, and it is the owner of everything in my workdir. When I activate Mutagen caching I see that all files have a different owner (501 - dialout), and this blocks my application because my Symfony application can't create any files (for example logs). I know that in docker-sync or Mutagen configurations there are keys to define a different user/group, but with this integration I don't know how to do that. Can you help me? |

|

@Valkyrie00 thanks for mentioning the user and group issue. I don't know if it would be sufficient but there's a more recent build which sets the file permissions in the container to less restrictive |

|

My monolithic Symfony 4.4.8/PHP 7.4.6 app is "huge" (the power behind mySites.guru).

We mount the /tmp/app folder to a Docker volume and set the Symfony cache folder and log path in Kernel.php to point to it (meaning that the huge mass of cache/log files never leaves Docker and is never transferred back to my Mac).

I have been using Docker and Symfony for years with real-world apps. My developer experience counts, as my tests are real-world.

Bind mounts have been far too slow for years. docker-sync proved unreliable; it used to "just stop" during the day. We moved to NFS mounts, with /blow you away/ speed compared to bind mounts.

This morning I would get anywhere from 300ms to 7000ms load times for our most resource-intensive page in the development environment with NFS mounts.

Today I tested the new build with Mutagen (with no :cached or :delegated flags on the mounts at all in docker-compose.yml). The page load times are consistently 140-160ms. Files edited in phpStorm are synced to the containers as soon as phpStorm writes them to disk.

It's almost toooooo good to be true. The app feels very fast again, just like it does in production. Mutagen could be a game changer for development in Docker on Mac. I have no idea how it does it, but it blew me away from the very first page load. |

@djs55 Thank you so much! Extra Feedback UX (as required): |

I think the best place for this would be in the Docker menu bar next to the "Docker is running" bit, maybe even animate the whale when syncing... that would be cool. Leaving the Docker for Mac preference panes open just to see this status isn't really tenable, I don't think. |

|

@PhilETaylor Yeah we had considered moving a sync to something like a small 'spinning dot' or similar on the whale. We wanted to get a feel to start of the size of folders/duration it was taking people to sync and see if this was needed :) sounds like it well might be |

|

I see a few things needed after using this now for a while full time. These are opinions only, do not be offended, they are not demands.

The initial sync which takes place, takes longer (not excessive) and needs more detailed feedback within the preferences pane. For example, display the amount of space used by each folder synced. That is needed to make informed decisions on which to not sync when space is running low. A shoutout to mutagen on this page would not go amiss either.

Remove that horrid pop up that says each time that “a sync will take space”, just mention that in documentation. It’s a given that it will take space, if the pop up is to stay then make it useful, add the stats, say like “if you continue this will take 1Gb of your 52Gb docker image, you will only have 20Gb free for use by containers, images and the rest of docker”

I’ve not tested what happens if you try to sync a large project into a tiny docker base image file. Does it error? Does it provide accurate feedback?

And lastly, without having the Docker preferences pane open: when I save a file and switch to my browser to hit reload, a peek at the spinner in the menu bar would tell me that it's "doing stuff" and syncing my change. This can take from milliseconds to a few seconds depending on resources and computer speed, and when the spinner stops I can refresh the web page I'm working on. Sometimes I have refreshed before the sync completed my change, and that gets frustrating :-) I think that makes perfect sense to have. You can also then turn it into a triangle if there was an error.


On 27 May 2020, at 09:31, Ben De St Paer-Gotch ***@***.***> wrote:

@PhilETaylor Yeah we had considered moving a sync to something like a small 'spinning dot' or similar on the whale. We wanted to get a feel to start of the size of folders/duration it was taking people to sync and see if this was needed :) sounds like it well might be


|

|

On version 45429 I get "Error" after turning on the cache. Where can I find details about this "Error" status? |

|

Hi @kerstvo for now it lacks ui for error reports, you can do: |

|

@kerstvo I trawled through the files in |

|

@kerstvo if it is a symlink issue, there's an early build with a fix in this comment |

|

I made a full example about how to configure Mutagen with a Symfony project. It can be used for any type of project in any language. |

Or just install the Docker for Mac builds referenced in this thread, or from the https://docs.docker.com/docker-for-mac/edge-release-notes/ page, which have built-in support for Mutagen without having to install, run, or configure it yourself ;) Also for Symfony's |

|

@PhilETaylor, you won't be able to do fine tuning with the UI integrated version as you can with the manual configuration. |

|

My project is huge, and I have found the integrated docker-for-mac mutagen more than adequate with its standard configuration - with /blow you away/ speed and no issues yet to report. |

|

I have tried a way from https://docs.docker.com/docker-for-mac/edge-release-notes/ but still getting "Error" instead of "Ready" on file sync page. How to check this error? |

|

@CptShooter this helped me #2707 (comment) |

|

Hi everyone. I wanted to update you on the status of Mac file sharing performance in general, and Mutagen in particular, in our new Edge release, 2.3.5.0 (https://desktop.docker.com/mac/edge/47376/Docker.dmg).

TL;DR: We have made some big file sharing performance improvements in this release by removing osxfs and using gRPC-FUSE instead (by default). On Mutagen, we feel that it's hard to use and needs a rethink, so we have removed it for the moment, although we are still working on it.

Let's start with the good news. The experiments with gRPC-FUSE replacing osxfs have been really promising. It seems to improve file sharing performance by a good amount, and also fix most of the CPU problems that people have been complaining about for a long time. And it "just works", no configuration or expert knowledge required. So it's the default in the new Edge release, and we plan that it will be in the next Stable release (planned for next month) too.

On Mutagen, the first thing to say is thank you for all your feedback about the various iterations of this feature. It's been really helpful. As you've seen, we've made a lot of changes in response to your feedback, and incorporated a lot of your ideas, but overall we're still not happy with it. There's confusion about command line vs UI enablement. There's concern that it changes the behaviour of existing compose files, which wouldn't be a problem except that there are some cases where it makes performance worse. There are bugs due to the cache not having caught up when someone tries to perform the next action. We haven't solved the problem of caching most of a directory but excluding a subdirectory. These are all things that you have raised. We know that a lot of people have had very good experiences of it too, and have seen big performance improvements, and were looking forward to it getting into a Stable release. But overall our feeling is that it would break too many things too, and that it's not ready for general release.

So right now we're focusing on gRPC-FUSE as the solution for the majority of users. We haven't abandoned Mutagen, and we still intend to bring the technology back, but probably redesigned as more of an "expert" feature rather than a solution for everybody.

Thank you again for your feedback. This is the strength of the Edge builds, that we can try out experimental features and learn what works and what needs rethinking. |

|

I'm also going to dupe this ticket up against #1592 because it's the same issue, and it would be better to consolidate the conversation in one place. |

|

I recently updated Docker (to 2.3.5.0) and came looking for this thread because my development version felt a bit slow again. I now realize that Mutagen was removed, so to me this is a big step back performance-wise. I had no problems with Mutagen (installation was easy and it "just worked"), so I'll probably downgrade just so I can get the performance back. I understand that Mutagen is just a small part of a bigger picture and that trade-offs need to be made, but please take this feedback into account. |

Exactly what I did after 2 days of using the gRPC-FUSE version which "felt slower" ... :-( |

|

2.3.4.0 was working great for me for a day. But then I installed the update, which was very slow again. It seems that Docker on macOS is not ready; I'll try to install a Linux VM, it seems easier. |

|

@HyperCed That's because it has been removed in 2.3.5.0. Here's what the release notes say:

|

|

Closed issues are locked after 30 days of inactivity. If you have found a problem that seems similar to this, please open a new issue. Send feedback to Docker Community Slack channels #docker-for-mac or #docker-for-windows. |

Issues without logs and details cannot be exploited, and will be closed.

Expected behavior

Page loading time < 10sec (Symfony dev env)

Page loading time < 3sec (Symfony prod env)

Actual behavior

Page loading time > 40sec (dev)

Information

Steps to reproduce the behavior

docker-compose.yml

php7-fpm Dockerfile

See https://github.com/docker-library/php/blob/master/7.1/fpm/Dockerfile

nginx Dockerfile
