- 🌴 Currently living in the suburbs of Paris.

- 🎓 Double Master of Science student: MVA (Maths, Vision, Learning) at ENS Paris-Saclay and Data Science at CentraleSupélec.

- 🔍 Looking for a full-time machine learning researcher position or a PhD position.

- 🧐 Passionate about anything related to learning algorithms.

- Simpler is better

- If it does not work, there is a reason behind it

- Theory unlocks imagination, experience brings intuition

- Theory is not enough, and experimenting takes time

- Solving a problem does not mean getting the best metric

Classical Machine Learning:

- Regression, SVM

- XGBoost, LightGBM, CatBoost

- SHAP values, Anchor, LIME

Computer vision:

- Image classification and regression

- GAN

- Perceptual loss, neural style transfer, super-resolution

- Object detection: RCNNs, YOLOs

- Semantic segmentation

- Keypoint detection

- Few-shot learning

- Self-supervised learning: SimCLR, BYOL, MoCo, SwAV, DINO

Text & Images:

- CLIP, CLIPSeg, SAM

- Diffusion models: Stable Diffusion, DreamBooth, ControlNet

NLP:

- Text classification

- LLMs fine-tuning, text generation

Speech:

- Voice activity detection

- Speech-to-text

Time series:

- DTW, dictionary learning

- Time series classification

- Breakpoint detection

- Adaptive Brownian bridge-based symbolic aggregation (ABBA)

- Self-supervised learning for time series

Game Theory and RL:

- Monte Carlo, Q-learning, TD(0), SARSA

- DQN, PPO

- PSRO

Other:

- Uncertainty estimation

- Spiking neural networks

- Mixture density networks

This was my Master's thesis internship before graduating. I worked at Beacon Biosignals, an American startup that develops cutting-edge neurotechnology and AI solutions for advanced healthcare applications.

I worked on training a foundation model for sleep EEG time series using self-supervised learning, adapting methods from computer vision such as contrastive learning and DINO to EEG data. This approach achieved 80.02% accuracy on sleep staging with 95% fewer labels, representing only a 2.6% performance drop compared to using fully annotated data.
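To make "contrastive learning" concrete, here is a minimal pure-Python sketch of an InfoNCE-style loss, assuming each EEG window has already been encoded into two embeddings from two augmented views. It is a simplified, one-directional variant (only cross-view negatives, unlike SimCLR's NT-Xent); the temperature and function names are illustrative assumptions, not the configuration used at Beacon Biosignals.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def info_nce(anchors, positives, temperature=0.1):
    """Average InfoNCE loss where anchors[i] should match positives[i].

    Every other positives[j] (j != i) in the batch acts as a negative.
    """
    total = 0.0
    for i, a in enumerate(anchors):
        logits = [cosine(a, p) / temperature for p in positives]
        m = max(logits)  # log-sum-exp trick for numerical stability
        log_denominator = m + math.log(sum(math.exp(z - m) for z in logits))
        total += log_denominator - logits[i]  # -log softmax probability of the true pair
    return total / len(anchors)


# Two augmented views of three hypothetical windows.
view_a = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
view_b = [[0.9, 0.1], [0.1, 0.9], [-0.9, 0.1]]
print(info_nce(view_a, view_b))        # low: matching views are most similar
print(info_nce(view_a, view_b[::-1]))  # higher: the pairs are mismatched
```

Minimizing this loss pulls the two views of the same window together and pushes other windows apart, which is what lets the encoder learn without sleep-stage labels.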

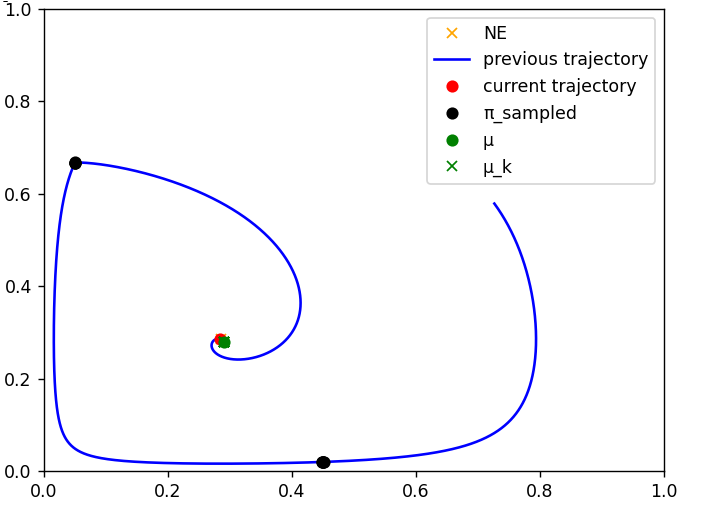

During my last year at CentraleSupélec, I had the chance to work with Google DeepMind on a research project from October 2023 to April 2024.

The goal of the project was to improve the convergence speed of FoReL-based (Follow the Regularized Leader) algorithms using population-based ideas. We designed an algorithm and showed a large gain in convergence speed on two-player zero-sum normal-form games.
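For readers unfamiliar with FoReL, the sketch below shows the textbook baseline, not the population-based algorithm from the project: FoReL with an entropic regularizer, which reduces to playing a softmax of cumulative payoffs, run in self-play on a two-player zero-sum normal-form game. The 2×2 payoff matrix, the step size `eta`, and the number of steps are illustrative assumptions. In zero-sum games the time-averaged strategies approach a Nash equilibrium, which the duality gap measures.

```python
import math


def softmax(scores, eta):
    """Entropic FoReL step: argmax over the simplex of <x, scores> + entropy(x) / eta."""
    m = max(scores)
    weights = [math.exp(eta * (s - m)) for s in scores]
    total = sum(weights)
    return [w / total for w in weights]


def forel_self_play(A, eta=0.01, steps=50_000):
    """Row player maximizes x^T A y, column player minimizes it."""
    n, m = len(A), len(A[0])
    cum_row, cum_col = [0.0] * n, [0.0] * m  # cumulative payoff of each pure action
    avg_row, avg_col = [0.0] * n, [0.0] * m  # time-averaged mixed strategies
    for _ in range(steps):
        x = softmax(cum_row, eta)
        y = softmax(cum_col, eta)
        for i in range(n):
            cum_row[i] += sum(A[i][j] * y[j] for j in range(m))
            avg_row[i] += x[i] / steps
        for j in range(m):
            cum_col[j] -= sum(A[i][j] * x[i] for i in range(n))  # column payoff is -A
            avg_col[j] += y[j] / steps
    return avg_row, avg_col


def duality_gap(A, x, y):
    """Non-negative, and zero exactly at a Nash equilibrium."""
    n, m = len(A), len(A[0])
    best_row = max(sum(A[i][j] * y[j] for j in range(m)) for i in range(n))
    best_col = min(sum(A[i][j] * x[i] for i in range(n)) for j in range(m))
    return best_row - best_col


# Example game whose unique Nash equilibrium is x = y = (0.4, 0.6).
A = [[2.0, -1.0], [-1.0, 1.0]]
x_bar, y_bar = forel_self_play(A)
print(x_bar, y_bar, duality_gap(A, x_bar, y_bar))
```

With a fixed step size the gap of the averages shrinks roughly like `log(n) / (eta * steps) + eta`, which is the slow convergence that motivates faster variants.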

Full report here.

GitHub repository here.

After coming back from Germany, I started my last year at CentraleSupélec and the prestigious MVA Master at ENS Paris-Saclay. I focused more on AI and took many theoretical courses. I had the chance to work on many projects with many different people. It was probably one of the most intense years of my life.

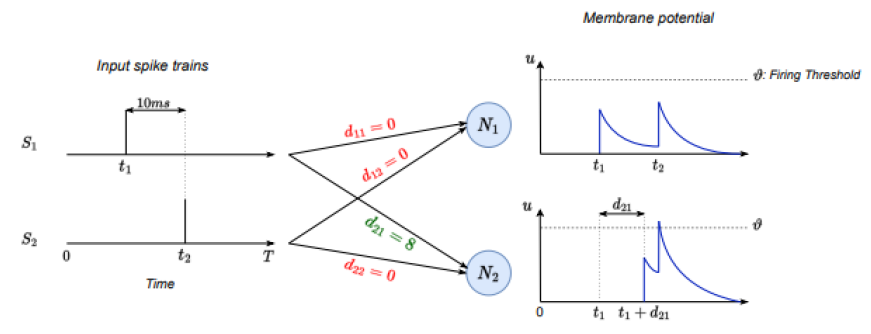

A short one-week project on spiking neural networks (SNNs). We aimed to explain the behavior of a spiking neural network and benchmarked SNNs on image classification and time series classification.
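What makes SNNs different is that neurons communicate through discrete spikes in time. The sketch below simulates a single leaky integrate-and-fire (LIF) neuron with forward Euler steps; the time constant, threshold, and input currents are illustrative assumptions, not the settings benchmarked in the project.

```python
def simulate_lif(currents, tau=10.0, v_rest=0.0, v_threshold=1.0, dt=1.0):
    """Return a 0/1 spike train for a leaky integrate-and-fire neuron.

    Membrane dynamics: tau * dv/dt = -(v - v_rest) + I(t), integrated with Euler steps.
    When v reaches the threshold, the neuron spikes and v is reset to v_rest.
    """
    v = v_rest
    spikes = []
    for current in currents:
        v += dt / tau * (-(v - v_rest) + current)
        if v >= v_threshold:
            spikes.append(1)
            v = v_rest  # hard reset after a spike
        else:
            spikes.append(0)
    return spikes


# A weak input leaks away before reaching the threshold; a strong one fires periodically.
print(sum(simulate_lif([0.5] * 100)))  # 0
print(sum(simulate_lif([2.0] * 100)))  # 14 (one spike every 7 steps)
```

The information is carried by spike timing and rate rather than by continuous activations, which is why SNNs need surrogate gradients or conversion tricks to be trained on tasks like image classification.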

The code and the reports are available here.

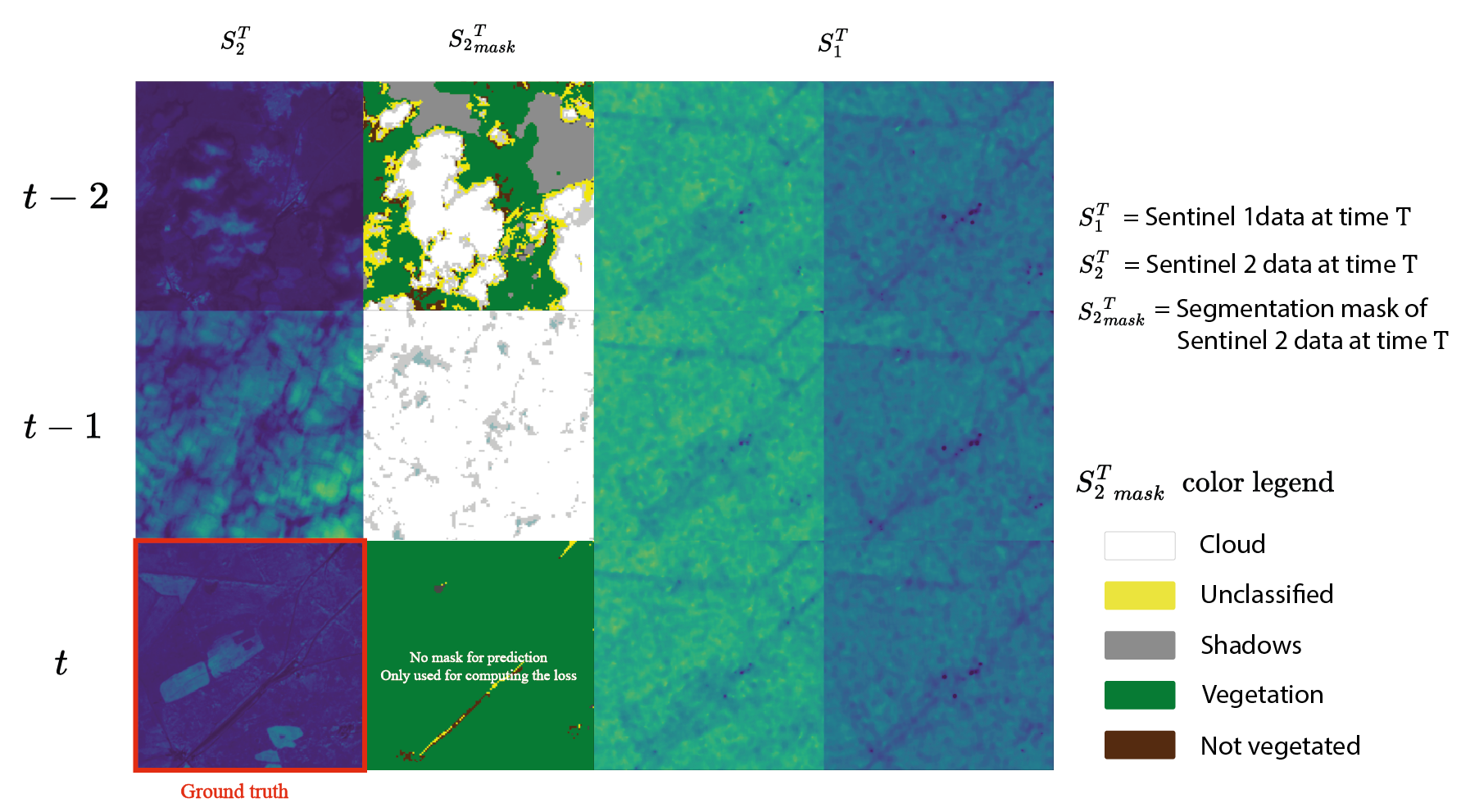

The goal of this competition was to predict the leaf area index for each pixel of satellite images captured by Sentinel-1 and Sentinel-2. The competition took place in April 2023.

The code repository is here.

Our paper was accepted at the 2023 Big Data from Space (BiDS) conference, held from 6 to 9 November 2023 in Vienna, Austria. The paper is available here.

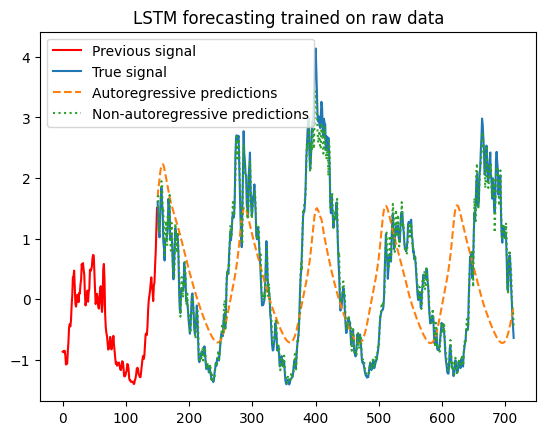

Part of the Machine Learning for Time Series course of Laurent Oudre. Implementation of two papers on time series representation.

Full report here.

GitHub repository here.
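The compression stage of ABBA, in a simplified reading of Elsworth & Güttel (2020), greedily approximates the series with linear pieces, extends each piece while its squared error stays below a tolerance-dependent bound, and keeps each piece as a (length, increment) pair. The sketch below covers only that stage: the normalization, the maximum piece length, and the digitization step that clusters pieces into symbols are omitted, and the bound `(length - 1) * tol**2` is our assumption of the criterion rather than a verbatim copy of the paper.

```python
def _piece_error(ts, start, end):
    """Squared error between ts[start..end] and the straight line joining its endpoints."""
    slope = (ts[end] - ts[start]) / (end - start)
    return sum((ts[k] - (ts[start] + slope * (k - start))) ** 2 for k in range(start, end + 1))


def abba_compress(ts, tol=0.1):
    """Greedy piecewise-linear compression into (length, increment) pairs."""
    pieces = []
    start = 0
    while start < len(ts) - 1:
        end = start + 1
        # Extend the piece while the candidate endpoint keeps the error within the bound.
        while end + 1 < len(ts) and _piece_error(ts, start, end + 1) <= (end - start) * tol ** 2:
            end += 1
        pieces.append((end - start, ts[end] - ts[start]))
        start = end  # consecutive pieces share an endpoint
    return pieces


print(abba_compress([0, 1, 2, 3, 4]))  # [(4, 4)]
print(abba_compress([0, 1, 2, 1, 0]))  # [(2, 2), (2, -2)]
```

Because each piece only stores its length and increment, the series can be reconstructed up to the tolerance by cumulatively summing the increments from the first value.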

References:

- Elsworth, S., & Güttel, S. (2020). ABBA: Adaptive Brownian bridge-based symbolic aggregation of time series. Data Mining and Knowledge Discovery, 34(4), 1175-1200. Link.

- Elsworth, S., & Güttel, S. (2020). Time series forecasting using LSTM networks: A symbolic approach. arXiv preprint arXiv:2003.05672. Link.

Part of the Advanced learning for text and graph data course of Michalis Vazirgiannis. The goal was to retrieve molecules from a text query.

Full report here.

GitHub repository here.

Part of the Probabilistic Graphical Models and Deep Generative Models course of Pierre Latouche and Pierre-Alexandre Mattei. The goal was to implement mixture density networks and evaluate their efficiency on several datasets.
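A mixture density network outputs, for each input, the parameters of a Gaussian mixture and is trained by minimizing the negative log-likelihood of the target under that mixture. The sketch below computes that loss for a single 1D target in pure Python; parameterizing the mixture with mixing logits and log standard deviations is a common choice that we assume here, not necessarily the one used in the project.

```python
import math


def mdn_nll(y, logits, means, log_sigmas):
    """Negative log-likelihood of scalar y under a 1D Gaussian mixture.

    logits -> mixing weights via softmax; log_sigmas keep standard deviations positive.
    Both normalizations use log-sum-exp for numerical stability.
    """
    m = max(logits)
    log_norm = m + math.log(sum(math.exp(z - m) for z in logits))
    log_terms = []
    for z, mu, log_s in zip(logits, means, log_sigmas):
        log_pi = z - log_norm
        log_gauss = -0.5 * math.log(2 * math.pi) - log_s - 0.5 * ((y - mu) / math.exp(log_s)) ** 2
        log_terms.append(log_pi + log_gauss)
    top = max(log_terms)
    return -(top + math.log(sum(math.exp(t - top) for t in log_terms)))


# A single standard normal component evaluated at its mean: 0.5 * log(2 * pi).
print(mdn_nll(0.0, [0.0], [0.0], [0.0]))
```

In a real network, `logits`, `means`, and `log_sigmas` are the three output heads, and this loss is averaged over the batch.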

Full report here.

GitHub repository here.

Poster here.

Part of the Deep learning for medical imaging course of Olivier Colliot and Maria Vakalopoulou.

This project focuses on developing an automated system to distinguish between reactive and tumoral lymphocytosis using blood smear images and patient attributes. The dataset includes samples from 204 patients, with 142 for training and 42 for testing, collected from the Lyon Sud University Hospital. The goal is to assist clinicians in identifying cases requiring flow cytometry, reducing costs and improving diagnostic accuracy.

GitHub repository here.

Part of the Reinforcement learning course of Stergios Christodoulidis. The goal was to implement simple reinforcement learning algorithms to play Flappy Bird.

Full report here.

GitHub repository here.

Part of the Reinforcement learning course of Stergios Christodoulidis. This project simulates a prey-predator environment. Reinforcement learning is used to learn the behavior of each agent. To encourage cooperation, we used the MADDPG algorithm.

Full report here.

GitHub repository here.

Poster here.

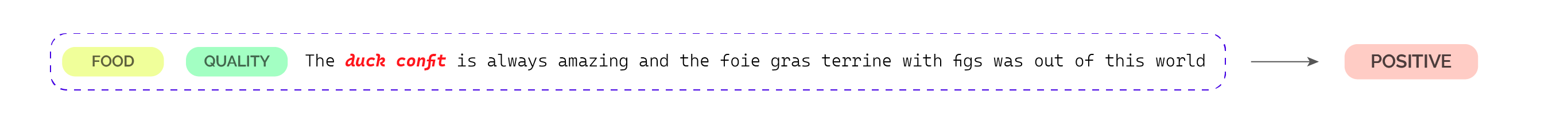

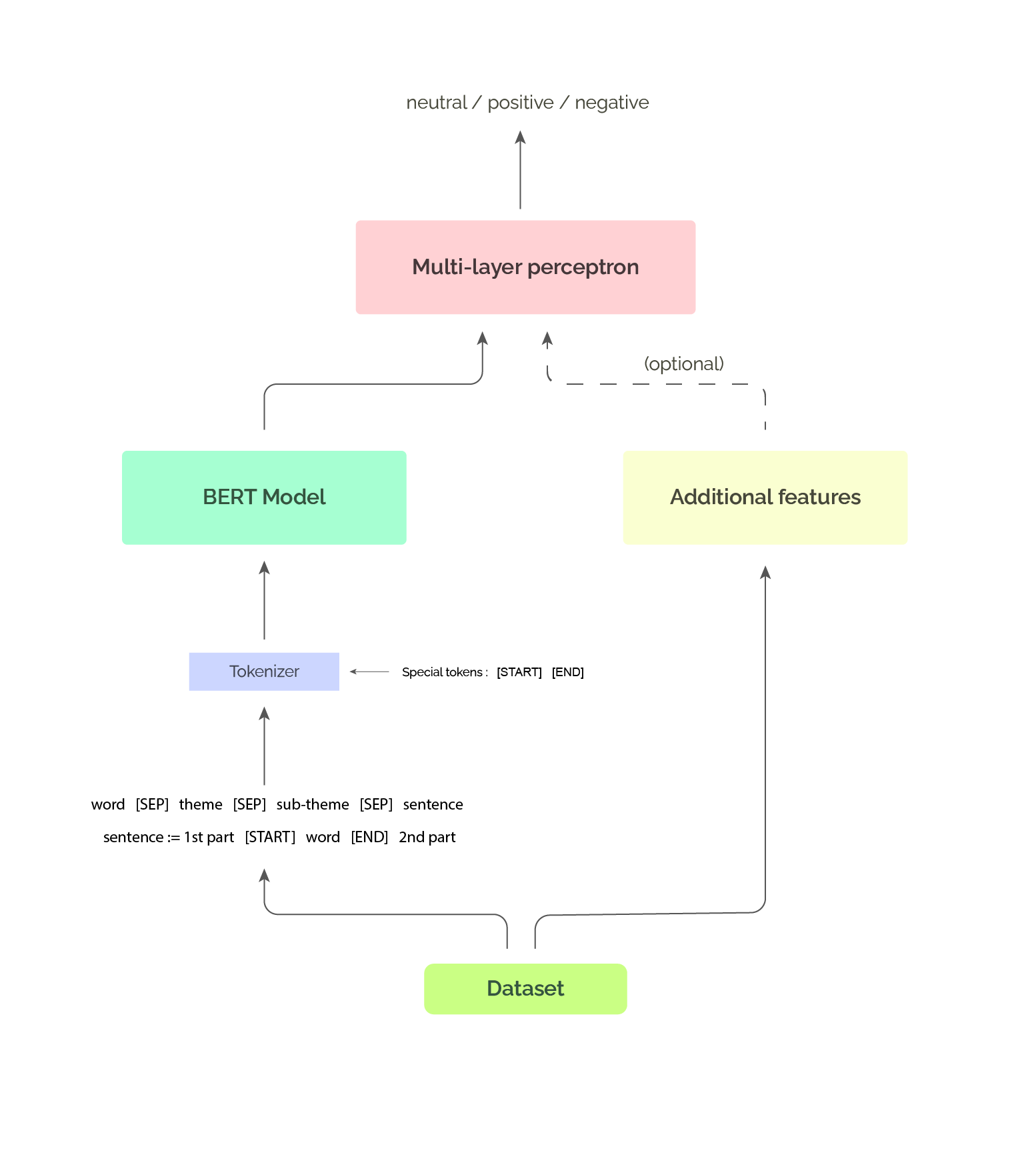

Part of the Natural Language Processing course given by Naver Labs Europe. The project consisted of aspect-based sentiment classification: given a sentence and one of its aspects (e.g. food quality or general ambiance) highlighted by a specific word, predict whether the sentence expresses a positive, negative, or neutral sentiment toward that aspect.

We fine-tuned DistilBERT for this specific task.

More details are in the repository.

Part of the Machine Learning on Network Science course of Fragkiskos Malliaros. The goal was to benchmark different architectures on the Twitch dataset on several classification and regression tasks.

Full report here.

GitHub repository here.

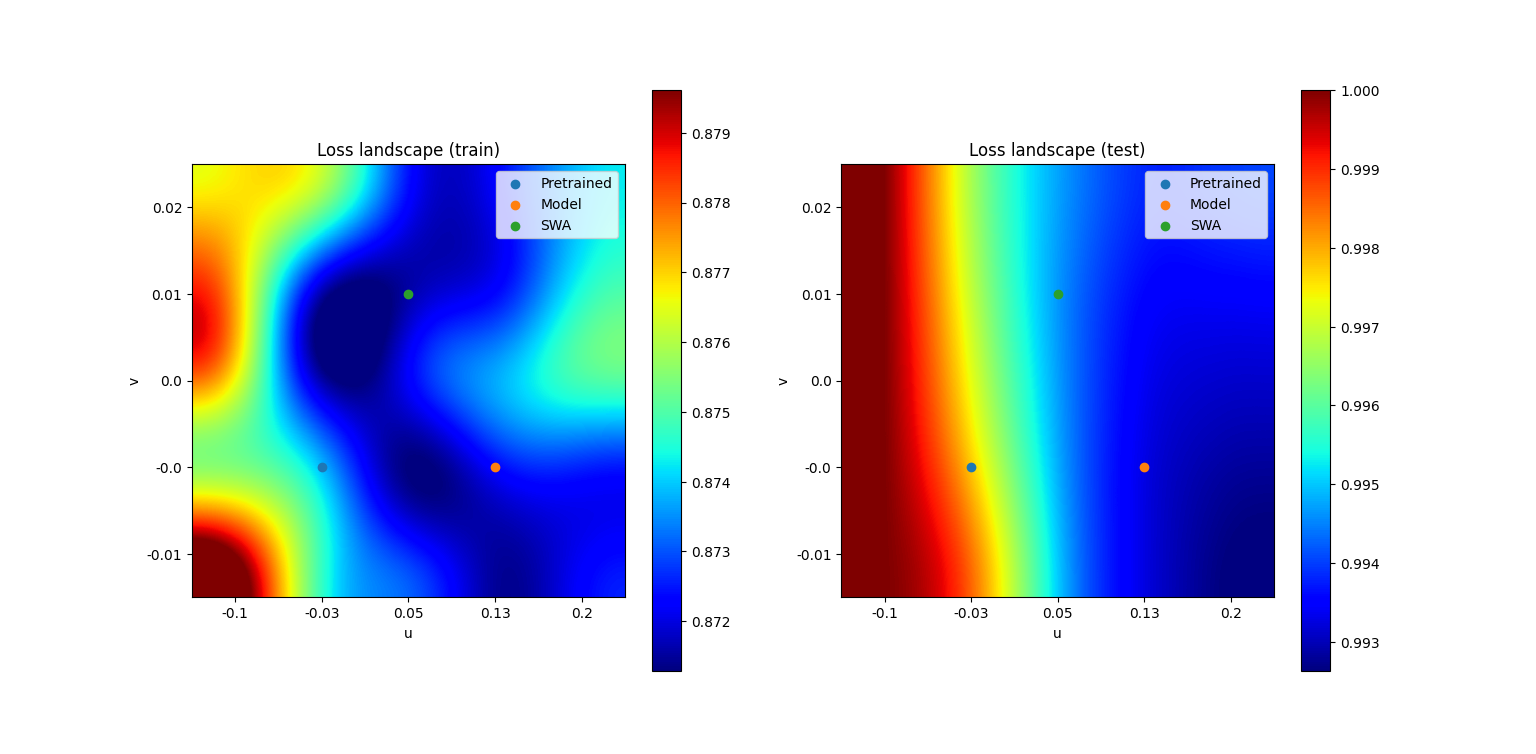

Part of the Bayesian Machine Learning course of Rémi Bardenet. The goal was to implement the paper "Averaging Weights Leads to Wider Optima and Better Generalization" by Pavel Izmailov, Dmitrii Podoprikhin, Timur Garipov, Dmitry Vetrov, and Andrew Gordon Wilson.

| Loss landscape comparison for MobileNetV2 on CIFAR-100 |
|---|

Full report here.

GitHub repository here.
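At its core, stochastic weight averaging (SWA) keeps a running mean of the weights visited by SGD, usually collected once per epoch late in training under a high or cyclical learning rate. The sketch below shows only that running-average update on plain lists with hypothetical snapshots; in PyTorch, `torch.optim.swa_utils.AveragedModel` handles the averaging, and batch-norm statistics must be recomputed afterwards (e.g. with `torch.optim.swa_utils.update_bn`).

```python
def swa_update(swa_weights, new_weights, n_averaged):
    """Running mean: w_swa <- (w_swa * n + w) / (n + 1)."""
    return [(s * n_averaged + w) / (n_averaged + 1) for s, w in zip(swa_weights, new_weights)]


# Hypothetical weight snapshots collected at the end of three epochs.
snapshots = [[1.0, 4.0], [2.0, 5.0], [3.0, 9.0]]
swa = list(snapshots[0])
for n, weights in enumerate(snapshots[1:], start=1):
    swa = swa_update(swa, weights, n)
print(swa)  # [2.0, 6.0], the element-wise mean of the snapshots
```

Averaging points spread along the SGD trajectory lands closer to the center of a wide, flat region of the loss landscape, which is the paper's explanation for the better generalization.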

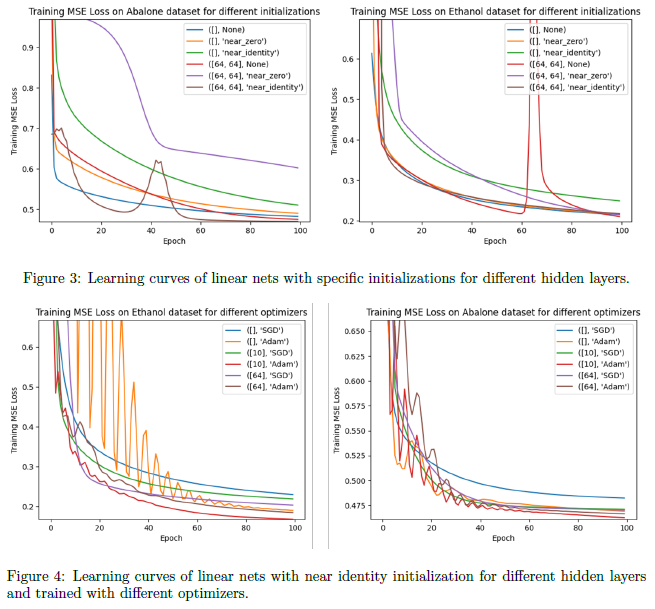

Part of the Theoretical Principles of Deep Learning course of Hedi Hadiji. The goal was to reimplement the paper Arora, S., Cohen, N., & Hazan, E. (2018, July). On the optimization of deep networks: Implicit acceleration by overparameterization. In International Conference on Machine Learning (pp. 244-253). PMLR.

Full report here.

Presentation slides here.

GitHub repository here.

Right before coming back from Germany, I accepted a short one-month mission as a freelance data analyst at Etandex.

The project consisted of predicting the potential of a commercial opportunity. The client wanted detailed insight into why the models made their predictions.

| SHAP value summary plot |
|---|

XGBoost, SHAP values, LIME, Anchor
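SHAP values are Shapley values from cooperative game theory: a feature's attribution is its weighted average marginal contribution over all coalitions of the other features. The brute-force sketch below enumerates every coalition and replaces "absent" features with a baseline value (one common convention, not the only one). It is only feasible for a handful of features, which is why the `shap` library relies on model-specific algorithms such as TreeSHAP for XGBoost. The linear model and baseline are hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values of predict(x), with absent features set to baseline."""
    n = len(x)

    def value(coalition):
        # Features in the coalition keep their real value; the others use the baseline.
        return predict([x[k] if k in coalition else baseline[k] for k in range(n)])

    phi = []
    for i in range(n):
        others = [k for k in range(n) if k != i]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


def linear_model(z):
    """Hypothetical model: for linear models, phi_k = w_k * (x_k - baseline_k)."""
    return 2.0 * z[0] - 1.0 * z[1] + 0.5 * z[2]


phi = shapley_values(linear_model, x=[1.0, 3.0, 4.0], baseline=[0.0, 1.0, 2.0])
print(phi)  # approximately [2.0, -2.0, 1.0]
```

The attributions always sum to `predict(x) - predict(baseline)`, the "efficiency" property that makes SHAP summary plots additive.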

| App trailer |
|---|

I was inspired by my internship at a startup in the United States, and at the same time I became very interested in generative AI. A friend of mine invited me to build Photogen AI together. The idea was to sell customers AI-generated images of themselves.

When DreamBooth: Fine Tuning Text-to-Image Diffusion Models for Subject-Driven Generation (CVPR 2023) was published, many AI avatar apps appeared almost overnight. However, none of them could generate high-quality realistic images. We focused on that, and after a few tricks with DreamBooth, we got decent to very good results.

| In front of the Eiffel Tower | In front of the Kremlin | In Rome | Professional picture |
|---|---|---|---|

AUTOMATIC1111, Hugging Face, diffusers

DreamBooth, Stable Diffusion

| AWS Dashboard |
|---|

Moving all our Google Colab experiments to production on AWS, and creating dashboards and alerts to monitor errors.

Two production pipelines on AWS:

- Inference on an autoscaling group with instance warm-up, scaled automatically based on the number of messages in the SQS queue.

- DreamBooth fine-tuning with AWS Batch.
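The queue-driven scaling of the inference pipeline can be summarized as a "backlog per instance" rule: the desired number of instances grows with the number of pending SQS messages (e.g. the CloudWatch metric `ApproximateNumberOfMessagesVisible`), clamped between a minimum and a maximum. The function below is a hypothetical sketch of that rule; the messages-per-instance target and the bounds are assumptions, and in practice the logic lives in an AWS scaling policy rather than in application code.

```python
import math


def desired_capacity(queue_depth, messages_per_instance=4, min_instances=0, max_instances=10):
    """Hypothetical backlog-per-instance rule for sizing an autoscaling group.

    queue_depth: approximate number of visible messages waiting in the SQS queue.
    """
    needed = math.ceil(queue_depth / messages_per_instance)
    return max(min_instances, min(max_instances, needed))


print(desired_capacity(0))    # 0: scale in completely when the queue is empty
print(desired_capacity(9))    # 3
print(desired_capacity(100))  # 10: capped at max_instances
```

Instance warm-up matters here because a freshly launched GPU instance cannot process messages until the model is loaded, so its metrics should not yet count toward the group's capacity.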

AWS, Docker, FastAPI

A one-weekend competition on any technical subject related to generative AI.

I worked on reducing the number of images DreamBooth requires. It turned into an extensive literature review and hands-on testing of the latest repositories on pose transfer, InstructPix2Pix, image relighting, background matting, etc.

| Visualization. Prompt: Wearing a red suit at Cannes |
|---|

A few weeks of work on group photo generation with personalized Dreambooth weights.

Here are some visualizations for the following prompts:

Positive: fantasy themed portrait of {token} with a unicorn horn party hat, vibrant rich colors, pink and blue mist, rainbow, magical atmosphere, drawing by ilya kuvshinov:1.0, Miho Hirano, Makoto Shinkai, Albert Lynch, 2D

Negative: pictures from afar, bad glance, signature, black and white pictures

Positive: digital oil painting of {token} (with a comically large head:1.2), big forehead, (fisheye:1.1), unrealistic proportions, portrait, caricature, closeup, rich vibrant colors, ambient lighting, 4k, HQ, concept art, illustration, ilya kuvshinov, lois van baarle, rossdraws, detailed, trending on artstation

Negative: bad glance, signature, pictures from afar, black and white pictures


CLIPSeg, Background Matting, ControlNet, DreamBooth

Before coming back to the university, I decided to do another internship abroad. I got an opportunity at Stryker in Freiburg, Germany.

I had the chance to work in the R&D department of a large company and to discover the medical field. I liked the environment of a large company and the people I met there. I also enjoyed working in medtech, as I felt that my work had real meaning in helping save lives.

3D computer vision, keypoint detection, triangulation, subpixel coordinate regression

A six-month internship at Polygon. Polygon is a new kind of psychology practice that provides remote diagnostics for dyslexia, ADHD, and other learning differences. The company is based in Santa Monica, California, United States.

I worked on a project to assist the diagnosis of learning differences. The overall idea is to record patients' testing sessions with a camera.

From these videos, we extract the useful information as time series, which we then use to understand what happened, when, and why. This approach avoids working directly with high-dimensional video data and makes the overall pipeline much more interpretable, which is crucial in the medical field, where mistakes can be costly.

Here is a list of key features I worked on:

- Unifying all the data on AWS Storage

- Data cleaning and standardization

- Voice activity detection with Gaussian mixture models

- 3D face landmark detection with face alignment networks

- Benchmarking speech-to-text solutions (Whisper, AWS Transcribe, ...)

- Setting up AWS Batch pipelines to optimize feature extraction costs

- Time series visualization with Plotly and Streamlit

- Time series classification and breakpoint detection
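One simple form of GMM-based voice activity detection, sketched below under our own assumptions (non-overlapping frames, per-frame log energy as the only feature, two mixture components), fits a two-component Gaussian mixture with EM and labels frames assigned to the higher-mean component as speech. This is an illustrative reconstruction, not the pipeline used at Polygon; real systems usually add spectral features and temporal smoothing.

```python
import math


def frame_log_energies(samples, frame_len):
    """Log mean squared amplitude of each non-overlapping frame."""
    return [
        math.log(sum(s * s for s in samples[i:i + frame_len]) / frame_len + 1e-10)
        for i in range(0, len(samples) - frame_len + 1, frame_len)
    ]


def fit_gmm_1d(xs, iters=50):
    """EM for a two-component 1D Gaussian mixture, initialized at the extremes of xs."""
    means, variances, weights = [min(xs), max(xs)], [1.0, 1.0], [0.5, 0.5]
    resp = []
    for _ in range(iters):
        # E-step: responsibilities, computed in log space to avoid underflow.
        resp = []
        for x in xs:
            logs = [math.log(weights[k]) - 0.5 * math.log(2 * math.pi * variances[k])
                    - (x - means[k]) ** 2 / (2 * variances[k]) for k in range(2)]
            top = max(logs)
            probs = [math.exp(v - top) for v in logs]
            total = sum(probs)
            resp.append([p / total for p in probs])
        # M-step: re-estimate mixture weights, means, and variances.
        for k in range(2):
            nk = sum(r[k] for r in resp) + 1e-12
            means[k] = sum(r[k] * x for r, x in zip(resp, xs)) / nk
            var_k = sum(r[k] * (x - means[k]) ** 2 for r, x in zip(resp, xs)) / nk
            variances[k] = max(var_k, 1e-6)  # floor avoids degenerate components
            weights[k] = nk / len(xs)
    return means, resp


def detect_voice(samples, frame_len=100):
    """One boolean per frame: True where the higher-energy component is more likely."""
    means, resp = fit_gmm_1d(frame_log_energies(samples, frame_len))
    speech = 0 if means[0] > means[1] else 1
    return [r[speech] > 0.5 for r in resp]


# Synthetic signal: quiet, loud, quiet (500 samples each, so 5 frames each).
quiet = [0.01 * math.sin(0.3 * n) for n in range(500)]
loud = [0.8 * math.sin(0.3 * n) for n in range(500)]
labels = detect_voice(quiet + loud + quiet)
print(labels)
```

Fitting the mixture per recording, rather than using a fixed energy threshold, lets the silence level adapt to each room and microphone.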

AWS, Docker, PyTorch, Streamlit, Plotly

Face landmark detection, Voice activity detection, Speech-to-text, Time series classification, and breakpoint detection

After a year and a half studying general engineering, I wanted to discover the professional world, so I started a gap year lasting a year and a half.

My first internship was as a machine learning consultant at Paris Digital Lab, a tech consulting company. I completed three 7-week projects with different companies, each ending with a minimum viable product and following the Scrum methodology.

Consulting was not my thing. Even though projects can be very different and challenging, most of the time they were too simple theoretically, and I had no say in what I worked on.

This project was about detecting and classifying radio signals in the IQ format. The IQ format is a time series of complex numbers representing the two orthogonal components of a radio signal: in-phase (I) and quadrature (Q).

A visualization of the signal's Fast Fourier Transform was enough to convince us that object detection was a good way to approach the problem.
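Because IQ samples are complex, a short-time Fourier transform turns the signal into a time-frequency image in which each transmission occupies a localized region that a detector can box. The pure-Python sketch below computes magnitude frames from complex samples; it uses a naive DFT for clarity (a real pipeline would use an FFT such as `numpy.fft.fft`), and the frame size, hop, and test tone are illustrative assumptions.

```python
import cmath
import math


def dft_magnitudes(frame):
    """|X[k]| for k = 0..N-1, computed with a naive O(N^2) DFT."""
    n = len(frame)
    return [abs(sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
            for k in range(n)]


def spectrogram(iq, frame_size=32, hop=32):
    """Rows are time frames, columns are frequency bins of the complex IQ samples."""
    return [dft_magnitudes(iq[s:s + frame_size])
            for s in range(0, len(iq) - frame_size + 1, hop)]


# Hypothetical IQ capture: a complex tone at frequency bin 5 (I = cos, Q = sin).
tone = [cmath.exp(2j * math.pi * 5 * t / 32) for t in range(128)]
image = spectrogram(tone)
print([row.index(max(row)) for row in image])  # [5, 5, 5, 5]
```

Using the complex samples directly distinguishes positive from negative frequencies, which a real-valued FFT of only the I channel would fold together.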

YOLOv3 achieved 0.95 mAP at an IoU threshold of 0.5 on this task.
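mAP at an IoU threshold of 0.5 counts a predicted box as a true positive only if its intersection over union with a ground-truth box of the same class is at least 0.5. A minimal IoU function, assuming boxes given as (x_min, y_min, x_max, y_max), which here would span time and frequency:

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes (x_min, y_min, x_max, y_max)."""
    ix = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    iy = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = ix * iy
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    return inter / (area_a + area_b - inter)


print(iou((0, 0, 2, 2), (1, 1, 3, 3)))  # 1 / 7, below 0.5: not a match
print(iou((0, 0, 2, 2), (0, 0, 2, 2)))  # 1.0
```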

PyTorch, YOLO, RCNN, Object detection, U-Net, Semantic segmentation, signal processing

This project was about retrieving a lipstick from a selfie. The approach was to first locate the lips with face landmark detection, then crop around the lips and predict the optical properties of the lipstick, and finally find the best match in a lipstick database based on these properties.

I used Dlib out of the box for face landmark detection. The regression task was handled by a CNN regressor, and the matching used a weighted L2 distance on the optical properties.

The available data was generated with a GAN. For colors, I had to work in the CIELAB color space to get a perceptually meaningful distance. The client also wanted an uncertainty estimate for each prediction, so I implemented deep evidential regression.
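Euclidean distance between RGB values does not match how different two colors look, whereas distances in CIELAB roughly do. Below is a minimal sRGB to CIELAB conversion (D65 white point) with the simple CIE76 color difference; the coefficients are the standard sRGB ones, and the project may have used a conversion library or a more refined ΔE formula instead.

```python
def srgb_to_lab(rgb):
    """Convert an 8-bit sRGB triple to CIELAB (D65 reference white)."""
    def to_linear(c):
        c /= 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    r, g, b = (to_linear(c) for c in rgb)
    # Linear sRGB -> CIE XYZ (D65).
    x = 0.4124 * r + 0.3576 * g + 0.1805 * b
    y = 0.2126 * r + 0.7152 * g + 0.0722 * b
    z = 0.0193 * r + 0.1192 * g + 0.9505 * b

    def f(t):
        delta = 6 / 29
        return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29

    fx, fy, fz = f(x / 0.95047), f(y / 1.0), f(z / 1.08883)
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)


def delta_e76(lab1, lab2):
    """CIE76 color difference: Euclidean distance in CIELAB."""
    return sum((p - q) ** 2 for p, q in zip(lab1, lab2)) ** 0.5


print(srgb_to_lab((255, 255, 255)))  # approximately (100, 0, 0)
print(delta_e76(srgb_to_lab((0, 0, 0)), srgb_to_lab((255, 255, 255))))  # approximately 100
```

A weighted L2 match over optical properties can then use these LAB coordinates for the color terms, so that equal distances correspond to roughly equal perceived differences.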

TensorFlow, Deep regression, Uncertainty estimation, Dlib

This project explored ways to personalize detected objects and add classes to YOLOv5 with minimal manual annotation.

I carried out an extensive literature review of few-shot image classification, few-shot object detection, class-agnostic detection, open-world object detection, CLIP, and referring expression comprehension.

Zero-shot learning research was very active at the time. I designed a solution combining a class-agnostic detector with CLIP on top of it, which achieved 0.20 mAP on COCO. However, a few days before the end of my internship, One for all was released and did the same thing better and faster.

PyTorch, Hugging Face

Few-shot image classification, few-shot object detection, class-agnostic detection, open-world object detection, CLIP, Referring expression comprehension

Automatants is the AI student organization of CentraleSupélec. It promotes machine learning at CentraleSupélec, building skills and sharing knowledge through courses, events, competitions, and projects. I joined out of curiosity during my 3rd year and became its President in my 4th year.

From January 2021 to January 2022, I was the President of this student organization. It was probably the most fulfilling experience of my life: twenty people, dozens of events and competitions, and so much fun.

Here are some images:

| Generated cats |
|---|

This was my very first personal deep learning project: generating cat images. I collected a dataset from the internet and took my very first steps in deep learning.

I started with a simple DCGAN, then moved to a GAN with residual connections, switched to a Wasserstein loss, and finally trained a Progressive GAN. I read the StyleGAN papers but did not implement it.

To make it public, I served it on my association's website with TensorFlow.js. Try it here.

TensorFlow, Keras, TensorFlow.js

GAN, ResNet, Progressive GAN, Wasserstein loss, StyleGAN

| Neural Style Transfer Visualization |
|---|

After GANs, I got hooked on perceptual losses. The idea of designing a "perceptual loss" instead of using a pixel-wise loss was so interesting that I had to implement it.

After implementing the vanilla version of neural style transfer, I wanted a faster way to stylize images, so I implemented fast neural style transfer. It trains a generator network to transform an image in a single forward pass so that the output minimizes the perceptual loss. I managed to transfer style in real time on my camera feed.

TensorFlow, Keras, OpenCV

Perceptual loss, Neural style transfer, Fast NST, VGG loss
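In neural style transfer, the "style" term of the perceptual loss compares Gram matrices of feature maps, which capture which channels co-activate regardless of spatial position, while the "content" term compares the feature maps directly. The sketch below computes both terms for feature maps already flattened to channels × positions; extracting those features from a pretrained VGG network is assumed and not shown, and normalizing by the number of positions is one convention among several.

```python
def gram_matrix(features):
    """features: C lists of H*W activations -> C x C matrix of channel correlations."""
    positions = len(features[0])
    return [[sum(a * b for a, b in zip(fi, fj)) / positions for fj in features]
            for fi in features]


def style_loss(features, style_features):
    """Mean squared difference between the two Gram matrices."""
    g, s = gram_matrix(features), gram_matrix(style_features)
    c = len(g)
    return sum((g[i][j] - s[i][j]) ** 2 for i in range(c) for j in range(c)) / (c * c)


def content_loss(features, content_features):
    """Mean squared difference between feature maps (the 'VGG loss')."""
    diffs = [(a - b) ** 2 for fa, fb in zip(features, content_features) for a, b in zip(fa, fb)]
    return sum(diffs) / len(diffs)


feats = [[1.0, 2.0], [3.0, 4.0]]
shuffled = [[2.0, 1.0], [4.0, 3.0]]  # same activations, spatial positions swapped
print(gram_matrix(feats))            # [[2.5, 5.5], [5.5, 12.5]]
print(style_loss(feats, shuffled))   # 0.0: the style term ignores where features occur
print(content_loss(feats, shuffled)) # 1.0: the content term does not
```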

| Visualization of the dataset |
|---|

I won a competition on imbalanced image classification. It was an opportunity to apply everything I had learned during the year.

My best model was an ensemble of MobileNetV2 networks trained with semi-supervised learning and heavy regularization (label smoothing, dropout, weight decay).

Here is my code repository for more details.

TensorFlow, Keras

Imbalanced dataset, ResNet, MobileNetV2, ShuffleNetV2, Few-shot image classification, Semi-supervised learning, Regularization
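Label smoothing replaces the one-hot training target with a mix of the one-hot vector and a uniform distribution, which discourages over-confident predictions. In Keras this is the `label_smoothing` argument of `tf.keras.losses.CategoricalCrossentropy`; the minimal sketch below shows the target it produces, with the smoothing factor `epsilon` chosen arbitrarily for illustration.

```python
def smooth_labels(class_index, num_classes, epsilon=0.1):
    """(1 - epsilon) * one_hot + epsilon / num_classes."""
    return [(1.0 - epsilon) * (1.0 if k == class_index else 0.0) + epsilon / num_classes
            for k in range(num_classes)]


print(smooth_labels(2, num_classes=4))  # approximately [0.025, 0.025, 0.925, 0.025]
```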

| Visualization of the game |
|---|

This project solves a maze game in which the only feedback is the distance to the exit. When the game begins, the player provides a list of moves (for example: right, left, top, right). The environment then returns the distance to the exit after following that list of moves.

I used a genetic algorithm to solve this game, mostly for the fun of playing with genetic algorithms.

The corresponding repository is here.

OOP, Genetic algorithm, Pygame
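A minimal genetic algorithm for this kind of game is sketched below. To keep it self-contained, the maze is replaced by a hypothetical open grid without walls, with the exit at a fixed cell, and the environment returns the Manhattan distance to the exit after replaying the moves. The population size, mutation rate, truncation selection, and one-point crossover are illustrative choices, not those of the original repository.

```python
import random

MOVES = {"right": (1, 0), "left": (-1, 0), "up": (0, 1), "down": (0, -1)}


def distance_to_exit(moves, exit_cell=(3, 1)):
    """Hypothetical environment: replay the moves from (0, 0) on an open grid."""
    x, y = 0, 0
    for move in moves:
        dx, dy = MOVES[move]
        x, y = x + dx, y + dy
    return abs(exit_cell[0] - x) + abs(exit_cell[1] - y)


def evolve(n_moves=10, pop_size=60, generations=300, mutation_rate=0.1, seed=0):
    rng = random.Random(seed)
    names = list(MOVES)
    population = [[rng.choice(names) for _ in range(n_moves)] for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=distance_to_exit)  # lower distance = fitter
        if distance_to_exit(population[0]) == 0:
            break
        elite = population[: pop_size // 5]  # truncation selection: keep the best 20%
        children = []
        while len(elite) + len(children) < pop_size:
            mom, dad = rng.sample(elite, 2)
            cut = rng.randrange(1, n_moves)  # one-point crossover
            child = mom[:cut] + dad[cut:]
            # Mutation: each move is resampled with probability mutation_rate.
            child = [rng.choice(names) if rng.random() < mutation_rate else m for m in child]
            children.append(child)
        population = elite + children
    return min(population, key=distance_to_exit)


best = evolve()
print(best, distance_to_exit(best))
```

Because the elite is copied unchanged into each new generation, the best distance can never get worse from one generation to the next.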

ViaRézo is the tech student association of CentraleSupélec. It provides internet access and many web services (mailing lists, VMs, social media, etc.) to more than 2000 students.

I joined this association at the same time as Automatants, but I gradually stepped away because it lacked theoretical challenge.

Writing CI/CD scripts for existing web apps: quality checks, unit tests, and automatic deployment.

Workflow, CI/CD, GitLab, GitHub

As a student, I was still not sure what I wanted to do with my life. After a Bachelor's in maths, physics, and algorithmics, I got into one of the best universities in France. That's when I started to try out different things...

| Game trailer |
|---|

My very first group coding project, with a team of five people: we designed and developed a 3D horror game in Unity with C# in one week.

I mainly worked on the interactions with the environment, the fighting gameplay, and the mobs' behavior.

You can download the game here.

Unity, Blender, C#

| Our robot | Photo of the playground |
|---|---|

Building, within a team of 11 people over about one year, an autonomous robot that navigates a defined environment and moves objects.

We had a fixed camera outside of the game board. I worked on the localization of the robot, the detection of the objects and obstacles from the camera, and the transmission of this information.

OpenCV, Python, Bluetooth

A one-week project in collaboration with the Pasteur Institute: studying links between genes based on their responses to different stimuli, using R.

RStudio, Unsupervised learning, PCA, Joint graphical lasso

A few facts about myself

- I was born Asian, grew up in a French environment and lived in the US and in Germany for 6 months.

- I am a sports addict: volleyball, bouldering, spikeball, running, biking...

- There is no fun if I don't aim for the best. Being competitive brings so much, and it is okay not to be the best.

- I love both spending my time reading philosophy in a silent park and partying with friends.

- One day, I was playing basketball to cool down after an exam. I slipped on a clementine, broke my ankle, and then walked on crutches for two months.