
accelerated depth streaming #1


- synthetic example streaming moving greyscale
- encoded with HEVC Main10 P010LE pixel format

Closes #2. Related to bmegli/hardware-video-streaming#1

Extending NHVE for HEVC support


- rename h264 streaming to realsense-nhve-h264

This makes room for another example with HEVC depth streaming. Related to bmegli/hardware-video-streaming#1

- add additional executable for color/infrared/depth HEVC streaming
- update readme accordingly

Related to bmegli/hardware-video-streaming#1

Extending RNHVE to support depth streaming apart from color/infrared


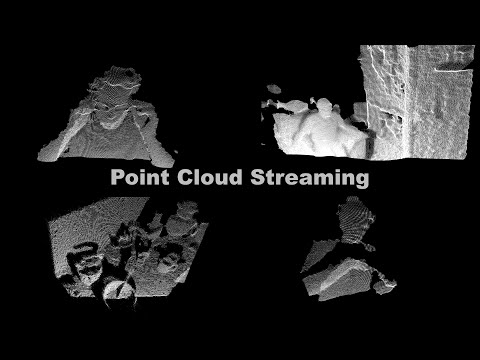

Further improvements were discussed in librealsense#5799. A zero-copy pipeline for point cloud streaming/decoding/unprojection/rendering was sketched out:


This continues the work from the realsense-ir-to-vaapi-h264 issue "encoding depth stream", where the plan was sketched out.

Extending HVE for HEVC support

Done in HVE ac3a4c1.

P010LE encoding example was also added.

HEVC Main10 depth encoding example

Done in realsense-depth-to-vaapi-hevc10.

This configures the RealSense to output a P016LE Y plane, which is fed directly to the hardware for encoding as P010LE (the two formats are binary compatible). The range/precision trade-off can be controlled.
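To make the binary compatibility and the trade-off concrete, here is a minimal sketch (not code from realsense-depth-to-vaapi-hevc10). It relies on P010LE carrying its 10 significant bits in the upper bits of each 16-bit little-endian word, so the 6 low bits of a 16-bit depth sample are not encoded. `depth_unit_m` (metres per 16-bit depth unit) is a hypothetical parameter used for illustration, and the numbers ignore any additional loss from the lossy HEVC compression itself.

```python
# Sketch: what happens to a 16-bit depth sample when its buffer is
# handed to the encoder as P010LE. Names here are illustrative only.

WORD_BITS = 16
P010_BITS = 10
SHIFT = WORD_BITS - P010_BITS  # the 6 least significant bits are dropped


def encoded_level(depth_word):
    """Return the 10-bit luma level the encoder sees for a 16-bit word."""
    return (depth_word & 0xFFFF) >> SHIFT


def range_and_precision(depth_unit_m):
    """Return (range in metres, step in metres) for a given depth unit.

    depth_unit_m is a hypothetical parameter: metres per 16-bit depth unit.
    The range is fixed by the 16-bit word, the step by the 10 kept bits.
    """
    max_range = (1 << WORD_BITS) * depth_unit_m
    step = (1 << SHIFT) * depth_unit_m
    return max_range, step


if __name__ == "__main__":
    for unit in (0.001, 0.0001):
        max_range, step = range_and_precision(unit)
        print("unit %.4f m: range %.4f m, step %.4f m" % (unit, max_range, step))
```

Under these assumptions a smaller depth unit shrinks the usable range and the quantization step by the same factor, which is one way to read the range/precision trade-off mentioned above.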

Extending HVD for HEVC support

This is already supported.

Extending NHVE for HEVC support

Extend with new HVE interface for encoder, add synthetic procedurally generated HEVC Main10 P010LE example
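As an illustration of what a procedurally generated P010LE frame could look like, the sketch below builds one frame of a moving greyscale gradient in memory. It is not the NHVE example itself, and the function name and parameters are hypothetical. It assumes the usual P010LE layout: a full-resolution Y plane of 16-bit little-endian words followed by a half-height plane of interleaved U/V words, with the 10-bit sample stored in the upper bits of each word.

```python
import struct


def p010le_grey_frame(width, height, frame_index):
    """Build one P010LE frame: a horizontal grey ramp shifted per frame.

    Hypothetical helper for illustration; width and height must be even.
    """
    if width % 2 or height % 2:
        raise ValueError("width and height must be even for 4:2:0 chroma")

    # 10-bit luma level per column, shifted into the upper bits of the word.
    row = [((x + frame_index) % 1024) << 6 for x in range(width)]
    luma = row * height

    # Neutral chroma (mid level 512) keeps the picture grey.
    # Interleaved UV plane: width words per row, height / 2 rows.
    chroma = [512 << 6] * (width * (height // 2))

    words = luma + chroma
    return struct.pack("<%dH" % len(words), *words)


if __name__ == "__main__":
    frame = p010le_grey_frame(640, 360, frame_index=0)
    print(len(frame), "bytes per frame")
```

Incrementing `frame_index` for each frame moves the ramp sideways, which gives the encoder changing content without needing a camera.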

Extending RNHVE to support depth streaming apart from color/infrared

Rather straightforward. The only problem is that currently RNHVE uses H.264. Possibly separate repository or configurable codec.

Extending UNHVD for depth data or creating separate project that decodes and feeds point cloud data to Unity

A bit involved to keep performance, framerate and low latency.

Probably:
