If you want to skip ahead, please review Repository Structure and Preparatory Steps.

This repository documents my home lab configuration. The original goal of this project was to provide a home server platform where I could run essential services in my home network 24/7/365, as well as provide space for learning and experimentation. I originally began with a Raspberry Pi to run the DNS-based ad blocker Pi-hole, but I quickly outgrew that hardware. I settled on the Intel NUC as the combination of performance, resources, and power efficiency that best fit my needs. The software stack is VMware ESXi as the hypervisor and HashiCorp Nomad as the orchestrator.

My design goals were to use infrastructure as code and immutable infrastructure to address challenges I've experienced in previous lab environments. In the past I would spend significant effort over days, weeks, or months getting an environment set up and configured just right, but lacking a complete record of the steps necessary to recreate it, I would be terrified of messing it up or losing it.

Often I would make some change to the environment and forget what I did or why I did it. Or I would upgrade a software package and later discover it messed something up. Without versioned infrastructure as code it was difficult to recover from an issue, especially if I didn't recall exactly what I had changed. If the problem was serious enough I would resort to rebuilding the system from scratch. Using versioned infrastructure makes it easier to recover from a failure, and easier to perform upgrades safely.

The high level architecture is VMs on ESXi running on an Intel NUC.

- Castle nodes are Nomad/Consul/Vault servers

- NAS provides an NFS volume for persistent storage

I selected ESXi as the hypervisor due to its widespread popularity and compatibility. I originally began with ESXi 6.7 but am now on 7.0 Update 1.

Each of the three Castle nodes runs Nomad, Consul, and Vault to form a highly available cluster of each application.

Nomad serves as a general purpose orchestrator that can handle containers, VMs, Java, scripts, and more. I selected Nomad for its simplicity and ease of use. Nomad supports a server/client mode for development where machines can function as both a server and a client, and this is what I use. Each Castle node operates as a Nomad server and a Nomad client.
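As a rough illustration of the combined server/client mode described above, the following sketch writes a minimal Nomad agent configuration with both stanzas enabled. The file location, `datacenter` name, and `data_dir` are assumptions for illustration, not values taken from this repository.

```shell
# Write a minimal Nomad agent config to a scratch directory.
# Hypothetical values: datacenter name, data_dir, and the output path.
nomad_conf_dir="$(mktemp -d)"
cat > "$nomad_conf_dir/nomad.hcl" <<'EOF'
datacenter = "dc1"
data_dir   = "/opt/nomad/data"

# Every Castle node participates in the server quorum...
server {
  enabled          = true
  bootstrap_expect = 3
}

# ...and also schedules workloads as a client.
client {
  enabled = true
}
EOF
echo "wrote $nomad_conf_dir/nomad.hcl"
```

With `bootstrap_expect = 3`, the servers wait until all three Castle nodes have joined before electing a leader.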

Consul serves as the service catalog. It integrates natively with Nomad to register and deregister services as they are deployed or terminated, provide health checks, and enable secure service-to-service communication. Each Castle node operates as a Consul server; a Consul server can also act as a client agent.

Vault provides secrets management. It integrates natively with Nomad to provide secrets to application workloads. Although Vault 1.4+ can perform its own data storage, I found it simpler in this environment to use Consul as the storage backend for Vault since the nodes will automatically discover each other through the Consul storage backend. Each Castle node operates as a Vault server.
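A minimal sketch of what using Consul as Vault's storage backend can look like is shown below. The Consul address and the `vault/` key prefix are assumptions (they happen to be common defaults), and a real configuration would also need listener and TLS settings that are omitted here.

```shell
# Write only the storage portion of a Vault server config to a scratch directory.
# Assumed values: a local Consul agent on 127.0.0.1:8500 and the "vault/" KV prefix.
vault_conf_dir="$(mktemp -d)"
cat > "$vault_conf_dir/storage.hcl" <<'EOF'
storage "consul" {
  address = "127.0.0.1:8500"
  path    = "vault/"
}
EOF
echo "wrote $vault_conf_dir/storage.hcl"
```

Because every Vault server points at the same Consul cluster, the servers share state and coordinate through Consul rather than needing each other's addresses.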

Some of the applications that will be run in this environment require persistent storage. The datastore needs to be consistent and available to services that are scheduled on any of the Castle nodes. The approach I chose was to leverage Florian Apolloner's NFS CSI Plugin which should work with any NFS share. Since I did not have one I opted to create a storage VM that functions as a virtual Network-attached storage (NAS) device. The VM has a ZFS volume that is optionally mirrored across two physical volumes on the ESXi host. This provides some degree of redundancy in the event of a single disk failure. Additionally, the persistent data could be backed up to a cloud storage solution such as Backblaze or S3 or wherever. The ZFS volume is exposed as an NFS share, and then storage volumes can be created with Nomad via the CSI plugin.

There are Consul and Nomad snapshot agents running that periodically save Consul and Nomad cluster snapshots. Additionally with Vault Enterprise, one could establish a Disaster Recovery cluster on another machine that would contain a complete copy of the Vault cluster data and configuration.

One of the original requirements for this project was to provide a primary LDNS for my home network. That is accomplished with Pi-hole running on Nomad. In the Pi-hole job, Keepalived is used to provide a floating VIP (virtual address). My secondary LDNS is my home router. I've configured it per the Forward DNS for Consul Service Discovery tutorial so it can resolve queries for .consul as well.
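For readers whose router or secondary resolver runs dnsmasq, forwarding `.consul` queries usually comes down to a single line. The sketch below assumes dnsmasq, assumes a Castle node at 192.168.0.101, and assumes Consul's DNS interface is listening on its default port 8600. None of these are confirmed by this repository.

```shell
# Write a dnsmasq drop-in that forwards the .consul domain to Consul DNS.
# Assumed values: resolver is dnsmasq, Consul reachable at 192.168.0.101:8600.
dns_conf_dir="$(mktemp -d)"
cat > "$dns_conf_dir/10-consul.conf" <<'EOF'
# Forward queries for the .consul domain to Consul's DNS interface.
server=/consul/192.168.0.101#8600
EOF
echo "wrote $dns_conf_dir/10-consul.conf"
```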

DNS over HTTPS to Cloudflare is used in the Pi-hole configuration.

- Watching #7430 for Traefik UDP fix

In a cloud environment we would have capabilities like cloud auto join to enable the nodes to discover each other and form a cluster. Since we don't exactly have this capability available in the home lab I resort to static IP addresses. Each node has a designated IP address so they will be able to communicate with each other and form a cluster. This is mainly applicable to Consul, because absent static addresses the Nomad and Vault servers could locate each other with Consul. However the Consul servers would already have to be in place and the simplest way to do that in this lab is with static addresses.
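The static-address approach can be sketched as a Consul server configuration that lists its peers explicitly in `retry_join`. The three addresses below are assumptions chosen to match the 192.168.0.101-106 range used later in this README. Substitute the addresses of your own nodes.

```shell
# Write a Consul server config that joins peers by static IP address.
# Assumed values: three Castle nodes at 192.168.0.101-103.
consul_conf_dir="$(mktemp -d)"
cat > "$consul_conf_dir/server.hcl" <<'EOF'
server           = true
bootstrap_expect = 3
retry_join       = ["192.168.0.101", "192.168.0.102", "192.168.0.103"]
EOF
echo "wrote $consul_conf_dir/server.hcl"
```

Once the Consul servers have formed a cluster this way, Nomad and Vault can find their own peers through Consul instead of needing their own static lists.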

In a cloud environment we would have primitives like auto scaling groups that facilitate dynamic infrastructure and make immutable upgrades effortless. Since we don't exactly have this capability available in the home lab, I use a blue/green approach for upgrades. We designate three blue nodes and three green nodes. We begin by provisioning the "blue" nodes. When it comes time for an upgrade, we update our base images and provision the "green" nodes. Once we've verified the green nodes are healthy, we drain the blue nodes and destroy them, leaving just the new green nodes. If something goes wrong or there is a problem with the upgrade, we still have our original blue nodes. We can remove the green nodes and try again. Repeat.
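The drain step of the blue/green cycle might look something like the sketch below. It is a dry run by default (commands are printed, not executed), the node IDs are hypothetical placeholders, and it covers only the Nomad client side. Removing the old servers from the Consul, Nomad, and Vault clusters is not shown.

```shell
# Dry run by default: set DRY_RUN=0 to actually execute the commands.
DRY_RUN="${DRY_RUN:-1}"

# Print the command in dry-run mode, otherwise run it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Drain each old (blue) Nomad client so its allocations move to the green nodes.
retire_nodes() {
  for node_id in "$@"; do
    run nomad node drain -enable -yes "$node_id"
  done
}

# Check cluster membership first, then drain the blue nodes.
run consul members
run nomad server members
retire_nodes blue-1 blue-2 blue-3   # hypothetical node IDs
```

Keeping the blue VMs around until the drain has completed preserves the rollback path described above.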

These guides serve as a great starting point and are what I have followed:

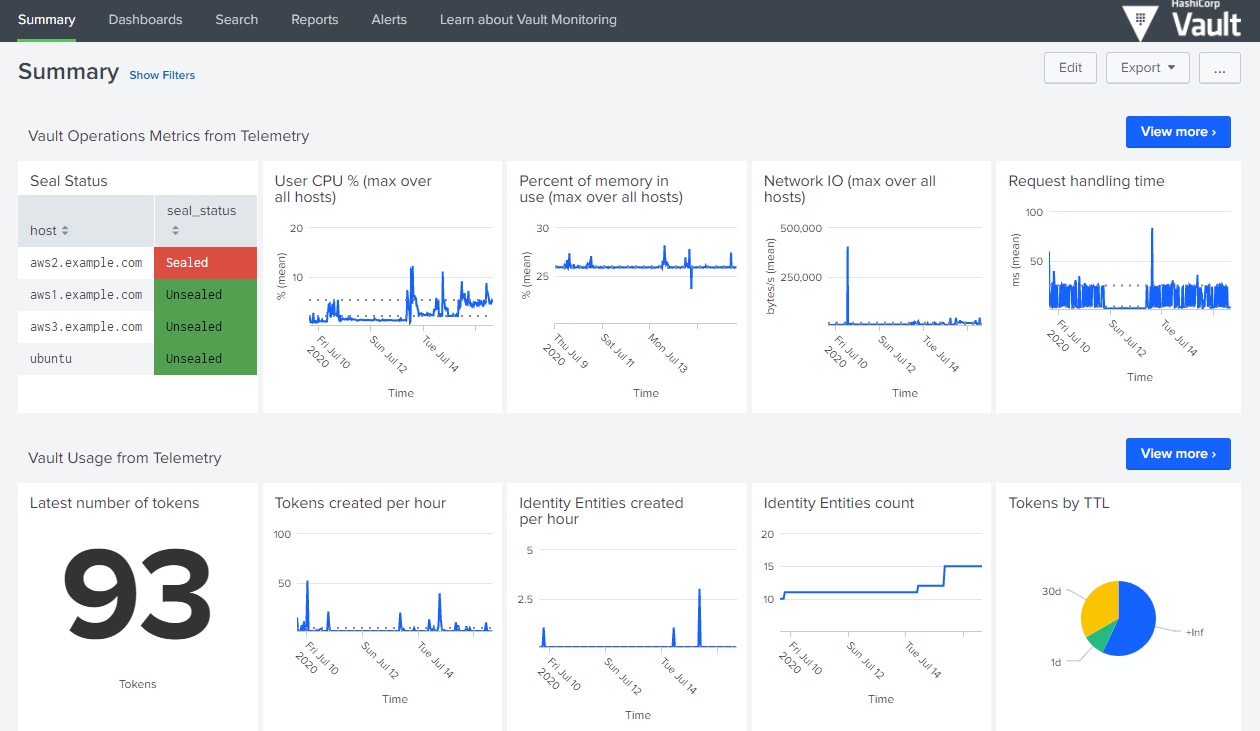

- Monitor Telemetry & Audit Device Log Data

- Vault Cluster Monitoring (specifically the PDF)

- Monitor Consul Datacenter Health with Telegraf

There are additional details in the nomad-jobs README.

If you would like to avoid repeated certificate warnings in your browser and elsewhere, you can build your own certificate authority and add the root certificate to the trust store on your machines. Once you’ve done that, Vault can issue trusted certificates for other hosts or applications, like your ESXi host for example. Later, when configuring Traefik, you can provide it with a wildcard certificate from Vault or configure Traefik's Let’s Encrypt provider to obtain a publicly trusted certificate.

Note: If you do not add your root certificate to the system trust store you’ll need to add VAULT_SKIP_VERIFY to the Nomad server

The idea with Traefik is easy ingress: Traefik handles TLS automatically at the edge, and Consul Connect sidecars add automatic TLS between services, so everything is encrypted with a couple of lines of config in the Nomad job.

In a cloud environment we would have machine identity provided by the platform that we could directly leverage for authentication and access to secrets. Since we don't have that capability in this "on prem" lab, we can use Vault's AppRole authentication method in a trusted orchestrator model to facilitate the secure introduction of secrets to our server nodes.

I looked for low power consumption systems with a large number of logical cores. I selected the 2018 Intel NUC8i7BEH, which provides 8 logical cores and pretty good performance relative to its cost and power consumption. It supports up to 64 GB RAM and provides two internal drive bays, as well as support for external storage via a Thunderbolt 3 interface. The basic elements of the lab do not require all that much storage; 500 GB would be sufficient. However I would recommend at least 1 TB over two physical volumes to provide adequate room for troubleshooting, recovery, experimentation, and growth.

Here's a terrific blog post that provides an overview comparison of the various Intel NUC models:

This repo is composed of two parts:

- ESXi Home Lab infrastructure

- Nomad Jobs

The high level steps are:

- Install and configure ESXi.

- Use the Packer template to create a base image on your ESXi host. See Packer README for specific steps.

- Use the Terraform code to provision server VMs on your ESXi host using the base image as a template. See Terraform README for specific steps.

- Use the Nomad job files to run services on the cluster. See README for specifics.

Note: The steps that I've documented here may not be the best way of doing things; my goal with this project is to learn.

I recommend starting a Vault instance before continuing on with these steps. It will be easier to use these configs as they expect the secrets to be in Vault. Once you have a provisional Vault server running, you can build your CA, create an AppRole, and store passwords needed by the Packer templates. Later, when your real cluster is ready you can migrate the provisional Vault data to the real one.

In my environment at that stage I configured the real cluster as a DR secondary, allowing Vault to replicate all secrets and configuration to it, and then designated it the primary. I then configured the original provisional cluster as a DR secondary. Alternatively, you could migrate using vault operator migrate, following the Storage Migration tutorial but going in the opposite direction.

There is a simple Vagrantfile that you can use to spin up a single node Vault server here.

- Create the trusted orchestrator policy. This will be used by Terraform to issue wrapped SecretIDs to the nodes during the provisioning process.

      vault policy write trusted-orchestrator -<<EOF
      # the ability to generate wrapped secretIDs for the nomad server bootstrap approle
      path "auth/approle/role/bootstrap/secret*" {
        capabilities = [ "create", "read", "update" ]
        min_wrapping_ttl = "100s"
        max_wrapping_ttl = "600s"
      }

      # required for vault terraform provider to function
      path "auth/token/create" {
        capabilities = ["create", "update"]
      }

      # required to create
      path "auth/token/lookup-accessor" {
        capabilities = ["update"]
      }

      # required to destroy
      path "auth/token/revoke-accessor" {
        capabilities = ["update"]
      }
      EOF

- Issue a Vault token for your Terraform workspace and set it as the VAULT_TOKEN environment variable in that workspace. In Terraform Cloud this variable should be marked as Sensitive.

      vault token create -orphan \
        -display-name=trusted-orchestrator-terraform \
        -policy=trusted-orchestrator \
        -explicit-max-ttl=4320h \
        -ttl=4320h

- Create a nomad server policy and Vault token role configuration as outlined in the Nomad Vault Configuration Documentation.

- Create an AppRole called bootstrap to be used in the build process by following the AppRole Pull Authentication Learn guide. The bootstrap role should have the nomad-server policy assigned, as well as a policy similar to the one below for authorization to retrieve a certificate (substitute your path names, and add your particular cloud credential if using a cloud auto unseal):

      vault policy write pki -<<EOF
      path "pki/intermediate/issue/hashidemos-io" {
        capabilities = [ "update" ]
      }

      path "auth/token/create" {
        capabilities = ["create", "read", "update", "list"]
      }
      EOF

Here is how to create the bootstrap role:

      vault write auth/approle/role/bootstrap \
        secret_id_bound_cidrs="192.168.0.101/32","192.168.0.102/31","192.168.0.104/31","192.168.0.106/32" \
        secret_id_num_uses=1 \
        secret_id_ttl=420s \
        token_bound_cidrs="192.168.0.101/32","192.168.0.102/31","192.168.0.104/31","192.168.0.106/32" \
        token_period=259200 \
        token_policies="consul-client-tls,consul-server-tls,gcp-kms,nomad-server,pki"

Please check the docs to understand what the above parameters do.
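The CIDR lists in the bootstrap role can be confusing at first glance, since they mix /32 and /31 blocks. A /32 matches a single address and a /31 matches an aligned pair. The helper below is a small illustrative sketch (not part of this repository) that expands each block so you can confirm the four entries cover exactly 192.168.0.101 through 192.168.0.106.

```shell
# Print every IPv4 address covered by a CIDR block (intended for small blocks).
# Runs in a subshell so its variables do not leak into the caller.
cidr_hosts() (
  ip="${1%/*}"
  prefix="${1#*/}"
  IFS=. read -r a b c d <<EOF
$ip
EOF
  base=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  size=$(( 1 << (32 - prefix) ))
  # Mask off the host bits to find the first address in the block.
  start=$(( base & ~(size - 1) ))
  i=0
  while [ "$i" -lt "$size" ]; do
    n=$(( start + i ))
    echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
    i=$(( i + 1 ))
  done
)

# Expand the four blocks used in the bootstrap role.
for cidr in 192.168.0.101/32 192.168.0.102/31 192.168.0.104/31 192.168.0.106/32; do
  cidr_hosts "$cidr"
done
```

Using two /31 blocks in the middle keeps the list short while still excluding 192.168.0.100 and 192.168.0.107, which a single wider block would let in.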

- Save the RoleID in a file called role_id and place it in the castle files directory.

The SecretID is generated and delivered by Terraform as defined in main.tf, and after booting the RoleID and wrapped SecretID are used by Vault Agent to authenticate to Vault and retrieve a token, using this Vault Agent config.
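To make the flow above more concrete, here is a hedged sketch of a Vault Agent auto-auth configuration for AppRole with a response-wrapped SecretID. It is not the config used in this repository. The file paths and the Vault address are hypothetical, while the wrapping path follows from the bootstrap role name used above.

```shell
# Write an illustrative Vault Agent config to a scratch directory.
# Hypothetical values: all file paths and the Vault address.
agent_conf_dir="$(mktemp -d)"
cat > "$agent_conf_dir/agent.hcl" <<'EOF'
vault {
  address = "https://vault.example.internal:8200"
}

auto_auth {
  method "approle" {
    config = {
      role_id_file_path                   = "/etc/vault-agent.d/role_id"
      secret_id_file_path                 = "/etc/vault-agent.d/wrapped_secret_id"
      # Only accept a SecretID that was wrapped by this exact endpoint.
      secret_id_response_wrapping_path    = "auth/approle/role/bootstrap/secret-id"
      remove_secret_id_file_after_reading = true
    }
  }

  sink "file" {
    config = {
      path = "/etc/vault-agent.d/token"
    }
  }
}
EOF
echo "wrote $agent_conf_dir/agent.hcl"
```

Setting `secret_id_response_wrapping_path` makes the agent unwrap the SecretID itself and reject a wrapping token created by any other path, which is the main protection the trusted orchestrator model relies on.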

Docs:

- https://learn.hashicorp.com/tutorials/vault/pattern-approle?in=vault/recommended-patterns

- https://www.nomadproject.io/docs/integrations/vault-integration

- Follow the Build Your Own Certificate Authority guide to generate your root and intermediate CAs.

- Save the root certificate as root.crt in the castle files directory.

- Update the cert.tpl and key.tpl Vault Agent templates in the castle files directory with your host/domain/role names. These templates are for the provisioner script that runs in Terraform. It runs Vault Agent once to authenticate to Vault and issue certificates for the Vault servers to use.

- Follow either the Secure Consul Agent Communication with TLS Encryption or the Generate mTLS Certificates for Consul with Vault guide to bootstrap your Consul CA. The first uses Consul's built-in CA whereas the second uses Vault as the CA for Consul. I began with Consul's built-in CA but am using Vault in the current version of this repository.

- Save the Consul CA certificate as consul-agent-ca.pem in the castle files directory.

- Update the consul-server-cert.tpl, consul-server-key.tpl, consul-client-cert.tpl, and consul-client-key.tpl Vault Agent templates in the castle files directory with your role name. Once again these templates are for the provisioner script that runs in Terraform. It runs Vault Agent once to authenticate to Vault and issue certificates for the Consul servers to use.

Note: Per Jeff Mitchell, if you want the full CA chain output from your intermediate CA, you should upload the root cert along with the signed intermediate CA cert when you use set-signed. I just appended the root certificate to the signed intermediate certificate.
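Appending the root certificate to the signed intermediate is a plain file concatenation. The sketch below uses placeholder files so it can run anywhere. In practice you would use your real PEM files. The file names are hypothetical, and the `pki/intermediate` mount path in the commented command is an assumption inferred from the policy shown earlier.

```shell
# Build a chain file: signed intermediate first, then the root.
chain_dir="$(mktemp -d)"

# Placeholder PEM files standing in for the real certificates.
printf '%s\n' '-----BEGIN CERTIFICATE-----' 'intermediate-placeholder' '-----END CERTIFICATE-----' \
  > "$chain_dir/intermediate.cert.pem"
printf '%s\n' '-----BEGIN CERTIFICATE-----' 'root-placeholder' '-----END CERTIFICATE-----' \
  > "$chain_dir/root.crt"

cat "$chain_dir/intermediate.cert.pem" "$chain_dir/root.crt" > "$chain_dir/intermediate-chain.pem"

# With real certificates, the combined file would then be uploaded (not run here):
#   vault write pki/intermediate/intermediate/set-signed certificate=@intermediate-chain.pem
echo "wrote $chain_dir/intermediate-chain.pem"
```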

Note: generate_lease must be true for any PKI roles that you will be using from within template stanzas in Nomad jobs, per this note in the Nomad documentation.

- Configure your desired seal stanza in the Vault configuration file. This stanza is optional; if it is not configured, Vault uses the Shamir algorithm to cryptographically split the master key into key shares. I happen to use GCP KMS, which requires GCP credentials to be placed on each Vault server. There are a variety of ways to accomplish this. I have them retrieved from Vault and rendered onto the file system by Vault Agent in setup_castle.tpl, which is run as a provisioner (I know :-D) in the Terraform code.

- Search and replace all IP addresses to match your private network address range.

- If you do not have enterprise licenses, search for the enterprise packages and replace them with their oss equivalents.
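For the seal stanza step in the list above, a GCP KMS auto-unseal configuration might look roughly like the following. Every value here (credentials path, project, region, key ring, and key name) is a placeholder and not taken from this repository.

```shell
# Write an illustrative gcpckms seal stanza to a scratch directory.
# Hypothetical values throughout; substitute your own GCP resources.
seal_conf_dir="$(mktemp -d)"
cat > "$seal_conf_dir/seal.hcl" <<'EOF'
seal "gcpckms" {
  credentials = "/etc/vault.d/gcp-credentials.json"
  project     = "my-gcp-project"
  region      = "global"
  key_ring    = "vault-keyring"
  crypto_key  = "vault-unseal-key"
}
EOF
echo "wrote $seal_conf_dir/seal.hcl"
```

The `credentials` file is the piece that has to exist on each Vault server before it starts, which is why the list above has Vault Agent render it during provisioning.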

If you create a K3s instance, you can follow the steps I used to perform WAN Federation between the primary Consul data center on the Castle nodes and a secondary Consul data center on K3s. There is a Mesh Gateway Nomad job in the nomad-jobs folder. The Consul Helm values and other configurations are located in the k8s folder.

- When looking to improve this overall architecture I will be looking at adding some automated pipelines.

Nomad

Consul

Terraform Cloud

Going to need these at one point or another: