This repository contains the source code for the Lokole project by the Canadian-Congolese non-profit Ascoderu. The Lokole project consists of two main parts: an email client and an email server.

The Lokole email client is a simple application that offers functionality like:

- Self-service creation of user accounts

- Read emails sent to the account

- Write emails including rich formatting

- Send attachments

All emails are stored in a local SQLite database. Once per day, the emails that were written during the past 24 hours get exported from the database, stored in a JSON file, compressed and uploaded to a location on Azure Blob Storage. The Lokole Server picks up these JSON files, manages the actual mailboxes for the users on the Lokole and sends new emails back to the Lokole by using the same compressed file exchange format.
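The exchange format can be illustrated with a minimal sketch, assuming one JSON object per line and plain gzip compression. The helper names `pack_emails` and `unpack_emails` and the example addresses are hypothetical and are not taken from the Lokole code base. The upload to Azure Blob Storage is left out.

```python
import gzip
import json


def pack_emails(emails):
    """Serialize emails as gzip-compressed JSON Lines (one object per line)."""
    lines = "".join(json.dumps(email) + "\n" for email in emails)
    return gzip.compress(lines.encode("utf-8"))


def unpack_emails(payload):
    """Inverse of pack_emails: return one dict per non-empty line."""
    text = gzip.decompress(payload).decode("utf-8")
    return [json.loads(line) for line in text.split("\n") if line.strip()]


# Hypothetical email written on the device during the past 24 hours.
outbox = [
    {
        "sent_at": "2020-01-15 08:30",
        "to": ["friend@example.com"],
        "from": "user@example.com",
        "subject": "Hello",
        "body": "<p>Hello from the Lokole</p>",
    },
]

payload = pack_emails(outbox)
restored = unpack_emails(payload)
```

Because every email sits on its own line, the receiving side can process a package line by line without parsing the whole file as one document.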

The Lokole email application is intended to run on low-spec Raspberry Pi 3 hardware (or similar). Read the "Production setup" section below for further information on how to set up the client devices.

The Lokole email server has two main responsibilities:

- Receive emails from the internet that are addressed to Lokole users and forward them to the appropriate Lokole device.

- Send new emails created by Lokole users to the rest of the internet.

Email is at the core of our modern life, letting us keep in touch with friends and family, connecting us to our business partners and fostering innovation through exchange of information.

However, in many parts of the developing world, email access is not widespread, usually because bandwidth costs are prohibitively high compared to local purchasing power. For example, in the Democratic Republic of the Congo (DRC) only 3% of the population have access to email, which leaves 75 million people unconnected.

The Lokole is a project by the Canadian-Congolese non-profit Ascoderu that aims to address this problem by tackling it from three perspectives:

- The Lokole is an email client that only uses bandwidth on a schedule. This reduces the cost of service as bandwidth can now be purchased when the cost is lowest. For example, in the DRC, $1 purchases only 65 MB of data during peak hours. At night, however, the same amount of money buys 1 GB of data.

- The Lokole uses an efficient data exchange format plus compression so that it uses minimal amounts of bandwidth, reducing the cost of service. All expensive operations (e.g. creating and sending of emails with headers, managing mailboxes, etc.) are performed on a server in a country where bandwidth is cheap.

- The Lokole only uses bandwidth in batches. This means that the cost of service can be spread over many people and higher savings from increased compression ratios can be achieved. For example, individually purchasing bandwidth for $1 to check emails is economically unviable for most people in the DRC. However, the same $1 can buy enough bandwidth to provide email for hundreds of people via the Lokole. Spreading the cost in this way makes email access sustainable for local communities.
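The arithmetic behind these figures can be worked through in a few lines. This is only an illustration: the prices are the ones quoted above, 1 GB is taken as 1024 MB, and the group size of 200 users is a hypothetical stand-in for "hundreds of people".

```python
PEAK_MB_PER_DOLLAR = 65      # quoted peak-hour price in the DRC
NIGHT_MB_PER_DOLLAR = 1024   # quoted night price, taking 1 GB as 1024 MB


def price_advantage(peak_mb, off_peak_mb):
    """How many times more data one dollar buys off-peak than at peak."""
    return off_peak_mb / peak_mb


def cost_per_user(budget_dollars, users):
    """Cost per person when one purchase is shared by a group."""
    return budget_dollars / users


advantage = price_advantage(PEAK_MB_PER_DOLLAR, NIGHT_MB_PER_DOLLAR)
shared_cost = cost_per_user(1.0, 200)  # hypothetical group of 200 users

print(f"Night-time data is about {advantage:.1f}x cheaper")  # about 15.8x
print(f"Shared cost per user: ${shared_cost:.3f}")           # $0.005
```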

Below is a list of some of the key technologies used in the Lokole project:

- Connexion is the web framework for the Lokole email server API.

- Flask is the web framework for the Lokole email client application.

- Dnsmasq and hostapd are used to set up a WiFi access point on the Lokole device via which the Lokole email client application is accessed.

- WvDial is used to access the internet on the Lokole device to synchronize emails with the Lokole email server.

- Celery is used to run background workers of the Lokole email server, backed by Azure ServiceBus (production) or RabbitMQ (development). Celery is also used to run background workers and scheduled tasks on the Lokole email client application, backed by SQLAlchemy.

- Libcloud is used to store emails in Azure Storage (production) or Azurite (development).

- Sendgrid Inbound Parse is used to receive emails from email providers and forward them to the Lokole email server. Sendgrid Web API v3 is used to deliver emails from the Lokole email server to email providers. The MX records for Sendgrid are automatically generated via Cloudflare API v4.

- Github API v4 is used to authenticate interactive calls to the Lokole email server API such as registering new clients or managing existing clients. Authorization is managed by Github team memberships on the Ascoderu organization. Management operations are exposed via the Lokole status page which is implemented in React with Ant Design.

- Github Actions are used to verify pull requests and deploy updates to production.

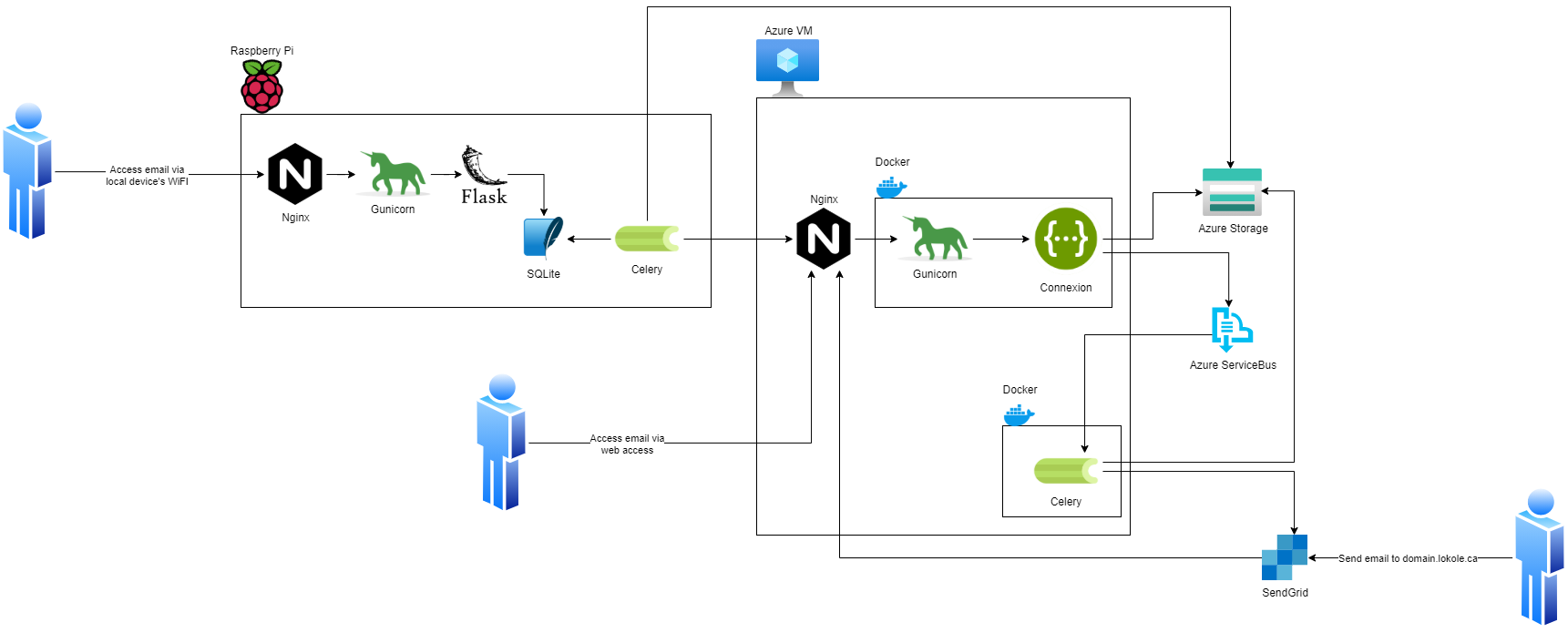

The diagram below shows the technologies in the context of the system as well as their interactions:

The key data flows and client/server interactions of the system are documented in the diagrams below.

The Lokole cloud server and the Lokole email application communicate through a protocol based on gzipped jsonl files uploaded to Azure Blob Storage. Each file contains one JSON object per line. Each JSON object describes an email, using the following schema:

    {
      "sent_at": "yyyy-mm-dd HH:MM",
      "to": ["email"],
      "cc": ["email"],
      "bcc": ["email"],
      "from": "email",
      "subject": "string",
      "body": "html",
      "attachments": [{"filename": "string", "content": "base64", "cid": "string"}]
    }

First, install the system dependencies:

Second, get the source code.

    git clone git@github.com:ascoderu/lokole.git
    cd lokole

Third, build the project images. This will also verify your checkout by running the unit tests and other CI steps such as linting:

    make build

You can now run the application stack:

    make start logs

There are OpenAPI specifications that document the functionality of the application and provide references to the entry points into the code (look for the yaml files in the swagger directory). The various APIs can also be easily called via the testing console that is available by adding /ui to the end of the API's URL. Sample workflows are shown in the integration tests folder and can be run via:

    # run the services, wait for them to start
    make build start

    # in another terminal, run the integration tests
    # the integration tests also serve the purpose of
    # seeding the system with some test data
    # you can access the email service at http://localhost:8080
    # you can access the email client at http://localhost:5000
    # you can access the status page at http://localhost:3000
    make integration-tests test-emails

    # finally, tear down the services
    make stop

The state of the system can be inspected via:

    # run the development tools and then
    # view storage state at http://localhost:10001
    # view database state at http://localhost:8882
    # view queue state at http://localhost:5555
    make start-devtools

Note that by default the application is run in a fully local mode, without leveraging any cloud services. For most development purposes this is fine, but if you wish to set up the full end-to-end stack that leverages the same services as we use in production, keep on reading.
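When working in the local mode, it can be handy to check which of the local endpoints mentioned above are answering. The following is a minimal sketch using only the Python standard library. The names `LOCAL_ENDPOINTS`, `is_reachable` and `check_endpoints` are hypothetical helpers and not part of the project's tooling.

```python
import http.client
import urllib.error
import urllib.request

# URLs as listed in the comments of the make targets above.
LOCAL_ENDPOINTS = {
    "email service": "http://localhost:8080",
    "email client": "http://localhost:5000",
    "status page": "http://localhost:3000",
    "storage state": "http://localhost:10001",
    "database state": "http://localhost:8882",
    "queue state": "http://localhost:5555",
}


def is_reachable(url, timeout=2.0):
    """Return True if an HTTP server answers at the URL, even with an error status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        return True  # the server is up but replied with a 4xx or 5xx status
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        return False


def check_endpoints(endpoints, probe=is_reachable):
    """Map each endpoint name to whether its URL could be reached."""
    return {name: probe(url) for name, url in endpoints.items()}
```

Calling `check_endpoints(LOCAL_ENDPOINTS)` after the services have started returns a dictionary such as `{"email service": True, ...}`. The `probe` parameter makes it possible to swap in a fake check when no services are running.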

The project uses Sendgrid, so to emulate a full production environment, follow these Sendgrid setup instructions to create a free account, authenticate your domain, and create an API key with at least Inbound Parse and Mail Send permissions.

The project uses Cloudflare to automate DNS management whenever new Lokole clients are set up. Create an account, set your domain to be managed by Cloudflare and look up the Cloudflare Global API Key.

The project also makes use of a number of Azure services such as Blobs, Tables, Queues, Application Insights, and so forth. To set up all the required cloud resources programmatically, you'll need to create a service principal by following these Service Principal instructions. After you have created the service principal, you can run the Docker setup script to initialize the required cloud resources.

    cat > ${PWD}/secrets/sendgrid.env << EOM
    LOKOLE_SENDGRID_KEY={the sendgrid key you created earlier}
    EOM

    cat > ${PWD}/secrets/cloudflare.env << EOM
    LOKOLE_CLOUDFLARE_USER={your cloudflare user account email address}
    LOKOLE_CLOUDFLARE_KEY={your cloudflare global api key}
    EOM

    cat > ${PWD}/secrets/users.env << EOM
    OPWEN_SESSION_KEY={some secret for user session management}
    LOKOLE_REGISTRATION_USERNAME={some username for the registration endpoint}
    LOKOLE_REGISTRATION_PASSWORD={some password for the registration endpoint}
    EOM

    docker-compose -f ./docker-compose.yml -f ./docker/docker-compose.setup.yml build setup

    docker-compose -f ./docker-compose.yml -f ./docker/docker-compose.setup.yml run --rm \
      -e SP_APPID={appId field of your service principal} \
      -e SP_PASSWORD={password field of your service principal} \
      -e SP_TENANT={tenant field of your service principal} \
      -e SUBSCRIPTION_ID={subscription id of your service principal} \
      -e LOCATION={an azure location like eastus} \
      -e RESOURCE_GROUP_NAME={the name of the resource group to create or reuse} \
      -v ${PWD}/secrets:/secrets \
      setup ./setup.sh

The secrets to access the Azure resources created by the setup script will be stored in files in the secrets directory. Other parts of the project's tooling (e.g. docker-compose) depend on these files so make sure to not delete them.
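Before running the setup script, it may help to confirm that the hand-written secrets files are in place. The sketch below is a hypothetical helper, not part of the repository. It only knows about the three files and the variable names shown above, and it does not cover the files that the setup script generates itself.

```python
from pathlib import Path

# File names and keys as they appear in the commands above.
EXPECTED_KEYS = {
    "sendgrid.env": ["LOKOLE_SENDGRID_KEY"],
    "cloudflare.env": ["LOKOLE_CLOUDFLARE_USER", "LOKOLE_CLOUDFLARE_KEY"],
    "users.env": [
        "OPWEN_SESSION_KEY",
        "LOKOLE_REGISTRATION_USERNAME",
        "LOKOLE_REGISTRATION_PASSWORD",
    ],
}


def read_env_file(path):
    """Parse simple KEY=value lines, ignoring blank lines and comments."""
    values = {}
    for line in Path(path).read_text(encoding="utf-8").splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def missing_secrets(secrets_dir, expected=EXPECTED_KEYS):
    """Return a list of human-readable problems; an empty list means all is well."""
    problems = []
    for filename, keys in expected.items():
        path = Path(secrets_dir) / filename
        if not path.is_file():
            problems.append(f"{filename}: file not found")
            continue
        values = read_env_file(path)
        for key in keys:
            if not values.get(key):
                problems.append(f"{filename}: {key} is missing or empty")
    return problems
```

For example, `missing_secrets("secrets")` run from the repository root lists every expected file or key that is absent.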

To set up a production-ready deployment of the system, follow the development setup scripts described above, but additionally also pass the following environment variables to the Docker setup script:

- DEPLOY_COMPUTE: Must be set to k8s to toggle the Kubernetes deployment mode.

- KUBERNETES_RESOURCE_GROUP_NAME: The resource group into which to provision the Azure Kubernetes Service cluster.

- KUBERNETES_NODE_COUNT: The number of VMs to provision into the cluster. This should be an odd number and can be dynamically changed later via the Azure CLI.

- KUBERNETES_NODE_SKU: The type of VMs to provision into the cluster. This should be one of the supported Linux VM sizes.

The script will then provision a cluster in Azure Kubernetes Service and install the project via Helm. The secrets to connect to the provisioned cluster will be stored in the secrets directory.

As an alternative to the Kubernetes deployment, a Virtual Machine may also be provisioned to run the services by passing the following environment variables to the Docker setup script:

- DEPLOY_COMPUTE: Must be set to vm to toggle the Virtual Machine deployment mode.

- VM_RESOURCE_GROUP_NAME: The resource group into which to provision the Azure Virtual Machine.

- VM_SKU: The type of VM to provision. This should be one of the supported Linux VM sizes.
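The rules for the two deployment modes can be summarized in a small validation sketch. The function `validate_deploy_settings` is hypothetical and is not part of the setup script. It assumes that every variable listed for a mode is required, which the text above implies but does not state outright.

```python
def validate_deploy_settings(env):
    """Check production deployment variables; return a list of problems."""
    mode = env.get("DEPLOY_COMPUTE")
    if mode == "k8s":
        required = [
            "KUBERNETES_RESOURCE_GROUP_NAME",
            "KUBERNETES_NODE_COUNT",
            "KUBERNETES_NODE_SKU",
        ]
    elif mode == "vm":
        required = ["VM_RESOURCE_GROUP_NAME", "VM_SKU"]
    else:
        return [f"DEPLOY_COMPUTE must be 'k8s' or 'vm', got {mode!r}"]

    # Assumption: all variables listed for the chosen mode must be non-empty.
    errors = [
        f"{name} is required when DEPLOY_COMPUTE={mode}"
        for name in required
        if not env.get(name)
    ]

    count = env.get("KUBERNETES_NODE_COUNT")
    if mode == "k8s" and count:
        if not count.isdigit() or int(count) < 1:
            errors.append("KUBERNETES_NODE_COUNT must be a positive integer")
        elif int(count) % 2 == 0:
            errors.append("KUBERNETES_NODE_COUNT should be an odd number")
    return errors


# Example values are placeholders chosen for illustration only.
example = {
    "DEPLOY_COMPUTE": "k8s",
    "KUBERNETES_RESOURCE_GROUP_NAME": "my-lokole-cluster",
    "KUBERNETES_NODE_COUNT": "3",
    "KUBERNETES_NODE_SKU": "Standard_DS2_v2",
}
problems = validate_deploy_settings(example)
```

Passing `os.environ` instead of the example dictionary would check the variables of the current shell before they are handed to the Docker setup script.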

There is a script to set up a new Lokole email client. The script will install the email app in this repository as well as standard infrastructure like nginx and gunicorn. The script will also make ready peripherals like the USB modem used for data exchange, and set up any required background jobs such as the email synchronization cron job.

The setup script assumes that you have already set up:

- 3 Azure Storage Accounts, general purpose: for the cloudserver to manage its queues, tables and blobs.

- 1 Azure Storage Account, blob storage: for the cloudserver and email app to exchange email packages.

- 1 Application Insights account: to collect logs from the cloudserver and monitor its operations.

- 1 SendGrid account: to send and receive emails in the cloudserver.

The setup script is tested with hardware:

- Raspberry Pi 3 running Raspbian Jessie lite v2016-05-27, v2017-01-11, v2017-04-10, and v2017-11-29.

- Orange Pi Zero running Armbian Ubuntu Xenial

The setup script is also tested with USB modems:

The setup script installs the latest version of the email app published to PyPI. New versions get automatically published to PyPI (via Travis) whenever a new release is created on Github.

You can run the script on your client device like so:

    curl -fsO https://raw.githubusercontent.com/ascoderu/lokole/master/install.py && \
      sudo python3 install.py <client-name> <sim-type> <sync-schedule> <registration-credentials>

To translate Lokole to a new language, install Python, Babel and a translation editor such as poedit. Then follow the steps below.

    # set this to the ISO 639-1 language code for which you are adding the translation
    export language=ln

    # generate the translation file
    pybabel init -i babel.pot -d opwen_email_client/webapp/translations -l "${language}"

    # fill-in the translation file
    poedit "opwen_email_client/webapp/translations/${language}/LC_MESSAGES/messages.po"

    # finalize the translation file
    pybabel compile -d opwen_email_client/webapp/translations