-

Notifications

You must be signed in to change notification settings - Fork 309

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Add a priority queue implementation built on top of Heap

#51

Conversation

|

This looks really good! @timvermeulen Could you lend a second pair of eyes and review the heap logic?

I agree. I don't think the separate It might be nice to add a few convenience APIs for bulk insertion/creation:

I think this would be the most profitable next step to take. It'd be great to see how the performance of |

|

Super to see this in progress! Just one quick initial comment/question while browsing, push/pop is documented as |

The implementation does skip levels (see |

|

Ok, thanks for clarification! |

|

In the case of |

Maybe let q: PriorityQueue = ...

for elt in q.minHeap {

// iterates from min to max

}

for elt in q.maxHeap {

// iterates from max to min

}wdyt? |

Another approach I was mulling over is exposing separate functions for creating the iterator (e.g. I think exposing a view that conforms to |

If we can provide a bidirectional collection view I think that'd work great: for elt in q.sortedElements {

// iterates from min to max

}

for elt in q.sortedElements.reversed() {

// iterates from max to min

}However, it wasn't clear to me if we're able to provide an efficient bidirectional view over the heap. |

Would the priority queue be able to conform to the Collection protocol? Because min and max values are determined during the remove operation, the priority queue does not lend itself easily to random access of elements via indexes.

I like this method of traversing the priority queue, as it is the least ambiguous.

In this case, the iterator could default to iterating from min to max (or max to min if that makes more sense) |

|

I don’t think the iterator necessarily should default to any sorted order - it could just provide “some” order unless asked for sorted / reversed as suggested? Just trying to find reuse of existing protocols if possible if performant and natural (that being said, can’t say whether efficiency would be good - but just wanted to point out that there is an existing interface that might be useful) - that would also argue for popFirst/popLast methods for dequeueing from respective ends |

This was my impression.

I think both could be useful: let q: PriorityQueue<Foo> = ...

let heap: [Element] = q.heap // direct access to the underlying heap array

for elt in q.minToMax { ... } // a sequence that iterates from min to max

for elt in q.maxToMin { ... } // a sequence that iterates from max to minDirect access to the underlying heap array could be useful if want to efficiently hand off all the elements to an API that expects an Array if order isn't important. |

I feel that exposing the underlying heap to the developer would introduce unnecessary complexity, and a separate function could return an array view of the priority queue. Also, because elements of the highest priority are at the front of the priority queue, another way to conform to the Sequence protocol would be like this let q: PriorityQueue = ...

for elt in q {

// iterates from max to min

}

for elt in q.reversed() {

// iterates from min to max

}While I think this is the most straightforward way to traverse the priority queue, I'm not sure how intuitive it is for others. |

|

I think conforming to

I think we should expose it as

Because we're using a min-max heap, the lowest-priority element is at the front of the backing array not the highest.

I'm still in the camp of not adding conformance to Meta question: does this sort of discussion belong in the forums or would we rather it happen here? |

I can get behind this idea as well!

Maybe have something like this? The priority queue would not conform to the Sequence protocol, yet rather have whatever let q: PriorityQueue = ...

for elt in q.orderedIncreasing() {

// iterates from min to max

}

for elt in q.orderedDecreasing() {

// iterates from max to min

}Assuming we are exposing the underlying heap as For brevity, however, it might be better just to have it as

I think discussion here is better, as we would be able to see code changes and implementation suggestions all in one place. |

|

Other possible naming options: |

I also like riffing off of That would leave us with: let q: PriorityQueue<Element> = ...

let heap: [Element] = q.unordered // direct read-only access to the underlying heap array

for elt in q.ascending { ... } // a sequence that iterates from min to max

for elt in q.descending { ... } // a sequence that iterates from max to minI think I still prefer

I think this discussion is working well in this thread—we seem to be making progress and converging. If we find ourselves at a stalemate, that might be a good time to gather more opinions by bringing it to the forums. And before we merge the PR, it'd prob be good to solicit feedback and do some kind of API review on the forums. |

|

@AmanuelEphrem Do you want to work on implementing extension PriorityQueue {

public struct Iterator: IteratorProtocol, Sequence {

private var _base: PriorityQueue

private let _direction: IterationDirection

[…]

}

var ascending: Iterator {

Iterator(_base: Self, _direction: .ascending)

}

var descending: Iterator {

Iterator(_base: Self, _direction: .descending)

}

}I'll work on adding the performance tests. |

Yes! I was thinking of the exact same implementation as well. |

| input: [Int].self | ||

| ) { input in | ||

| return { timer in | ||

| var queue = PriorityQueue(input) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Should this go above the closure (so that we're not also timing the instantiation)?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I think it should still stay within the outer closure, but outside the inner closure (essentially swapping lines 54 and 55) so it stays consistent with the other benchmarks.

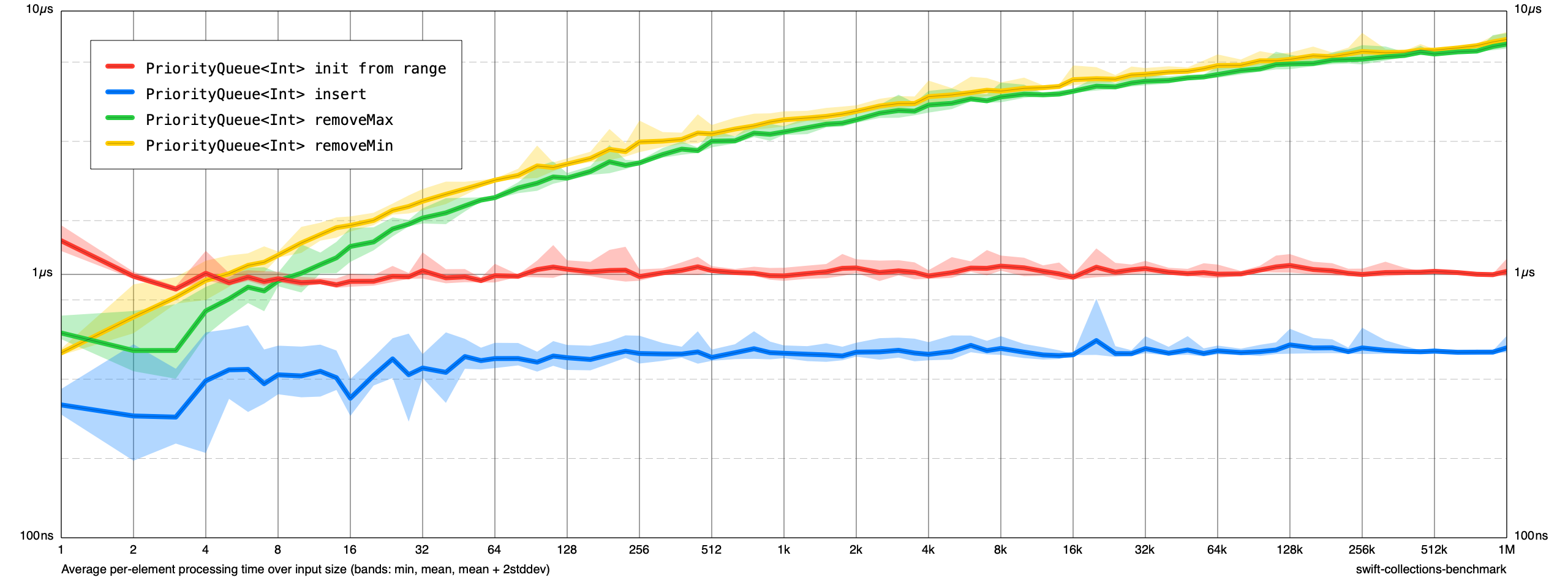

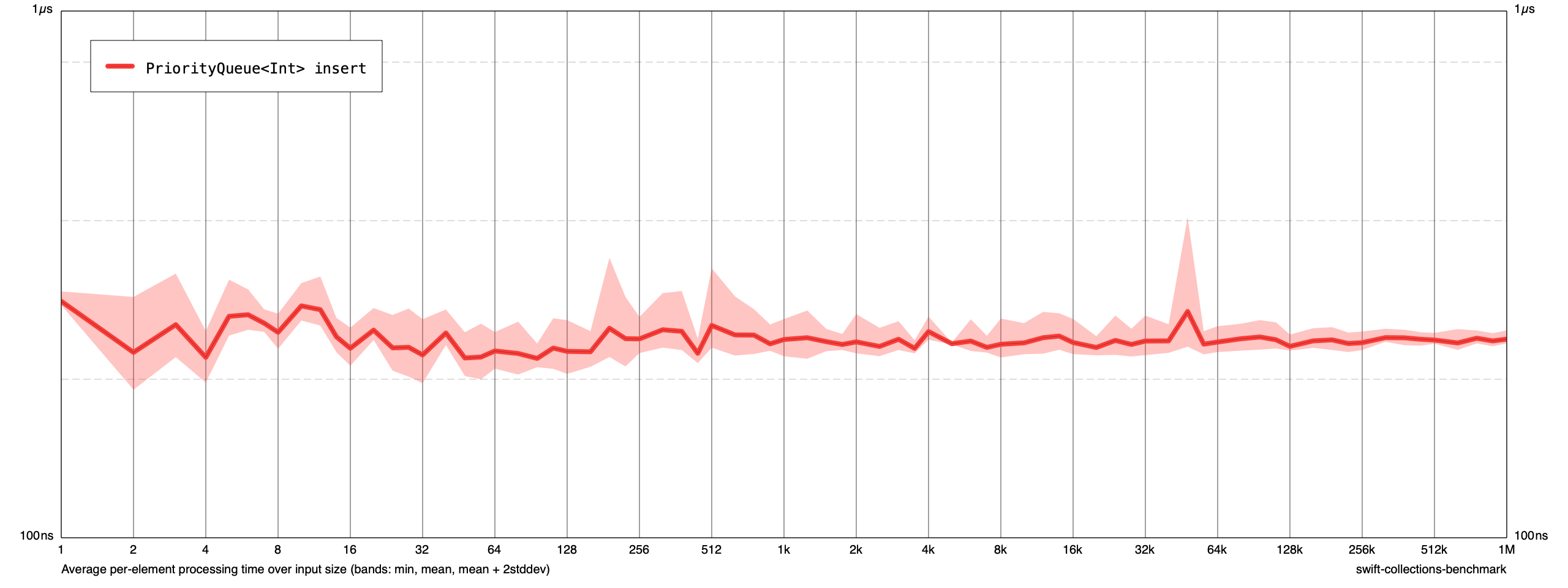

This was run on a 2019 MacBook Pro (2.3 GHz 8-Core Intel Core i9). Yes, it was built in release mode. |

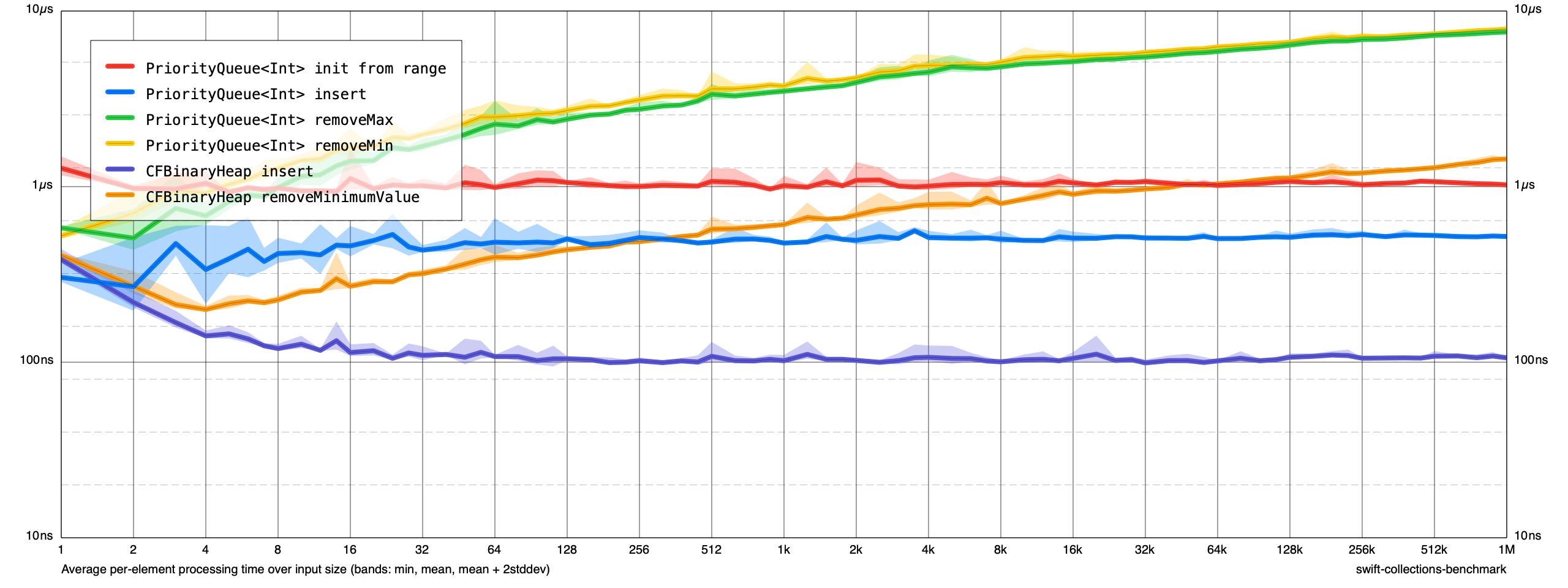

@AquaGeek Nice! It'd be great to see how the performance compares to CFBinaryHeap for equivalent operations (e.g. |

@kylemacomber Here's a quick pass at adding |

|

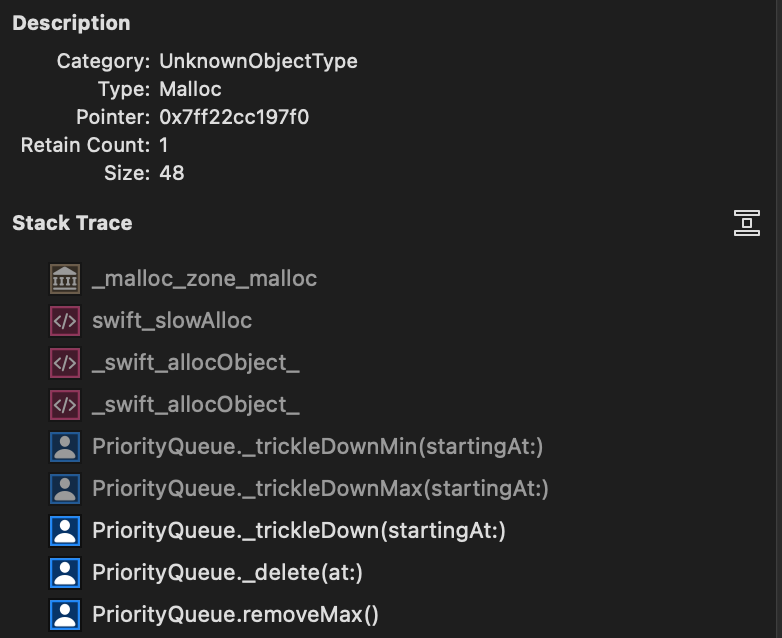

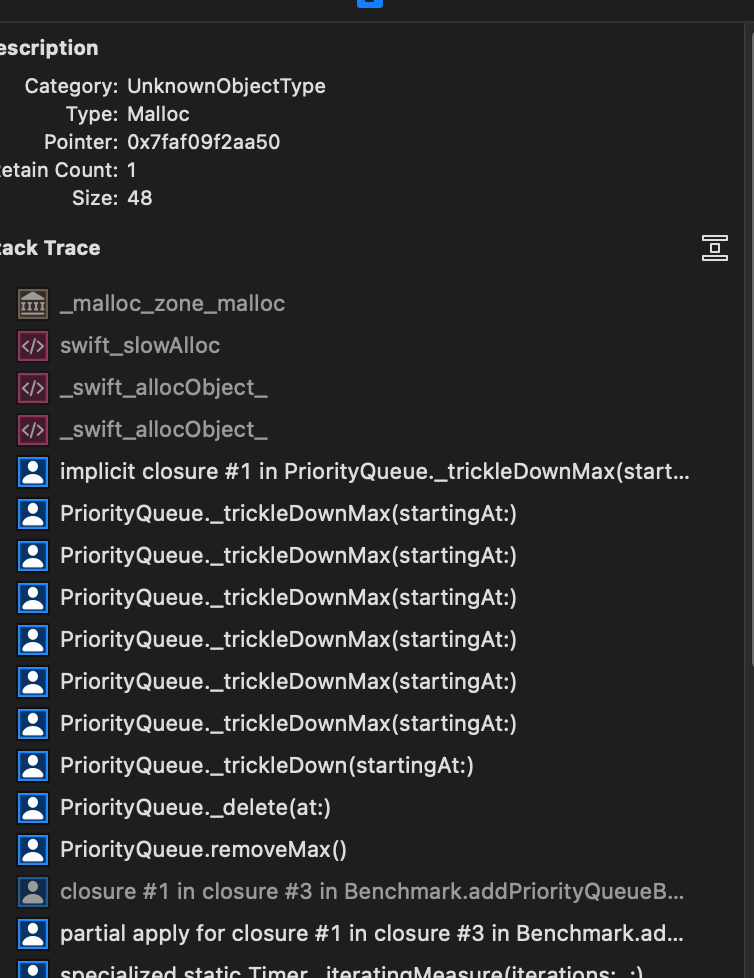

I just spent a little time profiling a bit (as the benchmark runtime seems quite long compared to the C++ version referenced in the article linked to previously), there seems to be an issue with using swapAt: (probably the same issue discussed here) - I just did a simple measurement of changed to brought this test down to 165K transient allocations and the runtime was basically halved. And the original benchmark went from: to: Not sure if this is a known issue with the swapAt: method, but it seems a bit rough to force two memory allocations per swapAt: operation for a simple small value type. Also, looking at the removeMax test, there is also a large amount of transient allocations with this backtrace, can't quite understand why though (haven't looked at any others yet). |

|

Tracked down the removeMax transient allocations too (disable inlining of _trickleDownMax and used Instruments), removing them changed the runtime for one iteration of the benchmark from from 95s -> 83s (and removed several M allocations too). It was triggered by a couple of guard statements looking like: So I just tried remove 'sortedUsing' and created two duplicate methods for _indexOfChildOrGrandchild (one for greater, one for less than) and the allocations went away (it seems an implicit closure is created by passing the operator function as an argument which caused all the allocations). |

I wonder if |

Exclude the cost of initializing the queue from the benchmark

be7a4c7 to

c024a77

Compare

The type stored in the underlying heap has more than 2 values, so Pair is a misnomer. This has been renamed to 'Element.' The existing tyepalias Element has been renamed to 'Pair,' as it only includes the Value and its Priority.

These were removed from the underlying Heap in another PR. Many of the same reasons for doing that apply here.

c024a77 to

dff2b17

Compare

|

@lorentey I rebased on |

| struct _Element: Comparable { | ||

| @usableFromInline let value: Value | ||

| let priority: Priority | ||

| let insertionCounter: UInt64 |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Anyone have any thoughts on this approach? Is using UInt64 overkill for this? Should we overflow back to 0 on insertion instead of trapping?

|

What about decreasePriority/increasePriority functions? It's used by some algorithms, for example Dijkstras algorithm, to become faster. |

|

What is the status of this PR, is it pending review still? @AquaGeek |

Yes, it's still pending review. In particular, I'm looking for feedback on the best way to handle FIFO ordering — right now, I'm using a I'd love to get this landed. |

|

@AquaGeek Have you considered using a timestamp such as Date() to enforce FIFO in case of equal priorities instead of the counter? What would be the disadvantages of that approach? I guess precision. |

I think that would be effectively the same thing but with the downside of depending on |

If exploring this, I'd aim for using |

| /// - Complexity: O(log `count`) | ||

| @inlinable | ||

| public mutating func popMax() -> Value? { | ||

| _base.popMax()?.value |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Can we also return the priority along with value like in Python?

next = q.get()

print(next)

//(1, 'Jones')

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I'm also confused about why we can't get back the priorities we previously set.

|

My gut feeling is that if someone wants to ensure that this preserves insertion order for items with the same priority, then they can simply define their Priority type to account for this. Using |

|

Then again, if they're defining a type anyway, they could just as well define a custom I don't quite get the value this adds over struct Prioritized<Element, Priority: Comparable>: Comparable {

let priority: Priority

let element: Element

init(_ element: Element, _ priority: Priority) {

self.priority = priority

self.element = element

}

static func ==(left: Self, right: Self) -> Bool { left.priority == right.priority }

static func <(left: Self, right: Self) -> Bool { left.priority < right.priority }

}

typealias PriorityQueue<Element, Priority: Comparable> =

Heap<Prioritized<Element, Priority>>Can y'all remind me of the point in defining a separate If stable ordering is needed often enough to deserve its own place here, then ideally it should be offered for The typealias StablePriorityQueue<Element, Priority: Comparable> =

StableHeap<Prioritized<Element, Priority>> |

Honestly, it has been so long that I've lost a lot of the context here.

At the moment, that would have been my primary argument for |

|

Not having to conform to My hesitation is really just me getting a bit worried about the hidden costs of having to store a full UInt64 value with every single item, just on the off chance some of them might compare equal. Clients that do not care about preserving insertion order shouldn't have to pay the storage overhead of these. (A hidden 8-byte value usually doesn't sound too bad, but it can end up significant enough to be worth worrying about.) Automatically & implicitly bundling stable ordering with the Element+Priority separation sounds unappealing to me -- both aspects are interesting ideas on their own, but combining them together under the name What if we waited until we had some actual users for this module before we start elaborating it? If potential adopters came back complaining about the pain of having to define wrapper types and/or asking how they could preserve insertion order, then we'd probably be in a better position to figure out the right way to serve them. (Which may well turn out to be your original design -- I may just not be seeing that far ahead!) Note: I'm not aware of any high-profile heap implementations in other languages that provide out-of-the-box support for preserving insertion order. Then again, I only did a quick & very basic search and may have missed something obvious. (Now of course we could also preserve insertion ordering using a different design -- such as using UInt32 values, and renumbering all existing items if we ever wrap around, or by tweaking the underlying Heap implementation in some way. But then again, the effort of thinking about & implementing these may well prove unnecessary, and why bother until we know we'll need it...) |

Yeah, I think that's valid. I'm hesitant to commit to too many design decisions in absence of compelling use cases. There's always the "if you care about eking out every last drop of performance you can drop down to

@phausler was one of the people who brought up FIFO ordering in the forum thread. The comparison was against The original use case for me starting to implement this at all was a priority queue for pending network requests. FIFO ordering is important, as we don't want a request that was enqueued first waiting forever in the queue because subsequent requests with the same priority happen to get inserted into a slot ahead of it in the heap. That being said, we haven't cut over to this library's implementation yet, partly because we were hoping to get the FIFO ordering and the removal of the

I mainly used |

| static func < (lhs: Self, rhs: Self) -> Bool { | ||

| if lhs.priority < rhs.priority { | ||

| return true | ||

| } else if lhs.priority == rhs.priority { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

One issue we've run into in our upstream implementation of this, is that this Comparable implementation only works when trying to dequeue the minimum element. Dequeueing the maximum element doesn't respect FIFO ordering due to the default implementation of > being to flip the arguments and use < (i.e. a > b ⇢ b < a). Implementing > to also account for the insertion counter starts violating invariants in the heap — it creates weird situations where an element can be both greater than and less than another if it was inserted before and has the same priority.

One way we've come up with to fix this is to only insert the priorities into the underlying heap and use them as a key for looking up an array (or linked list or deque) in a dictionary that actually contains the values. That eliminates the need to store a UInt64 per element but comes at some cost (especially if you use an array) and requires that the Priority be Hashable. None of these changes affect the underlying Heap.

struct PriorityQueue<Value, Priority: Hashable> {

var _base: Heap<Priority>

var _elements: Dictionary<Priority, Deque<Value>>

...

}Are there other approaches for maintaining FIFO ordering?

This is a good point, but only as it underscores a point. From my viewpoint, the fact that Expecting Clients have full flexibility to resolve the ordering of items as they wish, by conforming their I believe The idea of adding a serial number to incoming items to implement a FIFO Adding a second heap-like data structure that provides both a FIFO Given how backlogged this package is, I don't see us shipping second-order additions like this in the foreseeable future -- accordingly, I'm going to close this PR. Apologies for leaving it open this long! I'm not against trying again after we've managed to ship the current set of data structures in limbo -- however, my concerns above will likely still apply. An example of a perhaps more interesting direction (that could better satisfy both criteria above) might be to have a |

|

Sorry for resurrecting the discussion here if people are exhausted about it; but if this library is never going to add PriorityQueue -- would an acceptable alternative be some Examples in the docs on how to use the Heap type to roll your own PriorityQueues using Heap? Looking at the diff in this PR, there's a ton of details that could be omitted if users are implementing the PriorityQueue they need, so I think a short-enough example would be small enough to fit in the docs? |

Description

This introduces a double-ended

PriorityQueueas discussed in #3.Detailed Design

Heap(a min-max heap) is used as the storage. One of the big advantages of using a min-max heap is that we don't need to bifurcate into a min heap or max heap and keep track of which kind is used — you can pop min or max, as needed.The main difference between

HeapandPriorityQueueis that the latter separates the value from the comparable priority of it. This is useful in cases where a type doesn't conform toComparabledirectly but it may have a property that does — e.g.Task.priority. It also keeps track of insertion order, so dequeueing of elements with the same priority should happen in FIFO order.To-Do

Sequenceconformance_indexOfChildOrGrandchild(of:sortedUsing:)(probably by splitting it back out into 2 separate functions)@inlinableis requiredremoveMin/Max_minMaxHeapIsMinLevelan instance method_bubbleUpMin(startingAt:)and_trickleDown(startingAt:)iterative instead of recursiveinsert(contentsOf:)Heapimplementation merged intomainFuture Directions

replaceMinandreplaceMaxDocumentation

The public APIs have largely been documented. An overview document has been added to the Documentation directory.

Testing

There are unit tests for the added

PriorityQueuetype.Performance

Performance tests have been added. @lorentey has a PR up (#76) which adds a

std::priority_queuebenchmark — it would be interesting to compare against that with more complex types.We may want to revisit the library JSON to ensure we have the desired comparisons defined.

Source Impact

This is purely additive. No existing APIs were changed, deprecated, or removed.

Checklist