[FEATURE] Add interleaved batch_dot oneDNN fuses for new GluonNLP models #20312

Hey @bgawrych , Thanks for submitting the PR

CI supported jobs: [windows-gpu, clang, unix-gpu, edge, centos-gpu, website, sanity, unix-cpu, centos-cpu, miscellaneous, windows-cpu] Note:

Hi @leezu @szha, is something wrong with master CI? I always get a failure on windows-gpu, both in this PR and in #20227.

If the tests fail consistently, let's disable these tests and open an issue for tracking them.

Force-pushed from 906a4c3 to 336e442

@akarbown Can you review and merge if it's ok?

Float self attention fuse WIP Add transpose - reshape Add self attention fuse with oneDNN support

Force-pushed from 336e442 to 88401a0

@mxnet-bot run ci [unix-cpu, windows-gpu]

Jenkins CI successfully triggered: [unix-cpu, windows-gpu]

Description

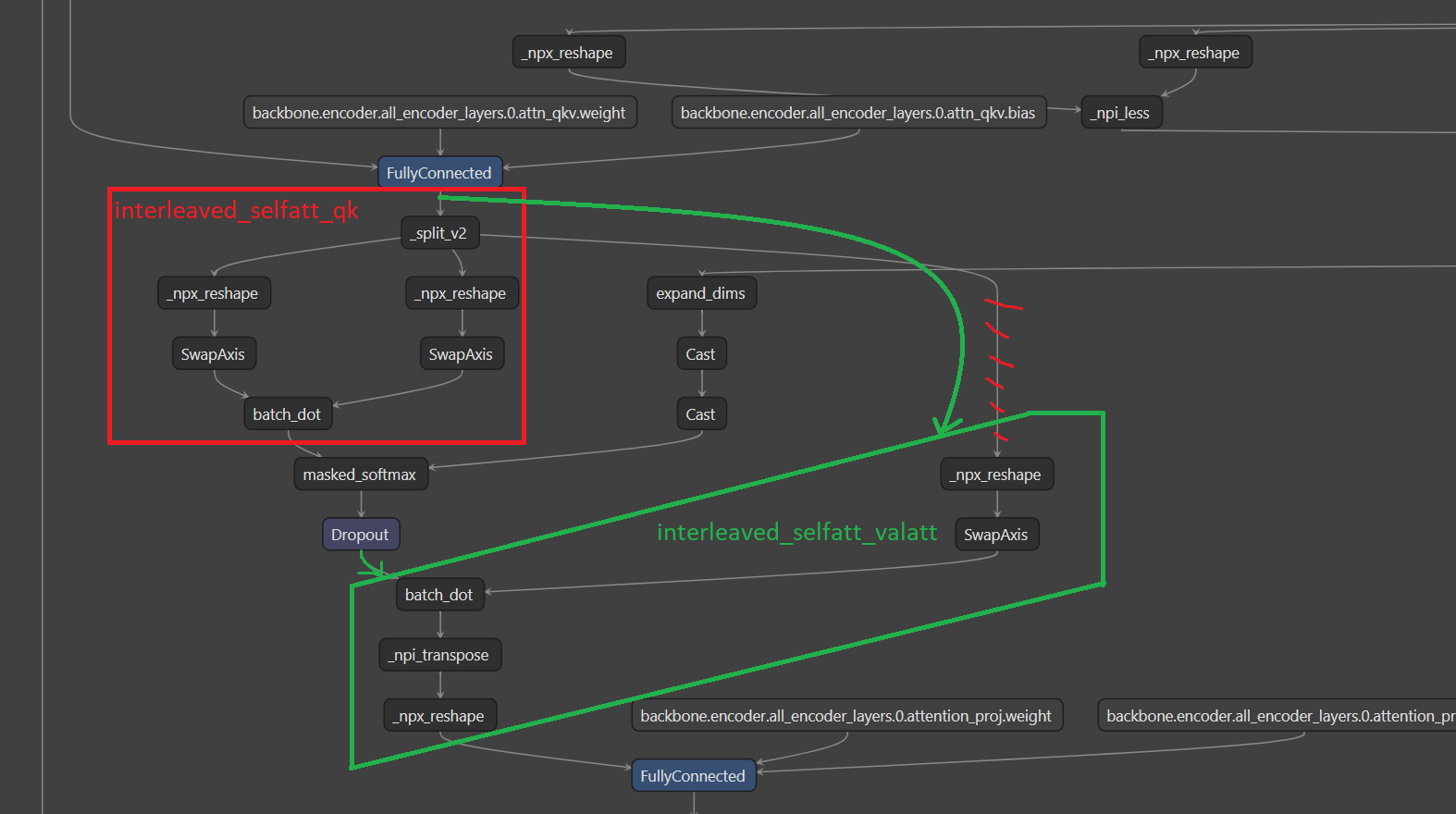

This change uses the capabilities of the oneDNN matmul primitive to fuse the following sequences of operators:

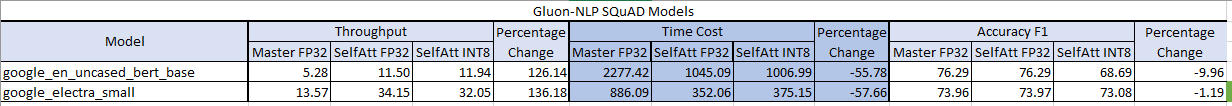
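To illustrate what fusing such a sequence means in practice, here is a minimal pure-Python sketch, not the PR's implementation and not the oneDNN or MXNet API. It assumes a hypothetical layout in which each token row of a single projection buffer holds `[Q | K | V]` back to back, and it computes only the unscaled attention scores `Q * K^T` per head. The unfused path first copies per-head Q and K slices out of the buffer (standing in for the split, reshape and transpose steps) and then multiplies them; the fused path reads the same elements directly through offsets. All function names and the layout are assumptions made for this example.

```python
def split_heads(qkv, heads, head_dim):
    """Unfused step: materialize per-head Q and K copies.

    Assumed (hypothetical) layout: each row of `qkv` is one token,
    laid out as [Q | K | V], each part of length heads * head_dim.
    """
    hidden = heads * head_dim
    q = [[row[h * head_dim:(h + 1) * head_dim] for row in qkv]
         for h in range(heads)]
    k = [[row[hidden + h * head_dim:hidden + (h + 1) * head_dim] for row in qkv]
         for h in range(heads)]
    return q, k


def scores_unfused(qkv, heads, head_dim):
    """Split/reshape/transpose first, then a batched dot product per head."""
    q, k = split_heads(qkv, heads, head_dim)
    return [[[sum(a * b for a, b in zip(q_i, k_j)) for k_j in k[h]]
             for q_i in q[h]]
            for h in range(heads)]


def scores_fused(qkv, heads, head_dim):
    """Fused variant: index straight into the buffer, no intermediate copies."""
    hidden = heads * head_dim
    seq_len = len(qkv)
    out = []
    for h in range(heads):
        q_off = h * head_dim           # where head h's query starts in a row
        k_off = hidden + h * head_dim  # where head h's key starts in a row
        out.append([[sum(qkv[i][q_off + d] * qkv[j][k_off + d]
                         for d in range(head_dim))
                     for j in range(seq_len)]
                    for i in range(seq_len)])
    return out


if __name__ == "__main__":
    # Two tokens, two heads, head_dim = 2; V is zero because it is unused here.
    qkv = [
        [1, 2, 3, 4, 5, 6, 7, 8, 0, 0, 0, 0],
        [1, 0, 0, 1, 1, 1, 1, 1, 0, 0, 0, 0],
    ]
    assert scores_fused(qkv, 2, 2) == scores_unfused(qkv, 2, 2)
    print(scores_fused(qkv, 2, 2))
```

Both paths return the same scores; the difference is that the fused variant never builds the intermediate per-head tensors. A real fused kernel would presumably express those offsets as strides passed to the matmul primitive rather than looping in Python.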

Tested with the run_squad.py script from the GluonNLP repository (weights downloaded from the QA README.md file).

Checklist

Essentials