- Predicting the demand for clothes using an LSTM and a 3-layer neural network.

- To run the code, install Keras with the TensorFlow backend in your IPython environment (preferably Anaconda).

Business Problem

- Predict the demand for various clothing types to avoid inventory wastage.

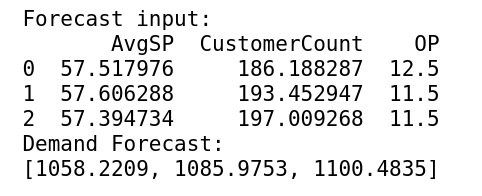

- No future values of the input variables are available, so the input variables are first forecasted and the target variable is then regressed on the forecasted inputs.
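The two-stage idea above (forecast the inputs first, then regress the target on those forecasts) can be sketched as follows. This is a minimal illustration under stated assumptions, not the project's code: it uses a single input (CustomerCount), a naive persistence forecast as a stand-in for the LSTM, and ordinary least squares as a stand-in for the 3-layer network. The function names and toy data are hypothetical.

```python
# Minimal two-stage sketch: forecast the input, then regress the target on it.
# Assumptions (not from the original project): one input variable, a naive
# persistence forecast in place of the LSTM, and ordinary least squares in
# place of the 3-layer neural network.

def fit_ols(x, y):
    """Fit y = a + b * x by ordinary least squares; return (a, b)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b

def persistence_forecast(series, horizon):
    """Stand-in for the LSTM: repeat the last observed value `horizon` times."""
    return [series[-1]] * horizon

def two_stage_forecast(history_input, history_target, horizon):
    # Stage 1: forecast the input variable over the horizon.
    future_input = persistence_forecast(history_input, horizon)
    # Stage 2: regress the target on the historical input, then apply the fit
    # to the forecasted input values.
    a, b = fit_ols(history_input, history_target)
    return [a + b * x for x in future_input]

# Hypothetical toy data in which demand is exactly 2 * customers + 5.
customers = [10, 12, 11, 15, 14]
demand = [2 * c + 5 for c in customers]
forecast = two_stage_forecast(customers, demand, horizon=3)
```

Any error in stage 1 propagates into stage 2, which is why the quality of the input forecasts matters as much as the regression model itself.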

Data Definition and Understanding

- Input variables

- AvgSP - Average Selling Price of the SKU

- OP - Average Selling Price of various clothes

- CustomerCount - Total GT Customers for the given SKU (= CustomerCount + Missed Customers)


Target Variable - ActualDemand of the SKU (= Ordered Quantity + Missed Demand)
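As a small illustration of the target definition above, the sketch below computes ActualDemand row by row. The field names and values are hypothetical; they mirror the README's wording, not the dataset's actual column names.

```python
# Hypothetical per-day records; field names are illustrative assumptions.
records = [
    {"ordered_quantity": 120, "missed_demand": 15},
    {"ordered_quantity": 98, "missed_demand": 0},
    {"ordered_quantity": 143, "missed_demand": 22},
]

def actual_demand(record):
    """ActualDemand = Ordered Quantity + Missed Demand."""
    return record["ordered_quantity"] + record["missed_demand"]

targets = [actual_demand(r) for r in records]
```

Including missed demand means the target reflects what customers wanted, not only what was fulfilled from stock.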


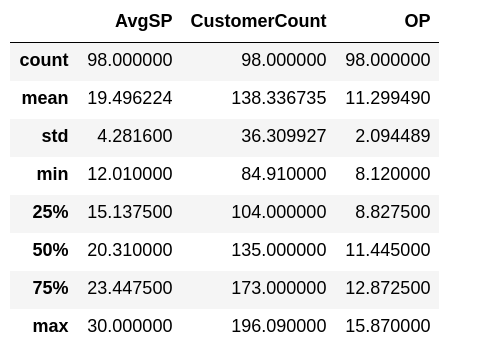

Summary Stats

Training and Test Datasets

- The last week of the dataset is held out for testing; the rest of the dataset is used for training.
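The hold-out described above is a chronological split: no shuffling, so the model never trains on days that come after the test period. A minimal sketch, assuming daily rows that each carry a `datetime.date` under a hypothetical `date` key, and reading "last week" as the final 7 calendar days:

```python
from datetime import date, timedelta

def split_last_week(rows, date_key="date"):
    """Hold out the final 7 calendar days for testing; train on everything before.

    Assumes each row stores a datetime.date under `date_key` (hypothetical name).
    """
    rows = sorted(rows, key=lambda r: r[date_key])
    cutoff = rows[-1][date_key] - timedelta(days=6)  # first day of the last week
    train = [r for r in rows if r[date_key] < cutoff]
    test = [r for r in rows if r[date_key] >= cutoff]
    return train, test

# Hypothetical 30 days of data.
start = date(2020, 1, 1)
rows = [{"date": start + timedelta(days=i), "demand": i} for i in range(30)]
train, test = split_last_week(rows)
print(len(train), len(test))  # 23 7
```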

Function to Create Data Input to the Model

- AvgSP

- @AvgSP is predicted using time series forecasting.

- A Long Short-Term Memory (LSTM) recurrent neural network is used for forecasting. The forecasting problem is framed as a supervised learning problem in which the input is the value on the day before the target day.
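Framing a series as supervised learning with a one-day lag, as described above, pairs each day's value (input) with the next day's value (target). A minimal sketch; the function name and the AvgSP values are hypothetical:

```python
def to_supervised(series, lag=1):
    """Frame a univariate series as (input, target) pairs.

    With lag=1, each input is the value on the day before the target day.
    """
    inputs = [series[i - lag:i] for i in range(lag, len(series))]
    targets = [series[i] for i in range(lag, len(series))]
    return inputs, targets

avg_sp = [499.0, 505.5, 498.0, 510.0]  # hypothetical AvgSP values
X, y = to_supervised(avg_sp)
# X -> [[499.0], [505.5], [498.0]], y -> [505.5, 498.0, 510.0]
```

A Keras LSTM expects 3D input shaped (samples, timesteps, features), so `X` would be reshaped to (3, 1, 1) here before training.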

- An LSTM is a type of recurrent neural network whose gated memory cells retain information across long sequences, which helps when forecasting from past values.
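To make "remembers information across long sequences" concrete, here is one time step of the standard LSTM cell equations for a single unit with a scalar input. This is an illustrative sketch with hypothetical hand-picked weights, not the trained model or Keras's internal implementation: the forget gate is pushed fully open and the input gate fully shut, so the cell state carries across steps almost unchanged.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One time step of a single-unit LSTM cell with scalar input.

    `p` maps each gate name to hypothetical (w, u, b) weights: input weight,
    recurrent weight, and bias.
    """
    def pre(gate):
        w, u, b = p[gate]
        return w * x + u * h_prev + b

    i = sigmoid(pre("input"))    # how much new information to write
    f = sigmoid(pre("forget"))   # how much of the old cell state to keep
    o = sigmoid(pre("output"))   # how much of the cell state to expose
    g = math.tanh(pre("cell"))   # candidate new content
    c = f * c_prev + i * g       # updated long-term memory (cell state)
    h = o * math.tanh(c)         # new hidden state / output
    return h, c

# Hypothetical weights: forget gate saturated open, input gate saturated shut.
params = {
    "input": (0.0, 0.0, -10.0),
    "forget": (0.0, 0.0, 10.0),
    "output": (0.0, 0.0, 0.0),
    "cell": (1.0, 0.0, 0.0),
}
h, c = 0.0, 0.8
for x in [0.3, -0.5, 0.9, 0.1]:
    h, c = lstm_step(x, h, c, params)
```

After four steps the cell state stays close to its initial 0.8 despite the varying inputs. In training, these gate weights are learned, so the network decides what to keep and what to overwrite.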

- Forecasting Results

- CustomerCount

- @CustomerCount is predicted using the same method as @AvgSP.

- Forecasting Results

Data Modelling

Model Name

- 3-layer neural network built with the Keras library (TensorFlow backend)

- The network is made up of 3 layers:

- Input layer

- Receives the input variables and computes a weighted linear combination of them (plus a bias) for each neuron.

- Parameters: number of neurons = 16, activation function = linear, weight initializer = normal distribution, kernel and activity regularizer = L1 (alpha = 0.1)

- Hidden Layer

- Further transforms the outputs of the input layer. Its weights, like those of the other layers, are learned when the network is optimized during training.

- Parameters: number of neurons = 8, activation function = linear, weight initializer = normal distribution, kernel and activity regularizer = L1 (alpha = 0.1)

- Output Layer

- Produces the final prediction, which is then converted from the scaled range back to the original units of demand (reverse scaling).
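The layer stack above can be sketched as a plain-Python forward pass with the listed parameters: 16 and then 8 linear units with L1 (alpha = 0.1) penalties on weights and activations. Several details are assumptions, not taken from the project: three input features, a single linear output unit, zero biases, and normally distributed weights with standard deviation 0.05 (the Keras `RandomNormal` default). The penalty terms are what would be added to the training loss; they do not change the forward output.

```python
import random

def dense(x, W, b):
    """Linear dense layer: y_j = sum_i x_i * W[i][j] + b_j (identity activation)."""
    return [sum(xi * W[i][j] for i, xi in enumerate(x)) + b[j] for j in range(len(b))]

def l1(values, alpha=0.1):
    """L1 penalty: alpha * sum(|v|), used here for kernel and activity regularization."""
    return alpha * sum(abs(v) for v in values)

def init(n_in, n_out, rng):
    """Normally distributed weights (std 0.05, assumed) and zero biases."""
    W = [[rng.gauss(0.0, 0.05) for _ in range(n_out)] for _ in range(n_in)]
    return W, [0.0] * n_out

rng = random.Random(0)
n_features = 3  # hypothetical: e.g. AvgSP, OP, CustomerCount
layers = [init(n_features, 16, rng), init(16, 8, rng), init(8, 1, rng)]

def forward(x):
    """Run the 16 -> 8 -> 1 stack; return the output and the activity penalty."""
    activity_penalty = 0.0
    for k, (W, b) in enumerate(layers):
        x = dense(x, W, b)
        if k < 2:  # the README lists L1 regularizers on the first two layers
            activity_penalty += l1(x)
    return x, activity_penalty

# Kernel (weight) penalty for the two regularized layers.
kernel_penalty = sum(l1([w for row in W for w in row]) for W, _ in layers[:2])
y, act_pen = forward([0.2, 0.5, 0.7])
```

Because every activation is linear, the three layers compose into a single affine map of the inputs, so as configured the network can only represent a linear function of its inputs; a nonlinear hidden activation (for example ReLU) would be needed to go beyond that.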

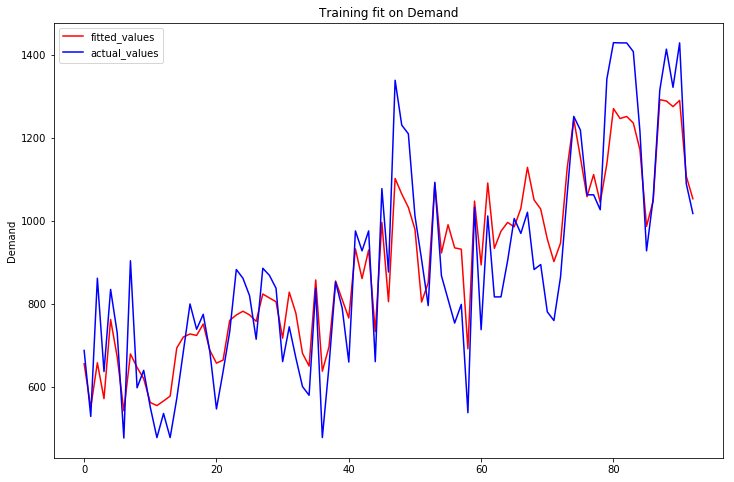
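The reverse scaling mentioned for the output layer undoes whatever scaling was applied to the data before training. The README does not name the scaler; the sketch below assumes min-max scaling to [0, 1] and uses hypothetical demand values and a hypothetical model output.

```python
def fit_min_max(values):
    """Record the min and max of the training data (assumed min-max scaling)."""
    return min(values), max(values)

def scale(v, lo, hi):
    """Map v from [lo, hi] to [0, 1]."""
    return (v - lo) / (hi - lo)

def inverse_scale(s, lo, hi):
    """Map a scaled value back to the original units (reverse scaling)."""
    return s * (hi - lo) + lo

train_demand = [120.0, 98.0, 143.0, 110.0]  # hypothetical training targets
lo, hi = fit_min_max(train_demand)
scaled = [scale(v, lo, hi) for v in train_demand]

model_output = 0.6  # hypothetical scaled prediction from the network
prediction = inverse_scale(model_output, lo, hi)  # 98 + 0.6 * (143 - 98)
```

The scaling bounds are fitted on the training data only, so the test week does not leak into preprocessing.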

Model Performance