This is the official code for PlaStIL (CoLLAs2023): Plastic and Stable Exemplar-Free Class-Incremental Learning

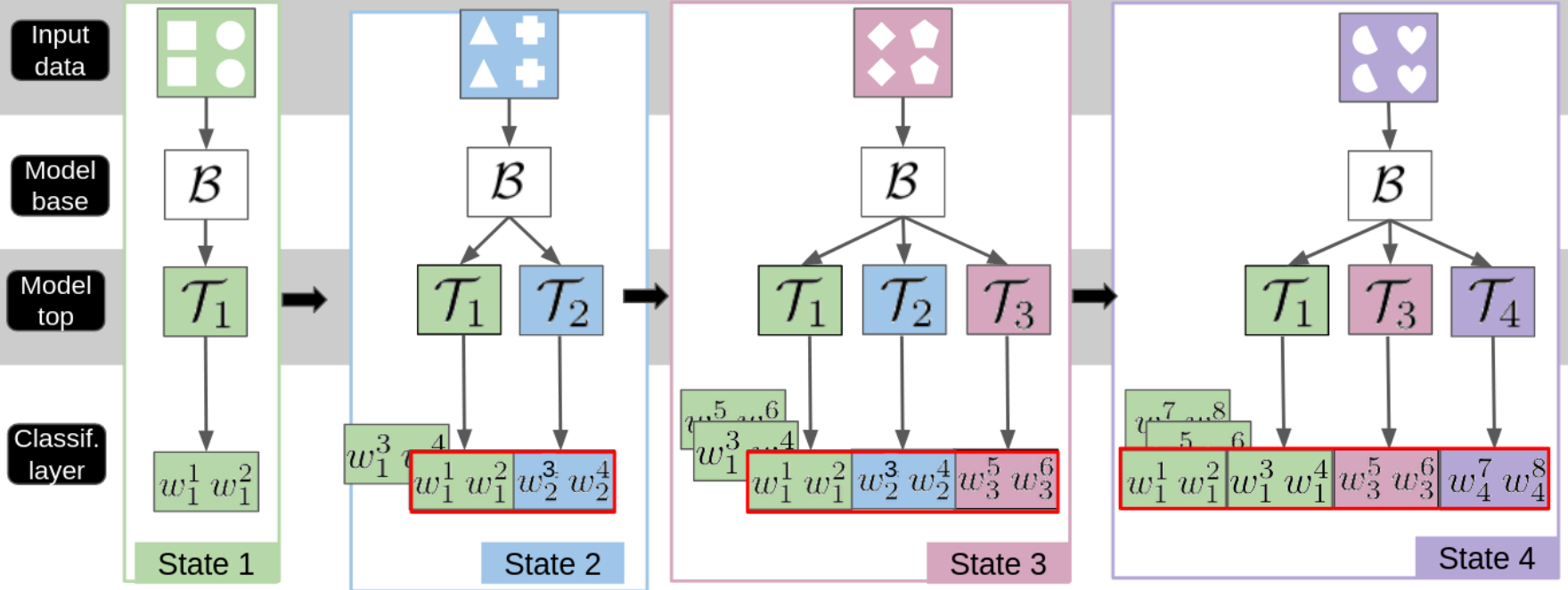

Plasticity and stability are needed in class-incremental learning in order to learn from new data while preserving past knowledge. Due to catastrophic forgetting, finding a compromise between these two properties is particularly challenging when no memory buffer is available. Mainstream methods need to store two deep models since they integrate new classes using fine-tuning with knowledge distillation from the previous incremental state. We propose a method which has a similar number of parameters but distributes them differently in order to find a better balance between plasticity and stability. Following an approach already deployed by transfer-based incremental methods, we freeze the feature extractor after the initial state. Classes in the oldest incremental states are trained with this frozen extractor to ensure stability. Recent classes are predicted using partially fine-tuned models in order to introduce plasticity. Our proposed plasticity layer can be incorporated into any transfer-based method designed for exemplar-free incremental learning, and we apply it to two such methods. Evaluation is done with three large-scale datasets. Results show that performance gains are obtained in all tested configurations compared to existing methods.

In the following results, K denotes the number of states. The baseline results are recomputed using the original configurations of the methods.

To install the required packages, run the following command (conda is required), which uses the plastil.yml file:

conda env create -f plastil.yml

If the installation fails, try installing the packages manually with the following commands:

conda create -n plastil python=3.7

conda activate plastil

conda install pytorch torchvision pytorch-cuda=11.6 -c pytorch -c nvidia

pip install typing-extensions --upgrade

conda install pandas

pip install -U scikit-learn scipy matplotlib

The code depends on the utilsCIL repository, which contains the code for the datasets and the incremental learning process. Please clone the repository into your home directory (the PlaStIL code will find it there) or add it to your PYTHONPATH:

git clone git@github.com:GregoirePetit/utilsCIL.git

The example below is given for the ILSVRC dataset, with an equal number of classes per state (100 classes per state). The code can be adapted to other datasets and to other numbers of classes per state.

Using the configs/ilsvrc_b100_seed-1.cf file, you can prepare your experiment. You can change the following parameters:

- nb_classes: the total number of classes in the dataset (1000 for ILSVRC, Landmarks and iNaturalist)
- dataset: the name of the dataset (ilsvrc for ILSVRC, google_landmarks for Landmarks or inat for iNaturalist)
- first_batch_size: the number of classes in the first state, and the number of classes in the other states (in our experiments, we use 50, 100 or 200 classes per state)
- random_seed: the random seed used to split the dataset in states, -1 for no random seed
- num_workers: the number of workers used to load the data
- regul: the regularization parameter used for the linearSVC of the PlaStIL classifiers
- toler: the tolerance parameter used for the linearSVC of the PlaStIL classifiers
- epochs: the number of epochs used to train the PlaStIL first model
- list_root: the path to the list of images of the dataset
- model_root: the path to the models
- feat_root: the path to the features
- classifiers_root: the path to the classifiers
- pred_root: the path to the predictions
- logs_root: the path to the logs
- mean_std: the path to the mean and std of the dataset
- batch_size: the batch size used to train the PlaStIL first model
- lr: the learning rate used to train the PlaStIL first model
- momentum: the momentum used to train the PlaStIL first model
- weight_decay: the weight decay used to train the PlaStIL first model
- lrd: the learning rate decay used to train the PlaStIL first model
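As a rough illustration of how nb_classes and first_batch_size relate to the number of states S used in the commands further down, the sketch below assumes that S is simply nb_classes divided by first_batch_size, since every state holds the same number of classes. The helper name `num_states` is hypothetical and is not part of the PlaStIL code.

```python
def num_states(nb_classes: int, first_batch_size: int) -> int:
    """Number of incremental states, assuming equally sized states.

    Hypothetical helper for illustration only; the PlaStIL scripts
    may derive this value differently.
    """
    if first_batch_size <= 0:
        raise ValueError("first_batch_size must be positive")
    if nb_classes % first_batch_size != 0:
        raise ValueError("nb_classes must be a multiple of first_batch_size")
    return nb_classes // first_batch_size


if __name__ == "__main__":
    # The three state sizes mentioned above, for a 1000-class dataset.
    for classes_per_state in (50, 100, 200):
        print(classes_per_state, num_states(1000, classes_per_state))
```

With 1000 classes and 100 classes per state this gives 10 states, which matches the state indices used in the ILSVRC example.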

Once the configuration file is ready, you can run the following commands to launch the experiment:

python codes/scratch.py configs/ilsvrc_b100_seed-1.cf

python codes/ft.py configs/ilsvrc_b100_seed-1.cf {last,half,all} {2, ..., S}

For example, to fine-tune PlaStIL_all:

python codes/ft.py configs/ilsvrc_b100_seed-1.cf all 2

python codes/ft.py configs/ilsvrc_b100_seed-1.cf all 3

...

python codes/ft.py configs/ilsvrc_b100_seed-1.cf all 10

python codes/features_extraction.py configs/ilsvrc_b100_seed-1.cf {last,half,all} {1, ..., S}

For example, to extract the features for PlaStIL_all:

python codes/features_extraction.py configs/ilsvrc_b100_seed-1.cf all 1

python codes/features_extraction.py configs/ilsvrc_b100_seed-1.cf all 2

...

python codes/features_extraction.py configs/ilsvrc_b100_seed-1.cf all 10

python codes/train_classifiers.py configs/ilsvrc_b100_seed-1.cf {last,half,all}

python codes/compute_predictions.py configs/ilsvrc_b100_seed-1.cf {last,half,all}

python codes/eval.py configs/ilsvrc_b100_seed-1.cf {last,half,all} {deesil,plastil}

Logs will be saved in the logs_root folder.
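Since the per-state commands above have to be repeated for every state, a small driver script can help. The following is a minimal sketch that only builds the command list, assuming the steps are run in the order in which they are listed in this README. The function name `build_pipeline` is hypothetical and is not part of the PlaStIL code.

```python
VARIANTS = ("last", "half", "all")
METHODS = ("deesil", "plastil")


def build_pipeline(config, variant, num_states, method="plastil"):
    """Return the README commands, in order, as lists of arguments.

    Hypothetical helper: it mirrors the commands listed above and
    assumes they are meant to be run sequentially in that order.
    """
    if variant not in VARIANTS:
        raise ValueError("variant must be one of %s" % (VARIANTS,))
    if method not in METHODS:
        raise ValueError("method must be one of %s" % (METHODS,))
    if num_states < 1:
        raise ValueError("num_states must be at least 1")

    cmds = [["python", "codes/scratch.py", config]]
    # ft.py is called for states 2..S.
    for state in range(2, num_states + 1):
        cmds.append(["python", "codes/ft.py", config, variant, str(state)])
    # features_extraction.py is called for states 1..S.
    for state in range(1, num_states + 1):
        cmds.append(
            ["python", "codes/features_extraction.py", config, variant, str(state)]
        )
    cmds.append(["python", "codes/train_classifiers.py", config, variant])
    cmds.append(["python", "codes/compute_predictions.py", config, variant])
    cmds.append(["python", "codes/eval.py", config, variant, method])
    return cmds


if __name__ == "__main__":
    # Dry run: print the commands instead of executing them.
    for cmd in build_pipeline("configs/ilsvrc_b100_seed-1.cf", "all", 10):
        print(" ".join(cmd))
```

To actually execute the steps, each command list could be passed to `subprocess.run(cmd, check=True)` from the root of the repository, so that a failing step stops the sequence.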