diff --git a/.gitattributes b/.gitattributes

index 38cef04d..98345124 100644

--- a/.gitattributes

+++ b/.gitattributes

@@ -1,2 +1,3 @@

* text=auto

test/fixtures/csv/eol.csv text eol=crlf

+test/fixtures/csv/escapeEOL.csv text eol=crlf

diff --git a/.npmignore b/.npmignore

index 23bced8c..b40844a4 100644

--- a/.npmignore

+++ b/.npmignore

@@ -1,5 +1,7 @@

-test

-CHANGELOG.md

.travis.yml

-coverage

.github/

+devtools

+CHANGELOG.md

+test

+coverage

+.nyc_output

diff --git a/README.md b/README.md

index 088d6e2a..ad5694ee 100644

--- a/README.md

+++ b/README.md

@@ -10,64 +10,223 @@ Can be used as a module and from the command line.

See the [CHANGELOG] for details about the latest release.

-## How to use

+## Features

+

+- Uses proper line endings on various operating systems

+- Handles double quotes

+- Allows custom column selection

+- Allows specifying nested properties

+- Reads column selection from file

+- Pretty writing to stdout

+- Supports optional custom delimiters

+- Supports optional custom eol value

+- Supports optional custom quotation marks

+- Optional header.

+- If a field doesn't exist in an object, the field value in the CSV will be empty.

+- Preserve new lines in values. Should be used with \r\n line endings for full compatibility with Excel.

+- Add a BOM character at the beginning of the csv to make Excel display special characters correctly.
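The quoting and BOM features listed above can be illustrated with a minimal sketch. It assumes the common CSV convention of escaping a quote by doubling it; `toCsvCell` and `withBom` are hypothetical names used only for illustration and are not part of the json2csv API.

```javascript
'use strict';

// Hypothetical helper: wraps a string in quotes and doubles any embedded quote.
// Non-string values are emitted as-is.
function toCsvCell(value, quote = '"') {
  if (typeof value !== 'string') {
    return String(value);
  }
  return quote + value.split(quote).join(quote + quote) + quote;
}

// Hypothetical helper: prepends the Unicode byte order mark (U+FEFF).
function withBom(csv) {
  return '\ufeff' + csv;
}

const row = ['Audi "A4"', 10000, 'two\r\nlines'];
console.log(withBom(row.map(cell => toCsvCell(cell)).join(',')));
```

Line breaks inside a quoted cell are kept verbatim here, which is why the row separator should be chosen with the target application in mind.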

-Install

+## How to install

```bash

+# Global, so it can be called from anywhere

+$ npm install -g json2csv

+# or as a dependency of a project

$ npm install json2csv --save

```

-Include the module and run or [use it from the Command Line](https://github.com/zemirco/json2csv#command-line-interface). It's also possible to include `json2csv` as a global using an HTML script tag, though it's normally recommended that modules are used.

+## Command Line Interface

+

+`json2csv` can be called from the command line if installed globally (using the `-g` flag).

+

+```bash

+ Usage: json2csv [options]

+

+

+ Options:

+

+ -V, --version output the version number

+ -i, --input Path and name of the incoming json file. If not provided, will read from stdin.

+ -o, --output [output] Path and name of the resulting csv file. Defaults to stdout.

+ -L, --ldjson Treat the input as Line-Delimited JSON.

+ -s, --no-streamming Process the whole JSON array in memory instead of doing it line by line.

+ -f, --fields Specify the fields to convert.

+ -l, --field-list [list] Specify a file with a list of fields to include. One field per line.

+ -u, --unwind Creates multiple rows from a single JSON document similar to MongoDB unwind.

+ -F, --flatten Flatten nested objects

+ -v, --default-value [defaultValue] Specify a default value other than empty string.

+ -q, --quote [value] Specify an alternate quote value.

+ -Q, --double-quotes [value] Specify a value to replace double quote in strings

+ -d, --delimiter [delimiter] Specify a delimiter other than the default comma to use.

+ -e, --eol [value] Specify an End-of-Line value for separating rows.

+ -E, --excel-strings Converts string data into normalized Excel style data

+ -H, --no-header Disable the column name header

+ -a, --include-empty-rows Includes empty rows in the resulting CSV output.

+ -b, --with-bom Includes BOM character at the beginning of the csv.

+ -p, --pretty Use only when printing to console. Logs output in pretty tables.

+ -h, --help output usage information

+```

+

+An input file `-i` and fields `-f` are required. If no output `-o` is specified the result is logged to the console.

+Use `-p` to show the result in a beautiful table inside the console.

+

+### CLI examples

+

+#### Input file and specify fields

+

+```bash

+$ json2csv -i input.json -f carModel,price,color

+carModel,price,color

+"Audi",10000,"blue"

+"BMW",15000,"red"

+"Mercedes",20000,"yellow"

+"Porsche",30000,"green"

+```

+

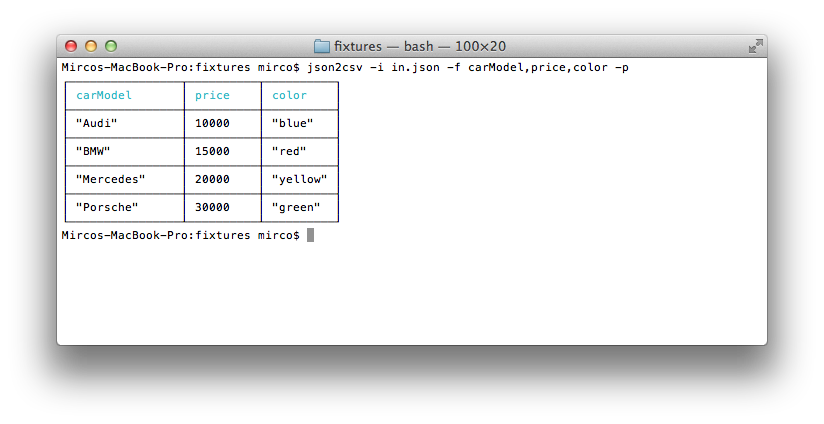

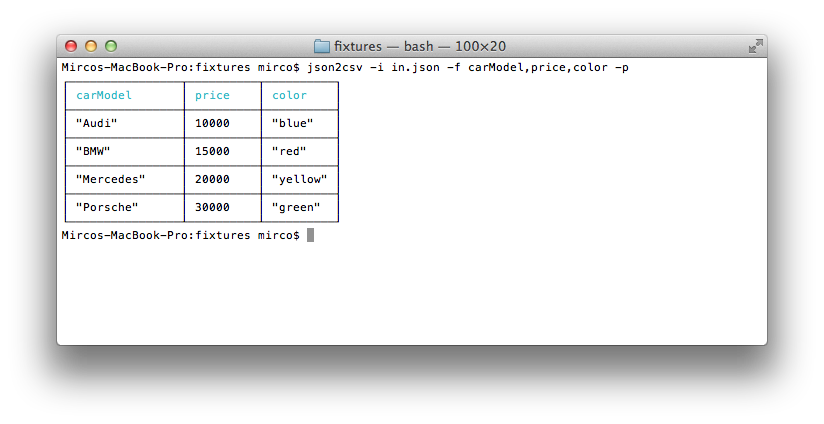

+#### Input file, specify fields and use pretty logging

+

+```bash

+$ json2csv -i input.json -f carModel,price,color -p

+```

+

+

+

+#### Generating CSV containing only specific fields

+

+```bash

+$ json2csv -i input.json -f carModel,price,color -o out.csv

+$ cat out.csv

+carModel,price,color

+"Audi",10000,"blue"

+"BMW",15000,"red"

+"Mercedes",20000,"yellow"

+"Porsche",30000,"green"

+```

+

+The same result is obtained by passing the fields as a file.

+

+```bash

+$ json2csv -i input.json -l fieldList.txt -o out.csv

+```

+

+where the file `fieldList.txt` contains

+

+```

+carModel

+price

+color

+```

+

+#### Read input from stdin

+

+```bash

+$ json2csv -f price

+[{"price":1000},{"price":2000}]

+```

+

+Hit Enter and afterwards CTRL + D to end reading from stdin. The terminal should show

+

+```

+price

+1000

+2000

+```

+

+#### Appending to existing CSV

+

+Sometimes you want to add some additional rows with the same columns.

+This is how you can do that.

+

+```bash

+# Initial creation of csv with headings

+$ json2csv -i test.json -f name,version > test.csv

+# Append additional rows

+$ json2csv -i test.json -f name,version --no-header >> test.csv

+```

+

+## Javascript module

+

+`json2csv` can also be used programmatically from your JavaScript codebase.

+### Available Options

+

+The programmatic APIs take a configuration object whose properties closely mirror the CLI options.

+

+- `fields` - Array of Objects/Strings. Defaults to toplevel JSON attributes. See example below.

+- `ldjson` - Only effective on the streaming API. Indicates that data coming through the stream is ld-json.

+- `unwind` - Array of Strings, creates multiple rows from a single JSON document similar to MongoDB's $unwind

+- `flatten` - Boolean, flattens nested JSON using [flat]. Defaults to `false`.

+- `defaultValue` - String, default value to use when missing data. Defaults to `` if not specified. (Overridden by `fields[].default`)

+- `quote` - String, quote around cell values and column names. Defaults to `"` if not specified.

+- `doubleQuote` - String, the value to replace double quote in strings. Defaults to 2x`quotes` (for example `""`) if not specified.

+- `delimiter` - String, delimiter of columns. Defaults to `,` if not specified.

+- `eol` - String, overrides the default OS line ending (i.e. `\n` on Unix and `\r\n` on Windows).

+- `excelStrings` - Boolean, converts string data into normalized Excel style data.

+- `header` - Boolean, determines whether or not CSV file will contain a title column. Defaults to `true` if not specified.

+- `includeEmptyRows` - Boolean, includes empty rows. Defaults to `false`.

+- `withBOM` - Boolean, with BOM character. Defaults to `false`.

+
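As a rough illustration of how the defaults described in the list above could be resolved, here is a minimal sketch. `resolveOptions` is a hypothetical helper written for this example, not the library's own function, and it copies the options object instead of mutating it.

```javascript
'use strict';

const os = require('os');

// Hypothetical helper: applies the documented defaults to a copy of the options.
function resolveOptions(options) {
  const opts = Object.assign({}, options);
  // `unwind` accepts a single path or an array of paths.
  opts.unwind = Array.isArray(opts.unwind)
    ? opts.unwind
    : (opts.unwind ? [opts.unwind] : []);
  opts.delimiter = opts.delimiter || ',';
  opts.eol = opts.eol || os.EOL;
  // An empty string is a valid quote, so check the type instead of truthiness.
  opts.quote = typeof opts.quote === 'string' ? opts.quote : '"';
  // The escaped form of a quote defaults to the quote written twice.
  opts.doubleQuote = typeof opts.doubleQuote === 'string'
    ? opts.doubleQuote
    : opts.quote + opts.quote;
  opts.header = opts.header !== false;
  opts.includeEmptyRows = opts.includeEmptyRows || false;
  opts.withBOM = opts.withBOM || false;
  return opts;
}

console.log(resolveOptions({ quote: "'", unwind: 'colors' }));
```

Checking `typeof opts.quote === 'string'` rather than truthiness keeps `quote: ''` usable for output without quotation marks.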

+### json2csv parser (Synchronous API)

+

+`json2csv` can also be used programmatically as a synchronous converter using its `parse` method.

```javascript

-const json2csv = require('json2csv');

+const Json2csvParser = require('json2csv').Parser;

const fields = ['field1', 'field2', 'field3'];

+const opts = { fields };

try {

- const result = json2csv(myData, { fields });

- console.log(result);

+ const parser = new Json2csvParser(opts);

+ const csv = parser.parse(myData);

+ console.log(csv);

} catch (err) {

- // Errors are thrown for bad options, or if the data is empty and no fields are provided.

- // Be sure to provide fields if it is possible that your data array will be empty.

console.error(err);

}

```

-[other examples](#example-1)

-## Features

+You can also use the convenience method `parse`:

-- Uses proper line endings on various operating systems

-- Handles double quotes

-- Allows custom column selection

-- Allows specifying nested properties

-- Reads column selection from file

-- Pretty writing to stdout

-- Supports optional custom delimiters

-- Supports optional custom eol value

-- Supports optional custom quotation marks

-- Not create CSV column title by passing header: false, into params.

-- If field is not exist in object then the field value in CSV will be empty.

-- Preserve new lines in values. Should be used with \r\n line endings for full compatibility with Excel.

-- Add a BOM character at the beginning of the csv to make Excel displaying special characters correctly.

+```javascript

+const json2csv = require('json2csv').parse;

+const fields = ['field1', 'field2', 'field3'];

+const opts = { fields };

-## Use as a module

+try {

+ const csv = json2csv(myData, opts);

+ console.log(csv);

+} catch (err) {

+ console.error(err);

+}

+```

-### Available Options

+### json2csv transform (Streaming API)

+

+The `parse` method is convenient but has the downside of loading the entire JSON array in memory. This might not be optimal, or even possible, for large JSON files.

+

+For such cases json2csv offers a stream transform: pipe your JSON content into it and it will output the resulting CSV.

+

+One very important difference between the transform and the parser is that the JSON objects are processed one by one. In practice, this means that only the fields in the first object of the array are considered, and fields in other objects that were not present in the first one are ignored. To avoid this, it's advisable to ensure that all the objects contain exactly the same fields, or to provide the list of fields using the `fields` option.

+
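The consequence of processing objects one by one can be seen in a small, self-contained sketch. `convertOneByOne` is a hypothetical stand-in for a row-by-row converter, not the json2csv transform itself; it only assumes that, without an explicit field list, the columns are fixed by the first object.

```javascript
'use strict';

// Hypothetical row-by-row converter used only to illustrate the behaviour.
function convertOneByOne(rows, fields) {
  let columns = fields;
  const lines = [];
  for (const row of rows) {
    if (lines.length === 0) {
      // Without an explicit list, the first object decides the columns.
      columns = columns || Object.keys(row);
      lines.push(columns.join(','));
    }
    lines.push(
      columns
        .map(name => (name in row ? JSON.stringify(row[name]) : ''))
        .join(',')
    );
  }
  return lines.join('\n');
}

const rows = [{ car: 'Audi' }, { car: 'BMW', price: 15000 }];

// `price` is silently dropped because the first object does not have it.
console.log(convertOneByOne(rows));

// Passing the fields explicitly keeps the column.
console.log(convertOneByOne(rows, ['car', 'price']));
```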

+```javascript

+const fs = require('fs');

+const Json2csvTransform = require('json2csv').Transform;

-- `options` - **Required**; Options hash.

- - `fields` - Array of Objects/Strings. Defaults to toplevel JSON attributes. See example below.

- - `unwind` - Array of Strings, creates multiple rows from a single JSON document similar to MongoDB's $unwind

- - `flatten` - Boolean, flattens nested JSON using [flat]. Defaults to `false`.

- - `defaultValue` - String, default value to use when missing data. Defaults to `` if not specified. (Overridden by `fields[].default`)

- - `quote` - String, quote around cell values and column names. Defaults to `"` if not specified.

- - `doubleQuote` - String, the value to replace double quote in strings. Defaults to 2x`quotes` (for example `""`) if not specified.

- - `delimiter` - String, delimiter of columns. Defaults to `,` if not specified.

- - `eol` - String, overrides the default OS line ending (i.e. `\n` on Unix and `\r\n` on Windows).

- - `excelStrings` - Boolean, converts string data into normalized Excel style data.

- - `header` - Boolean, determines whether or not CSV file will contain a title column. Defaults to `true` if not specified.

- - `includeEmptyRows` - Boolean, includes empty rows. Defaults to `false`.

- - `withBOM` - Boolean, with BOM character. Defaults to `false`.

+const fields = ['field1', 'field2', 'field3'];

+const opts = { fields };

+

+const input = fs.createReadStream(inputPath, { encoding: 'utf8' });

+const output = fs.createWriteStream(outputPath, { encoding: 'utf8' });

+const json2csv = new Json2csvTransform(opts);

+

+const processor = input.pipe(json2csv).pipe(output);

+

+// You can also listen for events on the conversion and see how the header or the lines are coming out.

+json2csv

+ .on('header', header => console.log(header))

+ .on('line', line => console.log(line))

+ .on('error', err => console.log(err));

+```

+

+### Javascript module examples

#### Example `fields` option

``` javascript

@@ -90,12 +249,12 @@ try {

// Support pathname -> pathvalue

'simplepath', // equivalent to {value:'simplepath'}

- 'path.to.value' // also equivalent to {label:'path.to.value', value:'path.to.value'}

+ 'path.to.value' // also equivalent to {value:'path.to.value'}

]

}

```
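To make the dot notation in the `fields` example above concrete, here is a minimal sketch of a nested lookup. `getPath` is a hypothetical, simplified stand-in written for this example; it handles plain dot-separated keys only and returns the default when the resolved value is `undefined`.

```javascript
'use strict';

// Hypothetical helper: resolves 'a.b.c' against a nested object.
function getPath(row, path, defaultValue) {
  let current = row;
  for (const key of path.split('.')) {
    if (current === null || current === undefined) {
      return defaultValue;
    }
    current = current[key];
  }
  return current === undefined ? defaultValue : current;
}

const car = { car: { make: 'Audi', model: 'A3' }, price: 40000 };

console.log(getPath(car, 'car.make'));
console.log(getPath(car, 'car.year', 'n/a'));
```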

-### Example 1

+#### Example 1

```javascript

const json2csv = require('json2csv');

@@ -118,13 +277,10 @@ const myCars = [

];

const csv = json2csv(myCars, { fields });

-fs.writeFile('file.csv', csv, (err) => {

- if (err) throw err;

- console.log('file saved');

-});

+console.log(csv);

```

-The content of the "file.csv" should be

+will output to console

```

car, price, color

@@ -133,7 +289,7 @@ car, price, color

"Porsche", 60000, "green"

```

-### Example 2

+#### Example 2

Similarly to [mongoexport](http://www.mongodb.org/display/DOCS/mongoexport) you can choose which fields to export.

@@ -155,31 +311,7 @@ car, color

"Porsche", "green"

```

-### Example 3

-

-Use a custom delimiter to create tsv files. Add it as the value of the delimiter property on the parameters:

-

-```javascript

-const json2csv = require('json2csv');

-const fields = ['car', 'price', 'color'];

-const tsv = json2csv(myCars, { fields, delimiter: '\t' });

-

-console.log(tsv);

-```

-

-Will output:

-

-```

-car price color

-"Audi" 10000 "blue"

-"BMW" 15000 "red"

-"Mercedes" 20000 "yellow"

-"Porsche" 30000 "green"

-```

-

-If no delimiter is specified, the default `,` is used

-

-### Example 4

+#### Example 3

You can choose custom column names for the exported file.

@@ -197,34 +329,7 @@ const csv = json2csv(myCars, { fields });

console.log(csv);

```

-### Example 5

-

-You can choose custom quotation marks.

-

-```javascript

-const json2csv = require('json2csv');

-const fields = [{

- label: 'Car Name',

- value: 'car'

-},{

- label: 'Price USD',

- value: 'price'

-}];

-const csv = json2csv(myCars, { fields, quote: '' });

-

-console.log(csv);

-```

-

-Results in

-

-```

-Car Name, Price USD

-Audi, 10000

-BMW, 15000

-Porsche, 30000

-```

-

-### Example 6

+#### Example 4

You can also specify nested properties using dot notation.

@@ -249,13 +354,10 @@ const myCars = [

];

const csv = json2csv(myCars, { fields });

-fs.writeFile('file.csv', csv, (err) => {

- if (err) throw err;

- console.log('file saved');

-});

+console.log(csv);

```

-The content of the "file.csv" should be

+will output to console

```

car.make, car.model, price, color

@@ -264,7 +366,58 @@ car.make, car.model, price, color

"Porsche", "9PA AF1", 60000, "green"

```

-### Example 7

+#### Example 5

+

+Use a custom delimiter to create tsv files. Add it as the value of the delimiter property on the parameters:

+

+```javascript

+const json2csv = require('json2csv');

+const fields = ['car', 'price', 'color'];

+const tsv = json2csv(myCars, { fields, delimiter: '\t' });

+

+console.log(tsv);

+```

+

+Will output:

+

+```

+car price color

+"Audi" 10000 "blue"

+"BMW" 15000 "red"

+"Mercedes" 20000 "yellow"

+"Porsche" 30000 "green"

+```

+

+If no delimiter is specified, the default `,` is used

+

+#### Example 6

+

+You can choose custom quotation marks.

+

+```javascript

+const json2csv = require('json2csv');

+const fields = [{

+ label: 'Car Name',

+ value: 'car'

+},{

+ label: 'Price USD',

+ value: 'price'

+}];

+const csv = json2csv(myCars, { fields, quote: '' });

+

+console.log(csv);

+```

+

+Results in

+

+```

+Car Name, Price USD

+Audi, 10000

+BMW, 15000

+Porsche, 30000

+```

+

+#### Example 7

You can unwind arrays similar to MongoDB's $unwind operation using the `unwind` option.

@@ -293,13 +446,10 @@ const myCars = [

];

const csv = json2csv(myCars, { fields, unwind: 'colors' });

-fs.writeFile('file.csv', csv, (err) => {

- if (err) throw err;

- console.log('file saved');

-});

+console.log(csv);

```

-The content of the "file.csv" should be

+will output to console

```

"carModel","price","colors"

@@ -314,7 +464,7 @@ The content of the "file.csv" should be

"Porsche",30000,"aqua"

```

-### Example 8

+#### Example 8

You can also unwind arrays multiple times or with nested objects.

@@ -367,13 +517,10 @@ const myCars = [

];

const csv = json2csv(myCars, { fields, unwind: ['items', 'items.items'] });

-fs.writeFile('file.csv', csv, (err) => {

- if (err) throw err;

- console.log('file saved');

-});

+console.log(csv);

```

-The content of the "file.csv" should be

+will output to console

```

"carModel","price","items.name","items.color","items.items.position","items.items.color"

@@ -385,176 +532,35 @@ The content of the "file.csv" should be

"Porsche",30000,"dashboard",,"right","black"

```

-## Command Line Interface

-

-`json2csv` can also be called from the command line if installed with `-g`.

-

-```bash

- Usage: json2csv [options]

-

-

- Options:

-

- -V, --version output the version number

- -i, --input Path and name of the incoming json file. If not provided, will read from stdin.

- -o, --output [output] Path and name of the resulting csv file. Defaults to stdout.

- -L, --ldjson Treat the input as Line-Delimited JSON.

- -f, --fields Specify the fields to convert.

- -l, --field-list [list] Specify a file with a list of fields to include. One field per line.

- -u, --unwind Creates multiple rows from a single JSON document similar to MongoDB unwind.

- -F, --flatten Flatten nested objects

- -v, --default-value [defaultValue] Specify a default value other than empty string.

- -q, --quote [value] Specify an alternate quote value.

- -dq, --double-quotes [value] Specify a value to replace double quote in strings

- -d, --delimiter [delimiter] Specify a delimiter other than the default comma to use.

- -e, --eol [value] Specify an End-of-Line value for separating rows.

- -ex, --excel-strings Converts string data into normalized Excel style data

- -n, --no-header Disable the column name header

- -a, --include-empty-rows Includes empty rows in the resulting CSV output.

- -b, --with-bom Includes BOM character at the beginning of the csv.

- -p, --pretty Use only when printing to console. Logs output in pretty tables.

- -h, --help output usage information

-```

-

-An input file `-i` and fields `-f` are required. If no output `-o` is specified the result is logged to the console.

-Use `-p` to show the result in a beautiful table inside the console.

-

-### CLI examples

-

-#### Input file and specify fields

+## Building

-```bash

-$ json2csv -i input.json -f carModel,price,color

-```

-

-```

-carModel,price,color

-"Audi",10000,"blue"

-"BMW",15000,"red"

-"Mercedes",20000,"yellow"

-"Porsche",30000,"green"

-```

-

-#### Input file, specify fields and use pretty logging

-

-```bash

-$ json2csv -i input.json -f carModel,price,color -p

-```

-

-

-

-#### Input file, specify fields and write to file

-

-```bash

-$ json2csv -i input.json -f carModel,price,color -o out.csv

-```

-

-Content of `out.csv` is

-

-```

-carModel,price,color

-"Audi",10000,"blue"

-"BMW",15000,"red"

-"Mercedes",20000,"yellow"

-"Porsche",30000,"green"

-```

-

-#### Input file, use field list and write to file

-

-The file `fieldList` contains

-

-```

-carModel

-price

-color

-```

-

-Use the following command with the `-l` flag

-

-```bash

-$ json2csv -i input.json -l fieldList -o out.csv

-```

-

-Content of `out.csv` is

-

-```

-carModel,price,color

-"Audi",10000,"blue"

-"BMW",15000,"red"

-"Mercedes",20000,"yellow"

-"Porsche",30000,"green"

-```

-

-#### Read from stdin

-

-```bash

-$ json2csv -f price

-[{"price":1000},{"price":2000}]

-```

-

-Hit Enter and afterwards CTRL + D to end reading from stdin. The terminal should show

+When developing, it's necessary to run `webpack` to prepare the built script. This can be done easily with `npm run build`.

-```

-price

-1000

-2000

-```

+If `webpack` is not already available from the command line, use `npm install -g webpack`.

-#### Appending to existing CSV

+## Testing

-Sometimes you want to add some additional rows with the same columns.

-This is how you can do that.

+Run the following command to check the code style.

```bash

-# Initial creation of csv with headings

-$ json2csv -i test.json -f name,version > test.csv

-# Append additional rows

-$ json2csv -i test.json -f name,version --no-header >> test.csv

-```

-

-## Include using a script tag (not recommended)

-

-If it's not possible to work with node modules, `json2csv` can be declared as a global by requesting `dist/json2csv.js` via an HTML script tag:

-

-```

-

-

+$ npm run lint

```

-### Building

-

-When developing, it's necessary to run `webpack` to prepare the built script. This can be done easily with `npm run build`.

-

-If `webpack` is not already available from the command line, use `npm install -g webpack`.

-

-## Testing

-

-Run the following command to test and return coverage

+Run the following command to run the tests and return coverage

```bash

-$ npm test

+$ npm run test-with-coverage

```

## Contributors

-Install require packages for development run following command under json2csv dir.

-

-Run

+After you clone the repository, you just need to install the required packages for development by running the following command under the json2csv dir.

```bash

$ npm install

```

-Could you please make sure code is formatted and test passed before submit Pull Requests?

-

-See Testing section above.

-

-## But I want streams!

-

-Check out my other module [json2csv-stream](https://github.com/zemirco/json2csv-stream). It transforms an incoming

-stream containing `json` data into an outgoing `csv` stream.

+Before making any pull request, please ensure that your code is formatted, tests are passing and test coverage hasn't decreased. (See [Testing](#testing))

## Similar Projects

diff --git a/bin/json2csv.js b/bin/json2csv.js

index d7498695..ecf57fda 100755

--- a/bin/json2csv.js

+++ b/bin/json2csv.js

@@ -12,27 +12,46 @@ const json2csv = require('../lib/json2csv');

const parseLdJson = require('../lib/parse-ldjson');

const pkg = require('../package');

+const JSON2CSVParser = json2csv.Parser;

+const Json2csvTransform = json2csv.Transform;

+

program

.version(pkg.version)

.option('-i, --input ', 'Path and name of the incoming json file. If not provided, will read from stdin.')

.option('-o, --output [output]', 'Path and name of the resulting csv file. Defaults to stdout.')

.option('-L, --ldjson', 'Treat the input as Line-Delimited JSON.')

+ .option('-s, --no-streamming', 'Process the whole JSON array in memory instead of doing it line by line.')

.option('-f, --fields ', 'Specify the fields to convert.')

.option('-l, --field-list [list]', 'Specify a file with a list of fields to include. One field per line.')

.option('-u, --unwind ', 'Creates multiple rows from a single JSON document similar to MongoDB unwind.')

.option('-F, --flatten', 'Flatten nested objects')

.option('-v, --default-value [defaultValue]', 'Specify a default value other than empty string.')

.option('-q, --quote [value]', 'Specify an alternate quote value.')

- .option('-dq, --double-quotes [value]', 'Specify a value to replace double quote in strings')

+ .option('-Q, --double-quotes [value]', 'Specify a value to replace double quote in strings')

.option('-d, --delimiter [delimiter]', 'Specify a delimiter other than the default comma to use.')

.option('-e, --eol [value]', 'Specify an End-of-Line value for separating rows.')

- .option('-ex, --excel-strings','Converts string data into normalized Excel style data')

- .option('-n, --no-header', 'Disable the column name header')

+ .option('-E, --excel-strings','Converts string data into normalized Excel style data')

+ .option('-H, --no-header', 'Disable the column name header')

.option('-a, --include-empty-rows', 'Includes empty rows in the resulting CSV output.')

.option('-b, --with-bom', 'Includes BOM character at the beginning of the csv.')

.option('-p, --pretty', 'Use only when printing to console. Logs output in pretty tables.')

.parse(process.argv);

+const inputPath = (program.input && !path.isAbsolute(program.input))

+ ? path.join(process.cwd(), program.input)

+ : program.input;

+

+const outputPath = (program.output && !path.isAbsolute(program.output))

+ ? path.join(process.cwd(), program.output)

+ : program.output;

+

+// don't fail if piped to e.g. head

+process.stdout.on('error', (error) => {

+ if (error.code === 'EPIPE') {

+ process.exit();

+ }

+});

+

function getFields(fieldList, fields) {

if (fieldList) {

return new Promise((resolve, reject) => {

@@ -54,11 +73,7 @@ function getFields(fieldList, fields) {

}

function getInput(input, ldjson) {

- if (input) {

- const inputPath = path.isAbsolute(input)

- ? input

- : path.join(process.cwd(), input);

-

+ if (inputPath) {

if (ldjson) {

return new Promise((resolve, reject) => {

fs.readFile(inputPath, 'utf8', (err, data) => {

@@ -103,14 +118,27 @@ function logPretty(csv) {

return table.toString();

}

-Promise.all([

- getInput(program.input, program.ldjson),

- getFields(program.fieldList, program.fields)

-])

- .then((results) => {

- const input = results[0];

- const fields = results[1];

+function processOutput(csv) {

+ if (outputPath) {

+ return new Promise((resolve, reject) => {

+ fs.writeFile(outputPath, csv, (err) => {

+ if (err) {

+ reject(new Error('Cannot save to ' + program.output + ': ' + err));

+ return;

+ }

+

+ debug(program.input + ' successfully converted to ' + program.output);

+ resolve();

+ });

+ });

+ }

+

+ // eslint-disable-next-line no-console

+ console.log(program.pretty ? logPretty(csv) : csv);

+}

+getFields(program.fieldList, program.fields)

+ .then((fields) => {

const opts = {

fields: fields,

unwind: program.unwind ? program.unwind.split(',') : [],

@@ -126,32 +154,42 @@ Promise.all([

withBOM: program.withBom

};

- return json2csv(input, opts);

- })

- .then((csv) => {

+ if (program.streamming === false) {

+ return getInput(program.input, program.ldjson)

+ .then(input => new JSON2CSVParser(opts).parse(input))

+ .then(processOutput);

+ }

+

+ const transform = new Json2csvTransform(opts);

+ const input = fs.createReadStream(inputPath, { encoding: 'utf8' });

+ const stream = input.pipe(transform);

+

if (program.output) {

+ const output = fs.createWriteStream(outputPath, { encoding: 'utf8' });

+ const outputStream = stream.pipe(output);

return new Promise((resolve, reject) => {

- fs.writeFile(program.output, csv, (err) => {

- if (err) {

- reject(Error('Cannot save to ' + program.output + ': ' + err));

- return;

- }

-

- debug(program.input + ' successfully converted to ' + program.output);

- resolve();

- });

+ outputStream.on('finish', () => resolve()); // resolve once all data has been flushed to the file

+ outputStream.on('error', reject); // surface write errors to the promise chain

});

}

- // don't fail if piped to e.g. head

- process.stdout.on('error', (error) => {

- if (error.code === 'EPIPE') {

- process.exit();

- }

- });

+ if (!program.pretty) {

+ const output = stream.pipe(process.stdout);

+ return new Promise((resolve, reject) => {

+ output.on('finish', () => resolve()); // resolve once the output stream has finished

+ output.on('error', reject); // surface write errors to the promise chain

+ });

+ }

- // eslint-disable-next-line no-console

- console.log(program.pretty ? logPretty(csv) : csv);

+ let csv = '';

+ return new Promise((resolve, reject) => {

+ stream

+ .on('data', chunk => (csv += chunk.toString()))

+ .on('end', () => resolve(csv))

+ .on('error', reject);

+ })

+ // eslint-disable-next-line no-console

+ .then(() => console.log(logPretty(csv)));

})

// eslint-disable-next-line no-console

.catch(console.log);

diff --git a/index.d.ts b/index.d.ts

index fa097c35..865ae33f 100644

--- a/index.d.ts

+++ b/index.d.ts

@@ -1,6 +1,6 @@

declare namespace json2csv {

interface FieldValueCallback {

- (row: T, field: string, data: string): string;

+ (row: T, field: string): string;

}

interface FieldBase {

@@ -17,6 +17,7 @@ declare namespace json2csv {

}

interface Options {

+ ldjson?: boolean;

fields?: (string | Field | CallbackField)[];

unwind?: string | string[];

flatten?: boolean;

@@ -30,15 +31,9 @@ declare namespace json2csv {

includeEmptyRows?: boolean;

withBOM?: boolean;

}

-

- interface Callback {

- (error: Error, csv: string): void;

- }

}

-declare function json2csv(data: Any, options: json2csv.Options, callback: json2csv.Callback): void;

-declare function json2csv(data: Any, options: json2csv.Options): string;

-declare function json2csv(data: Any, options: json2csv.Options<{ [key: string]: string; }>, callback: json2csv.Callback): void;

-declare function json2csv(data: Any, options: json2csv.Options<{ [key: string]: string; }>): string;

+declare function parse(data: Any, options: json2csv.Options): string;

+declare function parse(data: Any, options: json2csv.Options<{ [key: string]: string; }>): string;

-export = json2csv;

+export = parse;

diff --git a/lib/JSON2CSVBase.js b/lib/JSON2CSVBase.js

new file mode 100644

index 00000000..cac5b7e1

--- /dev/null

+++ b/lib/JSON2CSVBase.js

@@ -0,0 +1,216 @@

+'use strict';

+

+const os = require('os');

+const lodashGet = require('lodash.get');

+const lodashSet = require('lodash.set');

+const lodashCloneDeep = require('lodash.clonedeep');

+const flatten = require('flat');

+

+class JSON2CSVBase {

+ constructor(params) {

+ this.params = this.preprocessParams(params);

+ }

+

+ /**

+ * Check the passed params and set defaults.

+ *

+ * @param {Json2CsvParams} params Function parameters containing fields,

+ * delimiter, default value, quote mark and header

+ */

+ preprocessParams(params) {

+ const processedParams = params || {};

+ processedParams.unwind = !Array.isArray(processedParams.unwind)

+ ? (processedParams.unwind ? [processedParams.unwind] : [])

+ : processedParams.unwind

+ processedParams.delimiter = processedParams.delimiter || ',';

+ processedParams.eol = processedParams.eol || os.EOL;

+ processedParams.quote = typeof processedParams.quote === 'string'

+ ? processedParams.quote

+ : '"';

+ processedParams.doubleQuote = typeof processedParams.doubleQuote === 'string'

+ ? processedParams.doubleQuote

+ : Array(3).join(processedParams.quote);

+ processedParams.defaultValue = processedParams.defaultValue;

+ processedParams.header = processedParams.header !== false;

+ processedParams.includeEmptyRows = processedParams.includeEmptyRows || false;

+ processedParams.withBOM = processedParams.withBOM || false;

+

+ return processedParams;

+ }

+

+ /**

+ * Create the title row with all the provided fields as column headings

+ *

+ * @returns {String} titles as a string

+ */

+ getHeader() {

+ return this.params.fields

+ .map(field =>

+ (typeof field === 'string')

+ ? field

+ : (field.label || field.value)

+ ).map(header =>

+ JSON.stringify(header)

+ .replace(/"/g, this.params.quote)

+ )

+ .join(this.params.delimiter);

+ }

+

+ /**

+ * Preprocess each object according to the given params (unwind, flatten, etc.).

+ *

+ * @param {Object} row JSON object to be converted in a CSV row

+ */

+ preprocessRow(row) {

+ const processedRow = (this.params.unwind && this.params.unwind.length)

+ ? this.unwindData(row, this.params.unwind)

+ : [row];

+ if (this.params.flatten) {

+ return processedRow.map(flatten);

+ }

+

+ return processedRow;

+ }

+

+ /**

+ * Create the content of a specific CSV row

+ *

+ * @param {Object} row JSON object to be converted in a CSV row

+ * @returns {String} CSV string (row)

+ */

+ processRow(row) {

+ if (!row

+ || (Object.getOwnPropertyNames(row).length === 0

+ && !this.params.includeEmptyRows)) {

+ return undefined;

+ }

+

+ return this.params.fields

+ .map(fieldInfo => this.processField(row, fieldInfo))

+ .join(this.params.delimiter);

+ }

+

+ /**

+ * Create the content of a specific CSV row cell

+ *

+ * @param {Object} row JSON object representing the CSV row that the cell belongs to

+ * @param {Object} fieldInfo Details of the field to process to be a CSV cell

+ * @param {Object} params Function parameters

+ * @returns {String} CSV string (cell)

+ */

+ processField(row, fieldInfo) {

+ const isFieldInfoObject = typeof fieldInfo === 'object';

+ const defaultValue = isFieldInfoObject && 'default' in fieldInfo

+ ? fieldInfo.default

+ : this.params.defaultValue;

+ const stringify = isFieldInfoObject && fieldInfo.stringify !== undefined

+ ? fieldInfo.stringify

+ : true;

+

+ let value;

+ if (fieldInfo) {

+ if (typeof fieldInfo === 'string') {

+ value = lodashGet(row, fieldInfo, defaultValue);

+ } else if (typeof fieldInfo === 'object') {

+ if (typeof fieldInfo.value === 'string') {

+ value = lodashGet(row, fieldInfo.value, defaultValue);

+ } else if (typeof fieldInfo.value === 'function') {

+ const field = {

+ label: fieldInfo.label,

+ default: fieldInfo.default

+ };

+ value = fieldInfo.value(row, field);

+ }

+ }

+ }

+

+ value = (value === null || value === undefined)

+ ? defaultValue

+ : value;

+

+ if (value === null || value === undefined) {

+ return undefined;

+ }

+

+ const isValueString = typeof value === 'string';

+ if (isValueString) {

+ value = value

+ .replace(/\n/g, '\u2028')

+ .replace(/\r/g, '\u2029');

+ }

+

+ //JSON.stringify('\\') results in a string with two backslash

+ //characters in it. I.e. '\\\\'.

+ let stringifiedValue = (stringify

+ ? JSON.stringify(value)

+ : value);

+

+ if (typeof value === 'object' && !/^"(.*)"$/.test(stringifiedValue)) {

+ // Stringify objects that are not stringified to a

+ // JSON string (like Date) to escape commas, quotes, etc.

+ stringifiedValue = JSON.stringify(stringifiedValue);

+ }

+

+ if (stringifiedValue === undefined) {

+ return undefined;

+ }

+

+ if (isValueString) {

+ stringifiedValue = stringifiedValue

+ .replace(/\u2028/g, '\n')

+ .replace(/\u2029/g, '\r');

+ }

+

+ //Replace single quote with double quote. Single quotes are preceded by

+ //a backslash, and it's not at the end of the stringifiedValue.

+ stringifiedValue = stringifiedValue

+ .replace(/^"(.*)"$/, this.params.quote + '$1' + this.params.quote)

+ .replace(/(\\")(?=.)/g, this.params.doubleQuote)

+ .replace(/\\\\/g, '\\');

+

+ if (this.params.excelStrings && typeof value === 'string') {

+ stringifiedValue = '"="' + stringifiedValue + '""';

+ }

+

+ return stringifiedValue;

+ }

+

+ /**

+ * Performs the unwind recursively in specified sequence

+ *

+ * @param {Object} dataRow Original JSON object

+ * @param {String[]} unwindPaths The paths as strings to be used to deconstruct the array

+ * @returns {Array} Array of objects containing all rows after unwind of chosen paths

+ */

+ unwindData(dataRow, unwindPaths) {

+ return Array.prototype.concat.apply([],

+ unwindPaths.reduce((data, unwindPath) =>

+ Array.prototype.concat.apply([],

+ data.map((dataEl) => {

+ const unwindArray = lodashGet(dataEl, unwindPath);

+

+ if (!Array.isArray(unwindArray)) {

+ return dataEl;

+ }

+

+ if (unwindArray.length) {

+ return unwindArray.map((unwindEl) => {

+ const dataCopy = lodashCloneDeep(dataEl);

+ lodashSet(dataCopy, unwindPath, unwindEl);

+ return dataCopy;

+ });

+ }

+

+ const dataCopy = lodashCloneDeep(dataEl);

+ lodashSet(dataCopy, unwindPath, undefined);

+ return dataCopy;

+ })

+ ),

+ [dataRow]

+ )

+ )

+ }

+}

+

+module.exports = JSON2CSVBase;

diff --git a/lib/JSON2CSVParser.js b/lib/JSON2CSVParser.js

new file mode 100644

index 00000000..181e9eea

--- /dev/null

+++ b/lib/JSON2CSVParser.js

@@ -0,0 +1,68 @@

+'use strict';

+

+const JSON2CSVBase = require('./JSON2CSVBase');

+

+class JSON2CSVParser extends JSON2CSVBase {

+ /**

+ * Main function that converts json to csv.

+ *

+ * @param {Array} data Array of JSON objects to be converted to CSV

+ * @param {Json2CsvParams} params parameters containing data and

+ * options to configure how that data is processed.

+ * @returns {String} The CSV formatted data as a string

+ */

+ parse(data) {

+ const processedData = this.preprocessData(data);

+

+ if (!this.params.fields) {

+ const dataFields = Array.prototype.concat.apply([],

+ processedData.map(item => Object.keys(item))

+ );

+ this.params.fields = dataFields

+ .filter((field, pos, arr) => arr.indexOf(field) == pos);

+ }

+

+ const header = this.params.header ? this.getHeader() : '';

+ const rows = this.processData(processedData);

+ const csv = (this.params.withBOM ? '\ufeff' : '')

+ + header

+ + ((header && rows) ? this.params.eol : '')

+ + rows;

+

+ return csv;

+ }

+

+ /**

+ * Preprocess the data according to the given params (unwind, flatten, etc.)

+ and calculate the fields and field names if they are not provided.

+ *

+ * @param {Array|Object} data Array or object to be converted to CSV

+ */

+ preprocessData(data) {

+ const processedData = Array.isArray(data) ? data : [data];

+

+ if (processedData.length === 0 || typeof processedData[0] !== 'object') {

+ throw new Error('params should include "fields" and/or non-empty "data" array of objects');

+ }

+

+ return Array.prototype.concat.apply([],

+ processedData.map(row => this.preprocessRow(row))

+ );

+ }

+

+ /**

+ * Create the content row by row below the header

+ *

+ * @param {Array} data Array of JSON objects to be converted to CSV

+ * @param {Object} params Function parameters

+ * @returns {String} CSV string (body)

+ */

+ processData(data) {

+ return data

+ .map(row => this.processRow(row))

+ .filter(row => row) // Filter empty rows

+ .join(this.params.eol);

+ }

+}

+

+module.exports = JSON2CSVParser

\ No newline at end of file

diff --git a/lib/JSON2CSVTransform.js b/lib/JSON2CSVTransform.js

new file mode 100644

index 00000000..e1b30eb9

--- /dev/null

+++ b/lib/JSON2CSVTransform.js

@@ -0,0 +1,153 @@

+'use strict';

+

+const Transform = require('stream').Transform;

+const Parser = require('jsonparse');

+const JSON2CSVBase = require('./JSON2CSVBase');

+

+class JSON2CSVTransform extends Transform {

+ constructor(params) {

+ super(params);

+

+ // Inherit methods from JSON2CSVBase since extends doesn't

+ // allow multiple inheritance and manually preprocess params

+ Object.getOwnPropertyNames(JSON2CSVBase.prototype)

+ .forEach(key => (this[key] = JSON2CSVBase.prototype[key]));

+ this.params = this.preprocessParams(params);

+

+ this._data = '';

+ this._hasWritten = false;

+

+ if (this.params.ldjson) {

+ this.initLDJSONParse();

+ } else {

+ this.initJSONParser();

+ }

+

+ if (this.params.withBOM) {

+ this.push('\ufeff');

+ }

+

+ }

+

+ /**

+ * Init the transform with a parser to process LD-JSON data.

+ * It maintains a buffer of received data, parses each line

+ * as JSON and sends it to `pushLine` for processing.

+ */

+ initLDJSONParse() {

+ const transform = this;

+

+ this.parser = {

+ _data: '',

+ write(chunk) {

+ this._data += chunk.toString();

+ const lines = this._data

+ .split('\n')

+ .map(line => line.trim())

+ .filter(line => line !== '');

+

+ lines

+ .forEach((line, i) => {

+ try {

+ transform.pushLine(JSON.parse(line));

+ } catch(e) {

+ if (i !== lines.length - 1) {

+ e.message = 'Invalid JSON (' + line + ')'

+ transform.emit('error', e);

+ }

+ }

+ });

+ this._data = this._data.slice(this._data.lastIndexOf('\n'));

+ }

+ };

+ }

+

+ /**

+ * Init the transform with a parser to process JSON data.

+ * It maintains a buffer of received data, parses each item as JSON

+ * if the data is an array, or the data itself otherwise,

+ * and sends it to `pushLine` for processing.

+ */

+ initJSONParser() {

+ const transform = this;

+ this.parser = new Parser();

+ this.parser.onValue = function (value) {

+ if (this.stack.length !== this.depthToEmit) return;

+ transform.pushLine(value);

+ }

+

+ this.parser._onToken = this.parser.onToken;

+

+ this.parser.onToken = function (token, value) {

+ transform.parser._onToken(token, value);

+

+ if (this.stack.length === 0

+ && !transform.params.fields

+ && this.mode !== Parser.C.ARRAY

+ && this.mode !== Parser.C.OBJECT) {

+ this.onError(new Error('params should include "fields" and/or non-empty "data" array of objects'));

+ }

+ if (this.stack.length === 1) {

+ if(this.depthToEmit === undefined) {

+ // If Array emit its content, else emit itself

+ this.depthToEmit = (this.mode === Parser.C.ARRAY) ? 1 : 0;

+ }

+

+ if (this.depthToEmit !== 0 && this.stack.length === 1) {

+ // No need to store the whole root array in memory

+ this.value = undefined;

+ }

+ }

+ }

+

+ this.parser.onError = function (err) {

+ if(err.message.indexOf('Unexpected') > -1) {

+ err.message = 'Invalid JSON (' + err.message + ')';

+ }

+ transform.emit('error', err);

+ }

+ }

+

+ /**

+ * Main function that sends data to the parser to be processed.

+ *

+ * @param {Buffer} chunk Incoming data

+ * @param {String} encoding Encoding of the incoming data. Defaults to 'utf8'

+ * @param {Function} done Called when the processing of the supplied chunk is done

+ */

+ _transform(chunk, encoding, done) {

+ this.parser.write(chunk);

+ done();

+ }

+

+ /**

+ * Transforms incoming JSON data to CSV and pushes it downstream.

+ *

+ * @param {Object} data JSON object to be converted into a CSV row

+ */

+ pushLine(data) {

+ const processedData = this.preprocessRow(data);

+

+ if (!this._hasWritten) {

+ this.params.fields = this.params.fields || Object.keys(processedData[0]);

+ if (this.params.header) {

+ const header = this.getHeader(this.params);

+ this.emit('header', header);

+ this.push(header);

+ this._hasWritten = true;

+ }

+ }

+

+ processedData.forEach(row => {

+ const line = this.processRow(row, this.params);

+ if (line === undefined) return;

+ const eoledLine = (this._hasWritten ? this.params.eol : '')

+ + line;

+ this.emit('line', eoledLine);

+ this.push(eoledLine);

+ this._hasWritten = true;

+ });

+ }

+}

+

+module.exports = JSON2CSVTransform;

diff --git a/lib/json2csv.js b/lib/json2csv.js

index 195b31d9..ac2c1a37 100644

--- a/lib/json2csv.js

+++ b/lib/json2csv.js

@@ -1,296 +1,10 @@

'use strict';

-/**

- * Module dependencies.

- */

-const os = require('os');

-const lodashGet = require('lodash.get');

-const lodashSet = require('lodash.set');

-const lodashCloneDeep = require('lodash.clonedeep');

-const flatten = require('flat');

+const JSON2CSVParser = require('./JSON2CSVParser');

+const JSON2CSVTransform = require('./JSON2CSVTransform');

-/**

- * @name Json2CsvParams

- * @typedef {Object}

- * @property {Array} [fields] - see documentation for details

- * @property {String[]} [unwind] - similar to MongoDB's $unwind, Deconstructs an array field from the input JSON to output a row for each element

- * @property {Boolean} [flatten=false] - flattens nested JSON using flat (https://www.npmjs.com/package/flat)

- * @property {String} [defaultValue=""] - default value to use when missing data

- * @property {String} [quote='"'] - quote around cell values and column names

- * @property {String} [doubleQuote='""'] - the value to replace double quote in strings

- * @property {String} [delimiter=","] - delimiter of columns

- * @property {String} [eol=''] - overrides the default OS line ending (\n on Unix \r\n on Windows)

- * @property {Boolean} [excelStrings] - converts string data into normalized Excel style data

- * @property {Boolean} [header=true] - determines whether or not CSV file will contain a title column

- * @property {Boolean} [includeEmptyRows=false] - includes empty rows

- * @property {Boolean} [withBOM=false] - includes BOM character at the beginning of the csv

- */

+module.exports.Parser = JSON2CSVParser;

+module.exports.Transform = JSON2CSVTransform;

-/**

- * Main function that converts json to csv.

- *

- * @param {Array} data Array of JSON objects to be converted to CSV

- * @param {Json2CsvParams} params parameters containing data and

- * and options to configure how that data is processed.

- * @returns {String} The CSV formated data as a string

- */

-module.exports = function (data, params) {

- const processedParams = preprocessParams(params);

- const processedData = preprocessData(data, processedParams);

-

- if (!processedParams.fields) {

- const dataFields = Array.prototype.concat.apply([],

- processedData.map(item => Object.keys(item))

- );

- processedParams.fields = dataFields

- .filter((field, pos, arr) => arr.indexOf(field) == pos);

- }

-

- const header = processedParams.header ? processHeaders(processedParams) : '';

- const rows = processData(processedData, processedParams);

- const csv = (processedParams.withBOM ? '\ufeff' : '')

- + header

- + ((header && rows) ? processedParams.eol : '')

- + rows;

-

- return csv;

-};

-

-/**

- * Check passing params and set defaults.

- *

- * @param {Array|Object} data Array or object to be converted to CSV

- * @param {Json2CsvParams} params Function parameters containing fields,

- * delimiter, default value, mark quote and header

- */

-function preprocessParams(params) {

- const processedParams = params || {};

- processedParams.unwind = !Array.isArray(processedParams.unwind)

- ? (processedParams.unwind ? [processedParams.unwind] : [])

- : processedParams.unwind

- processedParams.delimiter = processedParams.delimiter || ',';

- processedParams.eol = processedParams.eol || os.EOL;

- processedParams.quote = typeof processedParams.quote === 'string'

- ? params.quote

- : '"';

- processedParams.doubleQuote = typeof processedParams.doubleQuote === 'string'

- ? processedParams.doubleQuote

- : Array(3).join(processedParams.quote);

- processedParams.defaultValue = processedParams.defaultValue;

- processedParams.header = processedParams.header !== false;

- processedParams.includeEmptyRows = processedParams.includeEmptyRows || false;

- processedParams.withBOM = processedParams.withBOM || false;

-

- return processedParams;

-}

-

-/**

- * Preprocess the data according to the give params (unwind, flatten, etc.)

- and calculate the fields and field names if they are not provided.

- *

- * @param {Array|Object} data Array or object to be converted to CSV

- * @param {Json2CsvParams} params Function parameters containing fields,

- * delimiter, default value, mark quote and header

- */

-function preprocessData(data, params) {

- // if data is an Object, not in array [{}], then just create 1 item array.

- // So from now all data in array of object format.

- let processedData = Array.isArray(data) ? data : [data];

-

- // Set params.fields default to first data element's keys

- if (processedData.length === 0 || typeof processedData[0] !== 'object') {

- throw new Error('params should include "fields" and/or non-empty "data" array of objects');

- }

-

- processedData = Array.prototype.concat.apply([],

- processedData.map(row => preprocessRow(row, params))

- );

-

- return processedData;

-}

-

-/**

- * Preprocess each object according to the give params (unwind, flatten, etc.).

- *

- * @param {Object} row JSON object to be converted in a CSV row

- * @param {Json2CsvParams} params Function parameters containing fields,

- * delimiter, default value, mark quote and header

- */

-function preprocessRow(row, params) {

- const processedRow = (params.unwind && params.unwind.length)

- ? unwindData(row, params.unwind)

- : [row];

- if (params.flatten) {

- return processedRow.map(flatten);

- }

-

- return processedRow;

-}

-

-/**

- * Create the title row with all the provided fields as column headings

- *

- * @param {Json2CsvParams} params Function parameters containing data, fields and delimiter

- * @returns {String} titles as a string

- */

-function processHeaders(params) {

- return params.fields

- .map((field) =>

- (typeof field === 'string')

- ? field

- : (field.label || field.value)

- ).map(header => JSON.stringify(header).replace(/"/g, params.quote))

- .join(params.delimiter);

-}

-

-/**

- * Create the content row by row below the headers

- *

- * @param {Array} data Array of JSON objects to be converted to CSV

- * @param {Object} params Function parameters

- * @returns {String} CSV string (body)

- */

-function processData(data, params) {

- return data

- .map(row => processRow(row, params))

- .filter(row => row) // Filter empty rows

- .join(params.eol);

-}

-

-/**

- * Create the content of a specific CSV row

- *

- * @param {Object} row JSON object to be converted in a CSV row

- * @param {Object} params Function parameters

- * @returns {String} CSV string (row)

- */

-function processRow(row, params) {

- if (!row || (Object.getOwnPropertyNames(row).length === 0 && !params.includeEmptyRows)) {

- return undefined;

- }

-

- return params.fields

- .map(fieldInfo => processField(row, fieldInfo, params))

- .join(params.delimiter);

-}

-

-/**

- * Create the content of a specfic CSV row cell

- *

- * @param {Object} row JSON object representing the CSV row that the cell belongs to

- * @param {Object} fieldInfo Details of the field to process to be a CSV cell

- * @param {Object} params Function parameters

- * @returns {String} CSV string (cell)

- */

-function processField(row, fieldInfo, params) {

- const isFieldInfoObject = typeof fieldInfo === 'object';

- const defaultValue = isFieldInfoObject && 'default' in fieldInfo

- ? fieldInfo.default

- : params.defaultValue;

- const stringify = isFieldInfoObject && fieldInfo.stringify !== undefined

- ? fieldInfo.stringify

- : true;

-

- let value;

- if (fieldInfo) {

- if (typeof fieldInfo === 'string') {

- value = lodashGet(row, fieldInfo, defaultValue);

- } else if (typeof fieldInfo === 'object') {

- if (typeof fieldInfo.value === 'string') {

- value = lodashGet(row, fieldInfo.value, defaultValue);

- } else if (typeof fieldInfo.value === 'function') {

- const field = {

- label: fieldInfo.label,

- default: fieldInfo.default

- };

- value = fieldInfo.value(row, field);

- }

- }

- }

-

- value = (value === null || value === undefined)

- ? defaultValue

- : value;

-

- if (value === null || value === undefined) {

- return undefined;

- }

-

- const isValueString = typeof value === 'string';

- if (isValueString) {

- value = value

- .replace(/\n/g, '\u2028')

- .replace(/\r/g, '\u2029');

- }

-

- //JSON.stringify('\\') results in a string with two backslash

- //characters in it. I.e. '\\\\'.

- let stringifiedValue = (stringify

- ? JSON.stringify(value)

- : value);

-

- if (typeof value === 'object' && !/^"(.*)"$/.test(stringifiedValue)) {

- // Stringify object that are not stringified to a

- // JSON string (like Date) to escape commas, quotes, etc.

- stringifiedValue = JSON.stringify(stringifiedValue);

- }

-

- if (stringifiedValue === undefined) {

- return undefined;

- }

-

- if (isValueString) {

- stringifiedValue = stringifiedValue

- .replace(/\u2028/g, '\n')

- .replace(/\u2029/g, '\r');

- }

-

- //Replace single quote with double quote. Single quote are preceeded by

- //a backslash, and it's not at the end of the stringifiedValue.

- stringifiedValue = stringifiedValue

- .replace(/^"(.*)"$/, params.quote + '$1' + params.quote)

- .replace(/(\\")(?=.)/g, params.doubleQuote)

- .replace(/\\\\/g, '\\');

-

- if (params.excelStrings && typeof value === 'string') {

- stringifiedValue = '"="' + stringifiedValue + '""';

- }

-

- return stringifiedValue;

-}

-

-/**

- * Performs the unwind recursively in specified sequence

- *

- * @param {Array} dataRow Original JSON object

- * @param {String[]} unwindPaths The params.unwind value. Unwind strings to be used to deconstruct array

- * @returns {Array} Array of objects containing all rows after unwind of chosen paths

- */

-function unwindData(dataRow, unwindPaths) {

- return Array.prototype.concat.apply([],

- unwindPaths.reduce((data, unwindPath) =>

- Array.prototype.concat.apply([],

- data.map((dataEl) => {

- const unwindArray = lodashGet(dataEl, unwindPath);

-

- if (!Array.isArray(unwindArray)) {

- return dataEl;

- }

-

- if (unwindArray.length) {

- return unwindArray.map((unwindEl) => {

- const dataCopy = lodashCloneDeep(dataEl);

- lodashSet(dataCopy, unwindPath, unwindEl);

- return dataCopy;

- });

- }

-

- const dataCopy = lodashCloneDeep(dataEl);

- lodashSet(dataCopy, unwindPath, undefined);

- return dataCopy;

- })

- ),

- [dataRow]

- )

- )

-}

+// Convenience method to keep the API similar to version 3.X

+module.exports.parse = (data, opts) => new JSON2CSVParser(opts).parse(data);

diff --git a/lib/parse-ldjson.js b/lib/parse-ldjson.js

index b9b1bc37..4ba7e253 100644

--- a/lib/parse-ldjson.js

+++ b/lib/parse-ldjson.js

@@ -1,3 +1,5 @@

+'use strict';

+

function parseLdJson(input) {

return input

.split('\n')

diff --git a/package-lock.json b/package-lock.json

index 42cde0fe..9d6f9def 100644

--- a/package-lock.json

+++ b/package-lock.json

@@ -4846,8 +4846,7 @@

"jsonparse": {

"version": "1.3.1",

"resolved": "https://registry.npmjs.org/jsonparse/-/jsonparse-1.3.1.tgz",

- "integrity": "sha1-P02uSpH6wxX3EGL4UhzCOfE2YoA=",

- "dev": true

+ "integrity": "sha1-P02uSpH6wxX3EGL4UhzCOfE2YoA="

},

"jsprim": {

"version": "1.4.1",

diff --git a/package.json b/package.json

index 8c31b5f6..5de3bd3f 100644

--- a/package.json

+++ b/package.json

@@ -41,6 +41,7 @@

"commander": "^2.8.1",

"debug": "^3.1.0",

"flat": "^4.0.0",

+ "jsonparse": "^1.3.1",

"lodash.clonedeep": "^4.5.0",

"lodash.flatten": "^4.4.0",

"lodash.get": "^4.4.0",

diff --git a/test/fixtures/csv/deepJSON.csv b/test/fixtures/csv/deepJSON.csv

new file mode 100644

index 00000000..6c51e864

--- /dev/null

+++ b/test/fixtures/csv/deepJSON.csv

@@ -0,0 +1,2 @@

+"field1"

+"{""embeddedField1"":""embeddedValue1"",""embeddedField2"":""embeddedValue2""}"

\ No newline at end of file

diff --git a/test/fixtures/csv/escapeEOL.csv b/test/fixtures/csv/escapeEOL.csv

new file mode 100644

index 00000000..1c418ac4

--- /dev/null

+++ b/test/fixtures/csv/escapeEOL.csv

@@ -0,0 +1,5 @@

+"a string"

+"with a \ndescription\\n and\na new line"

+"with a \r\ndescription and\r\nanother new line"

+

+//TODO

\ No newline at end of file

diff --git a/test/fixtures/csv/flattenedDeepJSON.csv b/test/fixtures/csv/flattenedDeepJSON.csv

new file mode 100644

index 00000000..45649f0f

--- /dev/null

+++ b/test/fixtures/csv/flattenedDeepJSON.csv

@@ -0,0 +1,2 @@

+"field1.embeddedField1","field1.embeddedField2"

+"embeddedValue1","embeddedValue2"

\ No newline at end of file

diff --git a/test/fixtures/csv/functionField.csv b/test/fixtures/csv/functionField.csv

new file mode 100644

index 00000000..e99908e6

--- /dev/null

+++ b/test/fixtures/csv/functionField.csv

@@ -0,0 +1,2 @@

+"a","funct"

+1,

\ No newline at end of file

diff --git a/test/fixtures/csv/ldjson.csv b/test/fixtures/csv/ldjson.csv

new file mode 100644

index 00000000..5356fb4b

--- /dev/null

+++ b/test/fixtures/csv/ldjson.csv

@@ -0,0 +1,5 @@

+"carModel","price","color","transmission"

+"Audi",0,"blue",

+"BMW",15000,"red","manual"

+"Mercedes",20000,"yellow",

+"Porsche",30000,"green",

\ No newline at end of file

diff --git a/test/fixtures/csv/withoutHeader.csv b/test/fixtures/csv/withoutHeader.csv

new file mode 100644

index 00000000..a57a6e66

--- /dev/null

+++ b/test/fixtures/csv/withoutHeader.csv

@@ -0,0 +1,4 @@

+"Audi",0,"blue",

+"BMW",15000,"red","manual"

+"Mercedes",20000,"yellow",

+"Porsche",30000,"green",

\ No newline at end of file

diff --git a/test/fixtures/csv/withoutTitle.csv b/test/fixtures/csv/withoutTitle.csv

deleted file mode 100644

index 1e3d575f..00000000

--- a/test/fixtures/csv/withoutTitle.csv

+++ /dev/null

@@ -1,4 +0,0 @@

-"Audi",0,"blue"

-"BMW",15000,"red"

-"Mercedes",20000,"yellow"

-"Porsche",30000,"green"

\ No newline at end of file

diff --git a/test/fixtures/json/date.js b/test/fixtures/json/date.js

new file mode 100644

index 00000000..0d6a50dd

--- /dev/null

+++ b/test/fixtures/json/date.js

@@ -0,0 +1,3 @@

+module.exports = {

+ "date": new Date("2017-01-01T00:00:00.000Z")

+}

\ No newline at end of file

diff --git a/test/fixtures/json/deepJSON.json b/test/fixtures/json/deepJSON.json

new file mode 100644

index 00000000..ca2f6282

--- /dev/null

+++ b/test/fixtures/json/deepJSON.json

@@ -0,0 +1,6 @@

+{

+ "field1": {

+ "embeddedField1": "embeddedValue1",

+ "embeddedField2": "embeddedValue2"

+ }

+}

\ No newline at end of file

diff --git a/test/fixtures/json/defaultInvalid.json b/test/fixtures/json/defaultInvalid.json

new file mode 100644

index 00000000..c30f6c47

--- /dev/null

+++ b/test/fixtures/json/defaultInvalid.json

@@ -0,0 +1,6 @@

+[

+ { "carModel": "Audi", "price": 0, "color": "blue" },

+ { "carModel": "BMW", "price": 15000, "color": "red", "transmission": "manual" },

+ { "carModel": "Mercedes", "price": 20000, "color": "yellow",

+ { "carModel": "Porsche", "price": 30000, "color": "green" }

+]

diff --git a/test/fixtures/json/delimiter.json b/test/fixtures/json/delimiter.json

new file mode 100644

index 00000000..38f55648

--- /dev/null

+++ b/test/fixtures/json/delimiter.json

@@ -0,0 +1,4 @@

+[

+ { "firstname": "foo", "lastname": "bar", "email": "foo.bar@json2csv.com" },

+ { "firstname": "bar", "lastname": "foo", "email": "bar.foo@json2csv.com" }

+]

\ No newline at end of file

diff --git a/test/fixtures/json/escapeEOL.json b/test/fixtures/json/escapeEOL.json

new file mode 100644

index 00000000..94c19c7f

--- /dev/null

+++ b/test/fixtures/json/escapeEOL.json

@@ -0,0 +1,4 @@

+[

+ {"a string": "with a \u2028description\\n and\na new line"},

+ {"a string": "with a \u2029\u2028description and\r\nanother new line"}

+]

diff --git a/test/fixtures/json/fancyfields.json b/test/fixtures/json/fancyfields.json

new file mode 100644

index 00000000..5bc8d2c4

--- /dev/null

+++ b/test/fixtures/json/fancyfields.json

@@ -0,0 +1,18 @@

+[{

+ "path1": "hello ",

+ "path2": "world!",

+ "bird": {

+ "nest1": "chirp",

+ "nest2": "cheep"

+ },

+ "fake": {

+ "path": "overrides default"

+ }

+}, {

+ "path1": "good ",

+ "path2": "bye!",

+ "bird": {

+ "nest1": "meep",

+ "nest2": "meep"

+ }

+}]

\ No newline at end of file

diff --git a/test/fixtures/json/functionField.js b/test/fixtures/json/functionField.js

new file mode 100644

index 00000000..b268e6d9

--- /dev/null

+++ b/test/fixtures/json/functionField.js

@@ -0,0 +1,4 @@

+module.exports = {

+ a: 1,

+ funct: (a) => a + 1

+};

\ No newline at end of file

diff --git a/test/fixtures/json/functionNoStringify.json b/test/fixtures/json/functionNoStringify.json

new file mode 100644

index 00000000..b820ee5e

--- /dev/null

+++ b/test/fixtures/json/functionNoStringify.json

@@ -0,0 +1,5 @@

+[{

+ "value1": "\"abc\""

+}, {

+ "value1": "1234"

+}]

\ No newline at end of file

diff --git a/test/fixtures/json/functionStringifyByDefault.json b/test/fixtures/json/functionStringifyByDefault.json

new file mode 100644

index 00000000..b8bd9f1c

--- /dev/null

+++ b/test/fixtures/json/functionStringifyByDefault.json

@@ -0,0 +1,4 @@

+[

+ { "value1": "abc" },

+ { "value1": "1234" }

+]

\ No newline at end of file

diff --git a/test/fixtures/json/ldjson.json b/test/fixtures/json/ldjson.json

new file mode 100644

index 00000000..5c4c11a0

--- /dev/null

+++ b/test/fixtures/json/ldjson.json

@@ -0,0 +1,4 @@

+{ "carModel": "Audi", "price": 0, "color": "blue" }

+{ "carModel": "BMW", "price": 15000, "color": "red", "transmission": "manual" }

+{ "carModel": "Mercedes", "price": 20000, "color": "yellow" }

+{ "carModel": "Porsche", "price": 30000, "color": "green" }

\ No newline at end of file

diff --git a/test/fixtures/json/ldjsonInvalid.json b/test/fixtures/json/ldjsonInvalid.json

new file mode 100644

index 00000000..ae563c9d

--- /dev/null

+++ b/test/fixtures/json/ldjsonInvalid.json

@@ -0,0 +1,4 @@

+{ "carModel": "Audi", "price": 0, "color": "blue" }

+{ "carModel": "BMW", "price": 15000, "color": "red", "transmission": "manual" }

+{ "carModel": "Mercedes", "price": 20000, "color": "yellow"

+{ "carModel": "Porsche", "price": 30000, "color": "green" }

\ No newline at end of file

diff --git a/test/helpers/load-fixtures.js b/test/helpers/load-fixtures.js

index 274faaf2..acc0ed71 100644

--- a/test/helpers/load-fixtures.js

+++ b/test/helpers/load-fixtures.js

@@ -20,18 +20,52 @@ function getFilesInDirectory(dir) {

function parseToJson(fixtures) {

return fixtures.reduce((data, fixture) => {

- data[fixture.name] = fixture.csv;

+ if (!fixture) return data;

+ data[fixture.name] = fixture.content;

return data;

} ,{})

}

module.exports.loadJSON = function () {

+ return getFilesInDirectory(jsonDirectory)

+ .then(filenames => Promise.all(filenames.map((filename) => {

+ if (filename.startsWith('.')) return;

+ const filePath = path.join(jsonDirectory, filename);

+ try {

+ return Promise.resolve({

+ name: path.parse(filename).name,

+ content: require(filePath)

+ });

+ } catch (e) {

+ // Do nothing.

+ }

+

+ return new Promise((resolve, reject) => {

+ const filePath = path.join(jsonDirectory, filename);

+ fs.readFile(filePath, 'utf-8', (err, data) => {

+ if (err) {

+ reject(err);

+ return;

+ }

+

+ resolve({

+ name: path.parse(filename).name,

+ content: data.toString()

+ });

+ });

+ });

+ })))

+ .then(parseToJson);

+};

+

+module.exports.loadJSONStreams = function () {

return getFilesInDirectory(jsonDirectory)

.then(filenames => filenames.map((filename) => {

+ if (filename.startsWith('.')) return;

const filePath = path.join(jsonDirectory, filename);

return {

name: path.parse(filename).name,

- csv: require(filePath)

+ content: () => fs.createReadStream(filePath, { highWaterMark: 175 })

};

}))

.then(parseToJson);

@@ -40,6 +74,7 @@ module.exports.loadJSON = function () {

module.exports.loadCSV = function () {

return getFilesInDirectory(csvDirectory)

.then(filenames => Promise.all(filenames.map((filename) => {

+ if (filename.startsWith('.')) return;

return new Promise((resolve, reject) => {

const filePath = path.join(csvDirectory, filename);

fs.readFile(filePath, 'utf-8', (err, data) => {

@@ -50,7 +85,7 @@ module.exports.loadCSV = function () {

resolve({

name: path.parse(filename).name,

- csv: data.toString()

+ content: data.toString()

});

});

});

diff --git a/test/index.js b/test/index.js

index 49d6467b..ae578459 100644

--- a/test/index.js

+++ b/test/index.js

@@ -1,195 +1,152 @@

'use strict';

+const Readable = require('stream').Readable

const test = require('tape');

const json2csv = require('../lib/json2csv');

+const Json2csvParser = json2csv.Parser;

+const Json2csvTransform = json2csv.Transform;

const parseLdJson = require('../lib/parse-ldjson');

const loadFixtures = require('./helpers/load-fixtures');

-Promise.all([loadFixtures.loadJSON(), loadFixtures.loadCSV()])

+Promise.all([

+ loadFixtures.loadJSON(),

+ loadFixtures.loadJSONStreams(),

+ loadFixtures.loadCSV()])

.then((fixtures) => {

const jsonFixtures = fixtures[0];

- const csvFixtures = fixtures[1];

+ const jsonFixturesStreams = fixtures[1];

+ const csvFixtures = fixtures[2];

- test('should output a string', (t) => {

- const csv = json2csv(jsonFixtures.default);

+ test('should parse json to csv and infer the fields automatically using parse method', (t) => {

+ const csv = json2csv.parse(jsonFixtures.default);

t.ok(typeof csv === 'string');

t.equal(csv, csvFixtures.default);

t.end();

});

- test('should remove last delimiter |@|', (t) => {

- let csv = json2csv([

- { firstname: 'foo', lastname: 'bar', email: 'foo.bar@json2csv.com' },

- { firstname: 'bar', lastname: 'foo', email: 'bar.foo@json2csv.com' }

- ], {

- delimiter: '|@|'

- });

-

- t.equal(csv, csvFixtures.delimiter);

- t.end();

- });

-

- test('should parse json to csv', (t) => {

- const csv = json2csv(jsonFixtures.default, {

- fields: ['carModel', 'price', 'color', 'transmission']

- });

-

- t.equal(csv, csvFixtures.default);

- t.end();

- });

-

- test('should parse json to csv without fields', (t) => {

- const csv = json2csv(jsonFixtures.default);

-

- t.equal(csv, csvFixtures.default);

- t.end();

- });

-

- test('should parse json to csv without column title', (t) => {

- const csv = json2csv(jsonFixtures.default, {

- fields: ['carModel', 'price', 'color'],

- header: false

- });

-

- t.equal(csv, csvFixtures.withoutTitle);

- t.end();

- });

-

- test('should parse json to csv even if json include functions', (t) => {

- const csv = json2csv({

- a: 1,

- funct: (a) => a + 1

- });

+ test('should error if input data is not an object', (t) => {

+ const input = 'not an object';

+ try {

+ const parser = new Json2csvParser();

+ parser.parse(input);

- t.equal(csv, '"a","funct"\n1,');

- t.end();

+ t.notOk(true);

+ } catch(error) {

+ t.equal(error.message, 'params should include "fields" and/or non-empty "data" array of objects');

+ t.end();

+ }

});

- test('should parse data:{} to csv with only column title', (t) => {

- const csv = json2csv({}, {

+ test('should handle empty object', (t) => {

+ const input = {};

+ const opts = {

fields: ['carModel', 'price', 'color']

- });

+ };

+

+ const parser = new Json2csvParser(opts);

+ const csv = parser.parse(input);

t.equal(csv, '"carModel","price","color"');

t.end();

});

- test('should parse data:[null] to csv with only column title', (t) => {

- const csv = json2csv([null], {

+ test('should handle array with nulls', (t) => {

+ const input = [null];

+ const opts = {

fields: ['carModel', 'price', 'color']

- });

+ };

+

+ const parser = new Json2csvParser(opts);

+ const csv = parser.parse(input);

t.equal(csv, '"carModel","price","color"');

t.end();

});

- test('should output only selected fields', (t) => {

- const csv = json2csv(jsonFixtures.default, {

- fields: ['carModel', 'price']

- });

+ test('should handle date in input', (t) => {

+ const parser = new Json2csvParser();

+ const csv = parser.parse(jsonFixtures.date);

- t.equal(csv, csvFixtures.selected);

+ t.equal(csv, csvFixtures.date);

t.end();

});

- test('should output not exist field with empty value', (t) => {

- const csv = json2csv(jsonFixtures.default, {

- fields: ['first not exist field', 'carModel', 'price', 'not exist field', 'color']

- });

+ test('should handle functions in input', (t) => {

+ const parser = new Json2csvParser();

+ const csv = parser.parse(jsonFixtures.functionField);

- t.equal(csv, csvFixtures.withNotExistField);

+ t.equal(csv, csvFixtures.functionField);

t.end();

});

- test('should output reversed order', (t) => {

- const csv = json2csv(jsonFixtures.default, {

- fields: ['price', 'carModel']

- });

+ test('should handle deep JSON objects', (t) => {

+ const parser = new Json2csvParser();

+ const csv = parser.parse(jsonFixtures.deepJSON);

- t.equal(csv, csvFixtures.reversed);

+ t.equal(csv, csvFixtures.deepJSON);

t.end();

});

- test('should escape quotes with double quotes', (t) => {

- const csv = json2csv(jsonFixtures.quotes, {

- fields: ['a string']

- });

+ test('should parse json to csv and infer the fields automatically', (t) => {

+ const parser = new Json2csvParser();

+ const csv = parser.parse(jsonFixtures.default);

- t.equal(csv, csvFixtures.quotes);

+ t.ok(typeof csv === 'string');

+ t.equal(csv, csvFixtures.default);

t.end();

});

- test('should escape quotes with value in doubleQuote', (t) => {

- const csv = json2csv(jsonFixtures.doubleQuotes, {

- fields: ['a string'],

- doubleQuote: '*'

- });

-

- t.equal(csv, csvFixtures.doubleQuotes);

- t.end();

- });

+ test('should parse json to csv using custom fields', (t) => {

+ const opts = {

+ fields: ['carModel', 'price', 'color', 'transmission']

+ };

- test('should not escape quotes with double quotes, when there is a backslah in the end', (t) => {

- const csv = json2csv(jsonFixtures.backslashAtEnd, {

- fields: ['a string']

- });

+ const parser = new Json2csvParser(opts);

+ const csv = parser.parse(jsonFixtures.default);

- t.equal(csv, csvFixtures.backslashAtEnd);

+ t.equal(csv, csvFixtures.default);

t.end();

});

- test('should not escape quotes with double quotes, when there is a backslah in the end, and its not the last column', (t) => {

- const csv = json2csv(jsonFixtures.backslashAtEndInMiddleColumn, {

- fields: ['uuid','title','id']

- });

-

- t.equal(csv, csvFixtures.backslashAtEndInMiddleColumn);

- t.end();

- });

+ test('should output only selected fields', (t) => {

+ const opts = {

+ fields: ['carModel', 'price']

+ };

- test('should use a custom delimiter when \'quote\' property is present', (t) => {

- const csv = json2csv(jsonFixtures.default, {

- fields: ['carModel', 'price'],

- quote: '\''

- });

+ const parser = new Json2csvParser(opts);

+ const csv = parser.parse(jsonFixtures.default);