diff --git a/Introduction/SCR-20230813-nhkq.png b/Introduction/SCR-20230813-nhkq.png

new file mode 100644

index 0000000..51ca427

Binary files /dev/null and b/Introduction/SCR-20230813-nhkq.png differ

diff --git a/Introduction/SCR-20230813-nkzc.png b/Introduction/SCR-20230813-nkzc.png

new file mode 100644

index 0000000..1d60c1c

Binary files /dev/null and b/Introduction/SCR-20230813-nkzc.png differ

diff --git a/Introduction/SCR-20230813-nmcj.png b/Introduction/SCR-20230813-nmcj.png

new file mode 100644

index 0000000..5a4ba1c

Binary files /dev/null and b/Introduction/SCR-20230813-nmcj.png differ

diff --git a/Introduction/SCR-20230813-nngy.png b/Introduction/SCR-20230813-nngy.png

new file mode 100644

index 0000000..e887758

Binary files /dev/null and b/Introduction/SCR-20230813-nngy.png differ

diff --git "a/Introduction/\346\234\211\351\201\223\347\277\273\350\257\221.md" "b/Introduction/\346\234\211\351\201\223\347\277\273\350\257\221.md"

new file mode 100644

index 0000000..05baf4b

--- /dev/null

+++ "b/Introduction/\346\234\211\351\201\223\347\277\273\350\257\221.md"

@@ -0,0 +1,77 @@

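+# Youdao Translate (有道翻译)

+

+> Note

+>

+> The information here may become outdated and is for reference only; the provider's latest official documentation takes precedence.

+>

+> Official documentation: [https://ai.youdao.com/doc.s#guide](https://ai.youdao.com/doc.s#guide)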

+

+## 0. Pricing

+

+[View details](https://ai.youdao.com/DOCSIRMA/html/自然语言翻译/产品定价/文本翻译服务/文本翻译服务-产品定价.html)

+

+| Service | Free quota | Beyond the free quota | Concurrent requests |

+| :--------------- | :------- | :-------------- | :--------- |

+| Chinese to/from language group 1 (语种一) | None | 48 CNY per 1 million characters | - |

+| Chinese to/from language group 2 (语种二) | None | 100 CNY per 1 million characters | - |

+| Between other languages | None | 100 CNY per 1 million characters | - |

+

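+> Tip

+>

+> Newly registered users can receive 100 CNY in trial credit

+>

+> New users get 10 CNY of trial credit on registration, another 40 CNY after passing real-name verification, and a further 50 CNY after adding the customer-service WeChat account.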

+

+## 1. Register and log in

+

+[Click here to open the website](https://ai.youdao.com/)

+

+

+

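+> After registering, follow the on-page instructions to add the Youdao customer-service WeChat account and send it your account information to receive another 50 CNY in trial credit.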

+

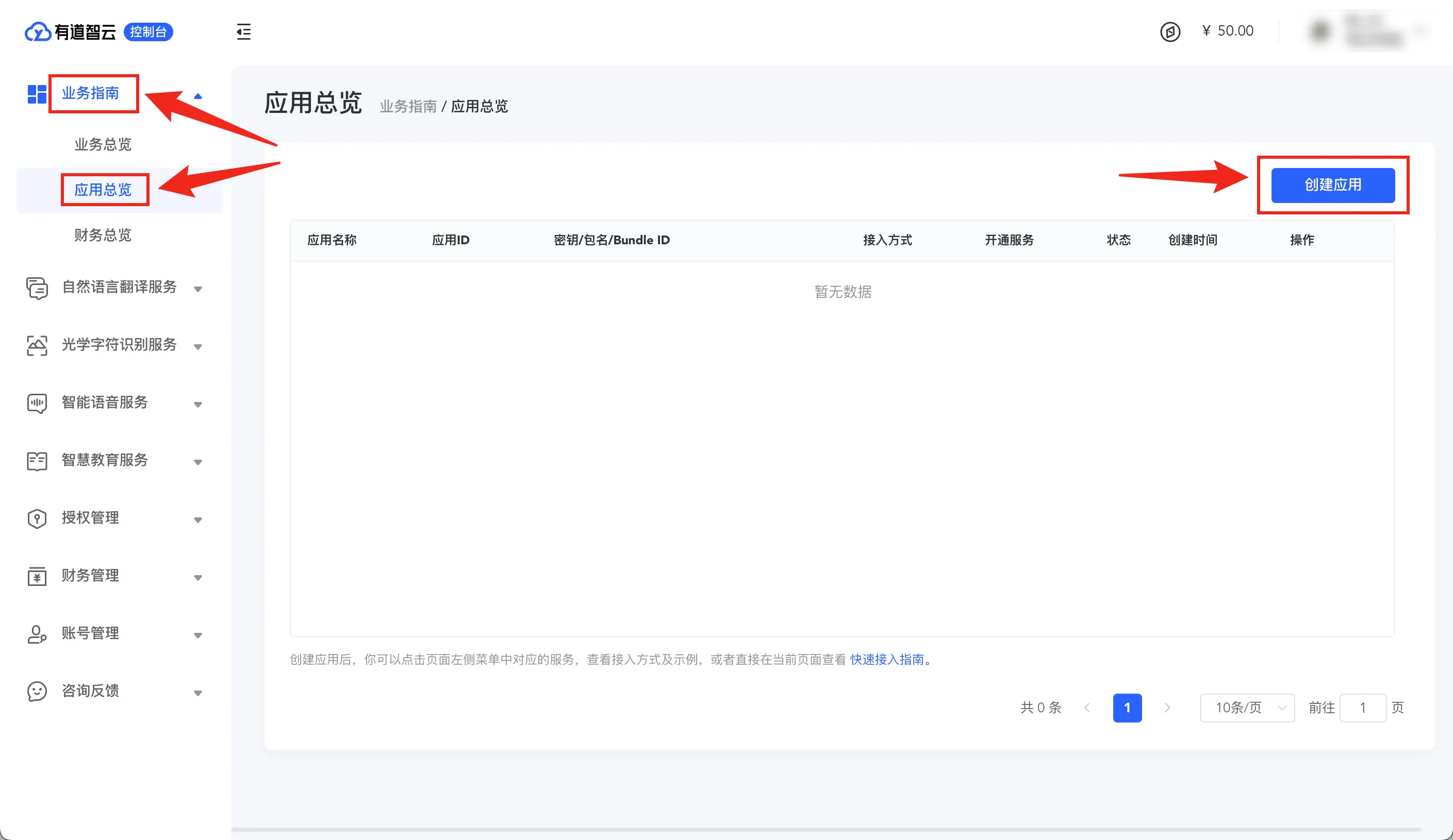

+## 2. Create an application

+

+After logging in, go to [Business Guide - Application Overview (「业务指南-应用总览」)](https://ai.youdao.com/console/#/app-overview) and click Create Application (「创建应用」).

+

+

+

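+The application name can be anything. Under services, select Text Translation (「文本翻译」) and, if you need it, Speech Synthesis (「语音合成」). Set the access method to 「API」, choose any application category, leave the remaining fields empty, and click Confirm (「确定」).

+

+When translating a single word, Youdao can additionally provide American and British pronunciations. To use this pronunciation feature, also select Speech Synthesis (「语音合成」) here. Speech synthesis is a paid service billed separately by pronunciation usage; see [Speech Synthesis Service pricing (「智能语音合成服务-产品定价」)](https://ai.youdao.com/DOCSIRMA/html/语音合成TTS/产品定价/语音合成服务/语音合成服务-产品定价.html).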

+

+

+

+> Note

+>

+> Do not fill in the Server IP (「服务器 IP」) setting; doing so will very likely prevent you from accessing the service.

+

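+## 3. Get the key

+

+> Warning

+>

+> **Unless a previous step is explicitly marked as skippable, it must not be skipped; otherwise the key you obtain will not work!**

+

+Also, keep your key safe; a leaked key may cause you losses!

+

+Go to [Business Guide - Business Overview (「业务指南-业务总览」)](https://ai.youdao.com/console/#/), find the application with Text Translation (「文本翻译」) enabled under My Applications (「我的应用」), and use the copy buttons next to Application ID (「应用 ID」) and Application Key (「应用密钥」) to copy each value.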

+

+

+

+

+

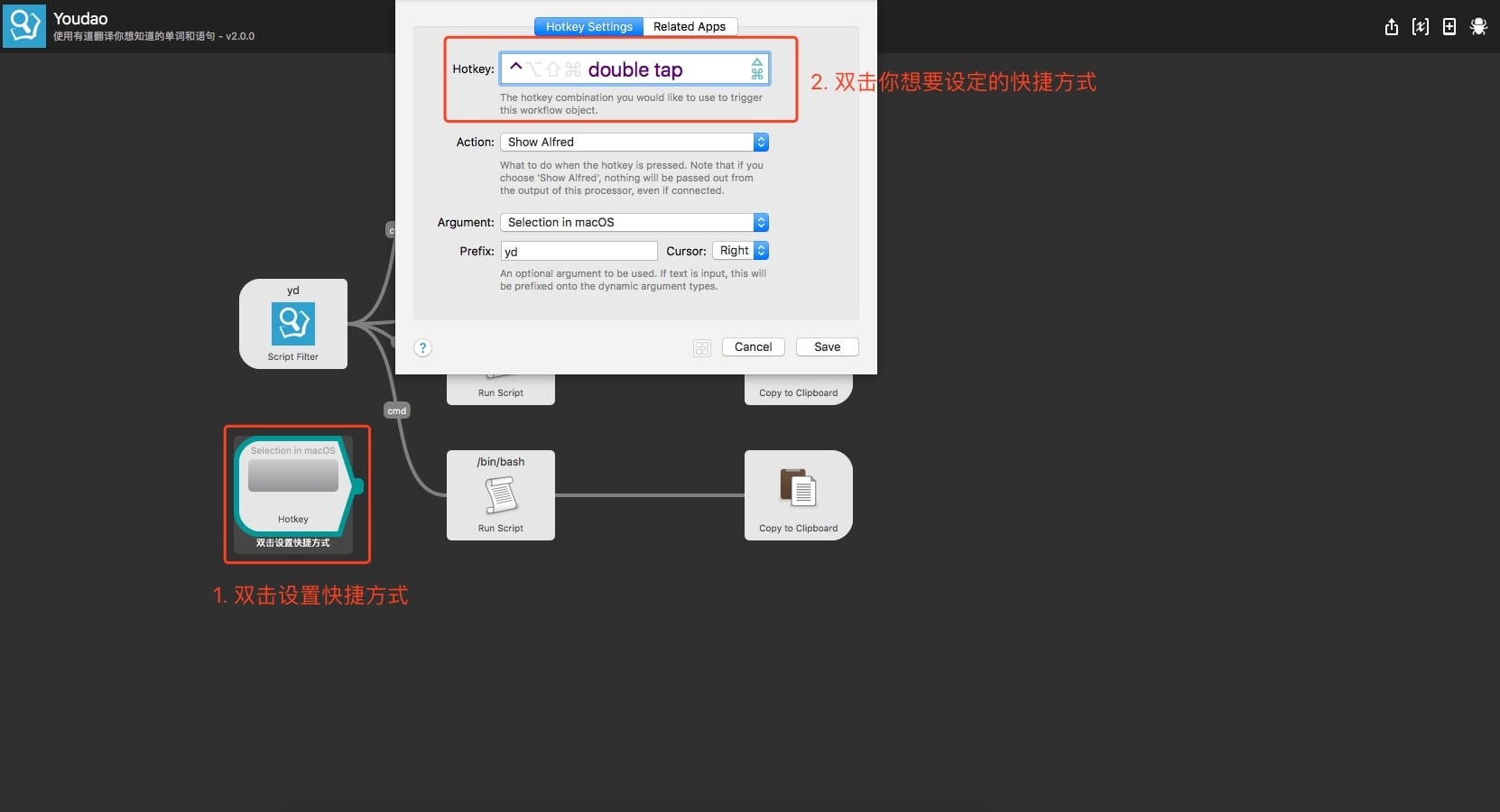

+## 4. Enter the key

+

+In Alfred's Workflows, select 「Youdao」 and click `Configure Workflow`.

+

+

+

+Then enter the application ID and application key you just obtained in the corresponding fields.

+

+

+

+

+

+## 5. Errors

+

+If you run into problems with the new version of Youdao Zhiyun (有道智云), see the [list of error codes](https://ai.youdao.com/DOCSIRMA/html/trans/api/wbfy/index.html#section-14).
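Reviewer note (not part of the patch): a minimal sketch of how the two values entered in step 4 might be consumed, since the guide stops at filling them in. It assumes that Alfred exposes the `zhiyun_id` and `zhiyun_key` configuration variables to scripts as environment variables, and that requests are signed with the `signType=v3` scheme as recalled from the official Youdao documentation (SHA-256 over application ID + truncated query + salt + timestamp + application key). The function names are hypothetical and are not taken from this workflow's source; check the signing rule against the official documentation before relying on it.

```python
import hashlib
import os
import time
import uuid


def credentials_from_env(env=None):
    """Read the two workflow configuration values (names from info.plist)."""
    env = os.environ if env is None else env
    app_id = env.get("zhiyun_id", "").strip()
    app_key = env.get("zhiyun_key", "").strip()
    if not app_id or not app_key:
        raise ValueError("zhiyun_id / zhiyun_key are empty; fill them in via Configure Workflow")
    return app_id, app_key


def truncate_query(q):
    """Assumed v3 rule: queries longer than 20 characters are shortened
    to first 10 characters + total length + last 10 characters."""
    if len(q) <= 20:
        return q
    return q[:10] + str(len(q)) + q[-10:]


def build_params(q, app_id, app_key, salt=None, curtime=None):
    """Build the signed form fields for one request (no network access here)."""
    salt = salt or uuid.uuid4().hex
    curtime = curtime or str(int(time.time()))
    raw = app_id + truncate_query(q) + salt + curtime + app_key
    sign = hashlib.sha256(raw.encode("utf-8")).hexdigest()
    return {
        "q": q,
        "from": "auto",
        "to": "auto",
        "appKey": app_id,  # the API's "appKey" field carries the application ID
        "salt": salt,
        "curtime": curtime,
        "signType": "v3",
        "sign": sign,
    }
```

The application key is only ever fed into the hash and never sent as a field, which is why leaking it (see the warning in step 3) is the thing to avoid. A sign-related error code from the service usually points at a wrong ID/key pair or at a mismatch in this concatenation order.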

diff --git a/README.md b/README.md

index e3fc832..e468b74 100755

--- a/README.md

+++ b/README.md

@@ -1,6 +1,6 @@

# whyliam.workflows.youdao

-## 有道翻译 workflow v3.0.0

+## 有道翻译 workflow v3.1.0

默认快捷键 `yd`,查看翻译结果。

@@ -24,31 +24,13 @@

### 下载

-#### Python 3 版本

+[Python 3 版本](https://github.com/whyliam/whyliam.workflows.youdao/releases/download/3.1.0/whyliam.workflows.youdao.alfredworkflow) - 感谢 [Pid](https://github.com/zhugexiaobo)

-[Python 3 版本](https://github.com/whyliam/whyliam.workflows.youdao/releases/download/3.0.0/whyliam.workflows.youdao.alfredworkflow) - 感谢 [Pid](https://github.com/zhugexiaobo)

+### 使用说明

-#### Python 2 版本

-`macOS 12.3` 以下的用户请使用以下版本

+[使用说明](Introduction/有道翻译.md)

-[Python 2 版本](https://github.com/whyliam/whyliam.workflows.youdao/releases/download/2.2.5/whyliam.workflows.youdao.alfredworkflow)

-### 安装

-

-1\. [下载](https://github.com/whyliam/whyliam.workflows.youdao/releases)最新版本双击安装

-

-2\. [注册](http://ai.youdao.com/appmgr.s)有道智云应用

-

-3\. 在 Alfred 的设置中填入对应的`应用ID`和`应用密钥`

-

-

-

-4\. 在 Alfred 的设置中设置快捷方式键

-

-

-### 问题

-

-如果新版本有道智云遇到问题,请参见 [错误代码列表](http://ai.youdao.com/docs/doc-trans-api.s#p08)。

### 演示

diff --git a/info.plist b/info.plist

index a0a6bcd..956a718 100644

--- a/info.plist

+++ b/info.plist

@@ -152,12 +152,14 @@

browser

+ skipqueryencode

+

+ skipvarencode

+

spaces

url

- http://dict.youdao.com/search?q={query}

- utf8

-

+ https://dict.youdao.com/result?word={query}&lang=en&keyfrom=whyliam.workflows.youdao

type

alfred.workflow.action.openurl

@@ -399,7 +401,7 @@

readme

- 有道翻译 Workflow v3.0.0

+ 有道翻译 Workflow v3.1.0

默认快捷键 yd, 查看翻译结果。

@@ -426,113 +428,144 @@

0907BEF4-816F-48FF-B157-03F5C2AACEAB

xpos

- 830

+ 830

ypos

- 420

+ 420

27E60581-8105-41DD-8E29-4FE811179098

xpos

- 500

+ 500

ypos

- 290

+ 290

4473C9D3-7A15-4D31-84F6-A096A7CFF46C

xpos

- 830

+ 830

ypos

- 150

+ 150

5751065C-52C1-4D19-8F7D-03B730BFE440

note

双击设置快捷方式

xpos

- 270

+ 270

ypos

- 440

+ 440

6A03FDC5-89AC-4F9D-9456-3762ACA751FE

xpos

- 830

+ 830

ypos

- 40

+ 40

7C1ABC41-3B36-401F-96C7-30BCB39181FF

xpos

- 500

+ 500

ypos

- 410

+ 410

7CAE8B02-CE31-4941-AD5F-C36CC84D164B

xpos

- 500

+ 500

ypos

- 560

+ 560

91C343E7-50D8-4B0D-9034-1C16C20DA8D4

xpos

- 270

+ 270

ypos

- 250

+ 250

DA8E4597-4B45-4C4C-A8D0-755BD785BD7A

xpos

- 830

+ 830

ypos

- 560

+ 560

DBA62127-3B78-4B80-B82B-1C6AEC393003

xpos

- 830

+ 830

ypos

- 290

+ 290

F99C4C55-10F5-4D62-A77D-F27058629B21

xpos

- 500

+ 500

ypos

- 40

+ 40

+ userconfigurationconfig

+

+

+ config

+

+ default

+

+ placeholder

+ 请输入应用ID

+ required

+

+ trim

+

+

+ description

+ 应用ID

+ label

+ 应用ID

+ type

+ textfield

+ variable

+ zhiyun_id

+

+

+ config

+

+ default

+

+ placeholder

+ 请输入应用密钥

+ required

+

+ trim

+

+

+ description

+ 应用密钥

+ label

+ 应用密钥

+ type

+ textfield

+ variable

+ zhiyun_key

+

+

variables

filepath

~/Documents/Alfred-youdao-wordbook.xml

password

- sentry

- True

username

- youdao_key

-

- youdao_keyfrom

-

- zhiyun_id

-

- zhiyun_key

-

variablesdontexport

- youdao_key

username

password

- youdao_keyfrom

- zhiyun_id

- zhiyun_key

version

- 3.0.0

+ 3.1.0

webaddress

https://github.com/whyliam/whyliam.workflows.youdao

diff --git a/sentry_sdk/__init__.py b/sentry_sdk/__init__.py

deleted file mode 100644

index ab5123e..0000000

--- a/sentry_sdk/__init__.py

+++ /dev/null

@@ -1,40 +0,0 @@

-from sentry_sdk.hub import Hub, init

-from sentry_sdk.scope import Scope

-from sentry_sdk.transport import Transport, HttpTransport

-from sentry_sdk.client import Client

-

-from sentry_sdk.api import * # noqa

-

-from sentry_sdk.consts import VERSION # noqa

-

-__all__ = [ # noqa

- "Hub",

- "Scope",

- "Client",

- "Transport",

- "HttpTransport",

- "init",

- "integrations",

- # From sentry_sdk.api

- "capture_event",

- "capture_message",

- "capture_exception",

- "add_breadcrumb",

- "configure_scope",

- "push_scope",

- "flush",

- "last_event_id",

- "start_span",

- "start_transaction",

- "set_tag",

- "set_context",

- "set_extra",

- "set_user",

- "set_level",

-]

-

-# Initialize the debug support after everything is loaded

-from sentry_sdk.debug import init_debug_support

-

-init_debug_support()

-del init_debug_support

diff --git a/sentry_sdk/_compat.py b/sentry_sdk/_compat.py

deleted file mode 100644

index 49a5539..0000000

--- a/sentry_sdk/_compat.py

+++ /dev/null

@@ -1,89 +0,0 @@

-import sys

-

-from sentry_sdk._types import MYPY

-

-if MYPY:

- from typing import Optional

- from typing import Tuple

- from typing import Any

- from typing import Type

- from typing import TypeVar

-

- T = TypeVar("T")

-

-

-PY2 = sys.version_info[0] == 2

-

-if PY2:

- import urlparse # noqa

-

- text_type = unicode # noqa

-

- string_types = (str, text_type)

- number_types = (int, long, float) # noqa

- int_types = (int, long) # noqa

- iteritems = lambda x: x.iteritems() # noqa: B301

-

- def implements_str(cls):

- # type: (T) -> T

- cls.__unicode__ = cls.__str__

- cls.__str__ = lambda x: unicode(x).encode("utf-8") # noqa

- return cls

-

- exec("def reraise(tp, value, tb=None):\n raise tp, value, tb")

-

-

-else:

- import urllib.parse as urlparse # noqa

-

- text_type = str

- string_types = (text_type,) # type: Tuple[type]

- number_types = (int, float) # type: Tuple[type, type]

- int_types = (int,) # noqa

- iteritems = lambda x: x.items()

-

- def implements_str(x):

- # type: (T) -> T

- return x

-

- def reraise(tp, value, tb=None):

- # type: (Optional[Type[BaseException]], Optional[BaseException], Optional[Any]) -> None

- assert value is not None

- if value.__traceback__ is not tb:

- raise value.with_traceback(tb)

- raise value

-

-

-def with_metaclass(meta, *bases):

- # type: (Any, *Any) -> Any

- class MetaClass(type):

- def __new__(metacls, name, this_bases, d):

- # type: (Any, Any, Any, Any) -> Any

- return meta(name, bases, d)

-

- return type.__new__(MetaClass, "temporary_class", (), {})

-

-

-def check_thread_support():

- # type: () -> None

- try:

- from uwsgi import opt # type: ignore

- except ImportError:

- return

-

- # When `threads` is passed in as a uwsgi option,

- # `enable-threads` is implied on.

- if "threads" in opt:

- return

-

- if str(opt.get("enable-threads", "0")).lower() in ("false", "off", "no", "0"):

- from warnings import warn

-

- warn(

- Warning(

- "We detected the use of uwsgi with disabled threads. "

- "This will cause issues with the transport you are "

- "trying to use. Please enable threading for uwsgi. "

- '(Add the "enable-threads" flag).'

- )

- )

diff --git a/sentry_sdk/_functools.py b/sentry_sdk/_functools.py

deleted file mode 100644

index 8dcf79c..0000000

--- a/sentry_sdk/_functools.py

+++ /dev/null

@@ -1,66 +0,0 @@

-"""

-A backport of Python 3 functools to Python 2/3. The only important change

-we rely upon is that `update_wrapper` handles AttributeError gracefully.

-"""

-

-from functools import partial

-

-from sentry_sdk._types import MYPY

-

-if MYPY:

- from typing import Any

- from typing import Callable

-

-

-WRAPPER_ASSIGNMENTS = (

- "__module__",

- "__name__",

- "__qualname__",

- "__doc__",

- "__annotations__",

-)

-WRAPPER_UPDATES = ("__dict__",)

-

-

-def update_wrapper(

- wrapper, wrapped, assigned=WRAPPER_ASSIGNMENTS, updated=WRAPPER_UPDATES

-):

- # type: (Any, Any, Any, Any) -> Any

- """Update a wrapper function to look like the wrapped function

-

- wrapper is the function to be updated

- wrapped is the original function

- assigned is a tuple naming the attributes assigned directly

- from the wrapped function to the wrapper function (defaults to

- functools.WRAPPER_ASSIGNMENTS)

- updated is a tuple naming the attributes of the wrapper that

- are updated with the corresponding attribute from the wrapped

- function (defaults to functools.WRAPPER_UPDATES)

- """

- for attr in assigned:

- try:

- value = getattr(wrapped, attr)

- except AttributeError:

- pass

- else:

- setattr(wrapper, attr, value)

- for attr in updated:

- getattr(wrapper, attr).update(getattr(wrapped, attr, {}))

- # Issue #17482: set __wrapped__ last so we don't inadvertently copy it

- # from the wrapped function when updating __dict__

- wrapper.__wrapped__ = wrapped

- # Return the wrapper so this can be used as a decorator via partial()

- return wrapper

-

-

-def wraps(wrapped, assigned=WRAPPER_ASSIGNMENTS, updated=WRAPPER_UPDATES):

- # type: (Callable[..., Any], Any, Any) -> Callable[[Callable[..., Any]], Callable[..., Any]]

- """Decorator factory to apply update_wrapper() to a wrapper function

-

- Returns a decorator that invokes update_wrapper() with the decorated

- function as the wrapper argument and the arguments to wraps() as the

- remaining arguments. Default arguments are as for update_wrapper().

- This is a convenience function to simplify applying partial() to

- update_wrapper().

- """

- return partial(update_wrapper, wrapped=wrapped, assigned=assigned, updated=updated)

diff --git a/sentry_sdk/_queue.py b/sentry_sdk/_queue.py

deleted file mode 100644

index e368da2..0000000

--- a/sentry_sdk/_queue.py

+++ /dev/null

@@ -1,227 +0,0 @@

-"""

-A fork of Python 3.6's stdlib queue with Lock swapped out for RLock to avoid a

-deadlock while garbage collecting.

-

-See

-https://codewithoutrules.com/2017/08/16/concurrency-python/

-https://bugs.python.org/issue14976

-https://github.com/sqlalchemy/sqlalchemy/blob/4eb747b61f0c1b1c25bdee3856d7195d10a0c227/lib/sqlalchemy/queue.py#L1

-

-We also vendor the code to evade eventlet's broken monkeypatching, see

-https://github.com/getsentry/sentry-python/pull/484

-"""

-

-import threading

-

-from collections import deque

-from time import time

-

-from sentry_sdk._types import MYPY

-

-if MYPY:

- from typing import Any

-

-__all__ = ["Empty", "Full", "Queue"]

-

-

-class Empty(Exception):

- "Exception raised by Queue.get(block=0)/get_nowait()."

- pass

-

-

-class Full(Exception):

- "Exception raised by Queue.put(block=0)/put_nowait()."

- pass

-

-

-class Queue(object):

- """Create a queue object with a given maximum size.

-

- If maxsize is <= 0, the queue size is infinite.

- """

-

- def __init__(self, maxsize=0):

- self.maxsize = maxsize

- self._init(maxsize)

-

- # mutex must be held whenever the queue is mutating. All methods

- # that acquire mutex must release it before returning. mutex

- # is shared between the three conditions, so acquiring and

- # releasing the conditions also acquires and releases mutex.

- self.mutex = threading.RLock()

-

- # Notify not_empty whenever an item is added to the queue; a

- # thread waiting to get is notified then.

- self.not_empty = threading.Condition(self.mutex)

-

- # Notify not_full whenever an item is removed from the queue;

- # a thread waiting to put is notified then.

- self.not_full = threading.Condition(self.mutex)

-

- # Notify all_tasks_done whenever the number of unfinished tasks

- # drops to zero; thread waiting to join() is notified to resume

- self.all_tasks_done = threading.Condition(self.mutex)

- self.unfinished_tasks = 0

-

- def task_done(self):

- """Indicate that a formerly enqueued task is complete.

-

- Used by Queue consumer threads. For each get() used to fetch a task,

- a subsequent call to task_done() tells the queue that the processing

- on the task is complete.

-

- If a join() is currently blocking, it will resume when all items

- have been processed (meaning that a task_done() call was received

- for every item that had been put() into the queue).

-

- Raises a ValueError if called more times than there were items

- placed in the queue.

- """

- with self.all_tasks_done:

- unfinished = self.unfinished_tasks - 1

- if unfinished <= 0:

- if unfinished < 0:

- raise ValueError("task_done() called too many times")

- self.all_tasks_done.notify_all()

- self.unfinished_tasks = unfinished

-

- def join(self):

- """Blocks until all items in the Queue have been gotten and processed.

-

- The count of unfinished tasks goes up whenever an item is added to the

- queue. The count goes down whenever a consumer thread calls task_done()

- to indicate the item was retrieved and all work on it is complete.

-

- When the count of unfinished tasks drops to zero, join() unblocks.

- """

- with self.all_tasks_done:

- while self.unfinished_tasks:

- self.all_tasks_done.wait()

-

- def qsize(self):

- """Return the approximate size of the queue (not reliable!)."""

- with self.mutex:

- return self._qsize()

-

- def empty(self):

- """Return True if the queue is empty, False otherwise (not reliable!).

-

- This method is likely to be removed at some point. Use qsize() == 0

- as a direct substitute, but be aware that either approach risks a race

- condition where a queue can grow before the result of empty() or

- qsize() can be used.

-

- To create code that needs to wait for all queued tasks to be

- completed, the preferred technique is to use the join() method.

- """

- with self.mutex:

- return not self._qsize()

-

- def full(self):

- """Return True if the queue is full, False otherwise (not reliable!).

-

- This method is likely to be removed at some point. Use qsize() >= n

- as a direct substitute, but be aware that either approach risks a race

- condition where a queue can shrink before the result of full() or

- qsize() can be used.

- """

- with self.mutex:

- return 0 < self.maxsize <= self._qsize()

-

- def put(self, item, block=True, timeout=None):

- """Put an item into the queue.

-

- If optional args 'block' is true and 'timeout' is None (the default),

- block if necessary until a free slot is available. If 'timeout' is

- a non-negative number, it blocks at most 'timeout' seconds and raises

- the Full exception if no free slot was available within that time.

- Otherwise ('block' is false), put an item on the queue if a free slot

- is immediately available, else raise the Full exception ('timeout'

- is ignored in that case).

- """

- with self.not_full:

- if self.maxsize > 0:

- if not block:

- if self._qsize() >= self.maxsize:

- raise Full()

- elif timeout is None:

- while self._qsize() >= self.maxsize:

- self.not_full.wait()

- elif timeout < 0:

- raise ValueError("'timeout' must be a non-negative number")

- else:

- endtime = time() + timeout

- while self._qsize() >= self.maxsize:

- remaining = endtime - time()

- if remaining <= 0.0:

- raise Full

- self.not_full.wait(remaining)

- self._put(item)

- self.unfinished_tasks += 1

- self.not_empty.notify()

-

- def get(self, block=True, timeout=None):

- """Remove and return an item from the queue.

-

- If optional args 'block' is true and 'timeout' is None (the default),

- block if necessary until an item is available. If 'timeout' is

- a non-negative number, it blocks at most 'timeout' seconds and raises

- the Empty exception if no item was available within that time.

- Otherwise ('block' is false), return an item if one is immediately

- available, else raise the Empty exception ('timeout' is ignored

- in that case).

- """

- with self.not_empty:

- if not block:

- if not self._qsize():

- raise Empty()

- elif timeout is None:

- while not self._qsize():

- self.not_empty.wait()

- elif timeout < 0:

- raise ValueError("'timeout' must be a non-negative number")

- else:

- endtime = time() + timeout

- while not self._qsize():

- remaining = endtime - time()

- if remaining <= 0.0:

- raise Empty()

- self.not_empty.wait(remaining)

- item = self._get()

- self.not_full.notify()

- return item

-

- def put_nowait(self, item):

- """Put an item into the queue without blocking.

-

- Only enqueue the item if a free slot is immediately available.

- Otherwise raise the Full exception.

- """

- return self.put(item, block=False)

-

- def get_nowait(self):

- """Remove and return an item from the queue without blocking.

-

- Only get an item if one is immediately available. Otherwise

- raise the Empty exception.

- """

- return self.get(block=False)

-

- # Override these methods to implement other queue organizations

- # (e.g. stack or priority queue).

- # These will only be called with appropriate locks held

-

- # Initialize the queue representation

- def _init(self, maxsize):

- self.queue = deque() # type: Any

-

- def _qsize(self):

- return len(self.queue)

-

- # Put a new item in the queue

- def _put(self, item):

- self.queue.append(item)

-

- # Get an item from the queue

- def _get(self):

- return self.queue.popleft()

diff --git a/sentry_sdk/_types.py b/sentry_sdk/_types.py

deleted file mode 100644

index 7ce7e9e..0000000

--- a/sentry_sdk/_types.py

+++ /dev/null

@@ -1,50 +0,0 @@

-try:

- from typing import TYPE_CHECKING as MYPY

-except ImportError:

- MYPY = False

-

-

-if MYPY:

- from types import TracebackType

- from typing import Any

- from typing import Callable

- from typing import Dict

- from typing import Optional

- from typing import Tuple

- from typing import Type

- from typing import Union

- from typing_extensions import Literal

-

- ExcInfo = Tuple[

- Optional[Type[BaseException]], Optional[BaseException], Optional[TracebackType]

- ]

-

- Event = Dict[str, Any]

- Hint = Dict[str, Any]

-

- Breadcrumb = Dict[str, Any]

- BreadcrumbHint = Dict[str, Any]

-

- SamplingContext = Dict[str, Any]

-

- EventProcessor = Callable[[Event, Hint], Optional[Event]]

- ErrorProcessor = Callable[[Event, ExcInfo], Optional[Event]]

- BreadcrumbProcessor = Callable[[Breadcrumb, BreadcrumbHint], Optional[Breadcrumb]]

-

- TracesSampler = Callable[[SamplingContext], Union[float, int, bool]]

-

- # https://github.com/python/mypy/issues/5710

- NotImplementedType = Any

-

- EventDataCategory = Literal[

- "default",

- "error",

- "crash",

- "transaction",

- "security",

- "attachment",

- "session",

- "internal",

- ]

- SessionStatus = Literal["ok", "exited", "crashed", "abnormal"]

- EndpointType = Literal["store", "envelope"]

diff --git a/sentry_sdk/api.py b/sentry_sdk/api.py

deleted file mode 100644

index f4a44e4..0000000

--- a/sentry_sdk/api.py

+++ /dev/null

@@ -1,214 +0,0 @@

-import inspect

-

-from sentry_sdk.hub import Hub

-from sentry_sdk.scope import Scope

-

-from sentry_sdk._types import MYPY

-

-if MYPY:

- from typing import Any

- from typing import Dict

- from typing import Optional

- from typing import overload

- from typing import Callable

- from typing import TypeVar

- from typing import ContextManager

- from typing import Union

-

- from sentry_sdk._types import Event, Hint, Breadcrumb, BreadcrumbHint, ExcInfo

- from sentry_sdk.tracing import Span, Transaction

-

- T = TypeVar("T")

- F = TypeVar("F", bound=Callable[..., Any])

-else:

-

- def overload(x):

- # type: (T) -> T

- return x

-

-

-# When changing this, update __all__ in __init__.py too

-__all__ = [

- "capture_event",

- "capture_message",

- "capture_exception",

- "add_breadcrumb",

- "configure_scope",

- "push_scope",

- "flush",

- "last_event_id",

- "start_span",

- "start_transaction",

- "set_tag",

- "set_context",

- "set_extra",

- "set_user",

- "set_level",

-]

-

-

-def hubmethod(f):

- # type: (F) -> F

- f.__doc__ = "%s\n\n%s" % (

- "Alias for :py:meth:`sentry_sdk.Hub.%s`" % f.__name__,

- inspect.getdoc(getattr(Hub, f.__name__)),

- )

- return f

-

-

-def scopemethod(f):

- # type: (F) -> F

- f.__doc__ = "%s\n\n%s" % (

- "Alias for :py:meth:`sentry_sdk.Scope.%s`" % f.__name__,

- inspect.getdoc(getattr(Scope, f.__name__)),

- )

- return f

-

-

-@hubmethod

-def capture_event(

- event, # type: Event

- hint=None, # type: Optional[Hint]

- scope=None, # type: Optional[Any]

- **scope_args # type: Any

-):

- # type: (...) -> Optional[str]

- return Hub.current.capture_event(event, hint, scope=scope, **scope_args)

-

-

-@hubmethod

-def capture_message(

- message, # type: str

- level=None, # type: Optional[str]

- scope=None, # type: Optional[Any]

- **scope_args # type: Any

-):

- # type: (...) -> Optional[str]

- return Hub.current.capture_message(message, level, scope=scope, **scope_args)

-

-

-@hubmethod

-def capture_exception(

- error=None, # type: Optional[Union[BaseException, ExcInfo]]

- scope=None, # type: Optional[Any]

- **scope_args # type: Any

-):

- # type: (...) -> Optional[str]

- return Hub.current.capture_exception(error, scope=scope, **scope_args)

-

-

-@hubmethod

-def add_breadcrumb(

- crumb=None, # type: Optional[Breadcrumb]

- hint=None, # type: Optional[BreadcrumbHint]

- **kwargs # type: Any

-):

- # type: (...) -> None

- return Hub.current.add_breadcrumb(crumb, hint, **kwargs)

-

-

-@overload

-def configure_scope(): # noqa: F811

- # type: () -> ContextManager[Scope]

- pass

-

-

-@overload

-def configure_scope( # noqa: F811

- callback, # type: Callable[[Scope], None]

-):

- # type: (...) -> None

- pass

-

-

-@hubmethod

-def configure_scope( # noqa: F811

- callback=None, # type: Optional[Callable[[Scope], None]]

-):

- # type: (...) -> Optional[ContextManager[Scope]]

- return Hub.current.configure_scope(callback)

-

-

-@overload

-def push_scope(): # noqa: F811

- # type: () -> ContextManager[Scope]

- pass

-

-

-@overload

-def push_scope( # noqa: F811

- callback, # type: Callable[[Scope], None]

-):

- # type: (...) -> None

- pass

-

-

-@hubmethod

-def push_scope( # noqa: F811

- callback=None, # type: Optional[Callable[[Scope], None]]

-):

- # type: (...) -> Optional[ContextManager[Scope]]

- return Hub.current.push_scope(callback)

-

-

-@scopemethod # noqa

-def set_tag(key, value):

- # type: (str, Any) -> None

- return Hub.current.scope.set_tag(key, value)

-

-

-@scopemethod # noqa

-def set_context(key, value):

- # type: (str, Dict[str, Any]) -> None

- return Hub.current.scope.set_context(key, value)

-

-

-@scopemethod # noqa

-def set_extra(key, value):

- # type: (str, Any) -> None

- return Hub.current.scope.set_extra(key, value)

-

-

-@scopemethod # noqa

-def set_user(value):

- # type: (Optional[Dict[str, Any]]) -> None

- return Hub.current.scope.set_user(value)

-

-

-@scopemethod # noqa

-def set_level(value):

- # type: (str) -> None

- return Hub.current.scope.set_level(value)

-

-

-@hubmethod

-def flush(

- timeout=None, # type: Optional[float]

- callback=None, # type: Optional[Callable[[int, float], None]]

-):

- # type: (...) -> None

- return Hub.current.flush(timeout=timeout, callback=callback)

-

-

-@hubmethod

-def last_event_id():

- # type: () -> Optional[str]

- return Hub.current.last_event_id()

-

-

-@hubmethod

-def start_span(

- span=None, # type: Optional[Span]

- **kwargs # type: Any

-):

- # type: (...) -> Span

- return Hub.current.start_span(span=span, **kwargs)

-

-

-@hubmethod

-def start_transaction(

- transaction=None, # type: Optional[Transaction]

- **kwargs # type: Any

-):

- # type: (...) -> Transaction

- return Hub.current.start_transaction(transaction, **kwargs)

diff --git a/sentry_sdk/attachments.py b/sentry_sdk/attachments.py

deleted file mode 100644

index b7b6b0b..0000000

--- a/sentry_sdk/attachments.py

+++ /dev/null

@@ -1,55 +0,0 @@

-import os

-import mimetypes

-

-from sentry_sdk._types import MYPY

-from sentry_sdk.envelope import Item, PayloadRef

-

-if MYPY:

- from typing import Optional, Union, Callable

-

-

-class Attachment(object):

- def __init__(

- self,

- bytes=None, # type: Union[None, bytes, Callable[[], bytes]]

- filename=None, # type: Optional[str]

- path=None, # type: Optional[str]

- content_type=None, # type: Optional[str]

- add_to_transactions=False, # type: bool

- ):

- # type: (...) -> None

- if bytes is None and path is None:

- raise TypeError("path or raw bytes required for attachment")

- if filename is None and path is not None:

- filename = os.path.basename(path)

- if filename is None:

- raise TypeError("filename is required for attachment")

- if content_type is None:

- content_type = mimetypes.guess_type(filename)[0]

- self.bytes = bytes

- self.filename = filename

- self.path = path

- self.content_type = content_type

- self.add_to_transactions = add_to_transactions

-

- def to_envelope_item(self):

- # type: () -> Item

- """Returns an envelope item for this attachment."""

- payload = None # type: Union[None, PayloadRef, bytes]

- if self.bytes is not None:

- if callable(self.bytes):

- payload = self.bytes()

- else:

- payload = self.bytes

- else:

- payload = PayloadRef(path=self.path)

- return Item(

- payload=payload,

- type="attachment",

- content_type=self.content_type,

- filename=self.filename,

- )

-

- def __repr__(self):

- # type: () -> str

- return "<Attachment %r>" % (self.filename,)

diff --git a/sentry_sdk/client.py b/sentry_sdk/client.py

deleted file mode 100644

index 1720993..0000000

--- a/sentry_sdk/client.py

+++ /dev/null

@@ -1,462 +0,0 @@

-import os

-import uuid

-import random

-from datetime import datetime

-import socket

-

-from sentry_sdk._compat import string_types, text_type, iteritems

-from sentry_sdk.utils import (

- capture_internal_exceptions,

- current_stacktrace,

- disable_capture_event,

- format_timestamp,

- get_type_name,

- get_default_release,

- handle_in_app,

- logger,

-)

-from sentry_sdk.serializer import serialize

-from sentry_sdk.transport import make_transport

-from sentry_sdk.consts import DEFAULT_OPTIONS, SDK_INFO, ClientConstructor

-from sentry_sdk.integrations import setup_integrations

-from sentry_sdk.utils import ContextVar

-from sentry_sdk.sessions import SessionFlusher

-from sentry_sdk.envelope import Envelope

-from sentry_sdk.tracing_utils import has_tracestate_enabled, reinflate_tracestate

-

-from sentry_sdk._types import MYPY

-

-if MYPY:

- from typing import Any

- from typing import Callable

- from typing import Dict

- from typing import Optional

-

- from sentry_sdk.scope import Scope

- from sentry_sdk._types import Event, Hint

- from sentry_sdk.session import Session

-

-

-_client_init_debug = ContextVar("client_init_debug")

-

-

-def _get_options(*args, **kwargs):

- # type: (*Optional[str], **Any) -> Dict[str, Any]

- if args and (isinstance(args[0], (text_type, bytes, str)) or args[0] is None):

- dsn = args[0] # type: Optional[str]

- args = args[1:]

- else:

- dsn = None

-

- rv = dict(DEFAULT_OPTIONS)

- options = dict(*args, **kwargs)

- if dsn is not None and options.get("dsn") is None:

- options["dsn"] = dsn

-

- for key, value in iteritems(options):

- if key not in rv:

- raise TypeError("Unknown option %r" % (key,))

- rv[key] = value

-

- if rv["dsn"] is None:

- rv["dsn"] = os.environ.get("SENTRY_DSN")

-

- if rv["release"] is None:

- rv["release"] = get_default_release()

-

- if rv["environment"] is None:

- rv["environment"] = os.environ.get("SENTRY_ENVIRONMENT") or "production"

-

- if rv["server_name"] is None and hasattr(socket, "gethostname"):

- rv["server_name"] = socket.gethostname()

-

- return rv

-

-

-class _Client(object):

- """The client is internally responsible for capturing the events and

- forwarding them to sentry through the configured transport. It takes

- the client options as keyword arguments and optionally the DSN as first

- argument.

- """

-

- def __init__(self, *args, **kwargs):

- # type: (*Any, **Any) -> None

- self.options = get_options(*args, **kwargs) # type: Dict[str, Any]

- self._init_impl()

-

- def __getstate__(self):

- # type: () -> Any

- return {"options": self.options}

-

- def __setstate__(self, state):

- # type: (Any) -> None

- self.options = state["options"]

- self._init_impl()

-

- def _init_impl(self):

- # type: () -> None

- old_debug = _client_init_debug.get(False)

-

- def _capture_envelope(envelope):

- # type: (Envelope) -> None

- if self.transport is not None:

- self.transport.capture_envelope(envelope)

-

- try:

- _client_init_debug.set(self.options["debug"])

- self.transport = make_transport(self.options)

-

- self.session_flusher = SessionFlusher(capture_func=_capture_envelope)

-

- request_bodies = ("always", "never", "small", "medium")

- if self.options["request_bodies"] not in request_bodies:

- raise ValueError(

- "Invalid value for request_bodies. Must be one of {}".format(

- request_bodies

- )

- )

-

- self.integrations = setup_integrations(

- self.options["integrations"],

- with_defaults=self.options["default_integrations"],

- with_auto_enabling_integrations=self.options[

- "auto_enabling_integrations"

- ],

- )

- finally:

- _client_init_debug.set(old_debug)

-

- @property

- def dsn(self):

- # type: () -> Optional[str]

- """Returns the configured DSN as string."""

- return self.options["dsn"]

-

- def _prepare_event(

- self,

- event, # type: Event

- hint, # type: Hint

- scope, # type: Optional[Scope]

- ):

- # type: (...) -> Optional[Event]

-

- if event.get("timestamp") is None:

- event["timestamp"] = datetime.utcnow()

-

- if scope is not None:

- is_transaction = event.get("type") == "transaction"

- event_ = scope.apply_to_event(event, hint)

-

- # one of the event/error processors returned None

- if event_ is None:

- if self.transport:

- self.transport.record_lost_event(

- "event_processor",

- data_category=("transaction" if is_transaction else "error"),

- )

- return None

-

- event = event_

-

- if (

- self.options["attach_stacktrace"]

- and "exception" not in event

- and "stacktrace" not in event

- and "threads" not in event

- ):

- with capture_internal_exceptions():

- event["threads"] = {

- "values": [

- {

- "stacktrace": current_stacktrace(

- self.options["with_locals"]

- ),

- "crashed": False,

- "current": True,

- }

- ]

- }

-

- for key in "release", "environment", "server_name", "dist":

- if event.get(key) is None and self.options[key] is not None:

- event[key] = text_type(self.options[key]).strip()

- if event.get("sdk") is None:

- sdk_info = dict(SDK_INFO)

- sdk_info["integrations"] = sorted(self.integrations.keys())

- event["sdk"] = sdk_info

-

- if event.get("platform") is None:

- event["platform"] = "python"

-

- event = handle_in_app(

- event, self.options["in_app_exclude"], self.options["in_app_include"]

- )

-

- # Postprocess the event here so that annotated types do

- # generally not surface in before_send

- if event is not None:

- event = serialize(

- event,

- smart_transaction_trimming=self.options["_experiments"].get(

- "smart_transaction_trimming"

- ),

- )

-

- before_send = self.options["before_send"]

- if before_send is not None and event.get("type") != "transaction":

- new_event = None

- with capture_internal_exceptions():

- new_event = before_send(event, hint or {})

- if new_event is None:

- logger.info("before send dropped event (%s)", event)

- if self.transport:

- self.transport.record_lost_event(

- "before_send", data_category="error"

- )

- event = new_event # type: ignore

-

- return event

-

- def _is_ignored_error(self, event, hint):

- # type: (Event, Hint) -> bool

- exc_info = hint.get("exc_info")

- if exc_info is None:

- return False

-

- type_name = get_type_name(exc_info[0])

- full_name = "%s.%s" % (exc_info[0].__module__, type_name)

-

- for errcls in self.options["ignore_errors"]:

- # String types are matched against the type name in the

- # exception only

- if isinstance(errcls, string_types):

- if errcls == full_name or errcls == type_name:

- return True

- else:

- if issubclass(exc_info[0], errcls):

- return True

-

- return False

-

- def _should_capture(

- self,

- event, # type: Event

- hint, # type: Hint

- scope=None, # type: Optional[Scope]

- ):

- # type: (...) -> bool

- if event.get("type") == "transaction":

- # Transactions are sampled independent of error events.

- return True

-

- if scope is not None and not scope._should_capture:

- return False

-

- if (

- self.options["sample_rate"] < 1.0

- and random.random() >= self.options["sample_rate"]

- ):

- # record a lost event if we did not sample this.

- if self.transport:

- self.transport.record_lost_event("sample_rate", data_category="error")

- return False

-

- if self._is_ignored_error(event, hint):

- return False

-

- return True

-

- def _update_session_from_event(

- self,

- session, # type: Session

- event, # type: Event

- ):

- # type: (...) -> None

-

- crashed = False

- errored = False

- user_agent = None

-

- exceptions = (event.get("exception") or {}).get("values")

- if exceptions:

- errored = True

- for error in exceptions:

- mechanism = error.get("mechanism")

- if mechanism and mechanism.get("handled") is False:

- crashed = True

- break

-

- user = event.get("user")

-

- if session.user_agent is None:

- headers = (event.get("request") or {}).get("headers")

- for (k, v) in iteritems(headers or {}):

- if k.lower() == "user-agent":

- user_agent = v

- break

-

- session.update(

- status="crashed" if crashed else None,

- user=user,

- user_agent=user_agent,

- errors=session.errors + (errored or crashed),

- )

-

- def capture_event(

- self,

- event, # type: Event

- hint=None, # type: Optional[Hint]

- scope=None, # type: Optional[Scope]

- ):

- # type: (...) -> Optional[str]

- """Captures an event.

-

- :param event: A ready-made event that can be directly sent to Sentry.

-

- :param hint: Contains metadata about the event that can be read from `before_send`, such as the original exception object or an HTTP request object.

-

- :returns: An event ID. May be `None` if there is no DSN set or if the SDK decided to discard the event for other reasons. In such situations setting `debug=True` on `init()` may help.

- """

- if disable_capture_event.get(False):

- return None

-

- if self.transport is None:

- return None

- if hint is None:

- hint = {}

- event_id = event.get("event_id")

- hint = dict(hint or ()) # type: Hint

-

- if event_id is None:

- event["event_id"] = event_id = uuid.uuid4().hex

- if not self._should_capture(event, hint, scope):

- return None

-

- event_opt = self._prepare_event(event, hint, scope)

- if event_opt is None:

- return None

-

- # whenever we capture an event we also check if the session needs

- # to be updated based on that information.

- session = scope._session if scope else None

- if session:

- self._update_session_from_event(session, event)

-

- attachments = hint.get("attachments")

- is_transaction = event_opt.get("type") == "transaction"

-

- # this is outside of the `if` immediately below because even if we don't

- # use the value, we want to make sure we remove it before the event is

- # sent

- raw_tracestate = (

- event_opt.get("contexts", {}).get("trace", {}).pop("tracestate", "")

- )

-

- # Transactions or events with attachments should go to the /envelope/

- # endpoint.

- if is_transaction or attachments:

-

- headers = {

- "event_id": event_opt["event_id"],

- "sent_at": format_timestamp(datetime.utcnow()),

- }

-

- tracestate_data = raw_tracestate and reinflate_tracestate(

- raw_tracestate.replace("sentry=", "")

- )

- if tracestate_data and has_tracestate_enabled():

- headers["trace"] = tracestate_data

-

- envelope = Envelope(headers=headers)

-

- if is_transaction:

- envelope.add_transaction(event_opt)

- else:

- envelope.add_event(event_opt)

-

- for attachment in attachments or ():

- envelope.add_item(attachment.to_envelope_item())

- self.transport.capture_envelope(envelope)

- else:

- # All other events go to the /store/ endpoint.

- self.transport.capture_event(event_opt)

- return event_id

-

- def capture_session(

- self, session # type: Session

- ):

- # type: (...) -> None

- if not session.release:

- logger.info("Discarded session update because of missing release")

- else:

- self.session_flusher.add_session(session)

-

- def close(

- self,

- timeout=None, # type: Optional[float]

- callback=None, # type: Optional[Callable[[int, float], None]]

- ):

- # type: (...) -> None

- """

- Close the client and shut down the transport. Arguments have the same

- semantics as :py:meth:`Client.flush`.

- """

- if self.transport is not None:

- self.flush(timeout=timeout, callback=callback)

- self.session_flusher.kill()

- self.transport.kill()

- self.transport = None

-

- def flush(

- self,

- timeout=None, # type: Optional[float]

- callback=None, # type: Optional[Callable[[int, float], None]]

- ):

- # type: (...) -> None

- """

- Wait for the current events to be sent.

-

- :param timeout: Wait for at most `timeout` seconds. If no `timeout` is provided, the `shutdown_timeout` option value is used.

-

- :param callback: Is invoked with the number of pending events and the configured timeout.

- """

- if self.transport is not None:

- if timeout is None:

- timeout = self.options["shutdown_timeout"]

- self.session_flusher.flush()

- self.transport.flush(timeout=timeout, callback=callback)

-

- def __enter__(self):

- # type: () -> _Client

- return self

-

- def __exit__(self, exc_type, exc_value, tb):

- # type: (Any, Any, Any) -> None

- self.close()

-

-

-from sentry_sdk._types import MYPY

-

-if MYPY:

- # Make mypy, PyCharm and other static analyzers think `get_options` is a

- # type to have nicer autocompletion for params.

- #

- # Use `ClientConstructor` to define the argument types of `init` and

- # `Dict[str, Any]` to tell static analyzers about the return type.

-

- class get_options(ClientConstructor, Dict[str, Any]): # noqa: N801

- pass

-

- class Client(ClientConstructor, _Client):

- pass

-

-

-else:

- # Alias `get_options` for actual usage. Go through the lambda indirection

- # to throw PyCharm off of the weakly typed signature (it would otherwise

- # discover both the weakly typed signature of `_init` and our faked `init`

- # type).

-

- get_options = (lambda: _get_options)()

- Client = (lambda: _Client)()
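Note on the removed `_is_ignored_error` logic above: string entries in `ignore_errors` are compared against the exception's bare and module-qualified type name only, while class entries are matched with `issubclass`. The following is a minimal standalone sketch of that rule, not the SDK's code. The function name `is_ignored_error` and its signature are hypothetical, and it reads `__name__` directly where the removed code calls the `get_type_name` helper.

```python
def is_ignored_error(exc_type, ignore_errors):
    """Return True if exc_type matches any entry in ignore_errors.

    Hypothetical helper for illustration; it mirrors the matching rule
    described above without depending on sentry_sdk.
    """
    type_name = exc_type.__name__  # assumption: stands in for get_type_name()
    full_name = "%s.%s" % (exc_type.__module__, type_name)

    for entry in ignore_errors:
        if isinstance(entry, str):
            # Strings match the exact type name only, never subclasses.
            if entry in (full_name, type_name):
                return True
        elif issubclass(exc_type, entry):
            # Classes match the type itself and all of its subclasses.
            return True
    return False
```

One consequence of this design is that `"LookupError"` as a string does not ignore `KeyError`, whereas the class `LookupError` does.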

diff --git a/sentry_sdk/consts.py b/sentry_sdk/consts.py

deleted file mode 100644

index fe3b2f0..0000000

--- a/sentry_sdk/consts.py

+++ /dev/null

@@ -1,109 +0,0 @@

-from sentry_sdk._types import MYPY

-

-if MYPY:

- import sentry_sdk

-

- from typing import Optional

- from typing import Callable

- from typing import Union

- from typing import List

- from typing import Type

- from typing import Dict

- from typing import Any

- from typing import Sequence

- from typing_extensions import TypedDict

-

- from sentry_sdk.integrations import Integration

-

- from sentry_sdk._types import (

- BreadcrumbProcessor,

- Event,

- EventProcessor,

- TracesSampler,

- )

-

- # Experiments are feature flags to enable and disable certain unstable SDK

- # functionality. Changing them from the defaults (`None`) in production

- # code is highly discouraged. They are not subject to any stability

- # guarantees such as the ones from semantic versioning.

- Experiments = TypedDict(

- "Experiments",

- {

- "max_spans": Optional[int],

- "record_sql_params": Optional[bool],

- "smart_transaction_trimming": Optional[bool],

- "propagate_tracestate": Optional[bool],

- },

- total=False,

- )

-

-DEFAULT_QUEUE_SIZE = 100

-DEFAULT_MAX_BREADCRUMBS = 100

-

-

-# This type exists to trick mypy and PyCharm into thinking `init` and `Client`

-# take these arguments (even though they take opaque **kwargs)

-class ClientConstructor(object):

- def __init__(

- self,

- dsn=None, # type: Optional[str]

- with_locals=True, # type: bool

- max_breadcrumbs=DEFAULT_MAX_BREADCRUMBS, # type: int

- release=None, # type: Optional[str]

- environment=None, # type: Optional[str]

- server_name=None, # type: Optional[str]

- shutdown_timeout=2, # type: float

- integrations=[], # type: Sequence[Integration] # noqa: B006

- in_app_include=[], # type: List[str] # noqa: B006

- in_app_exclude=[], # type: List[str] # noqa: B006

- default_integrations=True, # type: bool

- dist=None, # type: Optional[str]

- transport=None, # type: Optional[Union[sentry_sdk.transport.Transport, Type[sentry_sdk.transport.Transport], Callable[[Event], None]]]

- transport_queue_size=DEFAULT_QUEUE_SIZE, # type: int

- sample_rate=1.0, # type: float

- send_default_pii=False, # type: bool

- http_proxy=None, # type: Optional[str]

- https_proxy=None, # type: Optional[str]

- ignore_errors=[], # type: List[Union[type, str]] # noqa: B006

- request_bodies="medium", # type: str

- before_send=None, # type: Optional[EventProcessor]

- before_breadcrumb=None, # type: Optional[BreadcrumbProcessor]

- debug=False, # type: bool

- attach_stacktrace=False, # type: bool

- ca_certs=None, # type: Optional[str]

- propagate_traces=True, # type: bool

- traces_sample_rate=None, # type: Optional[float]

- traces_sampler=None, # type: Optional[TracesSampler]

- auto_enabling_integrations=True, # type: bool

- auto_session_tracking=True, # type: bool

- send_client_reports=True, # type: bool

- _experiments={}, # type: Experiments # noqa: B006

- ):

- # type: (...) -> None

- pass

-

-

-def _get_default_options():

- # type: () -> Dict[str, Any]

- import inspect

-

- if hasattr(inspect, "getfullargspec"):

- getargspec = inspect.getfullargspec

- else:

- getargspec = inspect.getargspec # type: ignore

-

- a = getargspec(ClientConstructor.__init__)

- defaults = a.defaults or ()

- return dict(zip(a.args[-len(defaults) :], defaults))

-

-

-DEFAULT_OPTIONS = _get_default_options()

-del _get_default_options

-

-

-VERSION = "1.5.8"

-SDK_INFO = {

- "name": "sentry.python",

- "version": VERSION,

- "packages": [{"name": "pypi:sentry-sdk", "version": VERSION}],

-}
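Note on the removed `_get_default_options` above: it derives the default options dictionary from the keyword defaults of `ClientConstructor.__init__` by introspecting the signature. A minimal standalone sketch of the same technique follows. `ExampleConstructor` and `get_default_options` are hypothetical names used only for illustration, and the sketch assumes Python 3, where `inspect.getfullargspec` is available.

```python
import inspect


class ExampleConstructor(object):
    # Hypothetical stand-in for a constructor whose keyword defaults
    # double as the option defaults.
    def __init__(self, dsn=None, sample_rate=1.0, debug=False):
        pass


def get_default_options(ctor):
    """Map each defaulted argument of ctor.__init__ to its default value."""
    spec = inspect.getfullargspec(ctor.__init__)
    defaults = spec.defaults or ()
    # Defaults always belong to the trailing arguments, so pair them
    # with the last len(defaults) argument names.
    return dict(zip(spec.args[-len(defaults):], defaults))
```

Keeping the defaults in the signature means static analyzers and the runtime read them from a single place.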

diff --git a/sentry_sdk/debug.py b/sentry_sdk/debug.py

deleted file mode 100644

index fe8ae50..0000000

--- a/sentry_sdk/debug.py

+++ /dev/null

@@ -1,44 +0,0 @@

-import sys

-import logging

-

-from sentry_sdk import utils

-from sentry_sdk.hub import Hub

-from sentry_sdk.utils import logger

-from sentry_sdk.client import _client_init_debug

-from logging import LogRecord

-

-

-class _HubBasedClientFilter(logging.Filter):

- def filter(self, record):

- # type: (LogRecord) -> bool

- if _client_init_debug.get(False):

- return True

- hub = Hub.current

- if hub is not None and hub.client is not None:

- return hub.client.options["debug"]

- return False

-

-

-def init_debug_support():

- # type: () -> None

- if not logger.handlers:

- configure_logger()

- configure_debug_hub()

-

-

-def configure_logger():

- # type: () -> None

- _handler = logging.StreamHandler(sys.stderr)

- _handler.setFormatter(logging.Formatter(" [sentry] %(levelname)s: %(message)s"))

- logger.addHandler(_handler)

- logger.setLevel(logging.DEBUG)

- logger.addFilter(_HubBasedClientFilter())

-

-

-def configure_debug_hub():

- # type: () -> None

- def _get_debug_hub():

- # type: () -> Hub

- return Hub.current

-

- utils._get_debug_hub = _get_debug_hub
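Note on the removed `_HubBasedClientFilter` above: it is a `logging.Filter` that lets SDK log records through only while a debug flag is on. The sketch below shows the same pattern with the standard library only. `FlagFilter`, `ListHandler`, and `build_logger` are hypothetical names, and the flag is read from a plain callable rather than from the current hub's client options.

```python
import logging


class FlagFilter(logging.Filter):
    """Pass records only while the supplied callable returns a truthy value."""

    def __init__(self, is_enabled):
        super().__init__()
        self._is_enabled = is_enabled

    def filter(self, record):
        return bool(self._is_enabled())


class ListHandler(logging.Handler):
    """Collect formatted messages in memory so the effect is easy to inspect."""

    def __init__(self):
        super().__init__()
        self.messages = []

    def emit(self, record):
        self.messages.append(record.getMessage())


def build_logger(name, is_enabled):
    # Mirrors the shape of configure_logger(): handler, DEBUG level, filter.
    logger = logging.getLogger(name)
    handler = ListHandler()
    logger.addHandler(handler)
    logger.setLevel(logging.DEBUG)
    logger.addFilter(FlagFilter(is_enabled))
    logger.propagate = False
    return logger, handler
```

Because the filter evaluates the callable on every record, toggling the flag at runtime takes effect immediately without reconfiguring the logger.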

diff --git a/sentry_sdk/envelope.py b/sentry_sdk/envelope.py

deleted file mode 100644

index 928c691..0000000

--- a/sentry_sdk/envelope.py

+++ /dev/null

@@ -1,317 +0,0 @@

-import io

-import json

-import mimetypes

-

-from sentry_sdk._compat import text_type, PY2

-from sentry_sdk._types import MYPY

-from sentry_sdk.session import Session

-from sentry_sdk.utils import json_dumps, capture_internal_exceptions

-

-if MYPY:

- from typing import Any

- from typing import Optional

- from typing import Union

- from typing import Dict

- from typing import List

- from typing import Iterator

-

- from sentry_sdk._types import Event, EventDataCategory

-

-

-def parse_json(data):

- # type: (Union[bytes, text_type]) -> Any

- # on some python 3 versions this needs to be bytes

- if not PY2 and isinstance(data, bytes):

- data = data.decode("utf-8", "replace")

- return json.loads(data)

-

-

-class Envelope(object):

- def __init__(

- self,

- headers=None, # type: Optional[Dict[str, Any]]

- items=None, # type: Optional[List[Item]]

- ):

- # type: (...) -> None

- if headers is not None:

- headers = dict(headers)

- self.headers = headers or {}

- if items is None:

- items = []

- else:

- items = list(items)

- self.items = items

-

- @property

- def description(self):

- # type: (...) -> str

- return "envelope with %s items (%s)" % (

- len(self.items),

- ", ".join(x.data_category for x in self.items),

- )

-

- def add_event(

- self, event # type: Event

- ):

- # type: (...) -> None

- self.add_item(Item(payload=PayloadRef(json=event), type="event"))

-

- def add_transaction(

- self, transaction # type: Event

- ):

- # type: (...) -> None

- self.add_item(Item(payload=PayloadRef(json=transaction), type="transaction"))

-

- def add_session(

- self, session # type: Union[Session, Any]

- ):

- # type: (...) -> None

- if isinstance(session, Session):

- session = session.to_json()

- self.add_item(Item(payload=PayloadRef(json=session), type="session"))

-

- def add_sessions(

- self, sessions # type: Any

- ):

- # type: (...) -> None

- self.add_item(Item(payload=PayloadRef(json=sessions), type="sessions"))

-

- def add_item(

- self, item # type: Item

- ):

- # type: (...) -> None

- self.items.append(item)

-

- def get_event(self):

- # type: (...) -> Optional[Event]

- for items in self.items:

- event = items.get_event()

- if event is not None:

- return event

- return None

-

- def get_transaction_event(self):

- # type: (...) -> Optional[Event]

- for item in self.items:

- event = item.get_transaction_event()

- if event is not None:

- return event

- return None

-

- def __iter__(self):

- # type: (...) -> Iterator[Item]

- return iter(self.items)

-

- def serialize_into(

- self, f # type: Any

- ):

- # type: (...) -> None

- f.write(json_dumps(self.headers))

- f.write(b"\n")

- for item in self.items:

- item.serialize_into(f)

-

- def serialize(self):

- # type: (...) -> bytes

- out = io.BytesIO()

- self.serialize_into(out)

- return out.getvalue()

-

- @classmethod

- def deserialize_from(

- cls, f # type: Any

- ):

- # type: (...) -> Envelope

- headers = parse_json(f.readline())

- items = []

- while 1:

- item = Item.deserialize_from(f)

- if item is None:

- break

- items.append(item)

- return cls(headers=headers, items=items)

-

- @classmethod

- def deserialize(

- cls, bytes # type: bytes

- ):

- # type: (...) -> Envelope

- return cls.deserialize_from(io.BytesIO(bytes))

-

- def __repr__(self):

- # type: (...) -> str

- return "<Envelope headers=%r items=%r>" % (self.headers, self.items)

-

-

-class PayloadRef(object):

- def __init__(

- self,

- bytes=None, # type: Optional[bytes]

- path=None, # type: Optional[Union[bytes, text_type]]

- json=None, # type: Optional[Any]

- ):

- # type: (...) -> None

- self.json = json

- self.bytes = bytes

- self.path = path

-

- def get_bytes(self):

- # type: (...) -> bytes

- if self.bytes is None:

- if self.path is not None:

- with capture_internal_exceptions():

- with open(self.path, "rb") as f:

- self.bytes = f.read()

- elif self.json is not None:

- self.bytes = json_dumps(self.json)

- else:

- self.bytes = b""

- return self.bytes

-

- @property

- def inferred_content_type(self):

- # type: (...) -> str

- if self.json is not None:

- return "application/json"

- elif self.path is not None:

- path = self.path

- if isinstance(path, bytes):

- path = path.decode("utf-8", "replace")

- ty = mimetypes.guess_type(path)[0]

- if ty:

- return ty

- return "application/octet-stream"

-

- def __repr__(self):

- # type: (...) -> str

- return "<Payload %r>" % (self.inferred_content_type,)

-

-

-class Item(object):

- def __init__(

- self,

- payload, # type: Union[bytes, text_type, PayloadRef]

- headers=None, # type: Optional[Dict[str, Any]]

- type=None, # type: Optional[str]

- content_type=None, # type: Optional[str]

- filename=None, # type: Optional[str]

- ):

- if headers is not None:

- headers = dict(headers)

- elif headers is None:

- headers = {}

- self.headers = headers

- if isinstance(payload, bytes):

- payload = PayloadRef(bytes=payload)

- elif isinstance(payload, text_type):

- payload = PayloadRef(bytes=payload.encode("utf-8"))

- else:

- payload = payload

-

- if filename is not None:

- headers["filename"] = filename

- if type is not None:

- headers["type"] = type

- if content_type is not None:

- headers["content_type"] = content_type

- elif "content_type" not in headers:

- headers["content_type"] = payload.inferred_content_type

-

- self.payload = payload

-

- def __repr__(self):

- # type: (...) -> str

- return "<Item headers=%r payload=%r data_category=%r>" % (

- self.headers,

- self.payload,

- self.data_category,

- )

-

- @property

- def type(self):

- # type: (...) -> Optional[str]

- return self.headers.get("type")

-

- @property

- def data_category(self):

- # type: (...) -> EventDataCategory

- ty = self.headers.get("type")

- if ty == "session":

- return "session"

- elif ty == "attachment":

- return "attachment"

- elif ty == "transaction":

- return "transaction"

- elif ty == "event":

- return "error"

- elif ty == "client_report":

- return "internal"

- else:

- return "default"

-

- def get_bytes(self):

- # type: (...) -> bytes

- return self.payload.get_bytes()

-

- def get_event(self):

- # type: (...) -> Optional[Event]

- """

- Returns an error event if there is one.

- """

- if self.type == "event" and self.payload.json is not None:

- return self.payload.json

- return None

-

- def get_transaction_event(self):

- # type: (...) -> Optional[Event]

- if self.type == "transaction" and self.payload.json is not None:

- return self.payload.json

- return None

-

- def serialize_into(

- self, f # type: Any

- ):

- # type: (...) -> None

- headers = dict(self.headers)

- bytes = self.get_bytes()

- headers["length"] = len(bytes)

- f.write(json_dumps(headers))

- f.write(b"\n")

- f.write(bytes)

- f.write(b"\n")

-

- def serialize(self):

- # type: (...) -> bytes

- out = io.BytesIO()

- self.serialize_into(out)

- return out.getvalue()

-

- @classmethod

- def deserialize_from(

- cls, f # type: Any

- ):

- # type: (...) -> Optional[Item]

- line = f.readline().rstrip()

- if not line:

- return None

- headers = parse_json(line)

- length = headers.get("length")

- if length is not None:

- payload = f.read(length)

- f.readline()

- else:

- # if no length was specified we need to read up to the end of line

- # and remove it (if it is present, i.e. not the very last char in an eof terminated envelope)

- payload = f.readline().rstrip(b"\n")

- if headers.get("type") in ("event", "transaction", "metric_buckets"):

- rv = cls(headers=headers, payload=PayloadRef(json=parse_json(payload)))

- else:

- rv = cls(headers=headers, payload=payload)

- return rv

-

- @classmethod

- def deserialize(

- cls, bytes # type: bytes

- ):

- # type: (...) -> Optional[Item]

- return cls.deserialize_from(io.BytesIO(bytes))
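Note on the removed `Envelope` and `Item` serializers above: the wire layout is one JSON header line for the envelope, then for each item a JSON header line carrying a `length` field, the raw payload bytes, and a trailing newline. The sketch below round-trips that layout using only the standard library. The function names are hypothetical, items are simplified to `(headers, payload_bytes)` tuples, and `json.dumps` stands in for the SDK's `json_dumps` helper.

```python
import io
import json


def serialize_envelope(headers, items):
    """Write envelope headers, then each item as header line + payload."""
    out = io.BytesIO()
    out.write(json.dumps(headers).encode("utf-8"))
    out.write(b"\n")
    for item_headers, payload in items:
        # Record the payload size so the reader need not scan for newlines.
        item_headers = dict(item_headers, length=len(payload))
        out.write(json.dumps(item_headers).encode("utf-8"))
        out.write(b"\n")
        out.write(payload)
        out.write(b"\n")
    return out.getvalue()


def deserialize_envelope(data):
    """Inverse of serialize_envelope; returns (headers, items)."""
    f = io.BytesIO(data)
    headers = json.loads(f.readline().decode("utf-8"))
    items = []
    while True:
        line = f.readline().rstrip()
        if not line:
            break
        item_headers = json.loads(line.decode("utf-8"))
        length = item_headers.get("length")
        if length is not None:
            payload = f.read(length)
            f.readline()  # consume the newline that follows the payload
        else:
            # Without a length, the payload runs to the end of the line.
            payload = f.readline().rstrip(b"\n")
        items.append((item_headers, payload))
    return headers, items
```

The explicit `length` header is what allows a payload to contain newline bytes, as an attachment might, without confusing the reader.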

diff --git a/sentry_sdk/hub.py b/sentry_sdk/hub.py

deleted file mode 100644

index addca57..0000000

--- a/sentry_sdk/hub.py

+++ /dev/null

@@ -1,708 +0,0 @@

-import copy

-import sys

-

-from datetime import datetime

-from contextlib import contextmanager

-

-from sentry_sdk._compat import with_metaclass

-from sentry_sdk.scope import Scope

-from sentry_sdk.client import Client

-from sentry_sdk.tracing import Span, Transaction

-from sentry_sdk.session import Session

-from sentry_sdk.utils import (

- exc_info_from_error,

- event_from_exception,

- logger,

- ContextVar,

-)

-

-from sentry_sdk._types import MYPY

-

-if MYPY:

- from typing import Union

- from typing import Any

- from typing import Optional

- from typing import Tuple

- from typing import Dict

- from typing import List

- from typing import Callable

- from typing import Generator

- from typing import Type

- from typing import TypeVar

- from typing import overload

- from typing import ContextManager

-

- from sentry_sdk.integrations import Integration

- from sentry_sdk._types import (

- Event,

- Hint,

- Breadcrumb,

- BreadcrumbHint,

- ExcInfo,

- )

- from sentry_sdk.consts import ClientConstructor

-

- T = TypeVar("T")

-

-else:

-

- def overload(x):

- # type: (T) -> T

- return x

-

-

-_local = ContextVar("sentry_current_hub")

-

-

-def _update_scope(base, scope_change, scope_kwargs):

- # type: (Scope, Optional[Any], Dict[str, Any]) -> Scope

- if scope_change and scope_kwargs:

- raise TypeError("cannot provide scope and kwargs")

- if scope_change is not None:

- final_scope = copy.copy(base)

- if callable(scope_change):

- scope_change(final_scope)

- else:

- final_scope.update_from_scope(scope_change)

- elif scope_kwargs:

- final_scope = copy.copy(base)

- final_scope.update_from_kwargs(**scope_kwargs)

- else:

- final_scope = base

- return final_scope

-

-

-def _should_send_default_pii():

- # type: () -> bool

- client = Hub.current.client

- if not client:

- return False

- return client.options["send_default_pii"]

-

-

-class _InitGuard(object):

- def __init__(self, client):

- # type: (Client) -> None

- self._client = client

-

- def __enter__(self):

- # type: () -> _InitGuard

- return self

-

- def __exit__(self, exc_type, exc_value, tb):

- # type: (Any, Any, Any) -> None

- c = self._client

- if c is not None:

- c.close()

-

-

-def _init(*args, **kwargs):

- # type: (*Optional[str], **Any) -> ContextManager[Any]

- """Initializes the SDK and optionally integrations.

-

- This takes the same arguments as the client constructor.

- """

- client = Client(*args, **kwargs) # type: ignore

- Hub.current.bind_client(client)

- rv = _InitGuard(client)

- return rv

-

-

-from sentry_sdk._types import MYPY

-

-if MYPY:

- # Make mypy, PyCharm and other static analyzers think `init` is a type to

- # have nicer autocompletion for params.

- #

- # Use `ClientConstructor` to define the argument types of `init` and

- # `ContextManager[Any]` to tell static analyzers about the return type.

-

- class init(ClientConstructor, ContextManager[Any]): # noqa: N801

- pass

-

-

-else:

- # Alias `init` for actual usage. Go through the lambda indirection to throw

- # PyCharm off of the weakly typed signature (it would otherwise discover

- # both the weakly typed signature of `_init` and our faked `init` type).

-

- init = (lambda: _init)()

-

-

-class HubMeta(type):

- @property

- def current(cls):

- # type: () -> Hub

- """Returns the current instance of the hub."""

- rv = _local.get(None)

- if rv is None:

- rv = Hub(GLOBAL_HUB)

- _local.set(rv)

- return rv

-

- @property

- def main(cls):

- # type: () -> Hub

- """Returns the main instance of the hub."""

- return GLOBAL_HUB

-

-

-class _ScopeManager(object):

- def __init__(self, hub):

- # type: (Hub) -> None

- self._hub = hub

- self._original_len = len(hub._stack)

- self._layer = hub._stack[-1]

-

- def __enter__(self):

- # type: () -> Scope

- scope = self._layer[1]

- assert scope is not None

- return scope

-

- def __exit__(self, exc_type, exc_value, tb):

- # type: (Any, Any, Any) -> None

- current_len = len(self._hub._stack)

- if current_len < self._original_len:

- logger.error(

- "Scope popped too soon. Popped %s scopes too many.",

- self._original_len - current_len,

- )

- return

- elif current_len > self._original_len:

- logger.warning(

- "Leaked %s scopes: %s",

- current_len - self._original_len,

- self._hub._stack[self._original_len :],

- )

-

- layer = self._hub._stack[self._original_len - 1]

- del self._hub._stack[self._original_len - 1 :]

-

- if layer[1] != self._layer[1]:

- logger.error(

- "Wrong scope found. Meant to pop %s, but popped %s.",

- layer[1],

- self._layer[1],

- )

- elif layer[0] != self._layer[0]:

- warning = (

- "init() called inside of pushed scope. This might be entirely "

- "legitimate but usually occurs when initializing the SDK inside "

- "a request handler or task/job function. Try to initialize the "

- "SDK as early as possible instead."

- )

- logger.warning(warning)

-

-

-class Hub(with_metaclass(HubMeta)): # type: ignore

- """The hub wraps the concurrency management of the SDK. Each thread has

- its own hub but the hub might transfer with the flow of execution if

- context vars are available.

-

- If the hub is used with a with statement it's temporarily activated.

- """

-

- _stack = None # type: List[Tuple[Optional[Client], Scope]]

-

- # Mypy doesn't pick up on the metaclass.

-

- if MYPY:

- current = None # type: Hub

- main = None # type: Hub

-

- def __init__(

- self,

- client_or_hub=None, # type: Optional[Union[Hub, Client]]

- scope=None, # type: Optional[Any]

- ):

- # type: (...) -> None

- if isinstance(client_or_hub, Hub):

- hub = client_or_hub

- client, other_scope = hub._stack[-1]

- if scope is None:

- scope = copy.copy(other_scope)

- else:

- client = client_or_hub

- if scope is None:

- scope = Scope()

-

- self._stack = [(client, scope)]

- self._last_event_id = None # type: Optional[str]

- self._old_hubs = [] # type: List[Hub]

-

- def __enter__(self):

- # type: () -> Hub

- self._old_hubs.append(Hub.current)

- _local.set(self)

- return self

-

- def __exit__(

- self,

- exc_type, # type: Optional[type]

- exc_value, # type: Optional[BaseException]

- tb, # type: Optional[Any]

- ):

- # type: (...) -> None

- old = self._old_hubs.pop()

- _local.set(old)

-

- def run(

- self, callback # type: Callable[[], T]

- ):

- # type: (...) -> T

- """Runs a callback in the context of the hub. Alternatively the

- with statement can be used on the hub directly.

- """

- with self:

- return callback()

-

- def get_integration(

- self, name_or_class # type: Union[str, Type[Integration]]

- ):

- # type: (...) -> Any

- """Returns the integration for this hub by name or class. If there

- is no client bound or the client does not have that integration

- then `None` is returned.

-

- If the return value is not `None` the hub is guaranteed to have a

- client attached.

- """

- if isinstance(name_or_class, str):

- integration_name = name_or_class

- elif name_or_class.identifier is not None:

- integration_name = name_or_class.identifier

- else:

- raise ValueError("Integration has no name")

-

- client = self.client

- if client is not None:

- rv = client.integrations.get(integration_name)

- if rv is not None:

- return rv

-

- @property

- def client(self):

- # type: () -> Optional[Client]

- """Returns the current client on the hub."""

- return self._stack[-1][0]

-

- @property

- def scope(self):

- # type: () -> Scope

- """Returns the current scope on the hub."""

- return self._stack[-1][1]

-

- def last_event_id(self):

- # type: () -> Optional[str]

- """Returns the last event ID."""

- return self._last_event_id

-

- def bind_client(

- self, new # type: Optional[Client]

- ):

- # type: (...) -> None

- """Binds a new client to the hub."""

- top = self._stack[-1]

- self._stack[-1] = (new, top[1])

-

- def capture_event(

- self,

- event, # type: Event

- hint=None, # type: Optional[Hint]

- scope=None, # type: Optional[Any]

- **scope_args # type: Any

- ):

- # type: (...) -> Optional[str]

- """Captures an event. Alias of :py:meth:`sentry_sdk.Client.capture_event`."""

- client, top_scope = self._stack[-1]

- scope = _update_scope(top_scope, scope, scope_args)

- if client is not None:

- is_transaction = event.get("type") == "transaction"

- rv = client.capture_event(event, hint, scope)

- if rv is not None and not is_transaction:

- self._last_event_id = rv

- return rv

- return None

-

- def capture_message(

- self,

- message, # type: str

- level=None, # type: Optional[str]

- scope=None, # type: Optional[Any]

- **scope_args # type: Any

- ):

- # type: (...) -> Optional[str]

- """Captures a message. The message is just a string. If no level

- is provided the default level is `info`.

-

- :returns: An `event_id` if the SDK decided to send the event (see :py:meth:`sentry_sdk.Client.capture_event`).

- """

- if self.client is None:

- return None

- if level is None:

- level = "info"

- return self.capture_event(

- {"message": message, "level": level}, scope=scope, **scope_args

- )

-

-    def capture_exception(

-        self,

-        error=None,  # type: Optional[Union[BaseException, ExcInfo]]

-        scope=None,  # type: Optional[Any]

-        **scope_args  # type: Any

-    ):

-        # type: (...) -> Optional[str]

-        """Captures an exception.

-

-        :param error: An exception to catch. If `None`, `sys.exc_info()` will be used.

-

-        :returns: An `event_id` if the SDK decided to send the event (see :py:meth:`sentry_sdk.Client.capture_event`).

-        """

-        client = self.client

-        if client is None:

-            return None

-        if error is not None:

-            exc_info = exc_info_from_error(error)

-        else:

-            exc_info = sys.exc_info()

-

-        event, hint = event_from_exception(exc_info, client_options=client.options)

-        try:

-            return self.capture_event(event, hint=hint, scope=scope, **scope_args)

-        except Exception:

-            self._capture_internal_exception(sys.exc_info())

-

-        return None

-

-    def _capture_internal_exception(

-        self, exc_info  # type: Any

-    ):

-        # type: (...) -> Any

-        """

-        Capture an exception that is likely caused by a bug in the SDK

-        itself.

-

-        These exceptions do not end up in Sentry and are just logged instead.

-        """

-        logger.error("Internal error in sentry_sdk", exc_info=exc_info)

-

-    def add_breadcrumb(

-        self,

-        crumb=None,  # type: Optional[Breadcrumb]

-        hint=None,  # type: Optional[BreadcrumbHint]

-        **kwargs  # type: Any

-    ):

-        # type: (...) -> None

-        """

-        Adds a breadcrumb.

-

-        :param crumb: Dictionary with the data as the sentry v7/v8 protocol expects.

-

-        :param hint: An optional value that can be used by `before_breadcrumb`

-            to customize the breadcrumbs that are emitted.

-        """

-        client, scope = self._stack[-1]

-        if client is None:

-            logger.info("Dropped breadcrumb because no client bound")

-            return

-

-        crumb = dict(crumb or ())  # type: Breadcrumb

-        crumb.update(kwargs)

-        if not crumb:

-            return

-

-        hint = dict(hint or ())  # type: Hint

-

-        if crumb.get("timestamp") is None:

-            crumb["timestamp"] = datetime.utcnow()

-        if crumb.get("type") is None:

-            crumb["type"] = "default"

-

-        if client.options["before_breadcrumb"] is not None:

-            new_crumb = client.options["before_breadcrumb"](crumb, hint)

-        else:

-            new_crumb = crumb

-

-        if new_crumb is not None:

-            scope._breadcrumbs.append(new_crumb)

-        else:

-            logger.info("before breadcrumb dropped breadcrumb (%s)", crumb)

-

-        max_breadcrumbs = client.options["max_breadcrumbs"]  # type: int

-        while len(scope._breadcrumbs) > max_breadcrumbs:

-            scope._breadcrumbs.popleft()

-

-    def start_span(

-        self,

-        span=None,  # type: Optional[Span]

-        **kwargs  # type: Any

-    ):

-        # type: (...) -> Span

-        """

-        Create and start timing a new span whose parent is the currently active

-        span or transaction, if any. The return value is a span instance,