From c905a3d7dabfc33e0a85b9b0626e137c912cd790 Mon Sep 17 00:00:00 2001

From: cooper-lzy <78672629+cooper-lzy@users.noreply.github.com>

Date: Mon, 13 Mar 2023 10:12:18 +0800

Subject: [PATCH] merge from 3.4.1

---

.github/workflows/deploy.yml | 8 +-

docs-2.0/1.introduction/3.vid.md | 2 +-

.../2.quick-start/1.quick-start-workflow.md | 37 +--

docs-2.0/20.appendix/history.md | 6 -

.../dashboard-comm-release-note.md | 71 ++++-

.../dashboard-ent-release-note.md | 8 -

.../release-notes/nebula-comm-release-note.md | 68 ++++-

.../release-notes/nebula-ent-release-note.md | 85 +++++-

.../keywords-and-reserved-words.md | 2 +-

.../10.tag-statements/1.create-tag.md | 2 +-

.../1.create-native-index.md | 2 +-

.../16.subgraph-and-path/1.get-subgraph.md | 14 -

.../2.user-defined-variables.md | 3 +-

docs-2.0/3.ngql-guide/5.operators/4.pipe.md | 2 +

.../6.functions-and-expressions/4.schema.md | 17 --

.../upgrade-nebula-ent-from-3.x-3.4.md | 7 +-

.../upgrade-nebula-from-300-to-latest.md | 5 +-

.../upgrade-nebula-graph-to-latest.md | 8 +-

.../1.configurations/.1.get-configurations.md | 36 +++

.../1.configurations/.5.console-config.md | 10 +

.../1.configurations/1.configurations.md | 26 --

.../1.configurations/2.meta-config.md | 92 +++----

.../1.configurations/3.graph-config.md | 164 ++++++-----

.../1.configurations/4.storage-config.md | 164 ++++++-----

.../enable_autofdo_for_nebulagraph.md | 46 ++--

docs-2.0/README.md | 17 ++

.../nebula-br-ent/2.install-tools.md | 2 +-

.../nebula-br/1.what-is-br.md | 2 +-

.../nebula-br/2.compile-br.md | 2 +-

.../graph-computing/algorithm-description.md | 8 -

docs-2.0/graph-computing/nebula-algorithm.md | 10 +-

docs-2.0/graph-computing/nebula-analytics.md | 3 +-

.../1.what-is-dashboard-ent.md | 1 -

.../nebula-dashboard/1.what-is-dashboard.md | 1 -

.../about-exchange/ex-ug-what-is-exchange.md | 5 +-

.../parameter-reference/ex-ug-parameter.md | 2 +-

.../use-exchange/ex-ug-export-from-nebula.md | 254 ++++--------------

.../ex-ug-import-from-clickhouse.md | 4 +-

.../use-exchange/ex-ug-import-from-csv.md | 4 +-

.../use-exchange/ex-ug-import-from-hbase.md | 4 +-

.../use-exchange/ex-ug-import-from-hive.md | 4 +-

.../use-exchange/ex-ug-import-from-jdbc.md | 4 +-

.../use-exchange/ex-ug-import-from-json.md | 4 +-

.../use-exchange/ex-ug-import-from-kafka.md | 4 +-

.../ex-ug-import-from-maxcompute.md | 4 +-

.../use-exchange/ex-ug-import-from-mysql.md | 4 +-

.../use-exchange/ex-ug-import-from-neo4j.md | 4 +-

.../use-exchange/ex-ug-import-from-oracle.md | 4 +-

.../use-exchange/ex-ug-import-from-orc.md | 4 +-

.../use-exchange/ex-ug-import-from-parquet.md | 4 +-

.../use-exchange/ex-ug-import-from-pulsar.md | 4 +-

.../use-exchange/ex-ug-import-from-sst.md | 4 +-

.../about-explorer/ex-ug-what-is-explorer.md | 1 -

.../workflow-api/workflow-api-overview.md | 4 +-

.../nebula-importer/config-with-header.md | 3 +-

.../nebula-importer/config-without-header.md | 3 +-

.../1.introduction-to-nebula-operator.md | 4 +-

.../10.backup-restore-using-operator.md | 230 ----------------

.../8.2.pv-reclaim.md | 6 +-

docs-2.0/nebula-spark-connector.md | 22 --

.../st-ug-what-is-graph-studio.md | 2 -

.../deploy-connect/st-ug-deploy.md | 2 +-

docs-2.0/stylesheets/extra.css | 10 +-

mkdocs.yml | 158 ++++++-----

64 files changed, 685 insertions(+), 1012 deletions(-)

create mode 100644 docs-2.0/5.configurations-and-logs/1.configurations/.1.get-configurations.md

create mode 100644 docs-2.0/5.configurations-and-logs/1.configurations/.5.console-config.md

delete mode 100644 docs-2.0/nebula-operator/10.backup-restore-using-operator.md

diff --git a/.github/workflows/deploy.yml b/.github/workflows/deploy.yml

index 5db8b42e9eb..59e16da6c14 100644

--- a/.github/workflows/deploy.yml

+++ b/.github/workflows/deploy.yml

@@ -3,7 +3,7 @@ on:

push:

branches:

# Remember to add v before the following version number unless the version is master.

- - v3.4.1

+ - master

jobs:

deploy:

@@ -11,7 +11,7 @@ jobs:

steps:

- uses: actions/checkout@v2

with:

- fetch-depth: 1 # fetch all commits/branches for gitversion

+ fetch-depth: 0 # fetch all commits/branches for gitversion

- name: Setup Python

uses: actions/setup-python@v1

@@ -29,8 +29,8 @@ jobs:

run: |

# mike delete master -p

git fetch origin gh-pages --depth=1 # fix mike's CI update

- mike deploy 3.4.1 -p --rebase

- mike set-default 3.4.1 -p --rebase

+ mike list

+ mike deploy master -p --rebase

mike list

# - name: Deploy

diff --git a/docs-2.0/1.introduction/3.vid.md b/docs-2.0/1.introduction/3.vid.md

index 56ff991f6bd..2c44a7dc076 100644

--- a/docs-2.0/1.introduction/3.vid.md

+++ b/docs-2.0/1.introduction/3.vid.md

@@ -1,6 +1,6 @@

# VID

-In a graph space, a vertex is uniquely identified by its ID, which is called a VID or a Vertex ID.

+In NebulaGraph, a vertex is uniquely identified by its ID, which is called a VID or a Vertex ID.

## Features

diff --git a/docs-2.0/2.quick-start/1.quick-start-workflow.md b/docs-2.0/2.quick-start/1.quick-start-workflow.md

index e8f358fcb3f..5c9b7f0cf49 100644

--- a/docs-2.0/2.quick-start/1.quick-start-workflow.md

+++ b/docs-2.0/2.quick-start/1.quick-start-workflow.md

@@ -1,39 +1,10 @@

-# Getting started with NebulaGraph

+# Quick start workflow

-This topic describes how to use NebulaGraph with Docker Desktop and on-premises deployment workflow to quickly get started with NebulaGraph.

+The quick start introduces the simplest workflow to use NebulaGraph, including deploying NebulaGraph, connecting to NebulaGraph, and doing basic CRUD.

-## Using NebulaGraph with Docker Desktop

+## Steps

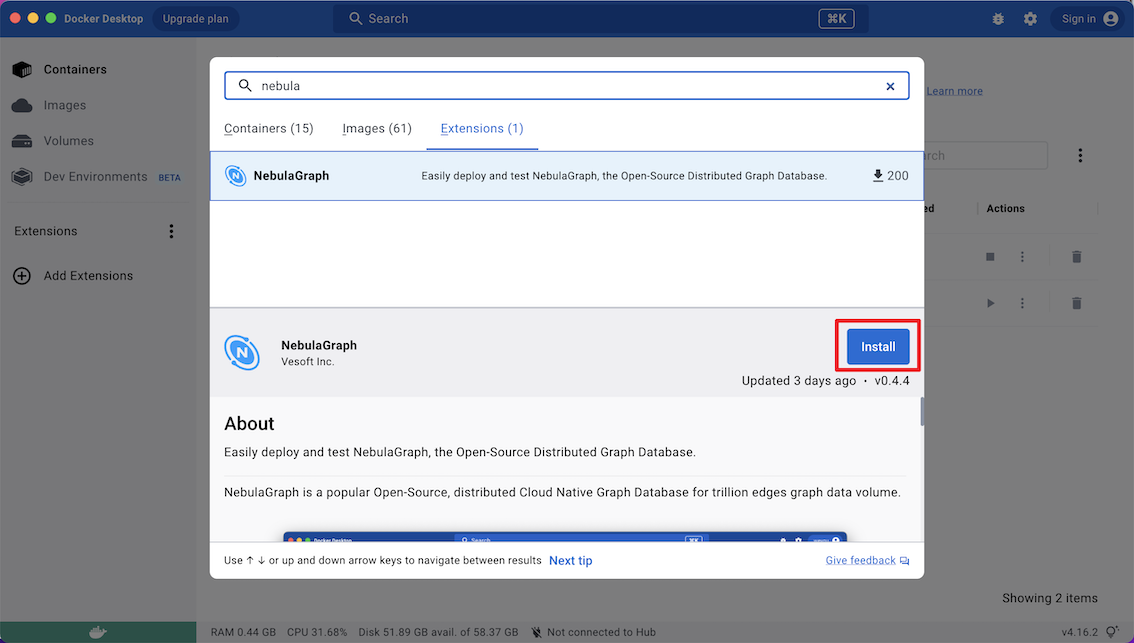

-NebulaGraph is available as a [Docker Extension](https://hub.docker.com/extensions/weygu/nebulagraph-dd-ext) that you can easily install and run on your Docker Desktop. You can quickly deploy NebulaGraph using Docker Desktop with just one click.

-

-1. Install Docker Desktop

-

- - [Install Docker Desktop on Mac](https://docs.docker.com/docker-for-mac/install/)

- - [Install Docker Desktop on Windows](https://docs.docker.com/docker-for-windows/install/)

-

- !!! caution

- To install Docker Desktop, you need to install [WSL 2](https://docs.docker.com/desktop/install/windows-install/#system-requirements) first.

-

-2. In the left sidebar of Docker Desktop, click **Extensions** or **Add Extensions**.

-3. On the Extensions Marketplace, search for NebulaGraph and click **Install**.

-

-

-

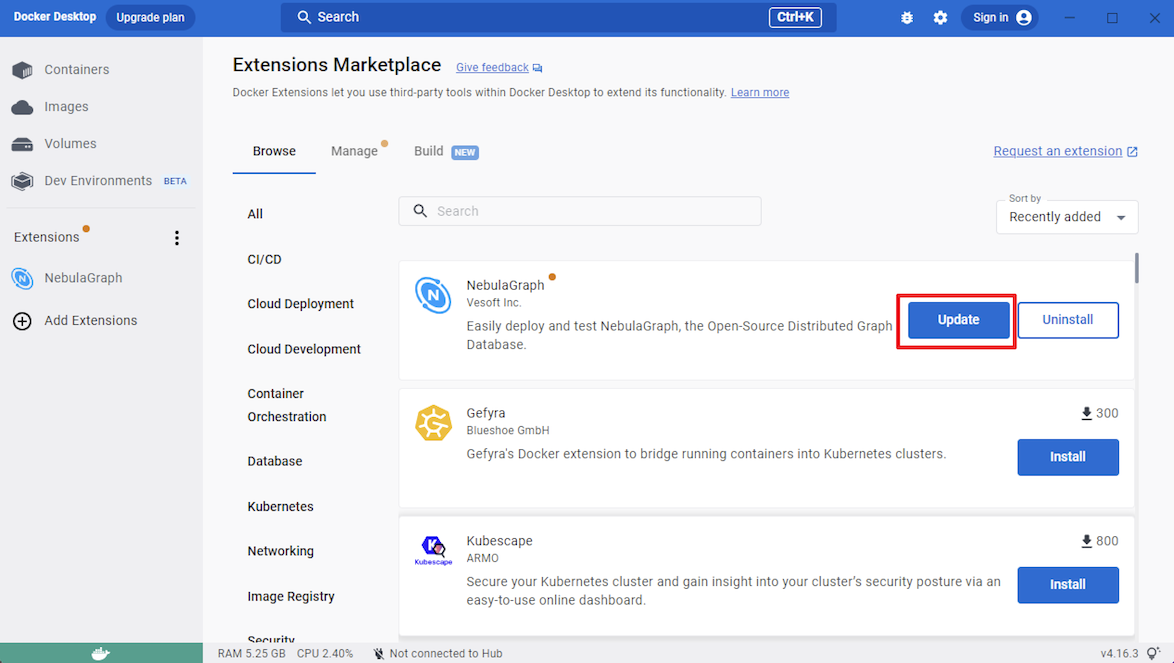

- Click **Update** to update NebulaGraph to the latest version when a new version is available.

-

-

-

-4. Click **Open** to navigate to the NebulaGraph extension page.

-

-5. At the top of the page, click **Studio in Browser** to use NebulaGraph.

-

-For more information about how to use NebulaGraph with Docker Desktop, see the following video:

-

-

-

-## Deploying NebulaGraph on-premises workflow

-

-The following workflow describes how to use NebulaGraph on-premises, including deploying NebulaGraph, connecting to NebulaGraph, and running basic CRUD.

+Users can quickly deploy and use NebulaGraph in the following steps.

1. [Deploy NebulaGraph](2.install-nebula-graph.md)

diff --git a/docs-2.0/20.appendix/history.md b/docs-2.0/20.appendix/history.md

index dbedd8eb996..9937ca5115c 100644

--- a/docs-2.0/20.appendix/history.md

+++ b/docs-2.0/20.appendix/history.md

@@ -36,9 +36,3 @@

9. 2022.2: NebulaGraph v3.0.0 was released.

10. 2022.4: NebulaGraph v3.1.0 was released.

-

-11. 2022.7: NebulaGraph v3.2.0 was released.

-

-12. 2022.10: NebulaGraph v3.3.0 was released.

-

-13. 2023.2: NebulaGraph v3.4.0 was released.

\ No newline at end of file

diff --git a/docs-2.0/20.appendix/release-notes/dashboard-comm-release-note.md b/docs-2.0/20.appendix/release-notes/dashboard-comm-release-note.md

index a1b2f26f601..c1af5b77117 100644

--- a/docs-2.0/20.appendix/release-notes/dashboard-comm-release-note.md

+++ b/docs-2.0/20.appendix/release-notes/dashboard-comm-release-note.md

@@ -1,12 +1,67 @@

# NebulaGraph Dashboard Community Edition {{ nebula.release }} release notes

-## Community Edition 3.4.0

+## Feature

-- Feature

- - Support the built-in [dashboard.service](../../nebula-dashboard/2.deploy-dashboard.md) script to manage the Dashboard services with one-click and view the Dashboard version.

- - Support viewing the configuration of Meta services.

+- Support [killing sessions](../../3.ngql-guide/17.query-tuning-statements/2.kill-session.md). [#5146](https://github.com/vesoft-inc/nebula/pull/5146)

+- Support [Memory Tracker](../../5.configurations-and-logs/1.configurations/4.storage-config.md) to optimize memory management. [#5082](https://github.com/vesoft-inc/nebula/pull/5082)

-- Enhancement

- - Adjust the directory structure and simplify the [deployment steps](../../nebula-dashboard/2.deploy-dashboard.md).

- - Display the names of the monitoring metrics on the overview page of `machine`.

- - Optimize the calculation of monitoring metrics such as `num_queries`, and adjust the display to time series aggregation.

+## Enhancement

+

+- Optimize job management. [#5212](https://github.com/vesoft-inc/nebula/pull/5212) [#5093](https://github.com/vesoft-inc/nebula/pull/5093) [#5099](https://github.com/vesoft-inc/nebula/pull/5099) [#4872](https://github.com/vesoft-inc/nebula/pull/4872)

+

+- Modify the default value of the Graph service parameter `session_reclaim_interval_secs` to 60 seconds. [#5246](https://github.com/vesoft-inc/nebula/pull/5246)

+

+- Adjust the default level of `stderrthreshold` in the configuration file. [#5188](https://github.com/vesoft-inc/nebula/pull/5188)

+

+- Optimize the full-text index. [#5077](https://github.com/vesoft-inc/nebula/pull/5077) [#4900](https://github.com/vesoft-inc/nebula/pull/4900) [#4925](https://github.com/vesoft-inc/nebula/pull/4925)

+

+- Limit the maximum depth of the plan tree in the optimizer to avoid stack overflows. [#5050](https://github.com/vesoft-inc/nebula/pull/5050)

+

+- Optimize the treatment scheme when the pattern expressions are used as predicates. [#4916](https://github.com/vesoft-inc/nebula/pull/4916)

+

+## Bugfix

+

+- Fix the bug about query plan generation and optimization. [#4863](https://github.com/vesoft-inc/nebula/pull/4863) [#4813](https://github.com/vesoft-inc/nebula/pull/4813)

+

+- Fix the bugs related to indexes:

+

+ - Full-text indexes [#5214](https://github.com/vesoft-inc/nebula/pull/5214) [#5260](https://github.com/vesoft-inc/nebula/pull/5260)

+ - String indexes [5126](https://github.com/vesoft-inc/nebula/pull/5126)

+

+- Fix the bugs related to query statements:

+

+ - Variables [#5192](https://github.com/vesoft-inc/nebula/pull/5192)

+ - Filter conditions and expressions [#4952](https://github.com/vesoft-inc/nebula/pull/4952) [#4893](https://github.com/vesoft-inc/nebula/pull/4893) [#4863](https://github.com/vesoft-inc/nebula/pull/4863)

+ - Properties of vertices or edges [#5230](https://github.com/vesoft-inc/nebula/pull/5230) [#4846](https://github.com/vesoft-inc/nebula/pull/4846) [#4841](https://github.com/vesoft-inc/nebula/pull/4841) [#5238](https://github.com/vesoft-inc/nebula/pull/5238)

+ - Functions and aggregations [#5135](https://github.com/vesoft-inc/nebula/pull/5135) [#5121](https://github.com/vesoft-inc/nebula/pull/5121) [#4884](https://github.com/vesoft-inc/nebula/pull/4884)

+ - Using illegal data types [#5242](https://github.com/vesoft-inc/nebula/pull/5242)

+ - Clauses and operators [#5241](https://github.com/vesoft-inc/nebula/pull/5241) [#4965](https://github.com/vesoft-inc/nebula/pull/4965)

+

+- Fix the bugs related to DDL and DML statements:

+

+ - ALTER TAG [#5105](https://github.com/vesoft-inc/nebula/pull/5105) [#5136](https://github.com/vesoft-inc/nebula/pull/5136)

+ - UPDATE [#4933](https://github.com/vesoft-inc/nebula/pull/4933)

+

+- Fix the bugs related to other functions:

+

+ - TTL [#4961](https://github.com/vesoft-inc/nebula/pull/4961)

+ - Authentication [#4885](https://github.com/vesoft-inc/nebula/pull/4885)

+ - Services [#4896](https://github.com/vesoft-inc/nebula/pull/4896)

+

+## Change

+

+- The added property name can not be the same as an existing or deleted property name, otherwise, the operation of adding a property fails. [#5130](https://github.com/vesoft-inc/nebula/pull/5130)

+- Limit the type conversion when modifying the schema. [#5098](https://github.com/vesoft-inc/nebula/pull/5098)

+- The default value must be specified when creating a property of type `NOT NULL`. [#5105](https://github.com/vesoft-inc/nebula/pull/5105)

+- Add the multithreaded query parameter `query_concurrently` to the configuration file with a default value of `true`. [#5119](https://github.com/vesoft-inc/nebula/pull/5119)

+- Remove the parameter `kv_separation` of the KV separation storage function from the configuration file, which is turned off by default. [#5119](https://github.com/vesoft-inc/nebula/pull/5119)

+- Modify the default value of `local_config` in the configuration file to `true`. [#5119](https://github.com/vesoft-inc/nebula/pull/5119)

+- Consistent use of `v.tag.property` to get property values, because it is necessary to specify the Tag. Using `v.property` to access the property of a Tag on `v` was incorrectly allowed in the previous version. [#5230](https://github.com/vesoft-inc/nebula/pull/5230)

+- Remove the column `HTTP port` from the command `SHOW HOSTS`. [#5056](https://github.com/vesoft-inc/nebula/pull/5056)

+- Disable the queries of the form `OPTIONAL MATCH WHERE `. [#5273](https://github.com/vesoft-inc/nebula/pull/5273)

+- Disable TOSS. [#5119](https://github.com/vesoft-inc/nebula/pull/5119)

+- Rename Listener's pid filename and log directory name. [#5119](https://github.com/vesoft-inc/nebula/pull/5119)

+

+## Legacy versions

+

+[Release notes of legacy versions](https://nebula-graph.io/posts/)

\ No newline at end of file

diff --git a/docs-2.0/20.appendix/release-notes/dashboard-ent-release-note.md b/docs-2.0/20.appendix/release-notes/dashboard-ent-release-note.md

index 17775f4fc66..2f41e8cb386 100644

--- a/docs-2.0/20.appendix/release-notes/dashboard-ent-release-note.md

+++ b/docs-2.0/20.appendix/release-notes/dashboard-ent-release-note.md

@@ -1,13 +1,5 @@

# NebulaGraph Dashboard Enterprise Edition release notes

-## Enterprise Edition 3.4.1

-

-- Bugfix

-

- - Fix the bug that the RPM package cannot execute `nebula-agent` due to permission issues.

- - Fix the bug that the cluster import information can not be viewed due to the `goconfig` folder permission.

- - Fix the page error when the license expiration time is less than `30` days and `gracePeriod` is greater than `0`.

-

## Enterprise Edition 3.4.0

- Feature

diff --git a/docs-2.0/20.appendix/release-notes/nebula-comm-release-note.md b/docs-2.0/20.appendix/release-notes/nebula-comm-release-note.md

index a486162f145..9f941d7c16e 100644

--- a/docs-2.0/20.appendix/release-notes/nebula-comm-release-note.md

+++ b/docs-2.0/20.appendix/release-notes/nebula-comm-release-note.md

@@ -1,15 +1,75 @@

# NebulaGraph {{ nebula.release }} release notes

+## Enhancement

+

+- Optimized the performance of k-hop. [#4560](https://github.com/vesoft-inc/nebula/pull/4560) [#4736](https://github.com/vesoft-inc/nebula/pull/4736) [#4566](https://github.com/vesoft-inc/nebula/pull/4566) [#4582](https://github.com/vesoft-inc/nebula/pull/4582) [#4558](https://github.com/vesoft-inc/nebula/pull/4558) [#4556](https://github.com/vesoft-inc/nebula/pull/4556) [#4555](https://github.com/vesoft-inc/nebula/pull/4555) [#4516](https://github.com/vesoft-inc/nebula/pull/4516) [#4531](https://github.com/vesoft-inc/nebula/pull/4531) [#4522](https://github.com/vesoft-inc/nebula/pull/4522) [#4754](https://github.com/vesoft-inc/nebula/pull/4754) [#4762](https://github.com/vesoft-inc/nebula/pull/4762)

+

+- Optimized `GO` statement join performance. [#4599](https://github.com/vesoft-inc/nebula/pull/4599) [#4750](https://github.com/vesoft-inc/nebula/pull/4750)

+

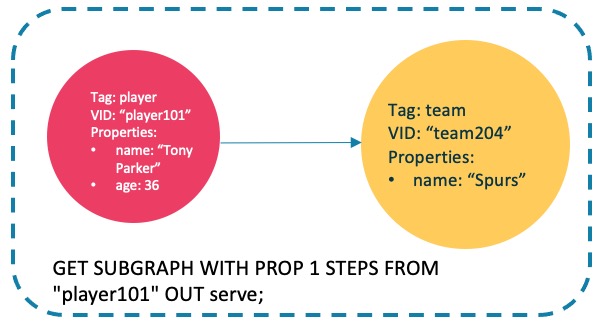

+- Supported using `GET SUBGRAPH` to filter vertices. [#4357](https://github.com/vesoft-inc/nebula/pull/4357)

+

+- Supported using `GetNeighbors` to filter vertices. [#4671](https://github.com/vesoft-inc/nebula/pull/4671)

+

+- Optimized the loop handling of `FIND SHORTEST PATH`. [#4672](https://github.com/vesoft-inc/nebula/pull/4672)

+

+- Supported the conversion between timestamp and date time. [#4626](https://github.com/vesoft-inc/nebula/pull/4526)

+

+- Supported the reference of local variable in pattern expressions. [#4498](https://github.com/vesoft-inc/nebula/pull/4498)

+

+- Optimized the job manager. [#4446](https://github.com/vesoft-inc/nebula/pull/4446) [#4442](https://github.com/vesoft-inc/nebula/pull/4442) [#4444](https://github.com/vesoft-inc/nebula/pull/4444) [#4460](https://github.com/vesoft-inc/nebula/pull/4460) [#4500](https://github.com/vesoft-inc/nebula/pull/4500) [#4633](https://github.com/vesoft-inc/nebula/pull/4633) [#4654](https://github.com/vesoft-inc/nebula/pull/4654) [#4663](https://github.com/vesoft-inc/nebula/pull/4663) [#4722](https://github.com/vesoft-inc/nebula/pull/4722) [#4742](https://github.com/vesoft-inc/nebula/pull/4742)

+

+- Added flags of experimental features, `enable_data_balance` for `BALANCE DATA`. [#4728](https://github.com/vesoft-inc/nebula/pull/4728)

+

+- Stats log print to console when the process is started. [#4550](https://github.com/vesoft-inc/nebula/pull/4550)

+

+- Supported the `JSON_EXTRACT` function. [#4743](https://github.com/vesoft-inc/nebula/pull/4743)

+

## Bugfix

-- Fix the crash caused by encoding parameter expressions to the storage layer for execution. [#5336](https://github.com/vesoft-inc/nebula/pull/5336)

+- Fixed the crash of variable types collected. [#4724](https://github.com/vesoft-inc/nebula/pull/4724)

-- Fix some crashes for the list function. [#5383](https://github.com/vesoft-inc/nebula/pull/5383)

+- Fixed the crash in the optimization phase of multiple `MATCH`. [#4780](https://github.com/vesoft-inc/nebula/pull/4780)

-## Legacy versions

+- Fixed the bug of aggregate expression type deduce. [#4706](https://github.com/vesoft-inc/nebula/pull/4706)

-[Release notes of legacy versions](https://nebula-graph.io/posts/)

+- Fixed the incorrect result of the `OPTIONAL MATCH` statement. [#4670](https://github.com/vesoft-inc/nebula/pull/4670)

+

+- Fixed the bug of parameter expression in the `LOOKUP` statement. [#4664](https://github.com/vesoft-inc/nebula/pull/4664)

+

+- Fixed the bug that `YIELD DISTINCT` returned a distinct result set in the `LOOKUP` statement. [#4651](https://github.com/vesoft-inc/nebula/pull/4651)

+- Fixed the bug that `ColumnExpression` encode and decode are not matched. [#4413](https://github.com/vesoft-inc/nebula/pull/4413)

+- Fixed the bug that `id($$)` filter was incorrect in the `GO` statement. [#4768](https://github.com/vesoft-inc/nebula/pull/4768)

+- Fixed the bug that full scan of `MATCH` statement when there is a relational `In` predicate. [#4748](https://github.com/vesoft-inc/nebula/pull/4748)

+- Fixed the optimizer error of `MATCH` statement.[#4771](https://github.com/vesoft-inc/nebula/pull/4771)

+

+- Fixed wrong output when using `pattern` expression as the filter in `MATCH` statement. [#4778](https://github.com/vesoft-inc/nebula/pull/4778)

+

+- Fixed the bug that tag, edge, tag index and edge index display incorrectly. [#4616](https://github.com/vesoft-inc/nebula/pull/4616)

+

+- Fixed the bug of date time format. [#4524](https://github.com/vesoft-inc/nebula/pull/4524)

+

+- Fixed the bug that the return value of the date time vertex was changed. [#4448](https://github.com/vesoft-inc/nebula/pull/4448)

+

+- Fixed the bug that the startup service failed when the log directory not existed and `enable_breakpad` was enabled. [#4623](https://github.com/vesoft-inc/nebula/pull/4623)

+

+- Fixed the bug that after the metad stopped, the status remained online. [#4610](https://github.com/vesoft-inc/nebula/pull/4610)

+

+- Fixed the corruption of the log file. [#4409](https://github.com/vesoft-inc/nebula/pull/4409)

+

+- Fixed the bug that `ENABLE_CCACHE` option didn't work. [#4648](https://github.com/vesoft-inc/nebula/pull/4648)

+

+- Abandoned uppercase letters in full-text index names. [#4628](https://github.com/vesoft-inc/nebula/pull/4628)

+

+- Disable `COUNT(DISTINCT *)` . [#4553](https://github.com/vesoft-inc/nebula/pull/4553)

+

+### Change

+

+- Vertices without tags are not supported by default. If you want to use the vertex without tags, add `--graph_use_vertex_key=true` to the configuration files (`nebula-graphd.conf`) of all Graph services in the cluster, add `--use_vertex_key=true` to the configuration files (`nebula-storaged.conf`) of all Storage services in the cluster. [#4629](https://github.com/vesoft-inc/nebula/pull/4629)

+

+## Legacy versions

+

+[Release notes of legacy versions](https://nebula-graph.io/posts/)

diff --git a/docs-2.0/20.appendix/release-notes/nebula-ent-release-note.md b/docs-2.0/20.appendix/release-notes/nebula-ent-release-note.md

index 6f1ffeb76e2..9fbbd11a97d 100644

--- a/docs-2.0/20.appendix/release-notes/nebula-ent-release-note.md

+++ b/docs-2.0/20.appendix/release-notes/nebula-ent-release-note.md

@@ -1,11 +1,90 @@

# NebulaGraph {{ nebula.release }} release notes

+## Feature

+

+- Support [incremental backup](../../backup-and-restore/nebula-br-ent/1.br-ent-overview.md).

+- Support [fine-grained permission management]((../../7.data-security/1.authentication/3.role-list.md)) at the Tag/Edge type level.

+- Support [killing sessions](../../3.ngql-guide/17.query-tuning-statements/2.kill-session.md).

+- Support [Memory Tracker](../../5.configurations-and-logs/1.configurations/4.storage-config.md) to optimize memory management.

+- Support [black-box monitoring](../../6.monitor-and-metrics/3.bbox/3.1.bbox.md).

+- Support function [json_extract](../../3.ngql-guide/6.functions-and-expressions/2.string.md).

+- Support function [extract](../../3.ngql-guide/6.functions-and-expressions/2.string.md).

+

+## Enhancement

+

+- Support using `GET SUBGRAPH` to filter vertices.

+- Support using `GetNeighbors` to filter vertices.

+- Support the conversion between timestamp and date time.

+- Support the reference of local variable in pattern expressions.

+- Optimize job management.

+- Optimize the full-text index.

+- Optimize the treatment scheme when the pattern expressions are used as predicates.

+- Optimize the join performance of the GO statement.

+- Optimize the performance of k-hop.

+- Optimize the performance of the shortest path query.

+- Optimize the push-down of the filtering of the vertex property.

+- Optimize the push-down of the edge filtering.

+- Optimize the loop conditions of the subgraph query.

+- Optimize the rules of the property cropping.

+- Remove the invalid `Project` operators.

+- Remove the invalid `AppendVertices` operators.

+- Reduce the amount of data replication for connection operations.

+- Reduce the amount of data replication for `Traverse` and `AppendVertices` operators.

+- Modify the default value of the Graph service parameter `session_reclaim_interval_secs` to 60 seconds.

+- Adjust the default level of `stderrthreshold` in the configuration file.

+- Get the property values by subscript to reduce the time of property query.

+- Limit the maximum depth of the plan tree in the optimizer to avoid stack overflows.

+

## Bugfix

-- Fix the crash caused by encoding parameter expressions to the storage layer for execution.

+- Fix the bug about query plan generation and optimization.

+

+- Fix the bugs related to indexes:

+

+ - Full-text indexes

+ - String indexes

+

+- Fix the bugs related to query statements:

+

+ - Variables

+ - Filter conditions and expressions

+ - Properties of vertices or edges

+ - parameters

+ - Functions and aggregations

+ - Using illegal data types

+ - Time zone, date, time, etc

+ - Clauses and operators

+

+- Fix the bugs related to DDL and DML statements:

+

+ - ALTER TAG

+ - UPDATE

+

+- Fix the bugs related to other functions:

+

+ - TTL

+ - Synchronization

+ - Authentication

+ - Services

+ - Logs

+ - Monitoring and statistics

+

+## Change

-- Fix some crashes for the list function.

+- If you want to upgrade NebulaGraph from version 3.1 to 3.4, please follow the instructions in the [upgrade document](../../4.deployment-and-installation/3.upgrade-nebula-graph/upgrade-nebula-ent-from-3.x-3.4.md).

+- The added property name can not be the same as an existing or deleted property name, otherwise, the operation of adding a property fails.

+- Limit the type conversion when modifying the schema.

+- The default value must be specified when creating a property of type `NOT NULL`.

+- Add the multithreaded query parameter `query_concurrently` to the configuration file with a default value of `true`.

+- Remove the parameter `kv_separation` of the KV separation storage function from the configuration file, which is turned off by default.

+- Modify the default value of `local_config` in the configuration file to `true`.

+- Consistent use of `v.tag.property` to get property values, because it is necessary to specify the Tag. Using `v.property` to access the property of a Tag on `v` was incorrectly allowed in the previous version.

+- Remove the column `HTTP port` from the command `SHOW HOSTS`.

+- Disable the queries of the form `OPTIONAL MATCH WHERE `.

+- Disable the functions of the form `COUNT(DISTINCT *)`.

+- Disable TOSS.

+- Rename Listener's pid filename and log directory name.

## Legacy versions

-[Release notes of legacy versions](https://www.nebula-graph.io/tags/release-notes)

+[Release notes of legacy versions](https://nebula-graph.io/posts/)

diff --git a/docs-2.0/3.ngql-guide/1.nGQL-overview/keywords-and-reserved-words.md b/docs-2.0/3.ngql-guide/1.nGQL-overview/keywords-and-reserved-words.md

index 578e92e3a15..7c82add3a0e 100644

--- a/docs-2.0/3.ngql-guide/1.nGQL-overview/keywords-and-reserved-words.md

+++ b/docs-2.0/3.ngql-guide/1.nGQL-overview/keywords-and-reserved-words.md

@@ -4,7 +4,7 @@ Keywords have significance in nGQL. It can be classified into reserved keywords

If you must use keywords in schema:

-- Non-reserved keywords can be used as identifiers without quotes if they are all in lowercase. However, if a non-reserved keyword contains any uppercase letters when used as an identifier, it must be enclosed in backticks (\`), for example, \`Comment\`.

+- Non-reserved keywords are permitted as identifiers without quoting.

- To use special characters or reserved keywords as identifiers, quote them with backticks such as `AND`.

diff --git a/docs-2.0/3.ngql-guide/10.tag-statements/1.create-tag.md b/docs-2.0/3.ngql-guide/10.tag-statements/1.create-tag.md

index 7c3f3baf959..3b0cfe7d1cd 100644

--- a/docs-2.0/3.ngql-guide/10.tag-statements/1.create-tag.md

+++ b/docs-2.0/3.ngql-guide/10.tag-statements/1.create-tag.md

@@ -31,7 +31,7 @@ CREATE TAG [IF NOT EXISTS]

|Parameter|Description|

|:---|:---|

|`IF NOT EXISTS`|Detects if the tag that you want to create exists. If it does not exist, a new one will be created. The tag existence detection here only compares the tag names (excluding properties).|

-|``|1. The tag name must be **unique** in a graph space.

2. Once the tag name is set, it can not be altered.

3. The name of the tag supports 1 to 4 bytes UTF-8 encoded characters, such as English letters (case-sensitive), digits, and Chinese characters, but does not support special characters except underscores. To use special characters (the period character (.) is excluded) or reserved keywords as identifiers, quote them with backticks. For more information, see [Keywords and reserved words](../../3.ngql-guide/1.nGQL-overview/keywords-and-reserved-words.md).|

+|``|1. The tag name must be **unique** in a graph space.

2. Once the tag name is set, it can not be altered.

3. The name of the tag starts with a letter, supports 1 to 4 bytes UTF-8 encoded characters, such as English letters (case-sensitive), digits, and Chinese characters, but does not support special characters except underscores. To use special characters (the period character (.) is excluded) or reserved keywords as identifiers, quote them with backticks. For more information, see [Keywords and reserved words](../../3.ngql-guide/1.nGQL-overview/keywords-and-reserved-words.md).|

|``|The name of the property. It must be unique for each tag. The rules for permitted property names are the same as those for tag names.|

|``|Shows the data type of each property. For a full description of the property data types, see [Data types](../3.data-types/1.numeric.md) and [Boolean](../3.data-types/2.boolean.md).|

|`NULL \| NOT NULL`|Specifies if the property supports `NULL | NOT NULL`. The default value is `NULL`. `DEFAULT` must be specified if `NOT NULL` is set.|

diff --git a/docs-2.0/3.ngql-guide/14.native-index-statements/1.create-native-index.md b/docs-2.0/3.ngql-guide/14.native-index-statements/1.create-native-index.md

index ae4fa2ed47c..82a5d2f610d 100644

--- a/docs-2.0/3.ngql-guide/14.native-index-statements/1.create-native-index.md

+++ b/docs-2.0/3.ngql-guide/14.native-index-statements/1.create-native-index.md

@@ -16,7 +16,7 @@ You can use `CREATE INDEX` to add native indexes for the existing tags, edge typ

- Property indexes apply to property-based queries. For example, you can use the `age` property to retrieve the VID of all vertices that meet `age == 19`.

-If a property index `i_TA` is created for the property `A` of the tag `T` and `i_T` for the tag `T`, the indexes can be replaced as follows (the same for edge type indexes):

+If a property index `i_TA` is created for the property `A` of the tag `T`, the indexes can be replaced as follows (the same for edge type indexes):

- The query engine can use `i_TA` to replace `i_T`.

diff --git a/docs-2.0/3.ngql-guide/16.subgraph-and-path/1.get-subgraph.md b/docs-2.0/3.ngql-guide/16.subgraph-and-path/1.get-subgraph.md

index f26f24a0997..82eb136c934 100644

--- a/docs-2.0/3.ngql-guide/16.subgraph-and-path/1.get-subgraph.md

+++ b/docs-2.0/3.ngql-guide/16.subgraph-and-path/1.get-subgraph.md

@@ -109,20 +109,6 @@ nebula> INSERT EDGE serve(start_year, end_year) VALUES "player101" -> "team204":

The returned subgraph is as follows.

-

- * This example goes two steps from the vertex `player101` over `follow` edges, filters by degree > 90 and age > 30, and shows the properties of edges.

-

- ```ngql

- nebula> GET SUBGRAPH WITH PROP 2 STEPS FROM "player101" \

- WHERE follow.degree > 90 AND $$.player.age > 30 \

- YIELD VERTICES AS nodes, EDGES AS relationships;

- +-------------------------------------------------------+------------------------------------------------------+

- | nodes | relationships |

- +-------------------------------------------------------+------------------------------------------------------+

- | [("player101" :player{age: 36, name: "Tony Parker"})] | [[:follow "player101"->"player100" @0 {degree: 95}]] |

- | [("player100" :player{age: 42, name: "Tim Duncan"})] | [] |

- +-------------------------------------------------------+------------------------------------------------------+

- ```

## FAQ

diff --git a/docs-2.0/3.ngql-guide/4.variable-and-composite-queries/2.user-defined-variables.md b/docs-2.0/3.ngql-guide/4.variable-and-composite-queries/2.user-defined-variables.md

index aeef106bf1f..b80905d6d48 100644

--- a/docs-2.0/3.ngql-guide/4.variable-and-composite-queries/2.user-defined-variables.md

+++ b/docs-2.0/3.ngql-guide/4.variable-and-composite-queries/2.user-defined-variables.md

@@ -31,8 +31,7 @@ You can use user-defined variables in composite queries. Details about composite

!!! note

- - User-defined variables are case-sensitive.

- - To define a user-defined variable in a compound statement, end the statement with a semicolon (;). For details, please refer to the [nGQL Style Guide](../../3.ngql-guide/1.nGQL-overview/ngql-style-guide.md).

+ User-defined variables are case-sensitive.

## Example

diff --git a/docs-2.0/3.ngql-guide/5.operators/4.pipe.md b/docs-2.0/3.ngql-guide/5.operators/4.pipe.md

index 8bd8c691714..7621f6199b6 100644

--- a/docs-2.0/3.ngql-guide/5.operators/4.pipe.md

+++ b/docs-2.0/3.ngql-guide/5.operators/4.pipe.md

@@ -31,6 +31,8 @@ nebula> GO FROM "player100" OVER follow \

+-------------+

```

+If there is no `YIELD` clause to define the output, the destination vertex ID is returned by default. If a YIELD clause is applied, the output is defined by the YIELD clause.

+

Users must define aliases in the `YIELD` clause for the reference operator `$-` to use, just like `$-.dstid` in the preceding example.

## Performance tips

diff --git a/docs-2.0/3.ngql-guide/6.functions-and-expressions/4.schema.md b/docs-2.0/3.ngql-guide/6.functions-and-expressions/4.schema.md

index e6dd6f69523..89a1c26d84f 100644

--- a/docs-2.0/3.ngql-guide/6.functions-and-expressions/4.schema.md

+++ b/docs-2.0/3.ngql-guide/6.functions-and-expressions/4.schema.md

@@ -51,19 +51,6 @@ nebula> LOOKUP ON player WHERE player.age > 45 \

+-------------------------------------+

```

-You can also use the property reference symbols (`$^` and `$$`) instead of the `vertex` field in the `properties()` function to get all properties of a vertex.

-

-- `$^` represents the data of the starting vertex at the beginning of exploration. For example, in `GO FROM "player100" OVER follow reversely YIELD properties($^)`, `$^` refers to the vertex `player100`.

-

-- `$$` represents the data of the end vertex at the end of exploration.

-

-`properties($^)` and `properties($$)` are generally used in `GO` statements. For more information, see [Property reference](../5.operators/5.property-reference.md).

-

-!!! caution

-

- You can use `properties().` to get a specific property of a vertex. However, it is not recommended to use this method to obtain specific properties because the `properties()` function returns all properties, which can decrease query performance.

-

-

### properties(edge)

properties(edge) returns the properties of an edge.

@@ -85,10 +72,6 @@ nebula> GO FROM "player100" OVER follow \

+------------------+

```

-!!! caution

-

- You can use `properties(edge).` to get a specific property of an edge. However, it is not recommended to use this method to obtain specific properties because the `properties(edge)` function returns all properties, which can decrease query performance.

-

### type(edge)

type(edge) returns the edge type of an edge.

diff --git a/docs-2.0/4.deployment-and-installation/3.upgrade-nebula-graph/upgrade-nebula-ent-from-3.x-3.4.md b/docs-2.0/4.deployment-and-installation/3.upgrade-nebula-graph/upgrade-nebula-ent-from-3.x-3.4.md

index c1571d155d7..6affdf04794 100644

--- a/docs-2.0/4.deployment-and-installation/3.upgrade-nebula-graph/upgrade-nebula-ent-from-3.x-3.4.md

+++ b/docs-2.0/4.deployment-and-installation/3.upgrade-nebula-graph/upgrade-nebula-ent-from-3.x-3.4.md

@@ -4,17 +4,12 @@ This topic takes the enterprise edition of NebulaGraph v3.1.0 as an example and

## Notes

-- This upgrade is only applicable for upgrading the enterprise edition of NebulaGraph v3.x to v3.4.0. If your version is below 3.0.0, please upgrade to enterprise edition 3.1.0 before upgrading to v3.4.0. For details, see [Upgrade NebulaGraph Enterprise Edition 2.x to 3.1.0](https://docs.nebula-graph.io/3.1.0/4.deployment-and-installation/3.upgrade-nebula-graph/upgrade-nebula-graph-to-latest/).

+- This upgrade is only applicable for upgrading the enterprise edition of NebulaGraph v3.x to v3.4.0. If your version is below 3.0.0, please upgrade to enterprise edition 3.x before upgrading to v3.4.0. For details, see [Upgrade NebulaGraph Enterprise Edition 2.x to 3.1.0](https://docs.nebula-graph.io/3.1.0/4.deployment-and-installation/3.upgrade-nebula-graph/upgrade-nebula-graph-to-latest/).

- The IP address of the machine performing the upgrade operation must be the same as the original machine.

- The remaining disk space on the machine must be at least 1.5 times the size of the original data directory.

-- Before upgrading a NebulaGraph cluster with full-text indexes deployed, you must manually delete the full-text indexes in Elasticsearch, and then run the `SIGN IN` command to log into ES and recreate the indexes after the upgrade is complete.

-

- !!! note

-

- To manually delete the full-text indexes in Elasticsearch, you can use the curl command `curl -XDELETE -u : ':/'`, for example, `curl -XDELETE -u elastic:elastic 'http://192.168.8.223:9200/nebula_index_2534'`. If no username and password are set for Elasticsearch, you can omit the `-u :` part.

## Steps

diff --git a/docs-2.0/4.deployment-and-installation/3.upgrade-nebula-graph/upgrade-nebula-from-300-to-latest.md b/docs-2.0/4.deployment-and-installation/3.upgrade-nebula-graph/upgrade-nebula-from-300-to-latest.md

index 29ed7ff7a2b..40eeb6cee45 100644

--- a/docs-2.0/4.deployment-and-installation/3.upgrade-nebula-graph/upgrade-nebula-from-300-to-latest.md

+++ b/docs-2.0/4.deployment-and-installation/3.upgrade-nebula-graph/upgrade-nebula-from-300-to-latest.md

@@ -2,13 +2,10 @@

To upgrade NebulaGraph v3.x to v{{nebula.release}}, you only need to use the RPM/DEB package of v{{nebula.release}} for the upgrade, or [compile it](../2.compile-and-install-nebula-graph/1.install-nebula-graph-by-compiling-the-source-code.md) and then reinstall.

-!!! caution

-

- Before upgrading a NebulaGraph cluster with full-text indexes deployed, you must manually delete the full-text indexes in Elasticsearch, and then run the `SIGN IN` command to log into ES and recreate the indexes after the upgrade is complete. To manually delete the full-text indexes in Elasticsearch, you can use the curl command `curl -XDELETE -u : ':/'`, for example, `curl -XDELETE -u elastic:elastic 'http://192.168.8.223:9200/nebula_index_2534'`. If no username and password are set for Elasticsearch, you can omit the `-u :` part.

## Upgrade steps with RPM/DEB packages

-1. Download the [RPM/DEB package](https://www.nebula-graph.io/download).

+1. Download the [RPM/DEB package](https://github.com/vesoft-inc/nebula-graph/releases/tag/v{{nebula.release}}).

2. Stop all NebulaGraph services. For details, see [Manage NebulaGraph Service](../../2.quick-start/5.start-stop-service.md). It is recommended to back up the configuration file before updating.

diff --git a/docs-2.0/4.deployment-and-installation/3.upgrade-nebula-graph/upgrade-nebula-graph-to-latest.md b/docs-2.0/4.deployment-and-installation/3.upgrade-nebula-graph/upgrade-nebula-graph-to-latest.md

index 147480e4c0b..98045084670 100644

--- a/docs-2.0/4.deployment-and-installation/3.upgrade-nebula-graph/upgrade-nebula-graph-to-latest.md

+++ b/docs-2.0/4.deployment-and-installation/3.upgrade-nebula-graph/upgrade-nebula-graph-to-latest.md

@@ -4,11 +4,11 @@ This topic describes how to upgrade NebulaGraph from version 2.x to {{nebula.rel

## Applicable source versions

-This topic applies to upgrading NebulaGraph from 2.5.0 and later 2.x versions to {{nebula.release}}. It does not apply to historical versions earlier than 2.5.0, including the 1.x versions.

+This topic applies to upgrading NebulaGraph from 2.0.0 and later 2.x versions to {{nebula.release}}. It does not apply to historical versions earlier than 2.0.0, including the 1.x versions.

To upgrade NebulaGraph from historical versions to {{nebula.release}}:

-1. Upgrade it to the latest 2.5 version according to the docs of that version.

+1. Upgrade it to the latest 2.x version according to the docs of that version.

2. Follow this topic to upgrade it to {{nebula.release}}.

!!! caution

@@ -63,10 +63,6 @@ To upgrade NebulaGraph from historical versions to {{nebula.release}}:

- It is required to specify a tag to query properties of a vertex in a `MATCH` statement. For example, from `return v.name` to `return v.player.name`.

-- Full-text indexes

-

- Before upgrading a NebulaGraph cluster with full-text indexes deployed, you must manually delete the full-text indexes in Elasticsearch, and then run the `SIGN IN` command to log into ES and recreate the indexes after the upgrade is complete. To manually delete the full-text indexes in Elasticsearch, you can use the curl command `curl -XDELETE -u : ':/'`, for example, `curl -XDELETE -u elastic:elastic 'http://192.168.8.xxx:9200/nebula_index_2534'`. If no username and password are set for Elasticsearch, you can omit the `-u :` part.

-

!!! caution

There may be other undiscovered influences. Before the upgrade, we recommend that you read the release notes and user manual carefully, and keep an eye on the [posts](https://github.com/vesoft-inc/nebula/discussions) on the forum and [issues](https://github.com/vesoft-inc/nebula/issues) on Github.

diff --git a/docs-2.0/5.configurations-and-logs/1.configurations/.1.get-configurations.md b/docs-2.0/5.configurations-and-logs/1.configurations/.1.get-configurations.md

new file mode 100644

index 00000000000..f49843221fd

--- /dev/null

+++ b/docs-2.0/5.configurations-and-logs/1.configurations/.1.get-configurations.md

@@ -0,0 +1,36 @@

+# Get configurations

+

+This document gives some methods to get configurations in NebulaGraph.

+

+!!! note

+

+ You must use ONLY ONE method in one cluster. To avoid errors, we suggest that you get configurations from local.

+

+## Get configurations from local

+

+Add `--local_config=true` to the top of each configuration file (the default path is `/usr/local/nebula/etc/`). Restart all the NebulaGraph services to make your modifications take effect. We suggest that new users use this method.

+

+## Get configuration from Meta Service

+

+To get configuration from Meta Service, set the `--local_config` parameter to `false` or use the default configuration files.

+

+When the services are started for the first time, NebulaGraph reads the configurations from local and then persists them in the Meta Service. Once the Meta Service is persisted, NebulaGraph reads configurations only from the Meta Service, even you restart NebulaGraph.

+

+## FAQ

+

+## How to modify configurations

+

+You can modify NebulaGraph configurations by using these methods:

+

+- Modify configurations by using `UPDATE CONFIG`. For more information see UPDATE CONFIG (doc TODO).

+- Modify configurations by configuring the configuration files. For more information, see [Get configuration from local](#get_configuration_from_local).

+

+## What is the configuration priority and how to modify it

+

+The **default** configuration reading precedence is Meta Service > `UPDATE CONFIG`> configuration files.

+

+When `--local_config` is set to `true`, the configuration reading precedence is configuration files > Meta Service.

+

+!!! danger

+

+ Don't use `UPDATE CONFIG` to update configurations when `--local_config` is set to `true`.

diff --git a/docs-2.0/5.configurations-and-logs/1.configurations/.5.console-config.md b/docs-2.0/5.configurations-and-logs/1.configurations/.5.console-config.md

new file mode 100644

index 00000000000..8889872658f

--- /dev/null

+++ b/docs-2.0/5.configurations-and-logs/1.configurations/.5.console-config.md

@@ -0,0 +1,10 @@

+

diff --git a/docs-2.0/5.configurations-and-logs/1.configurations/1.configurations.md b/docs-2.0/5.configurations-and-logs/1.configurations/1.configurations.md

index f42fe2bfab0..dbf32455204 100644

--- a/docs-2.0/5.configurations-and-logs/1.configurations/1.configurations.md

+++ b/docs-2.0/5.configurations-and-logs/1.configurations/1.configurations.md

@@ -104,14 +104,6 @@ For clusters installed with Kubectl through NebulaGraph Operator, the configurat

## Modify configurations

-You can modify the configurations of NebulaGraph in the configuration file or use commands to dynamically modify configurations.

-

-!!! caution

-

- Using both methods to modify the configuration can cause the configuration information to be managed inconsistently, which may result in confusion. It is recommended to only use the configuration file to manage the configuration, or to make the same modifications to the configuration file after dynamically updating the configuration through commands to ensure consistency.

-

-### Modifying configurations in the configuration file

-

By default, each NebulaGraph service gets configured from its configuration files. You can modify configurations and make them valid according to the following steps:

* For clusters installed from source, with a RPM/DEB, or a TAR package

@@ -128,21 +120,3 @@ By default, each NebulaGraph service gets configured from its configuration file

* For clusters installed with Kubectl

For details, see [Customize configuration parameters for a NebulaGraph cluster](../../nebula-operator/8.custom-cluster-configurations/8.1.custom-conf-parameter.md).

-

-### Dynamically modifying configurations using command

-

-You can dynamically modify the configuration of NebulaGraph by using the curl command. For example, to modify the `wal_ttl` parameter of the Storage service to `600`, use the following command:

-

-```bash

-curl -X PUT -H "Content-Type: application/json" -d'{"wal_ttl":"600"}' -s "http://192.168.15.6:19779/flags"

-```

-

-In this command, ` {"wal_ttl":"600"}` specifies the configuration parameter and its value to be modified, and `192.168.15.6:19779` specifies the IP address and HTTP port number of the Storage service.

-

-!!! caution

-

- - The functionality of dynamically modifying configurations is only applicable to prototype verification and testing environments. It is not recommended to use this feature in production environments. This is because when the `local_config` value is set to `true`, the dynamically modified configuration is not persisted, and the configuration will be restored to the initial configuration after the service is restarted.

-

- - Only **part of** the configuration parameters can be dynamically modified. For the specific list of parameters that can be modified, see the description of **Whether supports runtime dynamic modifications** in the respective service configuration.

-

-

diff --git a/docs-2.0/5.configurations-and-logs/1.configurations/2.meta-config.md b/docs-2.0/5.configurations-and-logs/1.configurations/2.meta-config.md

index d5344a0ab9d..2d85008992b 100644

--- a/docs-2.0/5.configurations-and-logs/1.configurations/2.meta-config.md

+++ b/docs-2.0/5.configurations-and-logs/1.configurations/2.meta-config.md

@@ -15,24 +15,20 @@ To use the initial configuration file, choose one of the above two files and del

If a parameter is not set in the configuration file, NebulaGraph uses the default value. Not all parameters are predefined. And the predefined parameters in the two initial configuration files are different. This topic uses the parameters in `nebula-metad.conf.default`.

-!!! caution

-

- Some parameter values in the configuration file can be dynamically modified during runtime. We label these parameters as **Yes** that supports runtime dynamic modification in this article. When the `local_config` value is set to `true`, the dynamically modified configuration is not persisted, and the configuration will be restored to the initial configuration after the service is restarted. For more information, see [Modify configurations](1.configurations.md).

-

For all parameters and their current values, see [Configurations](1.configurations.md).

## Basics configurations

-| Name | Predefined value | Description | Whether supports runtime dynamic modifications|

-| ----------- | ----------------------- | ---------------------------------------------------- |-------------------- |

-| `daemonize` | `true` | When set to `true`, the process is a daemon process. | No|

-| `pid_file` | `pids/nebula-metad.pid` | The file that records the process ID. | No|

-| `timezone_name` | - | Specifies the NebulaGraph time zone. This parameter is not predefined in the initial configuration files. You can manually set it if you need it. The system default value is `UTC+00:00:00`. For the format of the parameter value, see [Specifying the Time Zone with TZ](https://www.gnu.org/software/libc/manual/html_node/TZ-Variable.html "Click to view the timezone-related content in the GNU C Library manual"). For example, `--timezone_name=UTC+08:00` represents the GMT+8 time zone.|No|

+| Name | Predefined value | Description |

+| ----------- | ----------------------- | ---------------------------------------------------- |

+| `daemonize` | `true` | When set to `true`, the process is a daemon process. |

+| `pid_file` | `pids/nebula-metad.pid` | The file that records the process ID. |

+| `timezone_name` | - | Specifies the NebulaGraph time zone. This parameter is not predefined in the initial configuration files. You can manually set it if you need it. The system default value is `UTC+00:00:00`. For the format of the parameter value, see [Specifying the Time Zone with TZ](https://www.gnu.org/software/libc/manual/html_node/TZ-Variable.html "Click to view the timezone-related content in the GNU C Library manual"). For example, `--timezone_name=UTC+08:00` represents the GMT+8 time zone.|

{{ ent.ent_begin }}

-| Name | Predefined value | Description |Whether supports runtime dynamic modifications|

-| ----------- | ----------------------- | ---------------------------------------------------- |----------------- |

-|`license_path`|`share/resources/nebula.license`| Path of the license of the NebulaGraph Enterprise Edition. Users need to [deploy a license file](../../4.deployment-and-installation/deploy-license.md) before starting the Enterprise Edition. This parameter is required only for the NebulaGraph Enterprise Edition. For details about how to configure licenses for other ecosystem tools, see the deployment documents of the corresponding ecosystem tools.| No|

+| Name | Predefined value | Description |

+| ----------- | ----------------------- | ---------------------------------------------------- |

+|`license_path`|`share/resources/nebula.license`| Path of the license of the NebulaGraph Enterprise Edition. Users need to [deploy a license file](../../4.deployment-and-installation/deploy-license.md) before starting the Enterprise Edition. This parameter is required only for the NebulaGraph Enterprise Edition. For details about how to configure licenses for other ecosystem tools, see the deployment documents of the corresponding ecosystem tools.|

{{ ent.ent_end }}

@@ -43,29 +39,29 @@ For all parameters and their current values, see [Configurations](1.configuratio

## Logging configurations

-| Name | Predefined value | Description |Whether supports runtime dynamic modifications|

-| :------------- | :------------------------ | :------------------------------------------------ |:----------------- |

-| `log_dir` | `logs` | The directory that stores the Meta Service log. It is recommended to put logs on a different hard disk from the data. | No|

-| `minloglevel` | `0` | Specifies the minimum level of the log. That is, log messages at or above this level. Optional values are `0` (INFO), `1` (WARNING), `2` (ERROR), `3` (FATAL). It is recommended to set it to `0` during debugging and `1` in a production environment. If it is set to `4`, NebulaGraph will not print any logs. | Yes|

-| `v` | `0` | Specifies the detailed level of VLOG. That is, log all VLOG messages less or equal to the level. Optional values are `0`, `1`, `2`, `3`, `4`, `5`. The VLOG macro provided by glog allows users to define their own numeric logging levels and control verbose messages that are logged with the parameter `v`. For details, see [Verbose Logging](https://github.com/google/glog#verbose-logging).| Yes|

-| `logbufsecs` | `0` | Specifies the maximum time to buffer the logs. If there is a timeout, it will output the buffered log to the log file. `0` means real-time output. This configuration is measured in seconds. | No|

-|`redirect_stdout` |`true` | When set to `true`, the process redirects the`stdout` and `stderr` to separate output files. | No|

-|`stdout_log_file` |`metad-stdout.log` | Specifies the filename for the `stdout` log. | No|

-|`stderr_log_file` |`metad-stderr.log` | Specifies the filename for the `stderr` log. | No|

-|`stderrthreshold` | `3` | Specifies the `minloglevel` to be copied to the `stderr` log. | No|

-| `timestamp_in_logfile_name` | `true` | Specifies if the log file name contains a timestamp. `true` indicates yes, `false` indicates no. | No|

+| Name | Predefined value | Description |

+| :------------- | :------------------------ | :------------------------------------------------ |

+| `log_dir` | `logs` | The directory that stores the Meta Service log. It is recommended to put logs on a different hard disk from the data. |

+| `minloglevel` | `0` | Specifies the minimum level of the log. That is, log messages at or above this level. Optional values are `0` (INFO), `1` (WARNING), `2` (ERROR), `3` (FATAL). It is recommended to set it to `0` during debugging and `1` in a production environment. If it is set to `4`, NebulaGraph will not print any logs. |

+| `v` | `0` | Specifies the detailed level of VLOG. That is, log all VLOG messages less or equal to the level. Optional values are `0`, `1`, `2`, `3`, `4`, `5`. The VLOG macro provided by glog allows users to define their own numeric logging levels and control verbose messages that are logged with the parameter `v`. For details, see [Verbose Logging](https://github.com/google/glog#verbose-logging).|

+| `logbufsecs` | `0` | Specifies the maximum time to buffer the logs. If there is a timeout, it will output the buffered log to the log file. `0` means real-time output. This configuration is measured in seconds. |

+|`redirect_stdout` |`true` | When set to `true`, the process redirects the`stdout` and `stderr` to separate output files. |

+|`stdout_log_file` |`metad-stdout.log` | Specifies the filename for the `stdout` log. |

+|`stderr_log_file` |`metad-stderr.log` | Specifies the filename for the `stderr` log. |

+|`stderrthreshold` | `3` | Specifies the `minloglevel` to be copied to the `stderr` log. |

+| `timestamp_in_logfile_name` | `true` | Specifies if the log file name contains a timestamp. `true` indicates yes, `false` indicates no. |

## Networking configurations

-| Name | Predefined value | Description |Whether supports runtime dynamic modifications|

-| :----------------------- | :---------------- | :---------------------------------------------------- |:----------------- |

-| `meta_server_addrs` | `127.0.0.1:9559` | Specifies the IP addresses and ports of all Meta Services. Multiple addresses are separated with commas. | No|

-|`local_ip` | `127.0.0.1` | Specifies the local IP for the Meta Service. The local IP address is used to identify the nebula-metad process. If it is a distributed cluster or requires remote access, modify it to the corresponding address.| No|

-| `port` | `9559` | Specifies RPC daemon listening port of the Meta service. The external port for the Meta Service is predefined to `9559`. The internal port is predefined to `port + 1`, i.e., `9560`. Nebula Graph uses the internal port for multi-replica interactions. | No|

-| `ws_ip` | `0.0.0.0` | Specifies the IP address for the HTTP service. | No|

-| `ws_http_port` | `19559` | Specifies the port for the HTTP service. | No|

-|`ws_storage_http_port`|`19779`| Specifies the Storage service listening port used by the HTTP protocol. It must be consistent with the `ws_http_port` in the Storage service configuration file. This parameter only applies to standalone NebulaGraph.| No|

-|`heartbeat_interval_secs` | `10` | Specifies the default heartbeat interval. Make sure the `heartbeat_interval_secs` values for all services are the same, otherwise NebulaGraph **CANNOT** work normally. This configuration is measured in seconds. | Yes|

+| Name | Predefined value | Description |

+| :----------------------- | :---------------- | :---------------------------------------------------- |

+| `meta_server_addrs` | `127.0.0.1:9559` | Specifies the IP addresses and ports of all Meta Services. Multiple addresses are separated with commas. |

+|`local_ip` | `127.0.0.1` | Specifies the local IP for the Meta Service. The local IP address is used to identify the nebula-metad process. If it is a distributed cluster or requires remote access, modify it to the corresponding address.|

+| `port` | `9559` | Specifies RPC daemon listening port of the Meta service. The external port for the Meta Service is predefined to `9559`. The internal port is predefined to `port + 1`, i.e., `9560`. Nebula Graph uses the internal port for multi-replica interactions. |

+| `ws_ip` | `0.0.0.0` | Specifies the IP address for the HTTP service. |

+| `ws_http_port` | `19559` | Specifies the port for the HTTP service. |

+|`ws_storage_http_port`|`19779`| Specifies the Storage service listening port used by the HTTP protocol. It must be consistent with the `ws_http_port` in the Storage service configuration file. This parameter only applies to standalone NebulaGraph.|

+|`heartbeat_interval_secs` | `10` | Specifies the default heartbeat interval. Make sure the `heartbeat_interval_secs` values for all services are the same, otherwise NebulaGraph **CANNOT** work normally. This configuration is measured in seconds. |

!!! caution

@@ -73,22 +69,22 @@ For all parameters and their current values, see [Configurations](1.configuratio

## Storage configurations

-| Name | Predefined Value | Description |Whether supports runtime dynamic modifications|

-| :------------------- | :------------------------ | :------------------------------------------ |:----------------- |

-| `data_path` | `data/meta` | The storage path for Meta data. | No|

+| Name | Predefined Value | Description |

+| :------------------- | :------------------------ | :------------------------------------------ |

+| `data_path` | `data/meta` | The storage path for Meta data. |

## Misc configurations

-| Name | Predefined Value | Description |Whether supports runtime dynamic modifications|

-| :------------------------- | :-------------------- | :---------------------------------------------------------------------------- |:----------------- |

-|`default_parts_num` | `100` | Specifies the default partition number when creating a new graph space. | No|

-|`default_replica_factor` | `1` | Specifies the default replica number when creating a new graph space. | No|

+| Name | Predefined Value | Description |

+| :------------------------- | :-------------------- | :---------------------------------------------------------------------------- |

+|`default_parts_num` | `100` | Specifies the default partition number when creating a new graph space. |

+|`default_replica_factor` | `1` | Specifies the default replica number when creating a new graph space. |

## RocksDB options configurations

-| Name | Predefined Value | Description |Whether supports runtime dynamic modifications|

-| :--------------- | :----------------- | :---------------------------------------- |:----------------- |

-|`rocksdb_wal_sync`| `true` | Enables or disables RocksDB WAL synchronization. Available values are `true` (enable) and `false` (disable).| No|

+| Name | Predefined Value | Description |

+| :--------------- | :----------------- | :---------------------------------------- |

+|`rocksdb_wal_sync`| `true` | Enables or disables RocksDB WAL synchronization. Available values are `true` (enable) and `false` (disable).|

{{ ent.ent_begin }}

## Black box configurations

@@ -97,11 +93,11 @@ For all parameters and their current values, see [Configurations](1.configuratio

The Nebula-BBox configurations are for the Enterprise Edition only.

-| Name | Predefined Value | Description |Whether supports runtime dynamic modifications|

-| :------------------- | :------------------------ | :------------------------------------------ |:----------------- |

-|`ng_black_box_switch` |`true` |Whether to enable the [Nebula-BBox](../../6.monitor-and-metrics/3.bbox/3.1.bbox.md) feature.| No|

-|`ng_black_box_home` |`black_box` |The name of the directory to store Nebula-BBox file data.| No|

-|`ng_black_box_dump_period_seconds` |`5` |The time interval for Nebula-BBox to collect metric data. Unit: Second.| No|

-|`ng_black_box_file_lifetime_seconds` |`1800` |Storage time for Nebula-BBox files generated after collecting metric data. Unit: Second.| Yes|

+| Name | Predefined Value | Description |

+| :------------------- | :------------------------ | :------------------------------------------ |

+|`ng_black_box_switch` |`true` |Whether to enable the [Nebula-BBox](../../6.monitor-and-metrics/3.bbox/3.1.bbox.md) feature.|

+|`ng_black_box_home` |`black_box` |The name of the directory to store Nebula-BBox file data.|

+|`ng_black_box_dump_period_seconds` |`5` |The time interval for Nebula-BBox to collect metric data. Unit: Second.|

+|`ng_black_box_file_lifetime_seconds` |`1800` |Storage time for Nebula-BBox files generated after collecting metric data. Unit: Second.|

{{ ent.ent_end }}

\ No newline at end of file

diff --git a/docs-2.0/5.configurations-and-logs/1.configurations/3.graph-config.md b/docs-2.0/5.configurations-and-logs/1.configurations/3.graph-config.md

index 1e667b8bef0..85d0e003b42 100644

--- a/docs-2.0/5.configurations-and-logs/1.configurations/3.graph-config.md

+++ b/docs-2.0/5.configurations-and-logs/1.configurations/3.graph-config.md

@@ -15,21 +15,17 @@ To use the initial configuration file, choose one of the above two files and del

If a parameter is not set in the configuration file, NebulaGraph uses the default value. Not all parameters are predefined. And the predefined parameters in the two initial configuration files are different. This topic uses the parameters in `nebula-metad.conf.default`.

-!!! caution

-

- Some parameter values in the configuration file can be dynamically modified during runtime. We label these parameters as **Yes** that supports runtime dynamic modification in this article. When the `local_config` value is set to `true`, the dynamically modified configuration is not persisted, and the configuration will be restored to the initial configuration after the service is restarted. For more information, see [Modify configurations](1.configurations.md).

-

For all parameters and their current values, see [Configurations](1.configurations.md).

## Basics configurations

-| Name | Predefined value | Description |Whether supports runtime dynamic modifications|

-| ----------------- | ----------------------- | ------------------|------------------|

-| `daemonize` | `true` | When set to `true`, the process is a daemon process. | No|

-| `pid_file` | `pids/nebula-graphd.pid`| The file that records the process ID. | No|

-|`enable_optimizer` |`true` | When set to `true`, the optimizer is enabled. | No|

-| `timezone_name` | - | Specifies the NebulaGraph time zone. This parameter is not predefined in the initial configuration files. The system default value is `UTC+00:00:00`. For the format of the parameter value, see [Specifying the Time Zone with TZ](https://www.gnu.org/software/libc/manual/html_node/TZ-Variable.html "Click to view the timezone-related content in the GNU C Library manual"). For example, `--timezone_name=UTC+08:00` represents the GMT+8 time zone. | No|

-| `local_config` | `true` | When set to `true`, the process gets configurations from the configuration files. | No|

+| Name | Predefined value | Description |

+| ----------------- | ----------------------- | ------------------|

+| `daemonize` | `true` | When set to `true`, the process is a daemon process. |

+| `pid_file` | `pids/nebula-graphd.pid`| The file that records the process ID. |

+|`enable_optimizer` |`true` | When set to `true`, the optimizer is enabled. |

+| `timezone_name` | - | Specifies the NebulaGraph time zone. This parameter is not predefined in the initial configuration files. The system default value is `UTC+00:00:00`. For the format of the parameter value, see [Specifying the Time Zone with TZ](https://www.gnu.org/software/libc/manual/html_node/TZ-Variable.html "Click to view the timezone-related content in the GNU C Library manual"). For example, `--timezone_name=UTC+08:00` represents the GMT+8 time zone. |

+| `local_config` | `true` | When set to `true`, the process gets configurations from the configuration files. |

!!! note

@@ -38,49 +34,49 @@ For all parameters and their current values, see [Configurations](1.configuratio

## Logging configurations

-| Name | Predefined value | Description |Whether supports runtime dynamic modifications|

-| ------------- | ------------------------ | ------------------------------------------------ |------------------|

-| `log_dir` | `logs` | The directory that stores the Meta Service log. It is recommended to put logs on a different hard disk from the data. | No|

-| `minloglevel` | `0` | Specifies the minimum level of the log. That is, log messages at or above this level. Optional values are `0` (INFO), `1` (WARNING), `2` (ERROR), `3` (FATAL). It is recommended to set it to `0` during debugging and `1` in a production environment. If it is set to `4`, NebulaGraph will not print any logs. | Yes|

-| `v` | `0` | Specifies the detailed level of VLOG. That is, log all VLOG messages less or equal to the level. Optional values are `0`, `1`, `2`, `3`, `4`, `5`. The VLOG macro provided by glog allows users to define their own numeric logging levels and control verbose messages that are logged with the parameter `v`. For details, see [Verbose Logging](https://github.com/google/glog#verbose-logging).| Yes|

-| `logbufsecs` | `0` | Specifies the maximum time to buffer the logs. If there is a timeout, it will output the buffered log to the log file. `0` means real-time output. This configuration is measured in seconds. | No|

-|`redirect_stdout` |`true` | When set to `true`, the process redirects the`stdout` and `stderr` to separate output files. | No|

-|`stdout_log_file` |`graphd-stdout.log` | Specifies the filename for the `stdout` log. | No|

-|`stderr_log_file` |`graphd-stderr.log` | Specifies the filename for the `stderr` log. | No|

-|`stderrthreshold` | `3` | Specifies the `minloglevel` to be copied to the `stderr` log. | No|

-| `timestamp_in_logfile_name` | `true` | Specifies if the log file name contains a timestamp. `true` indicates yes, `false` indicates no. | No|

+| Name | Predefined value | Description |

+| ------------- | ------------------------ | ------------------------------------------------ |

+| `log_dir` | `logs` | The directory that stores the Meta Service log. It is recommended to put logs on a different hard disk from the data. |

+| `minloglevel` | `0` | Specifies the minimum level of the log. That is, log messages at or above this level. Optional values are `0` (INFO), `1` (WARNING), `2` (ERROR), `3` (FATAL). It is recommended to set it to `0` during debugging and `1` in a production environment. If it is set to `4`, NebulaGraph will not print any logs. |

+| `v` | `0` | Specifies the detailed level of VLOG. That is, log all VLOG messages less or equal to the level. Optional values are `0`, `1`, `2`, `3`, `4`, `5`. The VLOG macro provided by glog allows users to define their own numeric logging levels and control verbose messages that are logged with the parameter `v`. For details, see [Verbose Logging](https://github.com/google/glog#verbose-logging).|

+| `logbufsecs` | `0` | Specifies the maximum time to buffer the logs. If there is a timeout, it will output the buffered log to the log file. `0` means real-time output. This configuration is measured in seconds. |

+|`redirect_stdout` |`true` | When set to `true`, the process redirects the`stdout` and `stderr` to separate output files. |

+|`stdout_log_file` |`graphd-stdout.log` | Specifies the filename for the `stdout` log. |

+|`stderr_log_file` |`graphd-stderr.log` | Specifies the filename for the `stderr` log. |

+|`stderrthreshold` | `3` | Specifies the `minloglevel` to be copied to the `stderr` log. |

+| `timestamp_in_logfile_name` | `true` | Specifies if the log file name contains a timestamp. `true` indicates yes, `false` indicates no. |

## Query configurations

-| Name | Predefined value | Description |Whether supports runtime dynamic modifications|

-| ----------------------------- | ------------------------ | ------------------------------------------ |------------------|

-|`accept_partial_success` |`false` | When set to `false`, the process treats partial success as an error. This configuration only applies to read-only requests. Write requests always treat partial success as an error. | Yes|

-|`session_reclaim_interval_secs`|`60` | Specifies the interval that the Session information is sent to the Meta service. This configuration is measured in seconds. | Yes|

-|`max_allowed_query_size` |`4194304` | Specifies the maximum length of queries. Unit: bytes. The default value is `4194304`, namely 4MB.| Yes|

+| Name | Predefined value | Description |

+| ----------------------------- | ------------------------ | ------------------------------------------ |

+|`accept_partial_success` |`false` | When set to `false`, the process treats partial success as an error. This configuration only applies to read-only requests. Write requests always treat partial success as an error. |

+|`session_reclaim_interval_secs`|`60` | Specifies the interval that the Session information is sent to the Meta service. This configuration is measured in seconds. |

+|`max_allowed_query_size` |`4194304` | Specifies the maximum length of queries. Unit: bytes. The default value is `4194304`, namely 4MB.|

## Networking configurations

-| Name | Predefined value | Description |Whether supports runtime dynamic modifications|

-| ----------------------- | ---------------- | ---------------------------------------------------- |------------------|

-| `meta_server_addrs` | `127.0.0.1:9559` | Specifies the IP addresses and ports of all Meta Services. Multiple addresses are separated with commas.| No|

-|`local_ip` | `127.0.0.1` | Specifies the local IP for the Graph Service. The local IP address is used to identify the nebula-graphd process. If it is a distributed cluster or requires remote access, modify it to the corresponding address.| No|

-|`listen_netdev` |`any` | Specifies the listening network device. | No|

-| `port` | `9669` | Specifies RPC daemon listening port of the Graph service. | No|

-|`reuse_port` |`false` | When set to `false`, the `SO_REUSEPORT` is closed. | No|

-|`listen_backlog` |`1024` | Specifies the maximum length of the connection queue for socket monitoring. This configuration must be modified together with the `net.core.somaxconn`. | No|

-|`client_idle_timeout_secs` |`28800` | Specifies the time to expire an idle connection. The value ranges from 1 to 604800. The default is 8 hours. This configuration is measured in seconds. | No|

-|`session_idle_timeout_secs` |`28800` | Specifies the time to expire an idle session. The value ranges from 1 to 604800. The default is 8 hours. This configuration is measured in seconds. | No|

-|`num_accept_threads` |`1` | Specifies the number of threads that accept incoming connections. | No|

-|`num_netio_threads` |`0` | Specifies the number of networking IO threads. `0` is the number of CPU cores. | No|

-|`num_worker_threads` |`0` | Specifies the number of threads that execute queries. `0` is the number of CPU cores. | No|

-| `ws_ip` | `0.0.0.0` | Specifies the IP address for the HTTP service. | No|

-| `ws_http_port` | `19669` | Specifies the port for the HTTP service. | No|

-|`heartbeat_interval_secs` | `10` | Specifies the default heartbeat interval. Make sure the `heartbeat_interval_secs` values for all services are the same, otherwise NebulaGraph **CANNOT** work normally. This configuration is measured in seconds. | Yes|

-|`storage_client_timeout_ms` |-| Specifies the RPC connection timeout threshold between the Graph Service and the Storage Service. This parameter is not predefined in the initial configuration files. You can manually set it if you need it. The system default value is `60000` ms. | No|

-|`enable_record_slow_query`|`true`|Whether to record slow queries.

Only available in NebulaGraph Enterprise Edition.| No|

-|`slow_query_limit`|`100`|The maximum number of slow queries that can be recorded.

Only available in NebulaGraph Enterprise Edition.| No|

-|`slow_query_threshold_us`|`200000`|When the execution time of a query exceeds the value, the query is called a slow query. Unit: Microsecond.| No|

-|`ws_meta_http_port` |`19559`| Specifies the Meta service listening port used by the HTTP protocol. It must be consistent with the `ws_http_port` in the Meta service configuration file.| No|

+| Name | Predefined value | Description |

+| ----------------------- | ---------------- | ---------------------------------------------------- |

+| `meta_server_addrs` | `127.0.0.1:9559` | Specifies the IP addresses and ports of all Meta Services. Multiple addresses are separated with commas.|

+|`local_ip` | `127.0.0.1` | Specifies the local IP for the Graph Service. The local IP address is used to identify the nebula-graphd process. If it is a distributed cluster or requires remote access, modify it to the corresponding address.|

+|`listen_netdev` |`any` | Specifies the listening network device. |

+| `port` | `9669` | Specifies RPC daemon listening port of the Graph service. |

+|`reuse_port` |`false` | When set to `false`, the `SO_REUSEPORT` is closed. |

+|`listen_backlog` |`1024` | Specifies the maximum length of the connection queue for socket monitoring. This configuration must be modified together with the `net.core.somaxconn`. |

+|`client_idle_timeout_secs` |`28800` | Specifies the time to expire an idle connection. The value ranges from 1 to 604800. The default is 8 hours. This configuration is measured in seconds. |

+|`session_idle_timeout_secs` |`28800` | Specifies the time to expire an idle session. The value ranges from 1 to 604800. The default is 8 hours. This configuration is measured in seconds. |

+|`num_accept_threads` |`1` | Specifies the number of threads that accept incoming connections. |

+|`num_netio_threads` |`0` | Specifies the number of networking IO threads. `0` is the number of CPU cores. |

+|`num_worker_threads` |`0` | Specifies the number of threads that execute queries. `0` is the number of CPU cores. |

+| `ws_ip` | `0.0.0.0` | Specifies the IP address for the HTTP service. |

+| `ws_http_port` | `19669` | Specifies the port for the HTTP service. |

+|`heartbeat_interval_secs` | `10` | Specifies the default heartbeat interval. Make sure the `heartbeat_interval_secs` values for all services are the same, otherwise NebulaGraph **CANNOT** work normally. This configuration is measured in seconds. |

+|`storage_client_timeout_ms` |-| Specifies the RPC connection timeout threshold between the Graph Service and the Storage Service. This parameter is not predefined in the initial configuration files. You can manually set it if you need it. The system default value is `60000` ms. |

+|`enable_record_slow_query`|`true`|Whether to record slow queries.

Only available in NebulaGraph Enterprise Edition.|

+|`slow_query_limit`|`100`|The maximum number of slow queries that can be recorded.

Only available in NebulaGraph Enterprise Edition.|