How to use Yolo v5 without pytorch #10028

Comments

|

👋 Hello! Thanks for asking about Export Formats. YOLOv5 🚀 offers export to almost all of the common export formats. See our TFLite, ONNX, CoreML, TensorRT Export Tutorial for full details.

Formats

YOLOv5 inference is officially supported in 11 formats:

💡 ProTip: Export to ONNX or OpenVINO for up to 3x CPU speedup. See CPU Benchmarks.

Benchmarks

Benchmarks below run on a Colab Pro with the YOLOv5 tutorial notebook.

python benchmarks.py --weights yolov5s.pt --imgsz 640 --device 0

Colab Pro V100 GPU

Colab Pro CPU

Export a Trained YOLOv5 Model

This command exports a pretrained YOLOv5s model to TorchScript and ONNX formats.

python export.py --weights yolov5s.pt --include torchscript onnx

💡 ProTip: Add

Output:

export: data=data/coco128.yaml, weights=['yolov5s.pt'], imgsz=[640, 640], batch_size=1, device=cpu, half=False, inplace=False, train=False, keras=False, optimize=False, int8=False, dynamic=False, simplify=False, opset=12, verbose=False, workspace=4, nms=False, agnostic_nms=False, topk_per_class=100, topk_all=100, iou_thres=0.45, conf_thres=0.25, include=['torchscript', 'onnx']

YOLOv5 🚀 v6.2-104-ge3e5122 Python-3.7.13 torch-1.12.1+cu113 CPU

Downloading https://github.com/ultralytics/yolov5/releases/download/v6.2/yolov5s.pt to yolov5s.pt...

100% 14.1M/14.1M [00:00<00:00, 274MB/s]

Fusing layers...

YOLOv5s summary: 213 layers, 7225885 parameters, 0 gradients

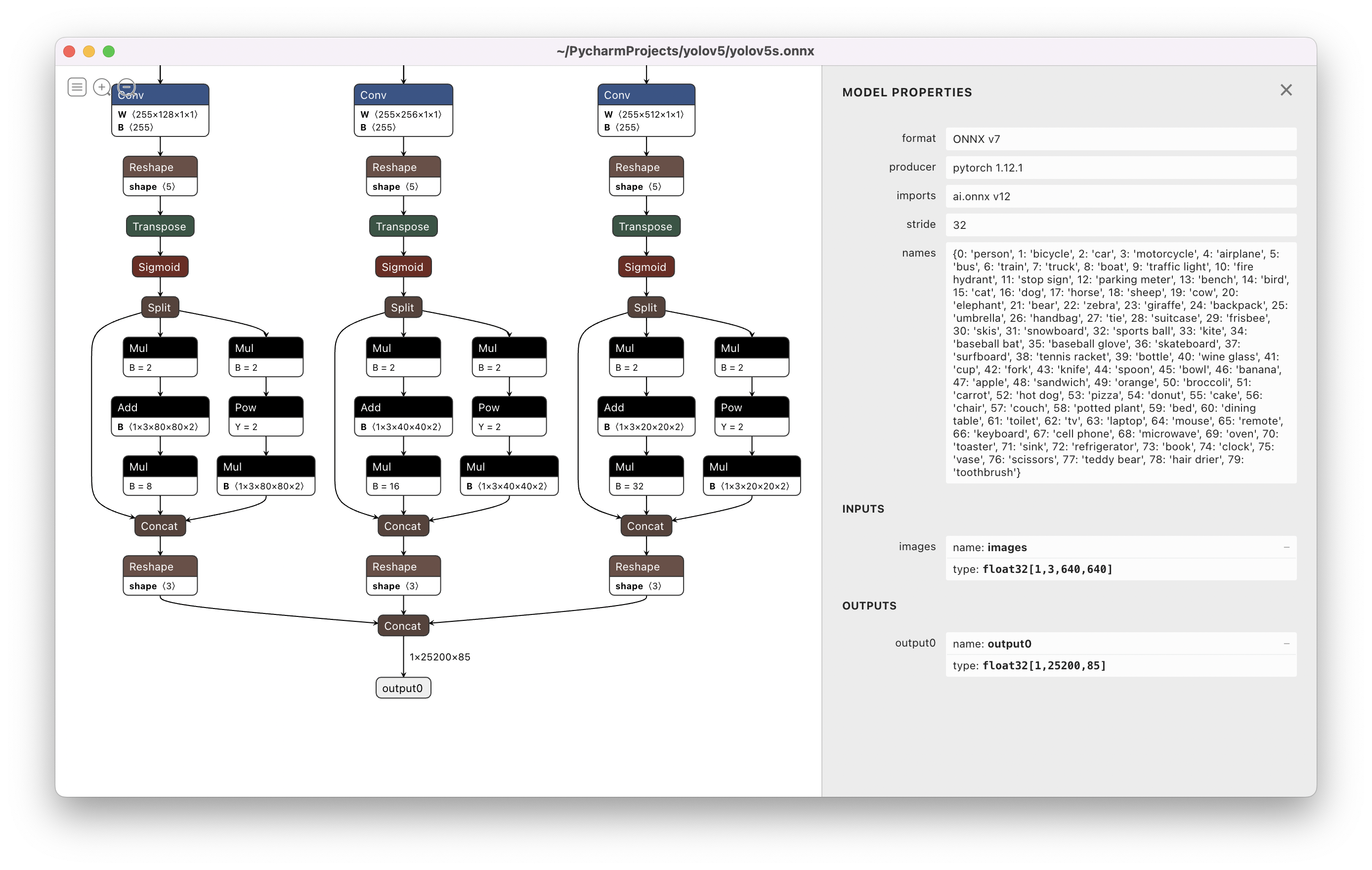

PyTorch: starting from yolov5s.pt with output shape (1, 25200, 85) (14.1 MB)

TorchScript: starting export with torch 1.12.1+cu113...

TorchScript: export success ✅ 1.7s, saved as yolov5s.torchscript (28.1 MB)

ONNX: starting export with onnx 1.12.0...

ONNX: export success ✅ 2.3s, saved as yolov5s.onnx (28.0 MB)

Export complete (5.5s)

Results saved to /content/yolov5

Detect: python detect.py --weights yolov5s.onnx

Validate: python val.py --weights yolov5s.onnx

PyTorch Hub: model = torch.hub.load('ultralytics/yolov5', 'custom', 'yolov5s.onnx')

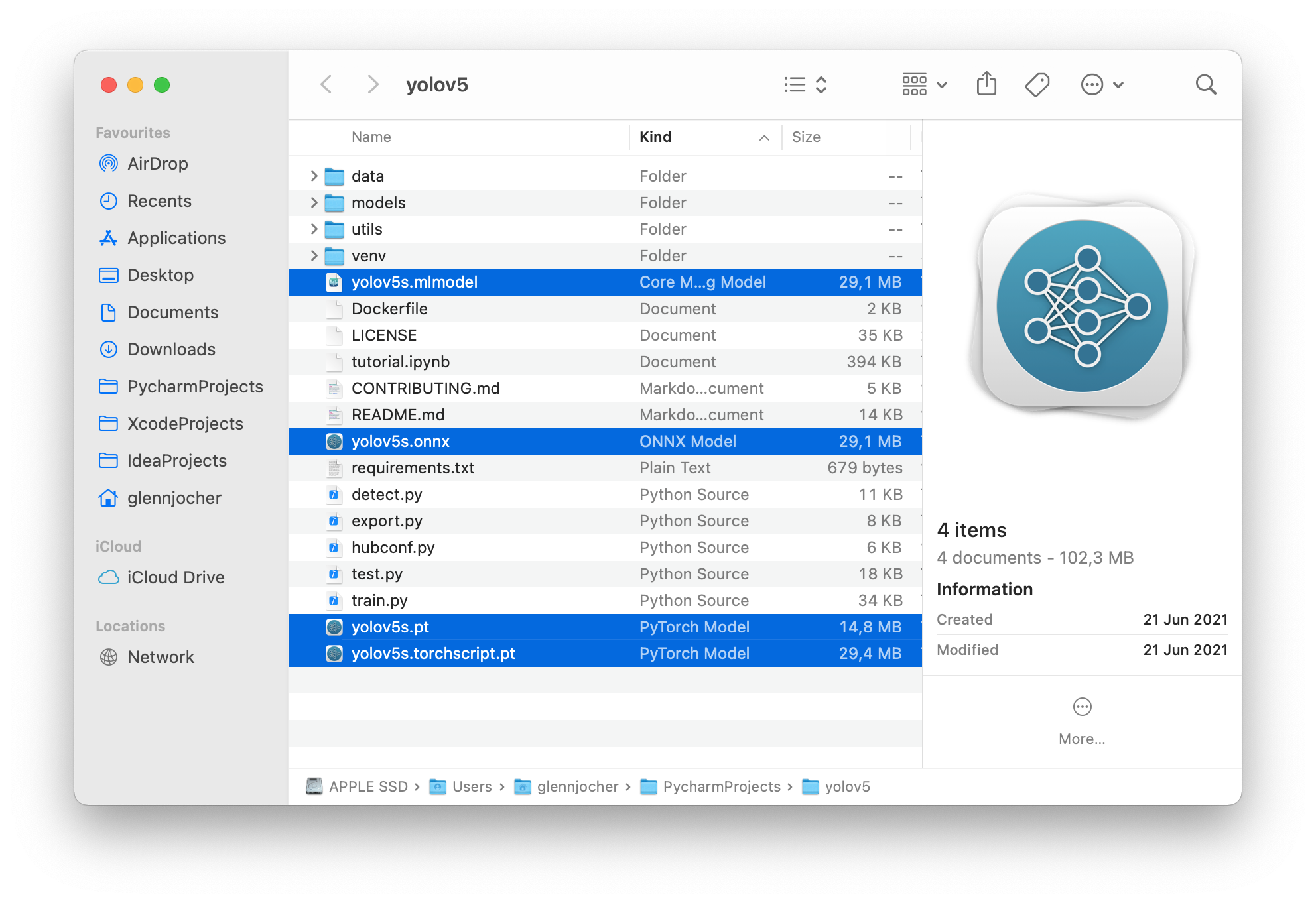

Visualize: https://netron.app/

The exported models will be saved alongside the original PyTorch model:

Netron Viewer is recommended for visualizing exported models:

Exported Model Usage Examples

python detect.py --weights yolov5s.pt # PyTorch

yolov5s.torchscript # TorchScript

yolov5s.onnx # ONNX Runtime or OpenCV DNN with --dnn

yolov5s_openvino_model # OpenVINO

yolov5s.engine # TensorRT

yolov5s.mlmodel # CoreML (macOS only)

yolov5s_saved_model # TensorFlow SavedModel

yolov5s.pb # TensorFlow GraphDef

yolov5s.tflite # TensorFlow Lite

yolov5s_edgetpu.tflite # TensorFlow Edge TPU

yolov5s_paddle_model # PaddlePaddle

python val.py --weights yolov5s.pt # PyTorch

yolov5s.torchscript # TorchScript

yolov5s.onnx # ONNX Runtime or OpenCV DNN with --dnn

yolov5s_openvino_model # OpenVINO

yolov5s.engine # TensorRT

yolov5s.mlmodel # CoreML (macOS Only)

yolov5s_saved_model # TensorFlow SavedModel

yolov5s.pb # TensorFlow GraphDef

yolov5s.tflite # TensorFlow Lite

yolov5s_edgetpu.tflite # TensorFlow Edge TPU

yolov5s_paddle_model # PaddlePaddle

Use PyTorch Hub with exported YOLOv5 models:

import torch

# Model

model = torch.hub.load('ultralytics/yolov5', 'custom', 'yolov5s.pt')

'yolov5s.torchscript') # TorchScript

'yolov5s.onnx') # ONNX Runtime

'yolov5s_openvino_model') # OpenVINO

'yolov5s.engine') # TensorRT

'yolov5s.mlmodel') # CoreML (macOS Only)

'yolov5s_saved_model') # TensorFlow SavedModel

'yolov5s.pb') # TensorFlow GraphDef

'yolov5s.tflite') # TensorFlow Lite

'yolov5s_edgetpu.tflite') # TensorFlow Edge TPU

'yolov5s_paddle_model') # PaddlePaddle

# Images

img = 'https://ultralytics.com/images/zidane.jpg' # or file, Path, PIL, OpenCV, numpy, list

# Inference

results = model(img)

# Results

results.print() # or .show(), .save(), .crop(), .pandas(), etc.

OpenCV DNN inference

OpenCV inference with ONNX models:

python export.py --weights yolov5s.pt --include onnx

python detect.py --weights yolov5s.onnx --dnn # detect

python val.py --weights yolov5s.onnx --dnn # validate

C++ Inference

YOLOv5 OpenCV DNN C++ inference on exported ONNX model examples:

YOLOv5 OpenVINO C++ inference examples:

Good luck 🍀 and let us know if you have any other questions! |
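For a setup with no PyTorch at all, the raw output of the exported model has to be post-processed by hand, since the export log above shows nms=False. The following is a minimal pure-Python sketch, assuming each of the 25200 output rows is laid out as [cx, cy, w, h, objectness, 80 class scores] (85 values), and reusing conf_thres=0.25 and iou_thres=0.45 from the log. The names `decode_rows`, `iou` and `nms` are illustrative and not part of the YOLOv5 repository, whose own non-max suppression differs in details.

```python
from typing import List, Sequence, Tuple

# (x1, y1, x2, y2, score, class_id); a hypothetical layout chosen for this sketch
Box = Tuple[float, float, float, float, float, int]


def decode_rows(rows: Sequence[Sequence[float]], conf_thres: float = 0.25) -> List[Box]:
    """Convert raw rows [cx, cy, w, h, objectness, class scores...] to corner boxes."""
    boxes = []
    for row in rows:
        cx, cy, w, h, obj = row[:5]
        class_scores = row[5:]
        # Pick the most likely class and combine it with the objectness score.
        class_id = max(range(len(class_scores)), key=lambda i: class_scores[i])
        score = obj * class_scores[class_id]
        if score > conf_thres:
            boxes.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2, score, class_id))
    return boxes


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two corner-format boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], iou_thres: float = 0.45) -> List[Box]:
    """Greedy per-class NMS: keep the best box, drop same-class boxes overlapping it."""
    kept: List[Box] = []
    for box in sorted(boxes, key=lambda b: b[4], reverse=True):
        if all(box[5] != k[5] or iou(box, k) <= iou_thres for k in kept):
            kept.append(box)
    return kept
```

With an inference backend that returns the (1, 25200, 85) array, `nms(decode_rows(output[0]))` would give the final detections in the coordinates of the 640x640 network input. A plain loop like this is slow on 25200 rows, so a vectorized version may be preferable on an embedded CPU.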

|

Could you please share how you ran it on the FPGA? |

|

@pythonscriptsPro try using an ONNX environment |
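One possible reading of "ONNX environment" is ONNX Runtime, which can load the exported yolov5s.onnx without torch being installed. The sketch below assumes the `onnxruntime` and `numpy` packages are available, that the model was exported with a fixed 640x640 input and half=False as in the log above, and that the image has already been letterboxed to 640x640 RGB by some imaging library. The helper names `letterbox_params`, `to_model_input` and `run_onnx` are hypothetical.

```python
def letterbox_params(width, height, size=640):
    """Scale and padding that fit a width x height image into a size x size square.

    A box coordinate x in network space maps back to the original image as
    (x - pad_x) / scale, and likewise for y with pad_y.
    """
    scale = min(size / width, size / height)
    new_w, new_h = round(width * scale), round(height * scale)
    pad_x, pad_y = (size - new_w) / 2, (size - new_h) / 2
    return scale, new_w, new_h, pad_x, pad_y


def to_model_input(image_hwc):
    """Turn an HxWx3 uint8 RGB array into a float32 1x3xHxW tensor in [0, 1]."""
    import numpy as np  # imported lazily so letterbox_params works without numpy

    chw = image_hwc.transpose(2, 0, 1).astype(np.float32) / 255.0
    return np.ascontiguousarray(chw[None])


def run_onnx(model_path, image_hwc):
    """Run one forward pass on the CPU and return the first model output."""
    import onnxruntime as ort  # assumption: installed separately, no torch needed

    session = ort.InferenceSession(model_path, providers=["CPUExecutionProvider"])
    input_name = session.get_inputs()[0].name
    outputs = session.run(None, {input_name: to_model_input(image_hwc)})
    return outputs[0]
```

Calling `run_onnx("yolov5s.onnx", image)` with a 640x640x3 uint8 array should return the raw predictions, which the export log reports as shape (1, 25200, 85) for this model. Note that detect.py and val.py in the repository still import torch, so a torch-free setup needs a standalone script along these lines.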

But the ONNX model has to be converted to an FPGA format, is that right? |

|

@pythonscriptsPro no, there are FPGA kits that run Ubuntu, so you can work with them like a Raspberry Pi or Jetson Nano |

|

Ok, many thanks 😊 |

|

@pythonscriptsPro you're welcome! If you have any more questions, feel free to ask. Good luck with your YOLOv5 implementation on the FPGA kit! 😊 |

|

@glenn-jocher , thanks a lot for your reply, I deployed YOLOv5 on a KRIA260 device using DPU Overlay PYNQ. Some layers unsupported by KRIA were replaced, affecting performance. The quantization process led to a slight quality degradation, but the performance is comparable to the original code, achieving around 50 fps. |
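The slight quality loss from quantization mentioned above can be illustrated with a toy example. This is a sketch of symmetric per-tensor int8 quantization in plain Python, not the quantizer actually used for the DPU deployment (the thread does not say which tool or scheme was used); the function names and sample weights are made up for illustration.

```python
def quantize_int8(values):
    """Map floats to integer codes in [-127, 127] using a single shared scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate floats from the integer codes."""
    return [c * scale for c in codes]


# Hypothetical weights, chosen only to show the rounding error.
weights = [0.02, -0.5, 0.731, 1.27, -1.0]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

Each restored value differs from the original by at most half a quantization step (`scale / 2`). Such small per-weight errors accumulate through the network, which is one plausible reason a quantized detector loses a little accuracy.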

|

@pythonscriptsPro that's fantastic to hear! Kudos to your successful deployment on the KRIA260 device using DPU Overlay PYNQ! Achieving a comparable performance of around 50 fps, despite the slight quality degradation, speaks volumes about the capability of YOLOv5. The effort you've put into overcoming the challenges is commendable. Keep up the great work and feel free to reach out if you have any further questions or need assistance. 😊 |

|

@glenn-jocher Thank you for your kind words! Your dedication to coding and making it accessible is invaluable. |

|

@pythonscriptsPro thank you for your kind words! I'm glad I could help. The dedication of the YOLO community and the Ultralytics team to making coding accessible is truly invaluable. Keep up the great work, and if you have any further questions or need assistance, don't hesitate to reach out. 😊 |

|

@pythonscriptsPro how did you run YOLOv5 on the KRIA? Did you use yolov5.xmodel or yolov5.onnx? Can you provide details on how you ran inference?

Search before asking

Question

Hi, I am running YOLOv5 on an FPGA, but I ran into a problem installing torch.

Can I run YOLOv5 without torch?

Additional

No response
