3 and a chunk size of (64,64,64) voxel.

+A maximum of 4 parallel jobs will be used for the conversion, compression and downsampling.

+Using the `--data-format zarr3` argument will produce sharded Zarr v3 datasets.

Read the full documentation at [WEBKNOSSOS CLI](https://docs.webknossos.org/cli).

@@ -170,3 +175,5 @@ To get the best streaming performance for Zarr datasets consider the following s

- Use chunk sizes of 32 - 128 voxels^3

- Enable sharding (only available in Zarr 3+)

+- Use 3D downsampling

+
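To make the streaming recommendations concrete, here is a hypothetical TypeScript helper that checks a planned layout against them. The `ZarrLayout` type and `streamingLayoutWarnings` function are illustrative only and are not part of WEBKNOSSOS or its CLI. The check assumes that "32 - 128 voxels^3" means a per-axis chunk edge length between 32 and 128 voxels.

```typescript
// Hypothetical description of a planned Zarr layer layout (not a WEBKNOSSOS type).
type ZarrLayout = {
  chunkShape: [number, number, number]; // chunk edge lengths in voxels (x, y, z)
  zarrVersion: 2 | 3;
  sharded: boolean;
  downsampling: "2d" | "3d";
};

// Returns one warning per streaming recommendation the layout does not follow.
function streamingLayoutWarnings(layout: ZarrLayout): string[] {
  const warnings: string[] = [];
  // Assumption: every chunk edge should lie between 32 and 128 voxels.
  if (layout.chunkShape.some((edge) => edge < 32 || edge > 128)) {
    warnings.push("Use chunk edge lengths between 32 and 128 voxels.");
  }
  // Sharding requires Zarr 3+, so a Zarr 2 layout cannot follow this recommendation.
  if (layout.zarrVersion < 3) {
    warnings.push("Sharding is only available in Zarr 3+; consider writing Zarr v3.");
  } else if (!layout.sharded) {
    warnings.push("Enable sharding.");
  }
  if (layout.downsampling !== "3d") {
    warnings.push("Use 3D downsampling.");
  }
  return warnings;
}

// A sharded Zarr v3 layout with (64,64,64) chunks and 3D downsampling follows all recommendations.
const exampleWarnings = streamingLayoutWarnings({
  chunkShape: [64, 64, 64],
  zarrVersion: 3,
  sharded: true,
  downsampling: "3d",
}); // → []
```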

diff --git a/docs/volume_annotation/pen_tablets.md b/docs/volume_annotation/pen_tablets.md

index dbe9402708a..1238ae15934 100644

--- a/docs/volume_annotation/pen_tablets.md

+++ b/docs/volume_annotation/pen_tablets.md

@@ -5,7 +5,7 @@ Beyond the mouse and keyboard WEBKNOSSOS is great for annotating datasets with a

## Using Wacom/Pen tablets

Using a pen tablet can significantly boost your annotation productivity, especially if you set it up correctly with WEBKNOSSOS.

-

+

To streamline your workflow, program your tablet and pen buttons to match the WEBKNOSSOS shortcuts. This way, you can work with the pen alone, without needing a mouse or keyboard. Here is an example configuration using a Wacom tablet and the Wacom driver software:

@@ -26,7 +26,7 @@ You can find the full list for keyboard shortcuts in the [documentation](../ui/k

### Annotating with Wacom Pens

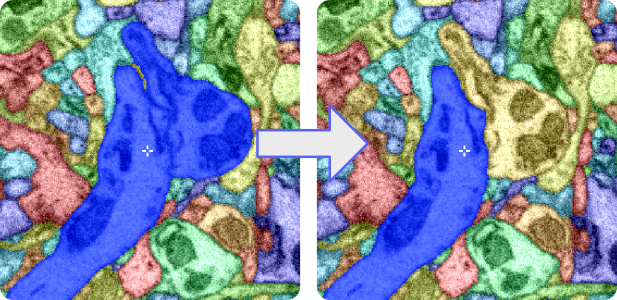

Now, let’s dive into the annotation process! In this example, we begin by quick-selecting a cell.

-

+

If the annotation isn’t precise enough, we can easily switch to the eraser tool (middle left button) and erase a corner. Selecting the brush tool is as simple as pressing the left button, allowing us to add small surfaces to the annotation.

When ready, pressing the right button creates a new segment, and we can repeat the process for other cells.

diff --git a/frontend/javascripts/admin/admin_rest_api.ts b/frontend/javascripts/admin/admin_rest_api.ts

index 5af922c7a3a..6c3b4ef8e78 100644

--- a/frontend/javascripts/admin/admin_rest_api.ts

+++ b/frontend/javascripts/admin/admin_rest_api.ts

@@ -2035,7 +2035,7 @@ export function computeAdHocMesh(

},

},

);

- const neighbors = Utils.parseMaybe(headers.neighbors) || [];

+ const neighbors = (Utils.parseMaybe(headers.neighbors) as number[] | null) || [];

return {

buffer,

neighbors,
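The new line narrows the result of `Utils.parseMaybe` with a type assertion, which the compiler does not verify at runtime. If stronger guarantees are wanted, a runtime guard is one option. The sketch below assumes that `parseMaybe` behaves like `JSON.parse` wrapped in a `try`/`catch` returning `null` on failure, and that the `neighbors` header carries a JSON array of numbers; `parseNeighborIds` is a hypothetical name.

```typescript
// Hypothetical runtime-checked alternative to `Utils.parseMaybe(...) as number[] | null`.
// Assumes the header value is a JSON-encoded array of numbers.
function parseNeighborIds(headerValue: string | null | undefined): number[] {
  if (headerValue == null) {
    return [];
  }
  let parsed: unknown;
  try {
    parsed = JSON.parse(headerValue);
  } catch {
    // Malformed JSON: fall back to an empty list, like the `|| []` default.
    return [];
  }
  // Accept the value only if it really is an array of numbers.
  if (Array.isArray(parsed) && parsed.every((item) => typeof item === "number")) {
    return parsed as number[];
  }
  return [];
}
```

For example, `parseNeighborIds("[3, 7]")` yields `[3, 7]`, while a malformed or non-numeric header yields `[]`.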

diff --git a/frontend/javascripts/admin/api/token.ts b/frontend/javascripts/admin/api/token.ts

index 54f730d40e7..3a430d55756 100644

--- a/frontend/javascripts/admin/api/token.ts

+++ b/frontend/javascripts/admin/api/token.ts

@@ -2,9 +2,12 @@ import { location } from "libs/window";

import Request from "libs/request";

import * as Utils from "libs/utils";

+const MAX_TOKEN_RETRY_ATTEMPTS = 3;

+

let tokenPromise: Promise
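The diff introduces `MAX_TOKEN_RETRY_ATTEMPTS`, but the code that consumes it is not part of this excerpt. The following is a minimal sketch of a bounded retry loop under stated assumptions: each attempt fetches a token and issues the request with it, and once the limit is reached the last error is rethrown. `withBoundedTokenRetry`, `fetchToken` and `request` are hypothetical names, not the actual `doWithToken` implementation.

```typescript
const MAX_TOKEN_RETRY_ATTEMPTS = 3;

// Hypothetical sketch: run `request` with a freshly fetched token, at most `maxAttempts` times.
async function withBoundedTokenRetry<T>(
  fetchToken: () => Promise<string>,
  request: (token: string) => Promise<T>,
  maxAttempts: number = MAX_TOKEN_RETRY_ATTEMPTS,
): Promise<T> {
  let lastError: unknown;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    // A failure while fetching the token itself is not retried; it propagates immediately.
    const token = await fetchToken();
    try {
      return await request(token);
    } catch (error) {
      // Remember the failure and try again with a new token.
      lastError = error;
    }
  }
  // All attempts failed: surface the last error to the caller.
  throw lastError;
}
```

Bounding the loop keeps a persistently failing request (for example, one rejected for reasons unrelated to the token) from retrying forever.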

An Auth Token is a series of symbols that serves to authenticate you. It is used in

- communication with the backend API and sent with every request to verify your identity.

+ communication with the Python API and sent with every request to verify your identity.

You should revoke it if somebody else has acquired your token or you have the suspicion this has happened.{" "}

-

- Read more

-

+ Read more

diff --git a/frontend/javascripts/admin/dataset/dataset_add_view.tsx b/frontend/javascripts/admin/dataset/dataset_add_view.tsx

index c1513f1f7c7..c7677831751 100644

--- a/frontend/javascripts/admin/dataset/dataset_add_view.tsx

+++ b/frontend/javascripts/admin/dataset/dataset_add_view.tsx

@@ -183,7 +183,7 @@ const alignBanner = (

/>

diff --git a/frontend/javascripts/admin/dataset/dataset_upload_view.tsx b/frontend/javascripts/admin/dataset/dataset_upload_view.tsx

index b4f235e9110..653d2ff1249 100644

--- a/frontend/javascripts/admin/dataset/dataset_upload_view.tsx

+++ b/frontend/javascripts/admin/dataset/dataset_upload_view.tsx

@@ -1243,7 +1243,7 @@ function FileUploadArea({

To learn more about the task system in WEBKNOSSOS,{" "}

diff --git a/frontend/javascripts/admin/user/permissions_and_teams_modal_view.tsx b/frontend/javascripts/admin/user/permissions_and_teams_modal_view.tsx

index ebd803d0dd2..33adc70da4f 100644

--- a/frontend/javascripts/admin/user/permissions_and_teams_modal_view.tsx

+++ b/frontend/javascripts/admin/user/permissions_and_teams_modal_view.tsx

@@ -243,7 +243,7 @@ function PermissionsAndTeamsModalView({

WEBKNOSSOS supports a variety of (remote){" "}

diff --git a/frontend/javascripts/libs/request.ts b/frontend/javascripts/libs/request.ts

index 1b1271e4846..25bf31657e5 100644

--- a/frontend/javascripts/libs/request.ts

+++ b/frontend/javascripts/libs/request.ts

@@ -311,7 +311,11 @@ class Request {

...message,

key: json.status.toString(),

}));

- if (showErrorToast) Toast.messages(messages);

+ if (showErrorToast) {

+ Toast.messages(messages); // Note: Toast.error internally logs to console

+ } else {

+ console.error(messages);

+ }

// Check whether the error chain mentions an url which belongs

// to a datastore. Then, ping the datastore

pingMentionedDataStores(text);
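Both `request.ts` hunks follow the same pattern: when `showErrorToast` is `false`, the error is now logged with `console.error` instead of being dropped silently. A minimal sketch of that branching, with the toast and log functions passed in as hypothetical stand-ins for `Toast.messages`/`Toast.error` and `console.error` so the logic can run outside the app; `reportRequestError` is an illustrative name.

```typescript
type Reporter = (message: string) => void;

// Route a failed request's message to exactly one reporter:
// the toast when requested, otherwise the logger.
function reportRequestError(
  message: string,
  showErrorToast: boolean,
  showToast: Reporter,
  logError: Reporter = (text) => console.error(text),
): void {
  if (showErrorToast) {
    // Per the comment in the diff, the toast helpers already log to the console themselves.
    showToast(message);
  } else {
    logError(message);
  }
}
```

A caller such as `sendRequestToServer` in `save_saga.ts`, which passes `showErrorToast: false`, would take the logging branch.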

@@ -319,7 +323,11 @@ class Request {

/* eslint-disable-next-line prefer-promise-reject-errors */

return Promise.reject({ ...json, url: requestedUrl });

} catch (_jsonError) {

- if (showErrorToast) Toast.error(text);

+ if (showErrorToast) {

+ Toast.error(text); // Note: Toast.error internally logs to console

+ } else {

+ console.error(`Request failed for ${requestedUrl}:`, text);

+ }

/* eslint-disable-next-line prefer-promise-reject-errors */

return Promise.reject({

diff --git a/frontend/javascripts/oxalis/model/sagas/save_saga.ts b/frontend/javascripts/oxalis/model/sagas/save_saga.ts

index e9e09a12a32..d2acc8ca949 100644

--- a/frontend/javascripts/oxalis/model/sagas/save_saga.ts

+++ b/frontend/javascripts/oxalis/model/sagas/save_saga.ts

@@ -196,6 +196,9 @@ export function* sendRequestToServer(

method: "POST",

data: compactedSaveQueue,

compress: process.env.NODE_ENV === "production",

+ // Suppressing error toast, as the doWithToken retry with personal token functionality should not show an error.

+ // Instead the error is logged and toggleErrorHighlighting should take care of showing an error to the user.

+ showErrorToast: false,

},

);

const endTime = Date.now();

diff --git a/frontend/javascripts/oxalis/view/action-bar/default-predict-workflow-template.ts b/frontend/javascripts/oxalis/view/action-bar/default-predict-workflow-template.ts

index fdfcc186963..ffaffb19b0c 100644

--- a/frontend/javascripts/oxalis/view/action-bar/default-predict-workflow-template.ts

+++ b/frontend/javascripts/oxalis/view/action-bar/default-predict-workflow-template.ts

@@ -1,8 +1,9 @@

export default `predict:

task: PredictTask

distribution:

- default:

- processes: 2

+ step:

+ strategy: sequential

+ num_io_threads: 5

inputs:

model: TO_BE_SET_BY_WORKER

config:

@@ -19,6 +20,6 @@ publish_dataset_meshes:

config:

name: TO_BE_SET_BY_WORKER

public_directory: TO_BE_SET_BY_WORKER

- webknossos_organization: TO_BE_SET_BY_WORKER

use_symlinks: False

- move_dataset_symlink_artifact: True`;

+ move_dataset_symlink_artifact: True

+ keep_symlinks_to: TO_BE_SET_BY_WORKER`;

diff --git a/frontend/javascripts/oxalis/view/action-bar/download_modal_view.tsx b/frontend/javascripts/oxalis/view/action-bar/download_modal_view.tsx

index 9be46f578f4..a0ef1d37e31 100644

--- a/frontend/javascripts/oxalis/view/action-bar/download_modal_view.tsx

+++ b/frontend/javascripts/oxalis/view/action-bar/download_modal_view.tsx

@@ -433,7 +433,7 @@ function _DownloadModalView({

>

For more information on how to work with {typeDependentFileName} visit the{" "}

diff --git a/frontend/javascripts/oxalis/view/right-border-tabs/advanced_search_popover.tsx b/frontend/javascripts/oxalis/view/right-border-tabs/advanced_search_popover.tsx

index 76bcb9e0399..54852103229 100644

--- a/frontend/javascripts/oxalis/view/right-border-tabs/advanced_search_popover.tsx

+++ b/frontend/javascripts/oxalis/view/right-border-tabs/advanced_search_popover.tsx

@@ -1,5 +1,5 @@

import { Input, Tooltip, Popover, Space, type InputRef } from "antd";

-import { DownOutlined, UpOutlined } from "@ant-design/icons";

+import { CheckSquareOutlined, DownOutlined, UpOutlined } from "@ant-design/icons";

import * as React from "react";

import memoizeOne from "memoize-one";

import ButtonComponent from "oxalis/view/components/button_component";

@@ -7,10 +7,13 @@ import Shortcut from "libs/shortcut_component";

import DomVisibilityObserver from "oxalis/view/components/dom_visibility_observer";

import { mod } from "libs/utils";

+const PRIMARY_COLOR = "var(--ant-color-primary)";

+

type Props

Organization Permissions{" "}

diff --git a/frontend/javascripts/dashboard/dashboard_task_list_view.tsx b/frontend/javascripts/dashboard/dashboard_task_list_view.tsx

index 899a5957c87..cec4d65b369 100644

--- a/frontend/javascripts/dashboard/dashboard_task_list_view.tsx

+++ b/frontend/javascripts/dashboard/dashboard_task_list_view.tsx

@@ -414,7 +414,7 @@ class DashboardTaskListView extends React.PureComponent

= {

data: S[];

searchKey: keyof S | ((item: S) => string);

onSelect: (arg0: S) => void;

+ onSelectAllMatches?: (arg0: S[]) => void;

children: React.ReactNode;

provideShortcut?: boolean;

targetId: string;

@@ -20,6 +23,7 @@ type State = {

isVisible: boolean;

searchQuery: string;

currentPosition: number | null | undefined;

+ areAllMatchesSelected: boolean;

};

export default class AdvancedSearchPopover<

@@ -29,6 +33,7 @@ export default class AdvancedSearchPopover<

isVisible: false,

searchQuery: "",

currentPosition: null,

+ areAllMatchesSelected: false,

};

getAvailableOptions = memoizeOne(

@@ -69,6 +74,7 @@ export default class AdvancedSearchPopover<

currentPosition = mod(currentPosition + offset, numberOfAvailableOptions);

this.setState({

currentPosition,

+ areAllMatchesSelected: false,

});

this.props.onSelect(availableOptions[currentPosition]);

};
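The new `areAllMatchesSelected` state flag is cleared whenever the user steps to a single match. A hypothetical model of that state transition, written as pure functions so it can be tested in isolation: `SearchState`, `moveSelection` and `selectAllMatches` are illustrative names, `mod` is a local stand-in assumed to behave like the helper from `libs/utils` (a non-negative remainder), and the handling of a missing position is an assumption because that part of the component is not shown.

```typescript
// Hypothetical model of the popover state touched by the diff.
type SearchState = {
  currentPosition: number | null;
  areAllMatchesSelected: boolean;
};

// Stand-in for `mod` from libs/utils: assumed to return a non-negative remainder.
function mod(value: number, divisor: number): number {
  return ((value % divisor) + divisor) % divisor;
}

// Stepping to the next/previous match selects a single item, so the "all matches" flag is cleared.
function moveSelection(state: SearchState, offset: number, optionCount: number): SearchState {
  if (optionCount === 0) {
    return state; // Nothing to step through.
  }
  // Assumption: without a position, stepping forward starts at the first match, backward at the last.
  const start = state.currentPosition ?? (offset > 0 ? -1 : 0);
  return { currentPosition: mod(start + offset, optionCount), areAllMatchesSelected: false };
}

// Hypothetical counterpart for the new `onSelectAllMatches` prop: mark every match as selected.
function selectAllMatches(state: SearchState): SearchState {
  return { ...state, areAllMatchesSelected: true };
}
```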

@@ -101,7 +107,7 @@ export default class AdvancedSearchPopover<

render() {

const { data, searchKey, provideShortcut, children, targetId } = this.props;

- const { searchQuery, isVisible } = this.state;

+ const { searchQuery, isVisible, areAllMatchesSelected } = this.state;

let { currentPosition } = this.state;

const availableOptions = this.getAvailableOptions(data, searchQuery, searchKey);

const numberOfAvailableOptions = availableOptions.length;

@@ -109,13 +115,17 @@ export default class AdvancedSearchPopover<

currentPosition =

currentPosition == null ? -1 : Math.min(currentPosition, numberOfAvailableOptions - 1);

const hasNoResults = numberOfAvailableOptions === 0;

- const hasMultipleResults = numberOfAvailableOptions > 1;

+ const availableOptionsToSelectAllMatches = availableOptions.filter(

+ (result) => result.type === "Tree" || result.type === "segment",

+ );

+ const isSelectAllMatchesDisabled = availableOptionsToSelectAllMatches.length < 2;

const additionalInputStyle =

hasNoResults && searchQuery !== ""

? {

color: "red",

}

: {};

+ const selectAllMatchesButtonColor = areAllMatchesSelected ? PRIMARY_COLOR : undefined;

return (