diff --git a/.github/workflows/deploy.yml b/.github/workflows/deploy.yml

index f6f600ae..5449904d 100644

--- a/.github/workflows/deploy.yml

+++ b/.github/workflows/deploy.yml

@@ -79,19 +79,19 @@ jobs:

runs-on: ${{ matrix.os }}

strategy:

matrix:

- python-version: ["3.8", "3.9", "3.10"]

+ python-version: ["3.9", "3.10", "3.11"]

os: [ubuntu-latest]

include:

- os: macos-11

- python-version: "3.10"

+ python-version: "3.11"

- os: macos-latest

- python-version: "3.10"

+ python-version: "3.11"

- os: windows-2019

- python-version: "3.10"

+ python-version: "3.11"

- os: windows-latest

- python-version: "3.10"

+ python-version: "3.11"

- os: ubuntu-20.04

- python-version: "3.10"

+ python-version: "3.11"

steps:

- name: Checkout source

diff --git a/.github/workflows/test.yml b/.github/workflows/test.yml

index 30ddb2b0..d1992835 100644

--- a/.github/workflows/test.yml

+++ b/.github/workflows/test.yml

@@ -50,19 +50,19 @@ jobs:

runs-on: ${{ matrix.os }}

strategy:

matrix:

- python-version: ["3.8", "3.9", "3.10"]

+ python-version: ["3.9", "3.10", "3.11"]

os: [ubuntu-latest]

include:

- os: macos-11

- python-version: "3.10"

+ python-version: "3.11"

- os: macos-latest

- python-version: "3.10"

+ python-version: "3.11"

- os: windows-2019

- python-version: "3.10"

+ python-version: "3.11"

- os: windows-latest

- python-version: "3.10"

+ python-version: "3.11"

- os: ubuntu-20.04

- python-version: "3.10"

+ python-version: "3.11"

steps:

- uses: actions/checkout@v3

@@ -80,23 +80,16 @@ jobs:

cache: "pip"

cache-dependency-path: "pyproject.toml"

- - uses: conda-incubator/setup-miniconda@v2

- with:

- auto-update-conda: true

- python-version: ${{ matrix.python-version }}

-

# these libraries enable testing on Qt on linux

- uses: tlambert03/setup-qt-libs@v1

- # note: if you need dependencies from conda, considering using

- # setup-miniconda: https://github.com/conda-incubator/setup-miniconda

- # and

- # tox-conda: https://github.com/tox-dev/tox-conda

- name: Install dependencies

- run: python -m pip install "tox<4" tox-gh-actions tox-conda

+ run: python -m pip install tox tox-gh-actions

- name: Test with tox

- run: tox

+ run: tox run

+ env:

+ OS: ${{ matrix.os }}

- name: Coverage

uses: codecov/codecov-action@v3

diff --git a/.gitignore b/.gitignore

index f9c3d531..cddb80a1 100644

--- a/.gitignore

+++ b/.gitignore

@@ -1,4 +1,5 @@

__pycache__

+_version.py

.cache

.coverage

.coverage.*

@@ -54,8 +55,7 @@ instance

lib

lib64

local_settings.py

-models/MDCK_*

-models/test_config.json

+models

nosetests.xml

notebooks

parts

@@ -63,5 +63,5 @@ pip-delete-this-directory.txt

pip-log.txt

sdist

target

+user_config.json

var

-_version.py

diff --git a/.napari-hub/DESCRIPTION.md b/.napari-hub/DESCRIPTION.md

index d12f6534..067dc216 100644

--- a/.napari-hub/DESCRIPTION.md

+++ b/.napari-hub/DESCRIPTION.md

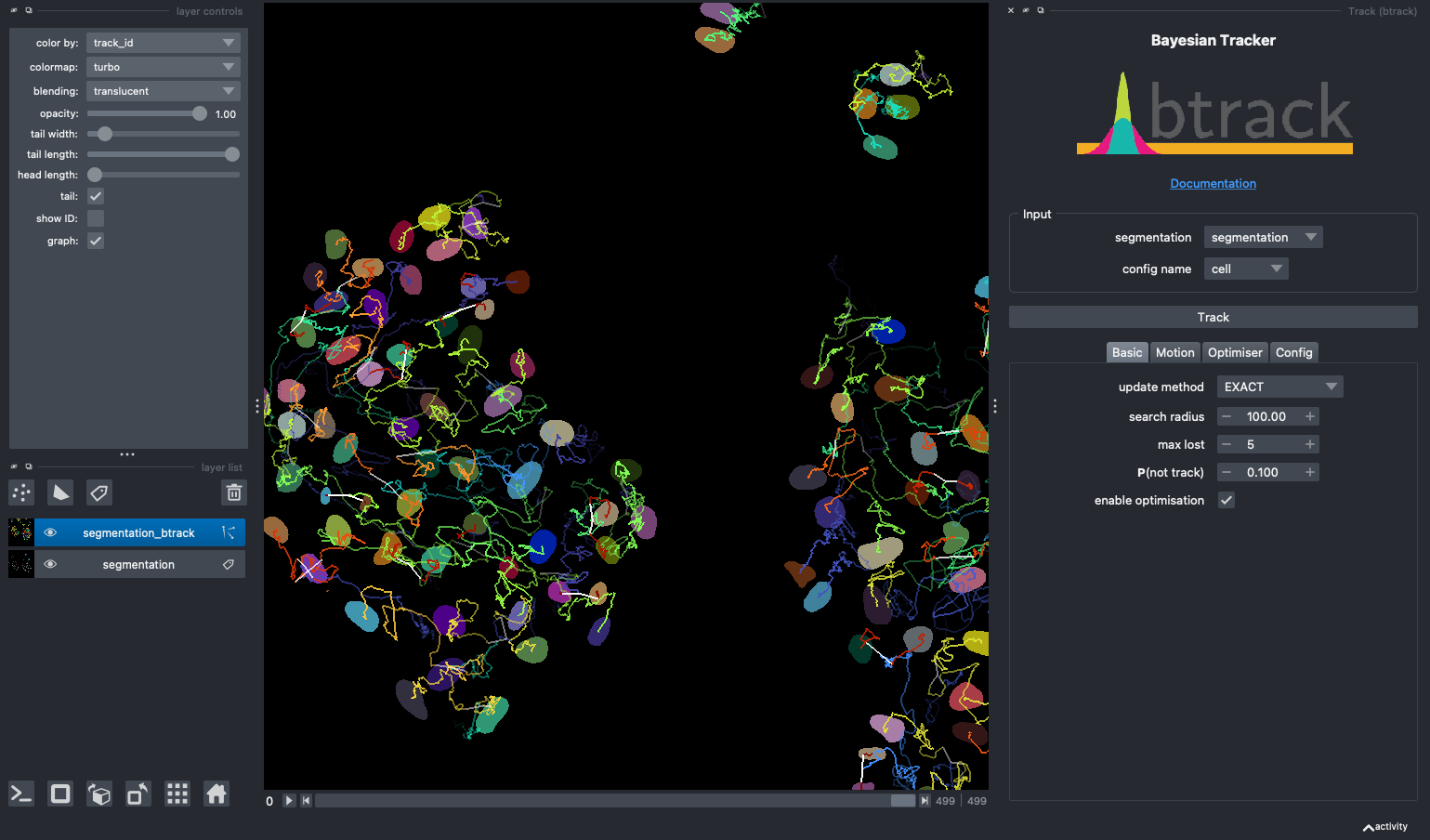

@@ -19,7 +19,7 @@ linkages.

We developed `btrack` for cell tracking in time-lapse microscopy data.

-

+

+## Installation

-## associated plugins

+To install the `napari` plugin associated with `btrack` run the command.

+

+```sh

+pip install btrack[napari]

+```

+

+## Example data

+

+You can try out the btrack plugin using sample data:

+

+```sh

+python btrack/napari/examples/show_btrack_widget.py

+```

+

+which will launch `napari` and the `btrack` widget, along with some sample data.

+

+

+## Setting parameters

+

+There are detailed tips and instructions on parameter settings over at the [documentation](https://btrack.readthedocs.io/en/latest/user_guide/index.html).

+

+

+## Associated plugins

* [napari-arboretum](https://www.napari-hub.org/plugins/napari-arboretum) - Napari plugin to enable track graph and lineage tree visualization.

diff --git a/.pre-commit-config.yaml b/.pre-commit-config.yaml

index 757dcee2..a8de317e 100644

--- a/.pre-commit-config.yaml

+++ b/.pre-commit-config.yaml

@@ -1,10 +1,10 @@

repos:

- repo: https://github.com/charliermarsh/ruff-pre-commit

- rev: v0.0.262

+ rev: v0.0.278

hooks:

- id: ruff

- repo: https://github.com/Lucas-C/pre-commit-hooks

- rev: v1.5.1

+ rev: v1.5.3

hooks:

- id: remove-tabs

exclude: Makefile|docs/Makefile|\.bat$

@@ -19,19 +19,18 @@ repos:

- id: end-of-file-fixer

- id: mixed-line-ending

args: [--fix=lf]

- - id: requirements-txt-fixer

- id: trailing-whitespace

args: [--markdown-linebreak-ext=md]

- repo: https://github.com/psf/black

- rev: 23.3.0

+ rev: 23.7.0

hooks:

- id: black

- repo: https://github.com/pappasam/toml-sort

- rev: v0.23.0

+ rev: v0.23.1

hooks:

- id: toml-sort-fix

- repo: https://github.com/pre-commit/mirrors-clang-format

- rev: v15.0.7

+ rev: v16.0.6

hooks:

- id: clang-format

types_or: [c++, c, cuda]

diff --git a/.readthedocs.yaml b/.readthedocs.yaml

index d15aa007..db1289be 100644

--- a/.readthedocs.yaml

+++ b/.readthedocs.yaml

@@ -9,7 +9,7 @@ version: 2

build:

os: ubuntu-20.04

tools:

- python: "3.10"

+ python: "3.11"

# Optionally declare the Python requirements required to build your docs

python:

diff --git a/MANIFEST.in b/MANIFEST.in

index f3c33ca5..8e90931e 100644

--- a/MANIFEST.in

+++ b/MANIFEST.in

@@ -1 +1,5 @@

graft btrack/libs

+prune docs

+prune tests

+prune models

+prune examples

diff --git a/Makefile b/Makefile

index 1e90e77e..c7afcab0 100644

--- a/Makefile

+++ b/Makefile

@@ -18,15 +18,15 @@ ifeq ($(UNAME), Darwin)

# do something OSX

CXX = clang++ -arch x86_64 -arch arm64

EXT = dylib

- XLD_FLAGS = -arch x86_64 -arch arm64

+ XLDFLAGS =

endif

NVCC = nvcc

-# If your compiler is a bit older you may need to change -std=c++17 to -std=c++0x

+# If your compiler is a bit older you may need to change -std=c++11 to -std=c++0x

#-I/usr/include/python2.7 -L/usr/lib/python2.7 # -O3

LLDBFLAGS =

-CXXFLAGS = -c -std=c++17 -m64 -fPIC -I"./btrack/include" \

+CXXFLAGS = -c -std=c++11 -m64 -fPIC -I"./btrack/include" \

-DDEBUG=false -DBUILD_SHARED_LIB

OPTFLAGS = -O3

LDFLAGS = -shared $(XLDFLAGS)

diff --git a/README.md b/README.md

index d972a8c1..9b89613d 100644

--- a/README.md

+++ b/README.md

@@ -1,5 +1,5 @@

[](https://pypi.org/project/btrack)

-[](https://pepy.tech/project/btrack)

+[](https://pepy.tech/project/btrack)

[](https://github.com/psf/black)

[](https://github.com/quantumjot/btrack/actions/workflows/test.yml)

[](https://github.com/pre-commit/pre-commit)

@@ -32,22 +32,12 @@ Note that `btrack<=0.5.0` was built against earlier version of

[Eigen](https://eigen.tuxfamily.org) which used `C++=11`, as of `btrack==0.5.1`

it is now built against `C++=17`.

-#### Installing the latest stable version

+### Installing the latest stable version

```sh

pip install btrack

```

-## Installing on M1 Mac/Apple Silicon/osx-arm64

-

-Best done with [conda](https://github.com/conda-forge/miniforge)

-

-```sh

-conda env create -f environment.yml

-conda activate btrack

-pip install btrack

-```

-

## Usage examples

Visit [btrack documentation](https://btrack.readthedocs.io) to learn how to use it and see other examples.

diff --git a/btrack/btypes.py b/btrack/btypes.py

index 6921ff3e..b1c3563b 100644

--- a/btrack/btypes.py

+++ b/btrack/btypes.py

@@ -17,9 +17,10 @@

import ctypes

from collections import OrderedDict

-from typing import Any, Dict, List, NamedTuple, Optional, Tuple

+from typing import Any, ClassVar, NamedTuple, Optional

import numpy as np

+from numpy import typing as npt

from . import constants

@@ -27,9 +28,9 @@

class ImagingVolume(NamedTuple):

- x: Tuple[float, float]

- y: Tuple[float, float]

- z: Optional[Tuple[float, float]] = None

+ x: tuple[float, float]

+ y: tuple[float, float]

+ z: Optional[tuple[float, float]] = None

@property

def ndim(self) -> int:

@@ -75,7 +76,7 @@ class PyTrackObject(ctypes.Structure):

Attributes

----------

- properties : Dict[str, Union[int, float]]

+ properties : dict[str, Union[int, float]]

Dictionary of properties associated with this object.

state : constants.States

A state label for the object. See `constants.States`

@@ -86,7 +87,7 @@ class PyTrackObject(ctypes.Structure):

"""

- _fields_ = [

+ _fields_: ClassVar[list] = [

("ID", ctypes.c_long),

("x", ctypes.c_double),

("y", ctypes.c_double),

@@ -108,13 +109,11 @@ def __init__(self):

self._properties = {}

@property

- def properties(self) -> Dict[str, Any]:

- if self.dummy:

- return {}

- return self._properties

+ def properties(self) -> dict[str, Any]:

+ return {} if self.dummy else self._properties

@properties.setter

- def properties(self, properties: Dict[str, Any]):

+ def properties(self, properties: dict[str, Any]):

"""Set the object properties."""

self._properties.update(properties)

@@ -122,17 +121,15 @@ def properties(self, properties: Dict[str, Any]):

def state(self) -> constants.States:

return constants.States(self.label)

- def set_features(self, keys: List[str]) -> None:

+ def set_features(self, keys: list[str]) -> None:

"""Set features to be used by the tracking update."""

if not keys:

self.n_features = 0

return

- if not all(k in self.properties for k in keys):

- missing_features = list(

- set(keys).difference(set(self.properties.keys()))

- )

+ if any(k not in self.properties for k in keys):

+ missing_features = list(set(keys).difference(set(self.properties.keys())))

raise KeyError(f"Feature(s) missing: {missing_features}.")

# store a reference to the numpy array so that Python maintains

@@ -146,18 +143,18 @@ def set_features(self, keys: List[str]) -> None:

self.features = np.ctypeslib.as_ctypes(self._features)

self.n_features = len(self._features)

- def to_dict(self) -> Dict[str, Any]:

+ def to_dict(self) -> dict[str, Any]:

"""Return a dictionary of the fields and their values."""

node = {

k: getattr(self, k)

for k, _ in PyTrackObject._fields_

if k not in ("features", "n_features")

}

- node.update(self.properties)

+ node |= self.properties

return node

@staticmethod

- def from_dict(properties: Dict[str, Any]) -> PyTrackObject:

+ def from_dict(properties: dict[str, Any]) -> PyTrackObject:

"""Build an object from a dictionary."""

obj = PyTrackObject()

fields = dict(PyTrackObject._fields_)

@@ -174,9 +171,7 @@ def from_dict(properties: Dict[str, Any]) -> PyTrackObject:

setattr(obj, key, float(new_data))

# we can add any extra details to the properties dictionary

- obj.properties = {

- k: v for k, v in properties.items() if k not in fields.keys()

- }

+ obj.properties = {k: v for k, v in properties.items() if k not in fields}

return obj

def __repr__(self):

@@ -221,7 +216,7 @@ class PyTrackingInfo(ctypes.Structure):

"""

- _fields_ = [

+ _fields_: ClassVar[list] = [

("error", ctypes.c_uint),

("n_tracks", ctypes.c_uint),

("n_active", ctypes.c_uint),

@@ -235,12 +230,11 @@ class PyTrackingInfo(ctypes.Structure):

("complete", ctypes.c_bool),

]

- def to_dict(self) -> Dict[str, Any]:

+ def to_dict(self) -> dict[str, Any]:

"""Return a dictionary of the statistics"""

# TODO(arl): make this more readable by converting seconds, ms

# and interpreting error messages?

- stats = {k: getattr(self, k) for k, typ in PyTrackingInfo._fields_}

- return stats

+ return {k: getattr(self, k) for k, typ in PyTrackingInfo._fields_}

@property

def tracker_active(self) -> bool:

@@ -269,7 +263,7 @@ class PyGraphEdge(ctypes.Structure):

source timestamp, we just assume that the tracker has done it's job.

"""

- _fields_ = [

+ _fields_: ClassVar[list] = [

("source", ctypes.c_long),

("target", ctypes.c_long),

("score", ctypes.c_double),

@@ -278,8 +272,7 @@ class PyGraphEdge(ctypes.Structure):

def to_dict(self) -> dict[str, Any]:

"""Return a dictionary describing the edge."""

- edge = {k: getattr(self, k) for k, _ in PyGraphEdge._fields_}

- return edge

+ return {k: getattr(self, k) for k, _ in PyGraphEdge._fields_}

class Tracklet:

@@ -312,6 +305,8 @@ class Tracklet:

A list specifying which objects are dummy objects inserted by the tracker.

parent : int, list

The identifiers of the parent track(s).

+ generation : int

+ If specified, the generational depth of the tracklet releative to the root.

refs : list[int]

Returns a list of :py:class:`btrack.btypes.PyTrackObject` identifiers

used to build the track. Useful for indexing back into the original

@@ -323,7 +318,7 @@ class Tracklet:

softmax : list[float]

If defined, return the softmax score for the label of each object in the

track.

- properties : Dict[str, np.ndarray]

+ properties : dict[str, npt.NDArray]

Return a dictionary of track properties derived from

:py:class:`btrack.btypes.PyTrackObject` properties.

root : int,

@@ -336,7 +331,7 @@ class Tracklet:

First time stamp of track.

stop : int, float

Last time stamp of track.

- kalman : np.ndarray

+ kalman : npt.NDArray

Return the complete output of the kalman filter for this track. Note,

that this may not have been returned while from the tracker. See

:py:attr:`btrack.BayesianTracker.return_kalman` for more details.

@@ -353,20 +348,20 @@ class Tracklet:

x values.

"""

- def __init__(

+ def __init__( # noqa: PLR0913

self,

ID: int,

- data: List[PyTrackObject],

+ data: list[PyTrackObject],

*,

parent: Optional[int] = None,

- children: Optional[List[int]] = None,

+ children: Optional[list[int]] = None,

fate: constants.Fates = constants.Fates.UNDEFINED,

):

assert all(isinstance(o, PyTrackObject) for o in data)

self.ID = ID

self._data = data

- self._kalman = None

+ self._kalman = np.empty(0)

self.root = None

self.parent = parent

@@ -385,10 +380,10 @@ def _repr_html_(self):

return _pandas_html_repr(self)

@property

- def properties(self) -> Dict[str, np.ndarray]:

+ def properties(self) -> dict:

"""Return the properties of the objects."""

# find the set of keys, then grab the properties

- keys = set()

+ keys: set = set()

for obj in self._data:

keys.update(obj.properties.keys())

@@ -397,11 +392,7 @@ def properties(self) -> Dict[str, np.ndarray]:

# this to fill the properties array with NaN for dummy objects

property_shapes = {

k: next(

- (

- np.asarray(o.properties[k]).shape

- for o in self._data

- if not o.dummy

- ),

+ (np.asarray(o.properties[k]).shape for o in self._data if not o.dummy),

None,

)

for k in keys

@@ -431,7 +422,7 @@ def properties(self) -> Dict[str, np.ndarray]:

return properties

@properties.setter

- def properties(self, properties: Dict[str, np.ndarray]):

+ def properties(self, properties: dict[str, npt.NDArray]):

"""Store properties associated with this Tracklet."""

# TODO(arl): this will need to set the object properties

pass

@@ -489,51 +480,49 @@ def softmax(self) -> list:

@property

def is_root(self) -> bool:

- return (

- self.parent == 0 or self.parent is None or self.parent == self.ID

- )

+ return self.parent == 0 or self.parent is None or self.parent == self.ID

@property

def is_leaf(self) -> bool:

return not self.children

@property

- def kalman(self) -> np.ndarray:

+ def kalman(self) -> npt.NDArray:

return self._kalman

@kalman.setter

- def kalman(self, data: np.ndarray) -> None:

+ def kalman(self, data: npt.NDArray) -> None:

assert isinstance(data, np.ndarray)

self._kalman = data

- def mu(self, index: int) -> np.ndarray:

+ def mu(self, index: int) -> npt.NDArray:

"""Return the Kalman filter mu. Note that we are only returning the mu

for the positions (e.g. 3x1)."""

return self.kalman[index, 1:4].reshape(3, 1)

- def covar(self, index: int) -> np.ndarray:

+ def covar(self, index: int) -> npt.NDArray:

"""Return the Kalman filter covariance matrix. Note that we are

only returning the covariance matrix for the positions (e.g. 3x3)."""

return self.kalman[index, 4:13].reshape(3, 3)

- def predicted(self, index: int) -> np.ndarray:

+ def predicted(self, index: int) -> npt.NDArray:

"""Return the motion model prediction for the given timestep."""

return self.kalman[index, 13:].reshape(3, 1)

def to_dict(

self, properties: list = constants.DEFAULT_EXPORT_PROPERTIES

- ) -> Dict[str, Any]:

+ ) -> dict[str, Any]:

"""Return a dictionary of the tracklet which can be used for JSON

export. This is an ordered dictionary for nicer JSON output.

"""

- trk_tuple = tuple([(p, getattr(self, p)) for p in properties])

+ trk_tuple = tuple((p, getattr(self, p)) for p in properties)

data = OrderedDict(trk_tuple)

- data.update(self.properties)

+ data |= self.properties

return data

def to_array(

self, properties: list = constants.DEFAULT_EXPORT_PROPERTIES

- ) -> np.ndarray:

+ ) -> npt.NDArray:

"""Return a representation of the trackled as a numpy array."""

data = self.to_dict(properties)

tmp_track = []

@@ -544,10 +533,10 @@ def to_array(

np_values = np.reshape(np_values, (len(self), -1))

tmp_track.append(np_values)

- tmp_track = np.concatenate(tmp_track, axis=-1)

- assert tmp_track.shape[0] == len(self)

- assert tmp_track.ndim == constants.Dimensionality.TWO

- return tmp_track.astype(np.float32)

+ tmp_track_arr = np.concatenate(tmp_track, axis=-1)

+ assert tmp_track_arr.shape[0] == len(self)

+ assert tmp_track_arr.ndim == constants.Dimensionality.TWO

+ return tmp_track_arr.astype(np.float32)

def in_frame(self, frame: int) -> bool:

"""Return true or false as to whether the track is in the frame."""

@@ -558,7 +547,7 @@ def trim(self, frame: int, tail: int = 75) -> Tracklet:

d = [o for o in self._data if o.t <= frame and o.t >= frame - tail]

return Tracklet(self.ID, d)

- def LBEP(self) -> Tuple[int]:

+ def LBEP(self) -> tuple[int, list, list, Optional[int], None, int]:

"""Return an LBEP table summarising the track."""

return (

self.ID,

@@ -576,8 +565,7 @@ def _pandas_html_repr(obj):

import pandas as pd

except ImportError:

return (

- "Install pandas for nicer, tabular rendering.

"

- + obj.__repr__()

+ "Install pandas for nicer, tabular rendering.

" + obj.__repr__()

)

obj_as_dict = obj.to_dict()

diff --git a/btrack/config.py b/btrack/config.py

index f6db4f17..c5aa15e3 100644

--- a/btrack/config.py

+++ b/btrack/config.py

@@ -2,7 +2,7 @@

import logging

import os

from pathlib import Path

-from typing import List, Optional

+from typing import ClassVar, Optional

import numpy as np

from pydantic import BaseModel, conlist, validator

@@ -66,6 +66,9 @@ class TrackerConfig(BaseModel):

tracking_updates : list

A list of features to be used for tracking, such as MOTION or VISUAL.

Must have at least one entry.

+ enable_optimisation

+ A flag which, if `False`, will report a warning to the user if they then

+ subsequently run the `BayesianTracker.optimise()` step.

Notes

-----

@@ -84,7 +87,7 @@ class TrackerConfig(BaseModel):

volume: Optional[ImagingVolume] = None

update_method: constants.BayesianUpdates = constants.BayesianUpdates.EXACT

optimizer_options: dict = constants.GLPK_OPTIONS

- features: List[str] = []

+ features: list[str] = []

tracking_updates: conlist(

constants.BayesianUpdateFeatures,

min_items=1,

@@ -92,20 +95,18 @@ class TrackerConfig(BaseModel):

) = [

constants.BayesianUpdateFeatures.MOTION,

]

+ enable_optimisation = True

@validator("volume", pre=True, always=True)

def _parse_volume(cls, v):

- if isinstance(v, tuple):

- return ImagingVolume(*v)

- return v

+ return ImagingVolume(*v) if isinstance(v, tuple) else v

@validator("tracking_updates", pre=True, always=True)

def _parse_tracking_updates(cls, v):

_tracking_updates = v

if all(isinstance(k, str) for k in _tracking_updates):

_tracking_updates = [

- constants.BayesianUpdateFeatures[k.upper()]

- for k in _tracking_updates

+ constants.BayesianUpdateFeatures[k.upper()] for k in _tracking_updates

]

_tracking_updates = list(set(_tracking_updates))

return _tracking_updates

@@ -113,7 +114,7 @@ def _parse_tracking_updates(cls, v):

class Config:

arbitrary_types_allowed = True

validate_assignment = True

- json_encoders = {

+ json_encoders: ClassVar[dict] = {

np.ndarray: lambda x: x.ravel().tolist(),

}

diff --git a/btrack/constants.py b/btrack/constants.py

index cbe954f3..2f948693 100644

--- a/btrack/constants.py

+++ b/btrack/constants.py

@@ -69,7 +69,7 @@ class Fates(enum.Enum):

@enum.unique

-class States(enum.Enum):

+class States(enum.IntEnum):

INTERPHASE = 0

PROMETAPHASE = 1

METAPHASE = 2

@@ -96,3 +96,4 @@ class Dimensionality(enum.IntEnum):

TWO: int = 2

THREE: int = 3

FOUR: int = 4

+ FIVE: int = 5

diff --git a/btrack/core.py b/btrack/core.py

index 177d7c84..a220eebc 100644

--- a/btrack/core.py

+++ b/btrack/core.py

@@ -3,9 +3,10 @@

import logging

import os

import warnings

-from typing import List, Optional, Tuple, Union

+from typing import Optional, Union

import numpy as np

+from numpy import typing as npt

from btrack import _version

@@ -49,7 +50,7 @@ class BayesianTracker:

:py:meth:`btrack.btypes.ImagingVolume` for more details.

frame_range : tuple

The frame range for tracking, essentially the last dimension of volume.

- LBEP : List[List]

+ LBEP : list[List]

Return an LBEP table of the track lineages.

configuration : config.TrackerConfig

Return the current configuration.

@@ -137,12 +138,10 @@ def __init__(

self._config = config.TrackerConfig(verbose=verbose)

# silently set the update method to EXACT

- self._lib.set_update_mode(

- self._engine, self.configuration.update_method.value

- )

+ self._lib.set_update_mode(self._engine, self.configuration.update_method.value)

# default parameters and space for stored objects

- self._objects: List[btypes.PyTrackObject] = []

+ self._objects: list[btypes.PyTrackObject] = []

self._frame_range = [0, 0]

def __enter__(self):

@@ -224,7 +223,7 @@ def _max_search_radius(self, max_search_radius: int):

"""Set the maximum search radius for fast cost updates."""

self._lib.max_search_radius(self._engine, max_search_radius)

- def _update_method(self, method: Union[str, constants.BayesianUpdates]):

+ def _update_method(self, method: constants.BayesianUpdates):

"""Set the method for updates, EXACT, APPROXIMATE, CUDA etc..."""

self._lib.set_update_mode(self._engine, method.value)

@@ -243,12 +242,10 @@ def n_tracks(self) -> int:

@property

def n_dummies(self) -> int:

"""Return the number of dummy objects (negative ID)."""

- return len(

- [d for d in itertools.chain.from_iterable(self.refs) if d < 0]

- )

+ return len([d for d in itertools.chain.from_iterable(self.refs) if d < 0])

@property

- def tracks(self) -> List[btypes.Tracklet]:

+ def tracks(self) -> list[btypes.Tracklet]:

"""Return a sorted list of tracks, default is to sort by increasing

length."""

return [self[i] for i in range(self.n_tracks)]

@@ -273,8 +270,7 @@ def refs(self):

def dummies(self):

"""Return a list of dummy objects."""

return [

- self._lib.get_dummy(self._engine, -(i + 1))

- for i in range(self.n_dummies)

+ self._lib.get_dummy(self._engine, -(i + 1)) for i in range(self.n_dummies)

]

@property

@@ -305,7 +301,7 @@ def LBEP(self):

"""

return utils._lbep_table(self.tracks)

- def _sort(self, tracks: List[btypes.Tracklet]) -> List[btypes.Tracklet]:

+ def _sort(self, tracks: list[btypes.Tracklet]) -> list[btypes.Tracklet]:

"""Return a sorted list of tracks"""

return sorted(tracks, key=lambda t: len(t), reverse=True)

@@ -374,18 +370,16 @@ def _object_model(self, model: models.ObjectModel) -> None:

)

@property

- def frame_range(self) -> Tuple[int, int]:

+ def frame_range(self) -> tuple:

"""Return the frame range."""

return tuple(self.configuration.frame_range)

@property

- def objects(self) -> List[btypes.PyTrackObject]:

+ def objects(self) -> list[btypes.PyTrackObject]:

"""Return the list of objects added through the append method."""

return self._objects

- def append(

- self, objects: Union[List[btypes.PyTrackObject], np.ndarray]

- ) -> None:

+ def append(self, objects: Union[list[btypes.PyTrackObject], npt.NDArray]) -> None:

"""Append a single track object, or list of objects to the stack. Note

that the tracker will automatically order these by frame number, so the

order here does not matter. This means several datasets can be

@@ -393,7 +387,7 @@ def append(

Parameters

----------

- objects : list, np.ndarray

+ objects : list, npt.NDArray

A list of objects to track.

"""

@@ -424,9 +418,7 @@ def _stats(self, info_ptr: ctypes.pointer) -> btypes.PyTrackingInfo:

return info_ptr.contents

def track_interactive(self, *args, **kwargs) -> None:

- logger.warning(

- "`track_interactive` will be deprecated. Use `track` instead."

- )

+ logger.warning("`track_interactive` will be deprecated. Use `track` instead.")

return self.track(*args, **kwargs)

def track(

@@ -434,7 +426,7 @@ def track(

*,

step_size: int = 100,

tracking_updates: Optional[

- List[Union[str, constants.BayesianUpdateFeatures]]

+ list[Union[str, constants.BayesianUpdateFeatures]]

] = None,

) -> None:

"""Run the tracking in an interactive mode.

@@ -462,7 +454,7 @@ def track(

# bitwise OR is equivalent to int sum here

self._lib.set_update_features(

self._engine,

- sum([int(f.value) for f in self.configuration.tracking_updates]),

+ sum(int(f.value) for f in self.configuration.tracking_updates),

)

stats = self.step()

@@ -488,8 +480,7 @@ def track(

f"(in {stats.t_total_time}s)"

)

logger.info(

- f" - Inserted {self.n_dummies} dummy objects to fill "

- "tracking gaps"

+ f" - Inserted {self.n_dummies} dummy objects to fill tracking gaps"

)

def step(self, n_steps: int = 1) -> Optional[btypes.PyTrackingInfo]:

@@ -499,7 +490,7 @@ def step(self, n_steps: int = 1) -> Optional[btypes.PyTrackingInfo]:

return None

return self._stats(self._lib.step(self._engine, n_steps))

- def hypotheses(self) -> List[hypothesis.Hypothesis]:

+ def hypotheses(self) -> list[hypothesis.Hypothesis]:

"""Calculate and return hypotheses using the hypothesis engine."""

if not self.hypothesis_model:

@@ -513,19 +504,13 @@ def hypotheses(self) -> List[hypothesis.Hypothesis]:

)

# now get all of the hypotheses

- h = [

- self._lib.get_hypothesis(self._engine, h)

- for h in range(n_hypotheses)

- ]

- return h

+ return [self._lib.get_hypothesis(self._engine, h) for h in range(n_hypotheses)]

def optimize(self, **kwargs):

"""Proxy for `optimise` for our American friends ;)"""

return self.optimise(**kwargs)

- def optimise(

- self, options: Optional[dict] = None

- ) -> List[hypothesis.Hypothesis]:

+ def optimise(self, options: Optional[dict] = None) -> list[hypothesis.Hypothesis]:

"""Optimize the tracks.

Parameters

@@ -544,19 +529,18 @@ def optimise(

optimiser and then performs track merging, removal of track fragments,

renumbering and assignment of branches.

"""

+ if not self.configuration.enable_optimisation:

+ logger.warning("The `enable_optimisation` flag is set to False")

+

logger.info(f"Loading hypothesis model: {self.hypothesis_model.name}")

- logger.info(

- f"Calculating hypotheses (relax: {self.hypothesis_model.relax})..."

- )

+ logger.info(f"Calculating hypotheses (relax: {self.hypothesis_model.relax})...")

hypotheses = self.hypotheses()

# if we have not been provided with optimizer options, use the default

# from the configuration.

options = (

- options

- if options is not None

- else self.configuration.optimizer_options

+ options if options is not None else self.configuration.optimizer_options

)

# if we don't have any hypotheses return

@@ -673,25 +657,22 @@ def export(

A string that represents how the data has been filtered prior to

tracking, e.g. using the object property `area>100`

"""

- export_delegator(

- filename, self, obj_type=obj_type, filter_by=filter_by

- )

+ export_delegator(filename, self, obj_type=obj_type, filter_by=filter_by)

def to_napari(

self,

replace_nan: bool = True, # noqa: FBT001,FBT002

ndim: Optional[int] = None,

- ) -> Tuple[np.ndarray, dict, dict]:

+ ) -> tuple[npt.NDArray, dict, dict]:

"""Return the data in a format for a napari tracks layer.

See :py:meth:`btrack.utils.tracks_to_napari`."""

+ assert self.configuration.volume is not None

ndim = self.configuration.volume.ndim if ndim is None else ndim

- return utils.tracks_to_napari(

- self.tracks, ndim=ndim, replace_nan=replace_nan

- )

+ return utils.tracks_to_napari(self.tracks, ndim=ndim, replace_nan=replace_nan)

- def candidate_graph_edges(self) -> List[btypes.PyGraphEdge]:

+ def candidate_graph_edges(self) -> list[btypes.PyGraphEdge]:

"""Return the edges from the full candidate graph."""

num_edges = self._lib.num_edges(self._engine)

if num_edges < 1:

@@ -700,7 +681,4 @@ def candidate_graph_edges(self) -> List[btypes.PyGraphEdge]:

"``config.store_candidate_graph`` is set to "

f"{self.configuration.store_candidate_graph}"

)

- return [

- self._lib.get_graph_edge(self._engine, idx)

- for idx in range(num_edges)

- ]

+ return [self._lib.get_graph_edge(self._engine, idx) for idx in range(num_edges)]

diff --git a/btrack/datasets.py b/btrack/datasets.py

index 585ae0c0..2d89763e 100644

--- a/btrack/datasets.py

+++ b/btrack/datasets.py

@@ -1,28 +1,24 @@

import os

-from typing import List

-import numpy as np

import pooch

+from numpy import typing as npt

from skimage.io import imread

-from .btypes import PyTrackObject

-from .io import import_CSV

+from .btypes import PyTrackObject, Tracklet

+from .io import HDF5FileHandler, import_CSV

-BASE_URL = (

- "https://raw.githubusercontent.com/lowe-lab-ucl/btrack-examples/main/"

-)

+BASE_URL = "https://raw.githubusercontent.com/lowe-lab-ucl/btrack-examples/main/"

CACHE_PATH = pooch.os_cache("btrack-examples")

def _remote_registry() -> os.PathLike:

- file_path = pooch.retrieve(

- # URL to one of Pooch's test files

+ # URL to one of Pooch's test files

+ return pooch.retrieve(

path=CACHE_PATH,

- url=BASE_URL + "registry.txt",

- known_hash="673de62c62eeb6f356fb1bff968748566d23936f567201cf61493d031d42d480",

+ url=f"{BASE_URL}registry.txt",

+ known_hash="20d8c44289f421ab52d109e6af2c76610e740230479fe5c46a4e94463c9b5d50",

)

- return file_path

POOCH = pooch.create(

@@ -36,39 +32,42 @@ def _remote_registry() -> os.PathLike:

def cell_config() -> os.PathLike:

"""Return the file path to the example `cell_config`."""

- file_path = POOCH.fetch("examples/cell_config.json")

- return file_path

+ return POOCH.fetch("examples/cell_config.json")

def particle_config() -> os.PathLike:

"""Return the file path to the example `particle_config`."""

- file_path = POOCH.fetch("examples/particle_config.json")

- return file_path

+ return POOCH.fetch("examples/particle_config.json")

def example_segmentation_file() -> os.PathLike:

"""Return the file path to the example U-Net segmentation image file."""

- file_path = POOCH.fetch("examples/segmented.tif")

- return file_path

+ return POOCH.fetch("examples/segmented.tif")

-def example_segmentation() -> np.ndarray:

+def example_segmentation() -> npt.NDArray:

"""Return the U-Net segmentation as a numpy array of dimensions (T, Y, X)."""

file_path = example_segmentation_file()

- segmentation = imread(file_path)

- return segmentation

+ return imread(file_path)

def example_track_objects_file() -> os.PathLike:

"""Return the file path to the example localized and classified objects

stored in a CSV file."""

- file_path = POOCH.fetch("examples/objects.csv")

- return file_path

+ return POOCH.fetch("examples/objects.csv")

-def example_track_objects() -> List[PyTrackObject]:

+def example_track_objects() -> list[PyTrackObject]:

"""Return the example localized and classified objects stored in a CSV file

as a list `PyTrackObject`s to be used by the tracker."""

file_path = example_track_objects_file()

- objects = import_CSV(file_path)

- return objects

+ return import_CSV(file_path)

+

+

+def example_tracks() -> list[Tracklet]:

+ """Return the example example localized and classified objected stored in an

+ HDF5 file as a list of `Tracklet`s."""

+ file_path = POOCH.fetch("examples/tracks.h5")

+ with HDF5FileHandler(file_path, "r", obj_type="obj_type_1") as reader:

+ tracks = reader.tracks

+ return tracks

diff --git a/btrack/include/tracker.h b/btrack/include/tracker.h

index ff2eb23f..db314c3f 100644

--- a/btrack/include/tracker.h

+++ b/btrack/include/tracker.h

@@ -20,7 +20,7 @@

#include

#include

#include

-#include

+// #include

#include

#include

#include

@@ -264,7 +264,7 @@ class BayesianTracker : public UpdateFeatures {

PyTrackInfo statistics;

// member variable to store an output path for debugging

- std::filesystem::path m_debug_filepath;

+ // std::experimental::filesystem::path m_debug_filepath;

};

// utils to write out belief matrix to CSV files

diff --git a/btrack/io/_localization.py b/btrack/io/_localization.py

index cfeaaff8..cb2da3da 100644

--- a/btrack/io/_localization.py

+++ b/btrack/io/_localization.py

@@ -2,13 +2,18 @@

import dataclasses

import logging

+from collections.abc import Generator

from multiprocessing.pool import Pool

-from typing import Callable, Dict, Generator, List, Optional, Tuple, Union

+from typing import Callable, Optional, Union

import numpy as np

import numpy.typing as npt

from skimage.measure import label, regionprops, regionprops_table

-from tqdm import tqdm

+

+try:

+ from napari.utils import progress as tqdm

+except ImportError:

+ from tqdm import tqdm

from btrack import btypes

from btrack.constants import Dimensionality

@@ -25,13 +30,11 @@ def _is_unique(x: npt.NDArray) -> bool:

def _concat_nodes(

- nodes: Dict[str, npt.NDArray], new_nodes: Dict[str, npt.NDArray]

-) -> Dict[str, npt.NDArray]:

+ nodes: dict[str, npt.NDArray], new_nodes: dict[str, npt.NDArray]

+) -> dict[str, npt.NDArray]:

"""Concatentate centroid dictionaries."""

for key, values in new_nodes.items():

- nodes[key] = (

- np.concatenate([nodes[key], values]) if key in nodes else values

- )

+ nodes[key] = np.concatenate([nodes[key], values]) if key in nodes else values

return nodes

@@ -44,21 +47,17 @@ class SegmentationContainer:

def __post_init__(self) -> None:

self._is_generator = isinstance(self.segmentation, Generator)

- self._next = (

- self._next_generator if self._is_generator else self._next_array

- )

+ self._next = self._next_generator if self._is_generator else self._next_array

- def _next_generator(self) -> Tuple[npt.NDArray, Optional[npt.NDArray]]:

+ def _next_generator(self) -> tuple[npt.NDArray, Optional[npt.NDArray]]:

"""__next__ method for a generator input."""

seg = next(self.segmentation)

intens = (

- next(self.intensity_image)

- if self.intensity_image is not None

- else None

+ next(self.intensity_image) if self.intensity_image is not None else None

)

return seg, intens

- def _next_array(self) -> Tuple[npt.NDArray, Optional[npt.NDArray]]:

+ def _next_array(self) -> tuple[npt.NDArray, Optional[npt.NDArray]]:

"""__next__ method for an array-like input."""

if self._iter >= len(self):

raise StopIteration

@@ -74,7 +73,7 @@ def __iter__(self) -> SegmentationContainer:

self._iter = 0

return self

- def __next__(self) -> Tuple[int, npt.NDArray, Optional[npt.NDArray]]:

+ def __next__(self) -> tuple[int, npt.NDArray, Optional[npt.NDArray]]:

seg, intens = self._next()

data = (self._iter, seg, intens)

self._iter += 1

@@ -88,26 +87,23 @@ def __len__(self) -> int:

class NodeProcessor:

"""Processor to extract nodes from a segmentation image."""

- properties: Tuple[str]

+ properties: tuple[str, ...]

centroid_type: str = "centroid"

intensity_image: Optional[npt.NDArray] = None

- scale: Optional[Tuple[float]] = None

+ scale: Optional[tuple[float]] = None

assign_class_ID: bool = False # noqa: N815

- extra_properties: Optional[Tuple[Callable]] = None

+ extra_properties: Optional[tuple[Callable]] = None

@property

- def img_props(self) -> List[str]:

+ def img_props(self) -> tuple[str, ...]:

# need to infer the name of the function provided

- extra_img_props = tuple(

- [str(fn.__name__) for fn in self.extra_properties]

+ return self.properties + (

+ tuple(str(fn.__name__) for fn in self.extra_properties)

if self.extra_properties

- else []

+ else ()

)

- return self.properties + extra_img_props

- def __call__(

- self, data: Tuple[int, npt.NDAarray, Optional[npt.NDArray]]

- ) -> Dict[str, npt.NDArray]:

+ def __call__(self, data: tuple[int, npt.NDArray, Optional[npt.NDArray]]) -> dict:

"""Return the object centroids from a numpy array representing the

image data."""

@@ -119,33 +115,20 @@ def __call__(

if segmentation.ndim not in (Dimensionality.TWO, Dimensionality.THREE):

raise ValueError("Segmentation array must have 3 or 4 dims.")

- labeled = (

- segmentation if _is_unique(segmentation) else label(segmentation)

- )

+ labeled = segmentation if _is_unique(segmentation) else label(segmentation)

props = regionprops(

labeled,

intensity_image=intensity_image,

extra_properties=self.extra_properties,

)

num_nodes = len(props)

- scale = (

- tuple([1.0] * segmentation.ndim)

- if self.scale is None

- else self.scale

- )

+ scale = tuple([1.0] * segmentation.ndim) if self.scale is None else self.scale

if len(scale) != segmentation.ndim:

- raise ValueError(

- f"Scale dimensions do not match segmentation: {scale}."

- )

+ raise ValueError(f"Scale dimensions do not match segmentation: {scale}.")

centroids = list(

- zip(

- *[

- getattr(props[idx], self.centroid_type)

- for idx in range(num_nodes)

- ]

- )

+ zip(*[getattr(props[idx], self.centroid_type) for idx in range(num_nodes)])

)[::-1]

centroid_dims = ["x", "y", "z"][: segmentation.ndim]

@@ -154,9 +137,7 @@ def __call__(

for dim in range(len(centroids))

}

- nodes = {"t": [frame] * num_nodes}

- nodes.update(coords)

-

+ nodes = {"t": [frame] * num_nodes} | coords

for img_prop in self.img_props:

nodes[img_prop] = [

getattr(props[idx], img_prop) for idx in range(num_nodes)

@@ -173,25 +154,25 @@ def __call__(

return nodes

-def segmentation_to_objects(

- segmentation: Union[np.ndarray, Generator],

+def segmentation_to_objects( # noqa: PLR0913

+ segmentation: Union[npt.NDArray, Generator],

*,

- intensity_image: Optional[Union[np.ndarray, Generator]] = None,

- properties: Optional[Tuple[str]] = (),

- extra_properties: Optional[Tuple[Callable]] = None,

- scale: Optional[Tuple[float]] = None,

+ intensity_image: Optional[Union[npt.NDArray, Generator]] = None,

+ properties: tuple[str, ...] = (),

+ extra_properties: Optional[tuple[Callable]] = None,

+ scale: Optional[tuple[float]] = None,

use_weighted_centroid: bool = True,

assign_class_ID: bool = False,

num_workers: int = 1,

-) -> List[btypes.PyTrackObject]:

+) -> list[btypes.PyTrackObject]:

"""Convert segmentation to a set of trackable objects.

Parameters

----------

- segmentation : np.ndarray, dask.array.core.Array or Generator

+ segmentation : npt.NDArray, dask.array.core.Array or Generator

Segmentation can be provided in several different formats. Arrays should

be ordered as T(Z)YX.

- intensity_image : np.ndarray, dask.array.core.Array or Generator, optional

+ intensity_image : npt.NDArray, dask.array.core.Array or Generator, optional

Intensity image with same size as segmentation, to be used to calculate

additional properties. See `skimage.measure.regionprops` for more info.

properties : tuple of str, optional

@@ -274,11 +255,11 @@ def segmentation_to_objects(

# we need to remove 'label' since this is a protected keyword for btrack

# objects

- if isinstance(properties, tuple) and "label" in properties:

+ if "label" in properties:

logger.warning("Cannot use `scikit-image` `label` as a property.")

- properties = set(properties)

- properties.remove("label")

- properties = tuple(properties)

+ properties_set = set(properties)

+ properties_set.remove("label")

+ properties = tuple(properties_set)

processor = NodeProcessor(

properties=properties,

@@ -297,14 +278,18 @@ def segmentation_to_objects(

num_workers = 1

if num_workers <= 1:

- for data in tqdm(container, total=len(container)):

+ for data in tqdm(container, total=len(container), position=0):

_nodes = processor(data)

nodes = _concat_nodes(nodes, _nodes)

else:

logger.info(f"Processing using {num_workers} workers.")

with Pool(processes=num_workers) as pool:

result = list(

- tqdm(pool.imap(processor, container), total=len(container))

+ tqdm(

+ pool.imap(processor, container),

+ total=len(container),

+ position=0,

+ )

)

for _nodes in result:

diff --git a/btrack/io/exporters.py b/btrack/io/exporters.py

index 5dbfa16c..806de6eb 100644

--- a/btrack/io/exporters.py

+++ b/btrack/io/exporters.py

@@ -3,6 +3,7 @@

import csv

import logging

import os

+from pathlib import Path

from typing import TYPE_CHECKING, Optional

import numpy as np

@@ -112,13 +113,16 @@ def export_LBEP(filename: os.PathLike, tracks: list):

def _export_HDF(

- filename: os.PathLike, tracker, obj_type=None, filter_by: str = None

+ filename: os.PathLike,

+ tracker,

+ obj_type: Optional[str] = None,

+ filter_by: Optional[str] = None,

):

"""Export to HDF."""

filename_noext, ext = os.path.splitext(filename)

if ext != ".h5":

- filename = filename_noext + ".h5"

+ filename = Path(f"{filename_noext}.h5")

logger.warning(f"Changing HDF filename to {filename}")

with HDF5FileHandler(filename, read_write="a", obj_type=obj_type) as hdf:

diff --git a/btrack/io/hdf.py b/btrack/io/hdf.py

index 52697cbf..20cdd6ec 100644

--- a/btrack/io/hdf.py

+++ b/btrack/io/hdf.py

@@ -4,11 +4,13 @@

import logging

import os

import re

+from ast import literal_eval

from functools import wraps

-from typing import TYPE_CHECKING, Any, Dict, List, Optional, Union

+from typing import TYPE_CHECKING, Any, Optional, Union

import h5py

import numpy as np

+from numpy import typing as npt

# import core

from btrack import _version, btypes, constants, utils

@@ -36,9 +38,7 @@ def wrapped_handler_property(*args, **kwargs):

self = args[0]

assert isinstance(self, HDF5FileHandler)

if property not in self._hdf:

- logger.error(

- f"{property.capitalize()} not found in {self.filename}"

- )

+ logger.error(f"{property.capitalize()} not found in {self.filename}")

return None

return fn(*args, **kwargs)

@@ -63,15 +63,15 @@ class HDF5FileHandler:

Attributes

----------

- segmentation : np.ndarray

+ segmentation : npt.NDArray

A numpy array representing the segmentation data. TZYX

objects : list [PyTrackObject]

A list of PyTrackObjects localised from the segmentation data.

- filtered_objects : np.ndarray

+ filtered_objects : npt.NDArray

Similar to objects, but filtered by property.

tracks : list [Tracklet]

A list of Tracklet objects.

- lbep : np.ndarray

+ lbep : npt.NDArray

The LBEP table representing the track graph.

Notes

@@ -140,7 +140,7 @@ def __init__(

self._states = list(constants.States)

@property

- def object_types(self) -> List[str]:

+ def object_types(self) -> list[str]:

return list(self._hdf["objects"].keys())

def __enter__(self):

@@ -167,17 +167,17 @@ def object_type(self, obj_type: str) -> None:

@property # type: ignore

@h5check_property_exists("segmentation")

- def segmentation(self) -> np.ndarray:

+ def segmentation(self) -> npt.NDArray:

segmentation = self._hdf["segmentation"]["images"][:].astype(np.uint16)

logger.info(f"Loading segmentation {segmentation.shape}")

return segmentation

- def write_segmentation(self, segmentation: np.ndarray) -> None:

+ def write_segmentation(self, segmentation: npt.NDArray) -> None:

"""Write out the segmentation to an HDF file.

Parameters

----------

- segmentation : np.ndarray

+ segmentation : npt.NDArray

A numpy array representing the segmentation data. T(Z)YX, uint16

"""

# write the segmentation out

@@ -191,7 +191,7 @@ def write_segmentation(self, segmentation: np.ndarray) -> None:

)

@property

- def objects(self) -> List[btypes.PyTrackObject]:

+ def objects(self) -> list[btypes.PyTrackObject]:

"""Return the objects in the file."""

return self.filtered_objects()

@@ -201,8 +201,8 @@ def filtered_objects(

f_expr: Optional[str] = None,

*,

lazy_load_properties: bool = True,

- exclude_properties: Optional[List[str]] = None,

- ) -> List[btypes.PyTrackObject]:

+ exclude_properties: Optional[list[str]] = None,

+ ) -> list[btypes.PyTrackObject]:

"""A filtered list of objects based on metadata.

Parameters

@@ -244,9 +244,7 @@ def filtered_objects(

properties = {}

if "properties" in grp:

p_keys = list(

- set(grp["properties"].keys()).difference(

- set(exclude_properties)

- )

+ set(grp["properties"].keys()).difference(set(exclude_properties))

)

properties = {k: grp["properties"][k][:] for k in p_keys}

assert all(len(p) == len(txyz) for p in properties.values())

@@ -263,14 +261,28 @@ def filtered_objects(

f_eval = f"x{m['op']}{m['cmp']}" # e.g. x > 10

+ data = None

+

if m["name"] in properties:

data = properties[m["name"]]

- filtered_idx = [i for i, x in enumerate(data) if eval(f_eval)]

+ elif m["name"] in grp:

+ logger.warning(

+ f"While trying to filter objects by `{f_expr}` encountered "

+ "a legacy HDF file."

+ )

+ logger.warning(

+ "Properties do not persist to objects. Use `hdf.tree()` to "

+ "inspect the file structure."

+ )

+ data = grp[m["name"]]

else:

raise ValueError(f"Cannot filter objects by {f_expr}")

+ filtered_idx = [i for i, x in enumerate(data) if literal_eval(f_eval)]

+

else:

- filtered_idx = range(txyz.shape[0]) # default filtering uses all

+ # default filtering uses all

+ filtered_idx = list(range(txyz.shape[0]))

# sanity check that coordinates matches labels

assert txyz.shape[0] == labels.shape[0]

@@ -293,12 +305,12 @@ def filtered_objects(

# add the filtered properties

for key, props in properties.items():

- objects_dict.update({key: props[filtered_idx]})

+ objects_dict[key] = props[filtered_idx]

return objects_from_dict(objects_dict)

def write_objects(

- self, data: Union[List[btypes.PyTrackObject], BayesianTracker]

+ self, data: Union[list[btypes.PyTrackObject], BayesianTracker]

) -> None:

"""Write objects to HDF file.

@@ -324,7 +336,7 @@ def write_objects(

if "objects" not in self._hdf:

self._hdf.create_group("objects")

grp = self._hdf["objects"].create_group(self.object_type)

- props = {k: [] for k in objects[0].properties}

+ props: dict = {k: [] for k in objects[0].properties}

n_objects = len(objects)

n_frames = np.max([o.t for o in objects]) + 1

@@ -358,7 +370,7 @@ def write_objects(

@h5check_property_exists("objects")

def write_properties(

- self, data: Dict[str, Any], *, allow_overwrite: bool = False

+ self, data: dict[str, Any], *, allow_overwrite: bool = False

) -> None:

"""Write object properties to HDF file.

@@ -378,7 +390,7 @@ def write_properties(

grp = self._hdf[f"objects/{self.object_type}"]

- if "properties" not in grp.keys():

+ if "properties" not in grp:

props_grp = grp.create_group("properties")

else:

props_grp = self._hdf[f"objects/{self.object_type}/properties"]

@@ -395,31 +407,23 @@ def write_properties(

# Check if the property is already in the props_grp:

if key in props_grp:

- if allow_overwrite is False:

- logger.info(

- f"Property '{key}' already written in the file"

- )

+ if allow_overwrite:

+ del self._hdf[f"objects/{self.object_type}/properties"][key]

+ logger.info(f"Property '{key}' erased to be overwritten...")

+

+ else:

+ logger.info(f"Property '{key}' already written in the file")

raise KeyError(

f"Property '{key}' already in file -> switch on "

"'overwrite' param to replace existing property "

)

- else:

- del self._hdf[f"objects/{self.object_type}/properties"][

- key

- ]

- logger.info(

- f"Property '{key}' erased to be overwritten..."

- )

-

# Now that you handled overwriting, write the values:

- logger.info(

- f"Writing properties/{self.object_type}/{key} {values.shape}"

- )

+ logger.info(f"Writing properties/{self.object_type}/{key} {values.shape}")

props_grp.create_dataset(key, data=data[key], dtype="float32")

@property # type: ignore

@h5check_property_exists("tracks")

- def tracks(self) -> List[btypes.Tracklet]:

+ def tracks(self) -> list[btypes.Tracklet]:

"""Return the tracks in the file."""

logger.info(f"Loading tracks/{self.object_type}")

@@ -449,10 +453,9 @@ def tracks(self) -> List[btypes.Tracklet]:

obj = self.filtered_objects(f_expr=f_expr)

- def _get_txyz(_ref: int) -> int:

- if _ref >= 0:

- return obj[_ref]

- return dummy_obj[abs(_ref) - 1] # references are -ve for dummies

+ def _get_txyz(_ref: int) -> btypes.PyTrackObject:

+ # references are -ve for dummies

+ return obj[_ref] if _ref >= 0 else dummy_obj[abs(_ref) - 1]

tracks = []

for i in range(track_map.shape[0]):

@@ -467,7 +470,7 @@ def _get_txyz(_ref: int) -> int:

tracks.append(track)

# once we have all of the tracks, populate the children

- to_update = {}

+ to_update: dict = {}

for track in tracks:

if not track.is_root:

parents = filter(lambda t: track.parent == t.ID, tracks)

@@ -478,9 +481,7 @@ def _get_txyz(_ref: int) -> int:

# sanity check, can be removed at a later date

MAX_N_CHILDREN = 2

- assert all(

- len(children) <= MAX_N_CHILDREN for children in to_update.values()

- )

+ assert all(len(children) <= MAX_N_CHILDREN for children in to_update.values())

# add the children to the parent

for track, children in to_update.items():

@@ -490,7 +491,7 @@ def _get_txyz(_ref: int) -> int:

def write_tracks( # noqa: PLR0912

self,

- data: Union[List[btypes.Tracklet], BayesianTracker],

+ data: Union[list[btypes.Tracklet], BayesianTracker],

*,

f_expr: Optional[str] = None,

) -> None:

@@ -509,8 +510,8 @@ def write_tracks( # noqa: PLR0912

if not check_track_type(data):

raise ValueError(f"Data of type {type(data)} not supported.")

- all_objects = itertools.chain.from_iterable(

- [trk._data for trk in data]

+ all_objects = list(

+ itertools.chain.from_iterable([trk._data for trk in data])

)

objects = [obj for obj in all_objects if not obj.dummy]

@@ -590,7 +591,39 @@ def write_tracks( # noqa: PLR0912

@property # type: ignore

@h5check_property_exists("tracks")

- def lbep(self) -> np.ndarray:

+ def lbep(self) -> npt.NDArray:

"""Return the LBEP data."""

logger.info(f"Loading LBEP/{self.object_type}")

return self._hdf["tracks"][self.object_type]["LBEPR"][:]

+

+ def tree(self) -> None:

+ """Recursively iterate over the H5 file to reveal the tree structure and number

+ of elements within."""

+ _h5_tree(self._hdf)

+

+

+def _h5_tree(hdf, *, prefix: str = "") -> None:

+ """Recursively iterate over an H5 file to reveal the tree structure and number

+ of elements within. Writes the output to the default logger.

+

+ Parameters

+ ----------

+ hdf : hdf object

+ The hdf object to iterate over

+ prefix : str

+ A prepended string for layout

+ """

+ n_items = len(hdf)

+ for idx, (key, val) in enumerate(hdf.items()):

+ if idx == (n_items - 1):

+ # the last item

+ if isinstance(val, h5py._hl.group.Group):

+ logger.info(f"{prefix}└── {key}")

+ _h5_tree(val, prefix=f"{prefix} ")

+ else:

+ logger.info(f"{prefix}└── {key} ({len(val)})")

+ elif isinstance(val, h5py._hl.group.Group):

+ logger.info(f"{prefix}├── {key}")

+ _h5_tree(val, prefix=f"{prefix}│ ")

+ else:

+ logger.info(f"{prefix}├── {key} ({len(val)})")

diff --git a/btrack/io/importers.py b/btrack/io/importers.py

index a6b349f8..d0b34029 100644

--- a/btrack/io/importers.py

+++ b/btrack/io/importers.py

@@ -2,12 +2,11 @@

import csv

import os

-from typing import List

from btrack import btypes

-def import_CSV(filename: os.PathLike) -> List[btypes.PyTrackObject]:

+def import_CSV(filename: os.PathLike) -> list[btypes.PyTrackObject]:

"""Import localizations from a CSV file.

Parameters

@@ -17,7 +16,7 @@ def import_CSV(filename: os.PathLike) -> List[btypes.PyTrackObject]:

Returns

-------

- objects : List[btypes.PyTrackObject]

+ objects : list[btypes.PyTrackObject]

A list of objects in the CSV file.

Notes

@@ -45,7 +44,7 @@ def import_CSV(filename: os.PathLike) -> List[btypes.PyTrackObject]:

csvreader = csv.DictReader(csv_file, delimiter=",", quotechar="|")

for i, row in enumerate(csvreader):

data = {k: float(v) for k, v in row.items()}

- data.update({"ID": i})

+ data["ID"] = i

obj = btypes.PyTrackObject.from_dict(data)

objects.append(obj)

return objects

diff --git a/btrack/io/utils.py b/btrack/io/utils.py

index 60aaba2c..948f64df 100644

--- a/btrack/io/utils.py

+++ b/btrack/io/utils.py

@@ -1,9 +1,10 @@

from __future__ import annotations

import logging

-from typing import Any, Dict, List, Union

+from typing import Any, Union

import numpy as np

+from numpy import typing as npt

# import core

from btrack import btypes, constants

@@ -13,15 +14,13 @@

def localizations_to_objects(

- localizations: Union[

- np.ndarray, List[btypes.PyTrackObject], Dict[str, Any]

- ]

-) -> List[btypes.PyTrackObject]:

+ localizations: Union[npt.NDArray, list[btypes.PyTrackObject], dict[str, Any]]

+) -> list[btypes.PyTrackObject]:

"""Take a numpy array or pandas dataframe and convert to PyTrackObjects.

Parameters

----------

- localizations : list[PyTrackObject], np.ndarray, pandas.DataFrame

+ localizations : list[PyTrackObject], npt.NDArray, pandas.DataFrame

A list or array of localizations.

Returns

@@ -39,16 +38,11 @@ def localizations_to_objects(

# do we have a numpy array or pandas dataframe?

if isinstance(localizations, np.ndarray):

return objects_from_array(localizations)

- else:

- try:

- objects_dict = {

- c: np.asarray(localizations[c]) for c in localizations

- }

- except ValueError as err:

- logger.error(f"Unknown localization type: {type(localizations)}")

- raise TypeError(

- f"Unknown localization type: {type(localizations)}"

- ) from err

+ try:

+ objects_dict = {c: np.asarray(localizations[c]) for c in localizations}

+ except ValueError as err:

+ logger.error(f"Unknown localization type: {type(localizations)}")

+ raise TypeError(f"Unknown localization type: {type(localizations)}") from err

# how many objects are there

n_objects = objects_dict["t"].shape[0]

@@ -57,7 +51,7 @@ def localizations_to_objects(

return objects_from_dict(objects_dict)

-def objects_from_dict(objects_dict: dict) -> List[btypes.PyTrackObject]:

+def objects_from_dict(objects_dict: dict) -> list[btypes.PyTrackObject]:

"""Construct PyTrackObjects from a dictionary"""

# now that we have the object dictionary, convert this to objects

objects = []

@@ -73,10 +67,10 @@ def objects_from_dict(objects_dict: dict) -> List[btypes.PyTrackObject]:

def objects_from_array(

- objects_arr: np.ndarray,

+ objects_arr: npt.NDArray,

*,

- default_keys: List[str] = constants.DEFAULT_OBJECT_KEYS,

-) -> List[btypes.PyTrackObject]:

+ default_keys: list[str] = constants.DEFAULT_OBJECT_KEYS,

+) -> list[btypes.PyTrackObject]:

"""Construct PyTrackObjects from a numpy array."""

assert objects_arr.ndim == constants.Dimensionality.TWO

diff --git a/btrack/libwrapper.py b/btrack/libwrapper.py

index 8b3911c7..e1c84f07 100644

--- a/btrack/libwrapper.py

+++ b/btrack/libwrapper.py

@@ -23,25 +23,19 @@ def numpy_pointer_decorator(func):

@numpy_pointer_decorator

def np_dbl_p():

"""Temporary function. Will remove in final release"""

- return np.ctypeslib.ndpointer(

- dtype=np.double, ndim=2, flags="C_CONTIGUOUS"

- )

+ return np.ctypeslib.ndpointer(dtype=np.double, ndim=2, flags="C_CONTIGUOUS")

@numpy_pointer_decorator

def np_dbl_pc():

"""Temporary function. Will remove in final release"""

- return np.ctypeslib.ndpointer(

- dtype=np.double, ndim=2, flags="F_CONTIGUOUS"

- )

+ return np.ctypeslib.ndpointer(dtype=np.double, ndim=2, flags="F_CONTIGUOUS")

@numpy_pointer_decorator

def np_uint_p():

"""Temporary function. Will remove in final release"""

- return np.ctypeslib.ndpointer(

- dtype=np.uint32, ndim=2, flags="C_CONTIGUOUS"

- )

+ return np.ctypeslib.ndpointer(dtype=np.uint32, ndim=2, flags="C_CONTIGUOUS")

@numpy_pointer_decorator

@@ -53,9 +47,7 @@ def np_int_p():

@numpy_pointer_decorator

def np_int_vec_p():

"""Temporary function. Will remove in final release"""

- return np.ctypeslib.ndpointer(

- dtype=np.int32, ndim=1

- ) # , flags='C_CONTIGUOUS')

+ return np.ctypeslib.ndpointer(dtype=np.int32, ndim=1) # , flags='C_CONTIGUOUS')

@log_debug_info

diff --git a/btrack/models.py b/btrack/models.py

index f780f2af..ad70bf51 100644

--- a/btrack/models.py

+++ b/btrack/models.py

@@ -1,6 +1,7 @@

-from typing import List, Optional

+from typing import Optional

import numpy as np

+from numpy import typing as npt

from pydantic import BaseModel, root_validator, validator

from . import constants

@@ -9,9 +10,7 @@

__all__ = ["MotionModel", "ObjectModel", "HypothesisModel"]

-def _check_symmetric(

- x: np.ndarray, rtol: float = 1e-5, atol: float = 1e-8

-) -> bool:

+def _check_symmetric(x: npt.NDArray, rtol: float = 1e-5, atol: float = 1e-8) -> bool:

"""Check that a matrix is symmetric by comparing with it's own transpose."""

return np.allclose(x, x.T, rtol=rtol, atol=atol)

@@ -80,12 +79,12 @@ class MotionModel(BaseModel):

measurements: int

states: int

- A: np.ndarray

- H: np.ndarray

- P: np.ndarray

- R: np.ndarray

- G: Optional[np.ndarray] = None

- Q: Optional[np.ndarray] = None

+ A: npt.NDArray

+ H: npt.NDArray

+ P: npt.NDArray

+ R: npt.NDArray

+ G: Optional[npt.NDArray] = None

+ Q: Optional[npt.NDArray] = None

dt: float = 1.0

accuracy: float = 2.0

max_lost: int = constants.MAX_LOST

@@ -175,9 +174,9 @@ class ObjectModel(BaseModel):

"""

states: int

- emission: np.ndarray

- transition: np.ndarray

- start: np.ndarray

+ emission: npt.NDArray

+ transition: npt.NDArray

+ start: npt.NDArray

name: str = "Default"

@validator("emission", "transition", "start", pre=True)

@@ -259,7 +258,7 @@ class HypothesisModel(BaseModel):

.. math:: e^{(-d / \lambda)}

"""

- hypotheses: List[str]

+ hypotheses: list[str]

lambda_time: float

lambda_dist: float

lambda_link: float

@@ -277,15 +276,13 @@ class HypothesisModel(BaseModel):

@validator("hypotheses", pre=True)

def parse_hypotheses(cls, hypotheses):

- if not all(h in H_TYPES for h in hypotheses):

+ if any(h not in H_TYPES for h in hypotheses):

raise ValueError("Unknown hypothesis type in `hypotheses`.")

return hypotheses

def hypotheses_to_generate(self) -> int:

"""Return an integer representation of the hypotheses to generate."""

- h_bin = "".join(

- [str(int(h)) for h in [h in self.hypotheses for h in H_TYPES]]

- )

+ h_bin = "".join([str(int(h)) for h in [h in self.hypotheses for h in H_TYPES]])

return int(h_bin[::-1], 2)

def as_ctype(self) -> PyHypothesisParams:

diff --git a/btrack/napari.yaml b/btrack/napari.yaml

index 990d8be5..a74342e3 100644

--- a/btrack/napari.yaml

+++ b/btrack/napari.yaml

@@ -7,6 +7,9 @@ contributions:

- id: btrack.read_btrack

title: Read btrack files

python_name: btrack.napari.reader:get_reader

+ - id: btrack.write_hdf

+ title: Export Tracks to HDF

+ python_name: btrack.napari.writer:export_to_hdf

- id: btrack.track

title: Create Track

python_name: btrack.napari.main:create_btrack_widget

@@ -19,6 +22,11 @@ contributions:

- '*.hdf5'

accepts_directories: false

+ writers:

+ - command: btrack.write_hdf

+ layer_types: ["tracks"]

+ filename_extensions: [".h5", ".hdf", ".hdf5"]

+

widgets:

- command: btrack.track

display_name: Track

diff --git a/btrack/napari/__init__.py b/btrack/napari/__init__.py

index 92442fee..92ee399d 100644

--- a/btrack/napari/__init__.py

+++ b/btrack/napari/__init__.py

@@ -1,10 +1,3 @@

-try:

- from ._version import version as __version__

-except ImportError:

- __version__ = "unknown"

-

-import logging

-

from btrack.napari import constants, main

__all__ = [

diff --git a/btrack/napari/assets/btrack_logo.png b/btrack/napari/assets/btrack_logo.png

new file mode 100644

index 00000000..219dc507

Binary files /dev/null and b/btrack/napari/assets/btrack_logo.png differ

diff --git a/btrack/napari/config.py b/btrack/napari/config.py

index 7012edf8..fa5536e6 100644

--- a/btrack/napari/config.py

+++ b/btrack/napari/config.py

@@ -12,7 +12,7 @@

import numpy as np

import btrack

-from btrack import datasets

+import btrack.datasets

__all__ = [

"create_default_configs",

@@ -38,7 +38,7 @@ def __getitem__(self, matrix_name):

return self.__dict__[matrix_name]

def __setitem__(self, matrix_name, sigma):

- if matrix_name not in self.__dict__.keys():

+ if matrix_name not in self.__dict__:

_msg = f"Unknown matrix name '{matrix_name}'"

raise ValueError(_msg)

self.__dict__[matrix_name] = sigma

@@ -74,11 +74,10 @@ def __post_init__(self):

config = btrack.config.load_config(self.filename)

self.tracker_config, self.sigmas = self._unscale_config(config)

- def _unscale_config(

- self, config: TrackerConfig

- ) -> tuple[TrackerConfig, Sigmas]:

+ def _unscale_config(self, config: TrackerConfig) -> tuple[TrackerConfig, Sigmas]:

"""Convert the matrices of a scaled TrackerConfig MotionModel to unscaled."""

+ assert config.motion_model is not None

P_sigma = np.max(config.motion_model.P)

config.motion_model.P /= P_sigma

@@ -90,6 +89,7 @@ def _unscale_config(

# Instead, use G if it exists. If not, determine G from Q, which we can

# do because Q = G.T @ G

if config.motion_model.G is None:

+ assert config.motion_model.Q is not None

config.motion_model.G = config.motion_model.Q.diagonal() ** 0.5

G_sigma = np.max(config.motion_model.G)

config.motion_model.G /= G_sigma

@@ -107,8 +107,10 @@ def scale_config(self) -> TrackerConfig:

# Create a copy so that config values stay in sync with widget values

scaled_config = copy.deepcopy(self.tracker_config)

+ assert scaled_config.motion_model is not None

scaled_config.motion_model.P *= self.sigmas.P

scaled_config.motion_model.R *= self.sigmas.R

+ assert scaled_config.motion_model.G is not None

scaled_config.motion_model.G *= self.sigmas.G

scaled_config.motion_model.Q = (

scaled_config.motion_model.G.T @ scaled_config.motion_model.G

@@ -138,12 +140,12 @@ def __post_init__(self):

"""Add the default cell and particle configs."""

self.add_config(

- filename=datasets.cell_config(),

+ filename=btrack.datasets.cell_config(),

name="cell",

overwrite=False,

)

self.add_config(

- filename=datasets.particle_config(),

+ filename=btrack.datasets.particle_config(),

name="particle",

overwrite=False,

)

diff --git a/btrack/napari/constants.py b/btrack/napari/constants.py

index 668afed4..45a1b0db 100644

--- a/btrack/napari/constants.py

+++ b/btrack/napari/constants.py

@@ -3,16 +3,6 @@

napari plugin of the btrack package.

"""

-HYPOTHESES = [

- "P_FP",

- "P_init",

- "P_term",

- "P_link",

- "P_branch",

- "P_dead",

- "P_merge",

-]

-

HYPOTHESIS_SCALING_FACTORS = [

"lambda_time",

"lambda_dist",

@@ -26,5 +16,4 @@

"dist_thresh",

"time_thresh",

"apop_thresh",

- "relax",

]

diff --git a/btrack/napari/examples/show_btrack_widget.py b/btrack/napari/examples/show_btrack_widget.py

index 7c9c64de..5b32b9a7 100644

--- a/btrack/napari/examples/show_btrack_widget.py

+++ b/btrack/napari/examples/show_btrack_widget.py

@@ -15,15 +15,14 @@

napari.current_viewer()

_, btrack_widget = viewer.window.add_plugin_dock_widget(

- plugin_name="napari-btrack", widget_name="Track"

+ plugin_name="btrack", widget_name="Track"

)

segmentation = datasets.example_segmentation()

viewer.add_labels(segmentation)

# napari takes the first image layer as default anyway here, but better to be explicit

-btrack_widget.segmentation.choices = viewer.layers

-btrack_widget.segmentation.value = viewer.layers["segmentation"]

+btrack_widget.segmentation.setCurrentText(viewer.layers["segmentation"].name)

if __name__ == "__main__":

# The napari event loop needs to be run under here to allow the window

diff --git a/btrack/napari/main.py b/btrack/napari/main.py

index 3d34e412..6d6a5b3b 100644

--- a/btrack/napari/main.py

+++ b/btrack/napari/main.py

@@ -4,17 +4,14 @@

if TYPE_CHECKING:

import numpy.typing as npt

-

- from magicgui.widgets import Container

+ from qtpy import QtWidgets

from btrack.config import TrackerConfig

from btrack.napari.config import TrackerConfigs

import logging

+from pathlib import Path

-import qtpy.QtWidgets

-

-import magicgui.widgets

import napari

import btrack

@@ -44,90 +41,211 @@

logger.setLevel(logging.DEBUG)

-def create_btrack_widget() -> Container:

+def create_btrack_widget() -> btrack.napari.widgets.BtrackWidget:

"""Create widgets for the btrack plugin."""

# First create our UI along with some default configs for the widgets

all_configs = btrack.napari.config.create_default_configs()

- widgets = btrack.napari.widgets.create_widgets()

- btrack_widget = magicgui.widgets.Container(

- widgets=widgets, scrollable=True

+ btrack_widget = btrack.napari.widgets.BtrackWidget(

+ napari_viewer=napari.current_viewer(),

)

- btrack_widget.viewer = napari.current_viewer()

# Set the cell_config defaults in the gui

btrack.napari.sync.update_widgets_from_config(

unscaled_config=all_configs["cell"],

- container=btrack_widget,

+ btrack_widget=btrack_widget,

+ )

+

+ # Add any existing Labels layers to the segmentation selector

+ add_existing_labels(

+ viewer=btrack_widget.viewer,

+ combobox=btrack_widget.segmentation,

)

# Now set the callbacks

- btrack_widget.config.changed.connect(

+ btrack_widget.viewer.layers.events.inserted.connect(

+ lambda event: select_inserted_labels(

+ new_layer=event.value,

+ combobox=btrack_widget.segmentation,

+ ),

+ )

+

+ btrack_widget.viewer.layers.events.removed.connect(

+ lambda event: remove_deleted_labels(

+ deleted_layer=event.value,

+ combobox=btrack_widget.segmentation,

+ ),

+ )

+

+ btrack_widget.config_name.currentTextChanged.connect(

lambda selected: select_config(btrack_widget, all_configs, selected),

)

- btrack_widget.call_button.changed.connect(

+ # Disable the Optimiser tab if unchecked

+ for tab in range(btrack_widget._tabs.count()):

+ if btrack_widget._tabs.tabText(tab) == "Optimiser":

+ break

+ btrack_widget.enable_optimisation.toggled.connect(

+ lambda is_checked: btrack_widget._tabs.setTabEnabled(tab, is_checked)

+ )

+

+ btrack_widget.track_button.clicked.connect(

lambda: run(btrack_widget, all_configs),

)

- btrack_widget.reset_button.changed.connect(

+ btrack_widget.reset_button.clicked.connect(

lambda: restore_defaults(btrack_widget, all_configs),

)

- btrack_widget.save_config_button.changed.connect(

+ btrack_widget.save_config_button.clicked.connect(

lambda: save_config_to_json(btrack_widget, all_configs)

)

- btrack_widget.load_config_button.changed.connect(

+ btrack_widget.load_config_button.clicked.connect(

lambda: load_config_from_json(btrack_widget, all_configs)

)

- # there are lots of widgets so make the container scrollable

- scroll = qtpy.QtWidgets.QScrollArea()

- scroll.setWidget(btrack_widget._widget._qwidget)

- btrack_widget._widget._qwidget = scroll

-

return btrack_widget

+def add_existing_labels(

+ viewer: napari.Viewer,

+ combobox: QtWidgets.QComboBox,

+):

+ """Add all existing Labels layers in the viewer to a combobox"""

+

+ labels_layers = [

+ layer.name for layer in viewer.layers if isinstance(layer, napari.layers.Labels)

+ ]

+ combobox.addItems(labels_layers)

+

+

+def select_inserted_labels(

+ new_layer: napari.layers.Layer,

+ combobox: QtWidgets.QComboBox,

+):

+ """Update the selected Labels when a labels layer is added"""

+

+ if not isinstance(new_layer, napari.layers.Labels):

+ message = (

+ f"Not selecting new layer {new_layer.name} as input for the "

+ f"segmentation widget as {new_layer.name} is {type(new_layer)} "

+ "layer not an Labels layer."

+ )

+ logger.debug(message)

+ return

+

+ combobox.addItem(new_layer.name)

+ combobox.setCurrentText(new_layer.name)

+

+ # Update layer name when it changes

+ viewer = napari.current_viewer()

+ new_layer.events.name.connect(

+ lambda event: update_labels_name(

+ layer=event.source,

+ labels_layers=[

+ layer

+ for layer in viewer.layers

+ if isinstance(layer, napari.layers.Labels)

+ ],

+ combobox=combobox,

+ ),

+ )

+

+

+def update_labels_name(

+ layer: napari.layers.Layer,

+ labels_layers: list[napari.layer.Layer],

+ combobox: QtWidgets.QComboBox,

+):

+ """Update the name of an Labels layer"""

+

+ if not isinstance(layer, napari.layers.Labels):

+ message = (

+ f"Not updating name of layer {layer.name} as input for the "

+ f"segmentation widget as {layer.name} is {type(layer)} "

+ "layer not a Labels layer."

+ )

+ logger.debug(message)

+ return

+

+ layer_index = [layer.name for layer in labels_layers].index(layer.name)

+ combobox.setItemText(layer_index, layer.name)

+

+

+def remove_deleted_labels(

+ deleted_layer: napari.layers.Layer,

+ combobox: QtWidgets.QComboBox,

+):

+ """Remove the deleted Labels layer name from the combobox"""

+

+ if not isinstance(deleted_layer, napari.layers.Labels):

+ message = (

+ f"Not deleting layer {deleted_layer.name} from the segmentation "

+ f"widget as {deleted_layer.name} is {type(deleted_layer)} "

+ "layer not an Labels layer."

+ )

+ logger.debug(message)

+ return

+

+ layer_index = combobox.findText(deleted_layer.name)

+ combobox.removeItem(layer_index)

+

+

def select_config(

- btrack_widget: Container,

+ btrack_widget: btrack.napari.widgets.BtrackWidget,