diff --git a/configs/albu_example/README.md b/configs/albu_example/README.md

index 7f0eff52b3c..49edbf3f833 100644

--- a/configs/albu_example/README.md

+++ b/configs/albu_example/README.md

@@ -1,24 +1,26 @@

# Albu Example

-## Abstract

+> [Albumentations: fast and flexible image augmentations](https://arxiv.org/abs/1809.06839)

-

+

+

+## Abstract

Data augmentation is a commonly used technique for increasing both the size and the diversity of labeled training sets by leveraging input transformations that preserve output labels. In computer vision domain, image augmentations have become a common implicit regularization technique to combat overfitting in deep convolutional neural networks and are ubiquitously used to improve performance. While most deep learning frameworks implement basic image transformations, the list is typically limited to some variations and combinations of flipping, rotating, scaling, and cropping. Moreover, the image processing speed varies in existing tools for image augmentation. We present Albumentations, a fast and flexible library for image augmentations with many various image transform operations available, that is also an easy-to-use wrapper around other augmentation libraries. We provide examples of image augmentations for different computer vision tasks and show that Albumentations is faster than other commonly used image augmentation tools on the most of commonly used image transformations.

-

-

-

+## Results and Models

-## Citation

+| Backbone | Style | Lr schd | Mem (GB) | Inf time (fps) | box AP | mask AP | Config | Download |

+|:---------:|:-------:|:-------:|:--------:|:--------------:|:------:|:-------:|:------:|:--------:|

+| R-50 | pytorch | 1x | 4.4 | 16.6 | 38.0 | 34.5 |[config](https://github.com/open-mmlab/mmdetection/tree/master/configs/albu_example/mask_rcnn_r50_fpn_albu_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/albu_example/mask_rcnn_r50_fpn_albu_1x_coco/mask_rcnn_r50_fpn_albu_1x_coco_20200208-ab203bcd.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/albu_example/mask_rcnn_r50_fpn_albu_1x_coco/mask_rcnn_r50_fpn_albu_1x_coco_20200208_225520.log.json) |

-

+## Citation

-```

+```latex

@article{2018arXiv180906839B,

author = {A. Buslaev, A. Parinov, E. Khvedchenya, V.~I. Iglovikov and A.~A. Kalinin},

title = "{Albumentations: fast and flexible image augmentations}",

@@ -27,9 +29,3 @@ Data augmentation is a commonly used technique for increasing both the size and

year = 2018

}

```

-

-## Results and Models

-

-| Backbone | Style | Lr schd | Mem (GB) | Inf time (fps) | box AP | mask AP | Config | Download |

-|:---------:|:-------:|:-------:|:--------:|:--------------:|:------:|:-------:|:------:|:--------:|

-| R-50 | pytorch | 1x | 4.4 | 16.6 | 38.0 | 34.5 |[config](https://github.com/open-mmlab/mmdetection/tree/master/configs/albu_example/mask_rcnn_r50_fpn_albu_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/albu_example/mask_rcnn_r50_fpn_albu_1x_coco/mask_rcnn_r50_fpn_albu_1x_coco_20200208-ab203bcd.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/albu_example/mask_rcnn_r50_fpn_albu_1x_coco/mask_rcnn_r50_fpn_albu_1x_coco_20200208_225520.log.json) |

diff --git a/configs/atss/README.md b/configs/atss/README.md

index 035964f9f76..1bf694983ed 100644

--- a/configs/atss/README.md

+++ b/configs/atss/README.md

@@ -1,22 +1,25 @@

-# Bridging the Gap Between Anchor-based and Anchor-free Detection via Adaptive Training Sample Selection

+# ATSS

-## Abstract

+> [Bridging the Gap Between Anchor-based and Anchor-free Detection via Adaptive Training Sample Selection](https://arxiv.org/abs/1912.02424)

-

+

+

+## Abstract

Object detection has been dominated by anchor-based detectors for several years. Recently, anchor-free detectors have become popular due to the proposal of FPN and Focal Loss. In this paper, we first point out that the essential difference between anchor-based and anchor-free detection is actually how to define positive and negative training samples, which leads to the performance gap between them. If they adopt the same definition of positive and negative samples during training, there is no obvious difference in the final performance, no matter regressing from a box or a point. This shows that how to select positive and negative training samples is important for current object detectors. Then, we propose an Adaptive Training Sample Selection (ATSS) to automatically select positive and negative samples according to statistical characteristics of object. It significantly improves the performance of anchor-based and anchor-free detectors and bridges the gap between them. Finally, we discuss the necessity of tiling multiple anchors per location on the image to detect objects. Extensive experiments conducted on MS COCO support our aforementioned analysis and conclusions. With the newly introduced ATSS, we improve state-of-the-art detectors by a large margin to 50.7% AP without introducing any overhead.

-

-

-

+## Results and Models

-## Citation

+| Backbone | Style | Lr schd | Mem (GB) | Inf time (fps) | box AP | Config | Download |

+|:---------:|:-------:|:-------:|:--------:|:--------------:|:------:|:------:|:--------:|

+| R-50 | pytorch | 1x | 3.7 | 19.7 | 39.4 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/atss/atss_r50_fpn_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/atss/atss_r50_fpn_1x_coco/atss_r50_fpn_1x_coco_20200209-985f7bd0.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/atss/atss_r50_fpn_1x_coco/atss_r50_fpn_1x_coco_20200209_102539.log.json) |

+| R-101 | pytorch | 1x | 5.6 | 12.3 | 41.5 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/atss/atss_r101_fpn_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/atss/atss_r101_fpn_1x_coco/atss_r101_fpn_1x_20200825-dfcadd6f.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/atss/atss_r101_fpn_1x_coco/atss_r101_fpn_1x_20200825-dfcadd6f.log.json) |

-

+## Citation

```latex

@article{zhang2019bridging,

@@ -26,10 +29,3 @@ Object detection has been dominated by anchor-based detectors for several years.

year = {2019}

}

```

-

-## Results and Models

-

-| Backbone | Style | Lr schd | Mem (GB) | Inf time (fps) | box AP | Config | Download |

-|:---------:|:-------:|:-------:|:--------:|:--------------:|:------:|:------:|:--------:|

-| R-50 | pytorch | 1x | 3.7 | 19.7 | 39.4 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/atss/atss_r50_fpn_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/atss/atss_r50_fpn_1x_coco/atss_r50_fpn_1x_coco_20200209-985f7bd0.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/atss/atss_r50_fpn_1x_coco/atss_r50_fpn_1x_coco_20200209_102539.log.json) |

-| R-101 | pytorch | 1x | 5.6 | 12.3 | 41.5 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/atss/atss_r101_fpn_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/atss/atss_r101_fpn_1x_coco/atss_r101_fpn_1x_20200825-dfcadd6f.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/atss/atss_r101_fpn_1x_coco/atss_r101_fpn_1x_20200825-dfcadd6f.log.json) |

diff --git a/configs/autoassign/README.md b/configs/autoassign/README.md

index 172071d5001..8e8341a717a 100644

--- a/configs/autoassign/README.md

+++ b/configs/autoassign/README.md

@@ -1,32 +1,17 @@

-# AutoAssign: Differentiable Label Assignment for Dense Object Detection

+# AutoAssign

-## Abstract

+> [AutoAssign: Differentiable Label Assignment for Dense Object Detection](https://arxiv.org/abs/2007.03496)

+

+

-

+## Abstract

Determining positive/negative samples for object detection is known as label assignment. Here we present an anchor-free detector named AutoAssign. It requires little human knowledge and achieves appearance-aware through a fully differentiable weighting mechanism. During training, to both satisfy the prior distribution of data and adapt to category characteristics, we present Center Weighting to adjust the category-specific prior distributions. To adapt to object appearances, Confidence Weighting is proposed to adjust the specific assign strategy of each instance. The two weighting modules are then combined to generate positive and negative weights to adjust each location's confidence. Extensive experiments on the MS COCO show that our method steadily surpasses other best sampling strategies by large margins with various backbones. Moreover, our best model achieves 52.1% AP, outperforming all existing one-stage detectors. Besides, experiments on other datasets, e.g., PASCAL VOC, Objects365, and WiderFace, demonstrate the broad applicability of AutoAssign.

-

-

-

-

-## Citation

-

-

-

-```

-@article{zhu2020autoassign,

- title={AutoAssign: Differentiable Label Assignment for Dense Object Detection},

- author={Zhu, Benjin and Wang, Jianfeng and Jiang, Zhengkai and Zong, Fuhang and Liu, Songtao and Li, Zeming and Sun, Jian},

- journal={arXiv preprint arXiv:2007.03496},

- year={2020}

-}

-```

-

## Results and Models

| Backbone | Style | Lr schd | Mem (GB) | box AP | Config | Download |

@@ -37,3 +22,14 @@ Determining positive/negative samples for object detection is known as label ass

1. We find that the performance is unstable with 1x setting and may fluctuate by about 0.3 mAP. mAP 40.3 ~ 40.6 is acceptable. Such fluctuation can also be found in the original implementation.

2. You can get a more stable results ~ mAP 40.6 with a schedule total 13 epoch, and learning rate is divided by 10 at 10th and 13th epoch.

+

+## Citation

+

+```latex

+@article{zhu2020autoassign,

+ title={AutoAssign: Differentiable Label Assignment for Dense Object Detection},

+ author={Zhu, Benjin and Wang, Jianfeng and Jiang, Zhengkai and Zong, Fuhang and Liu, Songtao and Li, Zeming and Sun, Jian},

+ journal={arXiv preprint arXiv:2007.03496},

+ year={2020}

+}

+```

diff --git a/configs/carafe/README.md b/configs/carafe/README.md

index dca52e6d1ef..983aafb412a 100644

--- a/configs/carafe/README.md

+++ b/configs/carafe/README.md

@@ -1,35 +1,17 @@

-# CARAFE: Content-Aware ReAssembly of FEatures

+# CARAFE

-## Abstract

+> [CARAFE: Content-Aware ReAssembly of FEatures](https://arxiv.org/abs/1905.02188)

-

+

+

+## Abstract

Feature upsampling is a key operation in a number of modern convolutional network architectures, e.g. feature pyramids. Its design is critical for dense prediction tasks such as object detection and semantic/instance segmentation. In this work, we propose Content-Aware ReAssembly of FEatures (CARAFE), a universal, lightweight and highly effective operator to fulfill this goal. CARAFE has several appealing properties: (1) Large field of view. Unlike previous works (e.g. bilinear interpolation) that only exploit sub-pixel neighborhood, CARAFE can aggregate contextual information within a large receptive field. (2) Content-aware handling. Instead of using a fixed kernel for all samples (e.g. deconvolution), CARAFE enables instance-specific content-aware handling, which generates adaptive kernels on-the-fly. (3) Lightweight and fast to compute. CARAFE introduces little computational overhead and can be readily integrated into modern network architectures. We conduct comprehensive evaluations on standard benchmarks in object detection, instance/semantic segmentation and inpainting. CARAFE shows consistent and substantial gains across all the tasks (1.2%, 1.3%, 1.8%, 1.1db respectively) with negligible computational overhead. It has great potential to serve as a strong building block for future research. It has great potential to serve as a strong building block for future research.

-

-

-

-

-## Citation

-

-

-

-We provide config files to reproduce the object detection & instance segmentation results in the ICCV 2019 Oral paper for [CARAFE: Content-Aware ReAssembly of FEatures](https://arxiv.org/abs/1905.02188).

-

-```

-@inproceedings{Wang_2019_ICCV,

- title = {CARAFE: Content-Aware ReAssembly of FEatures},

- author = {Wang, Jiaqi and Chen, Kai and Xu, Rui and Liu, Ziwei and Loy, Chen Change and Lin, Dahua},

- booktitle = {The IEEE International Conference on Computer Vision (ICCV)},

- month = {October},

- year = {2019}

-}

-```

-

## Results and Models

The results on COCO 2017 val is shown in the below table.

@@ -44,3 +26,17 @@ The results on COCO 2017 val is shown in the below table.

## Implementation

The CUDA implementation of CARAFE can be find at https://github.com/myownskyW7/CARAFE.

+

+## Citation

+

+We provide config files to reproduce the object detection & instance segmentation results in the ICCV 2019 Oral paper for [CARAFE: Content-Aware ReAssembly of FEatures](https://arxiv.org/abs/1905.02188).

+

+```latex

+@inproceedings{Wang_2019_ICCV,

+ title = {CARAFE: Content-Aware ReAssembly of FEatures},

+ author = {Wang, Jiaqi and Chen, Kai and Xu, Rui and Liu, Ziwei and Loy, Chen Change and Lin, Dahua},

+ booktitle = {The IEEE International Conference on Computer Vision (ICCV)},

+ month = {October},

+ year = {2019}

+}

+```

diff --git a/configs/cascade_rcnn/README.md b/configs/cascade_rcnn/README.md

index a88cfd772a6..109fd7c3ded 100644

--- a/configs/cascade_rcnn/README.md

+++ b/configs/cascade_rcnn/README.md

@@ -1,38 +1,18 @@

-# Cascade R-CNN: High Quality Object Detection and Instance Segmentation

+# Cascade R-CNN

-## Abstract

+> [Cascade R-CNN: High Quality Object Detection and Instance Segmentation](https://arxiv.org/abs/1906.09756)

-

+

+

+## Abstract

In object detection, the intersection over union (IoU) threshold is frequently used to define positives/negatives. The threshold used to train a detector defines its quality. While the commonly used threshold of 0.5 leads to noisy (low-quality) detections, detection performance frequently degrades for larger thresholds. This paradox of high-quality detection has two causes: 1) overfitting, due to vanishing positive samples for large thresholds, and 2) inference-time quality mismatch between detector and test hypotheses. A multi-stage object detection architecture, the Cascade R-CNN, composed of a sequence of detectors trained with increasing IoU thresholds, is proposed to address these problems. The detectors are trained sequentially, using the output of a detector as training set for the next. This resampling progressively improves hypotheses quality, guaranteeing a positive training set of equivalent size for all detectors and minimizing overfitting. The same cascade is applied at inference, to eliminate quality mismatches between hypotheses and detectors. An implementation of the Cascade R-CNN without bells or whistles achieves state-of-the-art performance on the COCO dataset, and significantly improves high-quality detection on generic and specific object detection datasets, including VOC, KITTI, CityPerson, and WiderFace. Finally, the Cascade R-CNN is generalized to instance segmentation, with nontrivial improvements over the Mask R-CNN.

-

-

-

-

-## Citation

-

-

-

-```latex

-@article{Cai_2019,

- title={Cascade R-CNN: High Quality Object Detection and Instance Segmentation},

- ISSN={1939-3539},

- url={http://dx.doi.org/10.1109/tpami.2019.2956516},

- DOI={10.1109/tpami.2019.2956516},

- journal={IEEE Transactions on Pattern Analysis and Machine Intelligence},

- publisher={Institute of Electrical and Electronics Engineers (IEEE)},

- author={Cai, Zhaowei and Vasconcelos, Nuno},

- year={2019},

- pages={1–1}

-}

-```

-

-## Results and models

+## Results and Models

### Cascade R-CNN

@@ -81,3 +61,19 @@ We also train some models with longer schedules and multi-scale training for Cas

| X-101-32x4d-FPN | pytorch| 3x | 9.0 | | 46.3 | 40.1 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/cascade_rcnn/cascade_mask_rcnn_x101_32x4d_fpn_mstrain_3x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/cascade_rcnn/cascade_mask_rcnn_x101_32x4d_fpn_mstrain_3x_coco/cascade_mask_rcnn_x101_32x4d_fpn_mstrain_3x_coco_20210706_225234-40773067.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/cascade_rcnn/cascade_mask_rcnn_x101_32x4d_fpn_mstrain_3x_coco/cascade_mask_rcnn_x101_32x4d_fpn_mstrain_3x_coco_20210706_225234.log.json)

| X-101-32x8d-FPN | pytorch| 3x | 12.1 | | 46.1 | 39.9 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/cascade_rcnn/cascade_mask_rcnn_x101_32x8d_fpn_mstrain_3x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/cascade_rcnn/cascade_mask_rcnn_x101_32x8d_fpn_mstrain_3x_coco/cascade_mask_rcnn_x101_32x8d_fpn_mstrain_3x_coco_20210719_180640-9ff7e76f.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/cascade_rcnn/cascade_mask_rcnn_x101_32x8d_fpn_mstrain_3x_coco/cascade_mask_rcnn_x101_32x8d_fpn_mstrain_3x_coco_20210719_180640.log.json)

| X-101-64x4d-FPN | pytorch| 3x | 12.0 | | 46.6 | 40.3 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/cascade_rcnn/cascade_mask_rcnn_x101_64x4d_fpn_mstrain_3x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/cascade_rcnn/cascade_mask_rcnn_x101_64x4d_fpn_mstrain_3x_coco/cascade_mask_rcnn_x101_64x4d_fpn_mstrain_3x_coco_20210719_210311-d3e64ba0.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/cascade_rcnn/cascade_mask_rcnn_x101_64x4d_fpn_mstrain_3x_coco/cascade_mask_rcnn_x101_64x4d_fpn_mstrain_3x_coco_20210719_210311.log.json)

+

+## Citation

+

+```latex

+@article{Cai_2019,

+ title={Cascade R-CNN: High Quality Object Detection and Instance Segmentation},

+ ISSN={1939-3539},

+ url={http://dx.doi.org/10.1109/tpami.2019.2956516},

+ DOI={10.1109/tpami.2019.2956516},

+ journal={IEEE Transactions on Pattern Analysis and Machine Intelligence},

+ publisher={Institute of Electrical and Electronics Engineers (IEEE)},

+ author={Cai, Zhaowei and Vasconcelos, Nuno},

+ year={2019},

+ pages={1–1}

+}

+```

diff --git a/configs/cascade_rpn/README.md b/configs/cascade_rpn/README.md

index 06b25a53bc1..900dc2916cf 100644

--- a/configs/cascade_rpn/README.md

+++ b/configs/cascade_rpn/README.md

@@ -1,35 +1,18 @@

-# Cascade RPN: Delving into High-Quality Region Proposal Network with Adaptive Convolution

+# Cascade RPN

-## Abstract

+> [Cascade RPN: Delving into High-Quality Region Proposal Network with Adaptive Convolution](https://arxiv.org/abs/1909.06720)

+

+

-

+## Abstract

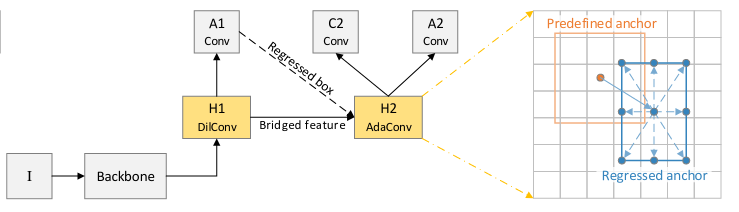

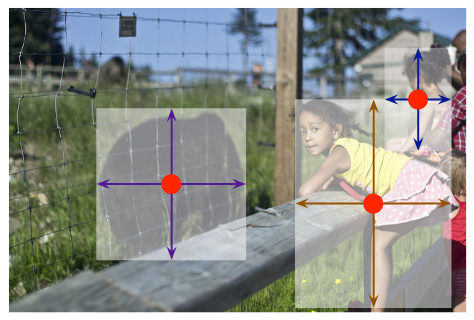

This paper considers an architecture referred to as Cascade Region Proposal Network (Cascade RPN) for improving the region-proposal quality and detection performance by systematically addressing the limitation of the conventional RPN that heuristically defines the anchors and aligns the features to the anchors. First, instead of using multiple anchors with predefined scales and aspect ratios, Cascade RPN relies on a single anchor per location and performs multi-stage refinement. Each stage is progressively more stringent in defining positive samples by starting out with an anchor-free metric followed by anchor-based metrics in the ensuing stages. Second, to attain alignment between the features and the anchors throughout the stages, adaptive convolution is proposed that takes the anchors in addition to the image features as its input and learns the sampled features guided by the anchors. A simple implementation of a two-stage Cascade RPN achieves AR 13.4 points higher than that of the conventional RPN, surpassing any existing region proposal methods. When adopting to Fast R-CNN and Faster R-CNN, Cascade RPN can improve the detection mAP by 3.1 and 3.5 points, respectively.

-

-

-

-

-## Citation

-

-

-

-We provide the code for reproducing experiment results of [Cascade RPN](https://arxiv.org/abs/1909.06720).

-

-```

-@inproceedings{vu2019cascade,

- title={Cascade RPN: Delving into High-Quality Region Proposal Network with Adaptive Convolution},

- author={Vu, Thang and Jang, Hyunjun and Pham, Trung X and Yoo, Chang D},

- booktitle={Conference on Neural Information Processing Systems (NeurIPS)},

- year={2019}

-}

-```

-

-## Benchmark

+## Results and Models

### Region proposal performance

@@ -43,3 +26,16 @@ We provide the code for reproducing experiment results of [Cascade RPN](https://

|:-------------:|:-----------:|:--------:|:-------:|:--------:|:--------:|:-------------------:|:--------------:|:------:|:-------:|:--------------------------------------------:|

| Fast R-CNN | Cascade RPN | R-50-FPN | caffe | 1x | - | - | - | 39.9 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/cascade_rpn/crpn_fast_rcnn_r50_caffe_fpn_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/cascade_rpn/crpn_fast_rcnn_r50_caffe_fpn_1x_coco/crpn_fast_rcnn_r50_caffe_fpn_1x_coco-cb486e66.pth) |

| Faster R-CNN | Cascade RPN | R-50-FPN | caffe | 1x | - | - | - | 40.4 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/cascade_rpn/crpn_faster_rcnn_r50_caffe_fpn_1x_coco.py) |[model](https://download.openmmlab.com/mmdetection/v2.0/cascade_rpn/crpn_faster_rcnn_r50_caffe_fpn_1x_coco/crpn_faster_rcnn_r50_caffe_fpn_1x_coco-c8283cca.pth) |

+

+## Citation

+

+We provide the code for reproducing experiment results of [Cascade RPN](https://arxiv.org/abs/1909.06720).

+

+```latex

+@inproceedings{vu2019cascade,

+ title={Cascade RPN: Delving into High-Quality Region Proposal Network with Adaptive Convolution},

+ author={Vu, Thang and Jang, Hyunjun and Pham, Trung X and Yoo, Chang D},

+ booktitle={Conference on Neural Information Processing Systems (NeurIPS)},

+ year={2019}

+}

+```

diff --git a/configs/cascade_rpn/metafile.yml b/configs/cascade_rpn/metafile.yml

new file mode 100644

index 00000000000..335b2bc7ef4

--- /dev/null

+++ b/configs/cascade_rpn/metafile.yml

@@ -0,0 +1,44 @@

+Collections:

+ - Name: Cascade RPN

+ Metadata:

+ Training Data: COCO

+ Training Techniques:

+ - SGD with Momentum

+ - Weight Decay

+ Training Resources: 8x V100 GPUs

+ Architecture:

+ - Cascade RPN

+ - FPN

+ - ResNet

+ Paper:

+ URL: https://arxiv.org/abs/1909.06720

+ Title: 'Cascade RPN: Delving into High-Quality Region Proposal Network with Adaptive Convolution'

+ README: configs/cascade_rpn/README.md

+ Code:

+ URL: https://github.com/open-mmlab/mmdetection/blob/v2.8.0/mmdet/models/dense_heads/cascade_rpn_head.py#L538

+ Version: v2.8.0

+

+Models:

+ - Name: crpn_fast_rcnn_r50_caffe_fpn_1x_coco

+ In Collection: Cascade RPN

+ Config: configs/cascade_rpn/crpn_fast_rcnn_r50_caffe_fpn_1x_coco.py

+ Metadata:

+ Epochs: 12

+ Results:

+ - Task: Object Detection

+ Dataset: COCO

+ Metrics:

+ box AP: 39.9

+ Weights: https://download.openmmlab.com/mmdetection/v2.0/cascade_rpn/crpn_fast_rcnn_r50_caffe_fpn_1x_coco/crpn_fast_rcnn_r50_caffe_fpn_1x_coco-cb486e66.pth

+

+ - Name: crpn_faster_rcnn_r50_caffe_fpn_1x_coco

+ In Collection: Cascade RPN

+ Config: configs/cascade_rpn/crpn_faster_rcnn_r50_caffe_fpn_1x_coco.py

+ Metadata:

+ Epochs: 12

+ Results:

+ - Task: Object Detection

+ Dataset: COCO

+ Metrics:

+ box AP: 40.4

+ Weights: https://download.openmmlab.com/mmdetection/v2.0/cascade_rpn/crpn_faster_rcnn_r50_caffe_fpn_1x_coco/crpn_faster_rcnn_r50_caffe_fpn_1x_coco-c8283cca.pth

diff --git a/configs/centernet/README.md b/configs/centernet/README.md

index 37c18e7084d..ffc1d8c2477 100644

--- a/configs/centernet/README.md

+++ b/configs/centernet/README.md

@@ -1,33 +1,18 @@

-# Objects as Points

+# CenterNet

-## Abstract

+> [Objects as Points](https://arxiv.org/abs/1904.07850)

+

+

-

+## Abstract

Detection identifies objects as axis-aligned boxes in an image. Most successful object detectors enumerate a nearly exhaustive list of potential object locations and classify each. This is wasteful, inefficient, and requires additional post-processing. In this paper, we take a different approach. We model an object as a single point --- the center point of its bounding box. Our detector uses keypoint estimation to find center points and regresses to all other object properties, such as size, 3D location, orientation, and even pose. Our center point based approach, CenterNet, is end-to-end differentiable, simpler, faster, and more accurate than corresponding bounding box based detectors. CenterNet achieves the best speed-accuracy trade-off on the MS COCO dataset, with 28.1% AP at 142 FPS, 37.4% AP at 52 FPS, and 45.1% AP with multi-scale testing at 1.4 FPS. We use the same approach to estimate 3D bounding box in the KITTI benchmark and human pose on the COCO keypoint dataset. Our method performs competitively with sophisticated multi-stage methods and runs in real-time.

-

-

-

-

-## Citation

-

-

-

-```latex

-@article{zhou2019objects,

- title={Objects as Points},

- author={Zhou, Xingyi and Wang, Dequan and Kr{\"a}henb{\"u}hl, Philipp},

- booktitle={arXiv preprint arXiv:1904.07850},

- year={2019}

-}

-```

-

-## Results and models

+## Results and Models

| Backbone | DCN | Mem (GB) | Box AP | Flip box AP| Config | Download |

| :-------------: | :--------: |:----------------: | :------: | :------------: | :----: | :----: |

@@ -42,3 +27,14 @@ Note:

- fix wrong image mean and variance in image normalization to be compatible with the pre-trained backbone.

- Use SGD rather than ADAM optimizer and add warmup and grad clip.

- Use DistributedDataParallel as other models in MMDetection rather than using DataParallel.

+

+## Citation

+

+```latex

+@article{zhou2019objects,

+ title={Objects as Points},

+ author={Zhou, Xingyi and Wang, Dequan and Kr{\"a}henb{\"u}hl, Philipp},

+ booktitle={arXiv preprint arXiv:1904.07850},

+ year={2019}

+}

+```

diff --git a/configs/centripetalnet/README.md b/configs/centripetalnet/README.md

index f3d22a57ab4..1a5a346bf3f 100644

--- a/configs/centripetalnet/README.md

+++ b/configs/centripetalnet/README.md

@@ -1,22 +1,29 @@

-# CentripetalNet: Pursuing High-quality Keypoint Pairs for Object Detection

+# CentripetalNet

-## Abstract

+> [CentripetalNet: Pursuing High-quality Keypoint Pairs for Object Detection](https://arxiv.org/abs/2003.09119)

+

+

-

+## Abstract

Keypoint-based detectors have achieved pretty-well performance. However, incorrect keypoint matching is still widespread and greatly affects the performance of the detector. In this paper, we propose CentripetalNet which uses centripetal shift to pair corner keypoints from the same instance. CentripetalNet predicts the position and the centripetal shift of the corner points and matches corners whose shifted results are aligned. Combining position information, our approach matches corner points more accurately than the conventional embedding approaches do. Corner pooling extracts information inside the bounding boxes onto the border. To make this information more aware at the corners, we design a cross-star deformable convolution network to conduct feature adaption. Furthermore, we explore instance segmentation on anchor-free detectors by equipping our CentripetalNet with a mask prediction module. On MS-COCO test-dev, our CentripetalNet not only outperforms all existing anchor-free detectors with an AP of 48.0% but also achieves comparable performance to the state-of-the-art instance segmentation approaches with a 40.2% MaskAP.

-

-

-

+## Results and Models

-## Citation

+| Backbone | Batch Size | Step/Total Epochs | Mem (GB) | Inf time (fps) | box AP | Config | Download |

+| :-------------: | :--------: |:----------------: | :------: | :------------: | :----: | :------: | :--------: |

+| HourglassNet-104 | [16 x 6](./centripetalnet_hourglass104_mstest_16x6_210e_coco.py) | 190/210 | 16.7 | 3.7 | 44.8 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/centripetalnet/centripetalnet_hourglass104_mstest_16x6_210e_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/centripetalnet/centripetalnet_hourglass104_mstest_16x6_210e_coco/centripetalnet_hourglass104_mstest_16x6_210e_coco_20200915_204804-3ccc61e5.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/centripetalnet/centripetalnet_hourglass104_mstest_16x6_210e_coco/centripetalnet_hourglass104_mstest_16x6_210e_coco_20200915_204804.log.json) |

-

+Note:

+

+- TTA setting is single-scale and `flip=True`.

+- The model we released is the best checkpoint rather than the latest checkpoint (box AP 44.8 vs 44.6 in our experiment).

+

+## Citation

```latex

@InProceedings{Dong_2020_CVPR,

@@ -27,14 +34,3 @@ month = {June},

year = {2020}

}

```

-

-## Results and models

-

-| Backbone | Batch Size | Step/Total Epochs | Mem (GB) | Inf time (fps) | box AP | Config | Download |

-| :-------------: | :--------: |:----------------: | :------: | :------------: | :----: | :------: | :--------: |

-| HourglassNet-104 | [16 x 6](./centripetalnet_hourglass104_mstest_16x6_210e_coco.py) | 190/210 | 16.7 | 3.7 | 44.8 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/centripetalnet/centripetalnet_hourglass104_mstest_16x6_210e_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/centripetalnet/centripetalnet_hourglass104_mstest_16x6_210e_coco/centripetalnet_hourglass104_mstest_16x6_210e_coco_20200915_204804-3ccc61e5.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/centripetalnet/centripetalnet_hourglass104_mstest_16x6_210e_coco/centripetalnet_hourglass104_mstest_16x6_210e_coco_20200915_204804.log.json) |

-

-Note:

-

-- TTA setting is single-scale and `flip=True`.

-- The model we released is the best checkpoint rather than the latest checkpoint (box AP 44.8 vs 44.6 in our experiment).

diff --git a/configs/cityscapes/README.md b/configs/cityscapes/README.md

index 28310f15a9e..7522ffe4993 100644

--- a/configs/cityscapes/README.md

+++ b/configs/cityscapes/README.md

@@ -1,33 +1,18 @@

-# The Cityscapes Dataset for Semantic Urban Scene Understanding

+# Cityscapes

-## Abstract

+> [The Cityscapes Dataset for Semantic Urban Scene Understanding](https://arxiv.org/abs/1604.01685)

+

+

-

+## Abstract

Visual understanding of complex urban street scenes is an enabling factor for a wide range of applications. Object detection has benefited enormously from large-scale datasets, especially in the context of deep learning. For semantic urban scene understanding, however, no current dataset adequately captures the complexity of real-world urban scenes.

To address this, we introduce Cityscapes, a benchmark suite and large-scale dataset to train and test approaches for pixel-level and instance-level semantic labeling. Cityscapes is comprised of a large, diverse set of stereo video sequences recorded in streets from 50 different cities. 5000 of these images have high quality pixel-level annotations; 20000 additional images have coarse annotations to enable methods that leverage large volumes of weakly-labeled data. Crucially, our effort exceeds previous attempts in terms of dataset size, annotation richness, scene variability, and complexity. Our accompanying empirical study provides an in-depth analysis of the dataset characteristics, as well as a performance evaluation of several state-of-the-art approaches based on our benchmark.

-

-

-

-

-## Citation

-

-

-

-```

-@inproceedings{Cordts2016Cityscapes,

- title={The Cityscapes Dataset for Semantic Urban Scene Understanding},

- author={Cordts, Marius and Omran, Mohamed and Ramos, Sebastian and Rehfeld, Timo and Enzweiler, Markus and Benenson, Rodrigo and Franke, Uwe and Roth, Stefan and Schiele, Bernt},

- booktitle={Proc. of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

- year={2016}

-}

-```

-

## Common settings

- All baselines were trained using 8 GPU with a batch size of 8 (1 images per GPU) using the [linear scaling rule](https://arxiv.org/abs/1706.02677) to scale the learning rate.

@@ -48,3 +33,14 @@ To address this, we introduce Cityscapes, a benchmark suite and large-scale data

| Backbone | Style | Lr schd | Scale | Mem (GB) | Inf time (fps) | box AP | mask AP | Config | Download |

| :-------------: | :-----: | :-----: | :------: | :------: | :------------: | :----: | :-----: | :------: | :------: |

| R-50-FPN | pytorch | 1x | 800-1024 | 5.3 | - | 40.9 | 36.4 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/cityscapes/mask_rcnn_r50_fpn_1x_cityscapes.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/cityscapes/mask_rcnn_r50_fpn_1x_cityscapes/mask_rcnn_r50_fpn_1x_cityscapes_20201211_133733-d2858245.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/cityscapes/mask_rcnn_r50_fpn_1x_cityscapes/mask_rcnn_r50_fpn_1x_cityscapes_20201211_133733.log.json) |

+

+## Citation

+

+```latex

+@inproceedings{Cordts2016Cityscapes,

+ title={The Cityscapes Dataset for Semantic Urban Scene Understanding},

+ author={Cordts, Marius and Omran, Mohamed and Ramos, Sebastian and Rehfeld, Timo and Enzweiler, Markus and Benenson, Rodrigo and Franke, Uwe and Roth, Stefan and Schiele, Bernt},

+ booktitle={Proc. of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

+ year={2016}

+}

+```

diff --git a/configs/cornernet/README.md b/configs/cornernet/README.md

index d7dc08c4aa9..55877c4c4bf 100644

--- a/configs/cornernet/README.md

+++ b/configs/cornernet/README.md

@@ -1,35 +1,18 @@

-# Cornernet: Detecting objects as paired keypoints

+# CornerNet

-## Abstract

+> [Cornernet: Detecting objects as paired keypoints](https://arxiv.org/abs/1808.01244)

-

+

+

+## Abstract

We propose CornerNet, a new approach to object detection where we detect an object bounding box as a pair of keypoints, the top-left corner and the bottom-right corner, using a single convolution neural network. By detecting objects as paired keypoints, we eliminate the need for designing a set of anchor boxes commonly used in prior single-stage detectors. In addition to our novel formulation, we introduce corner pooling, a new type of pooling layer that helps the network better localize corners. Experiments show that CornerNet achieves a 42.2% AP on MS COCO, outperforming all existing one-stage detectors.

-

-

-

-

-## Citation

-

-

-

-```latex

-@inproceedings{law2018cornernet,

- title={Cornernet: Detecting objects as paired keypoints},

- author={Law, Hei and Deng, Jia},

- booktitle={15th European Conference on Computer Vision, ECCV 2018},

- pages={765--781},

- year={2018},

- organization={Springer Verlag}

-}

-```

-

-## Results and models

+## Results and Models

| Backbone | Batch Size | Step/Total Epochs | Mem (GB) | Inf time (fps) | box AP | Config | Download |

| :-------------: | :--------: |:----------------: | :------: | :------------: | :----: | :------: | :--------: |

@@ -45,3 +28,16 @@ Note:

- 10 x 5: 10 GPUs with 5 images per gpu. This is the same setting as that reported in the original paper.

- 8 x 6: 8 GPUs with 6 images per gpu. The total batchsize is similar to paper and only need 1 node to train.

- 32 x 3: 32 GPUs with 3 images per gpu. The default setting for 1080TI and need 4 nodes to train.

+

+## Citation

+

+```latex

+@inproceedings{law2018cornernet,

+ title={Cornernet: Detecting objects as paired keypoints},

+ author={Law, Hei and Deng, Jia},

+ booktitle={15th European Conference on Computer Vision, ECCV 2018},

+ pages={765--781},

+ year={2018},

+ organization={Springer Verlag}

+}

+```

diff --git a/configs/dcn/README.md b/configs/dcn/README.md

index d9d23f07b06..7866078af2e 100644

--- a/configs/dcn/README.md

+++ b/configs/dcn/README.md

@@ -1,56 +1,26 @@

-# Deformable Convolutional Networks

+# DCN

-## Abstract

+> [Deformable Convolutional Networks](https://arxiv.org/abs/1703.06211)

-

+

+

+## Abstract

Convolutional neural networks (CNNs) are inherently limited to model geometric transformations due to the fixed geometric structures in its building modules. In this work, we introduce two new modules to enhance the transformation modeling capacity of CNNs, namely, deformable convolution and deformable RoI pooling. Both are based on the idea of augmenting the spatial sampling locations in the modules with additional offsets and learning the offsets from target tasks, without additional supervision. The new modules can readily replace their plain counterparts in existing CNNs and can be easily trained end-to-end by standard back-propagation, giving rise to deformable convolutional networks. Extensive experiments validate the effectiveness of our approach on sophisticated vision tasks of object detection and semantic segmentation.

-

-

-

-

-## Citation

-

-

-

-```none

-@inproceedings{dai2017deformable,

- title={Deformable Convolutional Networks},

- author={Dai, Jifeng and Qi, Haozhi and Xiong, Yuwen and Li, Yi and Zhang, Guodong and Hu, Han and Wei, Yichen},

- booktitle={Proceedings of the IEEE international conference on computer vision},

- year={2017}

-}

-```

-

-

-

-```

-@article{zhu2018deformable,

- title={Deformable ConvNets v2: More Deformable, Better Results},

- author={Zhu, Xizhou and Hu, Han and Lin, Stephen and Dai, Jifeng},

- journal={arXiv preprint arXiv:1811.11168},

- year={2018}

-}

-```

-

## Results and Models

| Backbone | Model | Style | Conv | Pool | Lr schd | Mem (GB) | Inf time (fps) | box AP | mask AP | Config | Download |

|:----------------:|:------------:|:-------:|:-------------:|:------:|:-------:|:--------:|:--------------:|:------:|:-------:|:------:|:--------:|

| R-50-FPN | Faster | pytorch | dconv(c3-c5) | - | 1x | 4.0 | 17.8 | 41.3 | | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/faster_rcnn_r50_fpn_dconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_dconv_c3-c5_1x_coco/faster_rcnn_r50_fpn_dconv_c3-c5_1x_coco_20200130-d68aed1e.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_dconv_c3-c5_1x_coco/faster_rcnn_r50_fpn_dconv_c3-c5_1x_coco_20200130_212941.log.json) |

-| R-50-FPN | Faster | pytorch | mdconv(c3-c5) | - | 1x | 4.1 | 17.6 | 41.4 | | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/faster_rcnn_r50_fpn_mdconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_mdconv_c3-c5_1x_coco/faster_rcnn_r50_fpn_mdconv_c3-c5_1x_coco_20200130-d099253b.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_mdconv_c3-c5_1x_coco/faster_rcnn_r50_fpn_mdconv_c3-c5_1x_coco_20200130_222144.log.json) |

-| *R-50-FPN (dg=4) | Faster | pytorch | mdconv(c3-c5) | - | 1x | 4.2 | 17.4 | 41.5 | | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/faster_rcnn_r50_fpn_mdconv_c3-c5_group4_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_mdconv_c3-c5_group4_1x_coco/faster_rcnn_r50_fpn_mdconv_c3-c5_group4_1x_coco_20200130-01262257.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_mdconv_c3-c5_group4_1x_coco/faster_rcnn_r50_fpn_mdconv_c3-c5_group4_1x_coco_20200130_222058.log.json) |

| R-50-FPN | Faster | pytorch | - | dpool | 1x | 5.0 | 17.2 | 38.9 | | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/faster_rcnn_r50_fpn_dpool_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_dpool_1x_coco/faster_rcnn_r50_fpn_dpool_1x_coco_20200307-90d3c01d.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_dpool_1x_coco/faster_rcnn_r50_fpn_dpool_1x_coco_20200307_203250.log.json) |

-| R-50-FPN | Faster | pytorch | - | mdpool | 1x | 5.8 | 16.6 | 38.7 | | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/faster_rcnn_r50_fpn_mdpool_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_mdpool_1x_coco/faster_rcnn_r50_fpn_mdpool_1x_coco_20200307-c0df27ff.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_mdpool_1x_coco/faster_rcnn_r50_fpn_mdpool_1x_coco_20200307_203304.log.json) |

| R-101-FPN | Faster | pytorch | dconv(c3-c5) | - | 1x | 6.0 | 12.5 | 42.7 | | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/faster_rcnn_r101_fpn_dconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r101_fpn_dconv_c3-c5_1x_coco/faster_rcnn_r101_fpn_dconv_c3-c5_1x_coco_20200203-1377f13d.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r101_fpn_dconv_c3-c5_1x_coco/faster_rcnn_r101_fpn_dconv_c3-c5_1x_coco_20200203_230019.log.json) |

| X-101-32x4d-FPN | Faster | pytorch | dconv(c3-c5) | - | 1x | 7.3 | 10.0 | 44.5 | | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/faster_rcnn_x101_32x4d_fpn_dconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_x101_32x4d_fpn_dconv_c3-c5_1x_coco/faster_rcnn_x101_32x4d_fpn_dconv_c3-c5_1x_coco_20200203-4f85c69c.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_x101_32x4d_fpn_dconv_c3-c5_1x_coco/faster_rcnn_x101_32x4d_fpn_dconv_c3-c5_1x_coco_20200203_001325.log.json) |

| R-50-FPN | Mask | pytorch | dconv(c3-c5) | - | 1x | 4.5 | 15.4 | 41.8 | 37.4 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/mask_rcnn_r50_fpn_dconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/mask_rcnn_r50_fpn_dconv_c3-c5_1x_coco/mask_rcnn_r50_fpn_dconv_c3-c5_1x_coco_20200203-4d9ad43b.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/mask_rcnn_r50_fpn_dconv_c3-c5_1x_coco/mask_rcnn_r50_fpn_dconv_c3-c5_1x_coco_20200203_061339.log.json) |

-| R-50-FPN | Mask | pytorch | mdconv(c3-c5) | - | 1x | 4.5 | 15.1 | 41.5 | 37.1 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/mask_rcnn_r50_fpn_mdconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/mask_rcnn_r50_fpn_mdconv_c3-c5_1x_coco/mask_rcnn_r50_fpn_mdconv_c3-c5_1x_coco_20200203-ad97591f.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/mask_rcnn_r50_fpn_mdconv_c3-c5_1x_coco/mask_rcnn_r50_fpn_mdconv_c3-c5_1x_coco_20200203_063443.log.json) |

| R-101-FPN | Mask | pytorch | dconv(c3-c5) | - | 1x | 6.5 | 11.7 | 43.5 | 38.9 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/mask_rcnn_r101_fpn_dconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/mask_rcnn_r101_fpn_dconv_c3-c5_1x_coco/mask_rcnn_r101_fpn_dconv_c3-c5_1x_coco_20200216-a71f5bce.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/mask_rcnn_r101_fpn_dconv_c3-c5_1x_coco/mask_rcnn_r101_fpn_dconv_c3-c5_1x_coco_20200216_191601.log.json) |

| R-50-FPN | Cascade | pytorch | dconv(c3-c5) | - | 1x | 4.5 | 14.6 | 43.8 | | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/cascade_rcnn_r50_fpn_dconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/cascade_rcnn_r50_fpn_dconv_c3-c5_1x_coco/cascade_rcnn_r50_fpn_dconv_c3-c5_1x_coco_20200130-2f1fca44.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/cascade_rcnn_r50_fpn_dconv_c3-c5_1x_coco/cascade_rcnn_r50_fpn_dconv_c3-c5_1x_coco_20200130_220843.log.json) |

| R-101-FPN | Cascade | pytorch | dconv(c3-c5) | - | 1x | 6.4 | 11.0 | 45.0 | | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/cascade_rcnn_r101_fpn_dconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/cascade_rcnn_r101_fpn_dconv_c3-c5_1x_coco/cascade_rcnn_r101_fpn_dconv_c3-c5_1x_coco_20200203-3b2f0594.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/cascade_rcnn_r101_fpn_dconv_c3-c5_1x_coco/cascade_rcnn_r101_fpn_dconv_c3-c5_1x_coco_20200203_224829.log.json) |

@@ -58,11 +28,21 @@ Convolutional neural networks (CNNs) are inherently limited to model geometric t

| R-101-FPN | Cascade Mask | pytorch | dconv(c3-c5) | - | 1x | 8.0 | 8.6 | 45.8 | 39.7 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/cascade_mask_rcnn_r101_fpn_dconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/cascade_mask_rcnn_r101_fpn_dconv_c3-c5_1x_coco/cascade_mask_rcnn_r101_fpn_dconv_c3-c5_1x_coco_20200204-df0c5f10.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/cascade_mask_rcnn_r101_fpn_dconv_c3-c5_1x_coco/cascade_mask_rcnn_r101_fpn_dconv_c3-c5_1x_coco_20200204_134006.log.json) |

| X-101-32x4d-FPN | Cascade Mask | pytorch | dconv(c3-c5) | - | 1x | 9.2 | | 47.3 | 41.1 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcn/cascade_mask_rcnn_x101_32x4d_fpn_dconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/cascade_mask_rcnn_x101_32x4d_fpn_dconv_c3-c5_1x_coco/cascade_mask_rcnn_x101_32x4d_fpn_dconv_c3-c5_1x_coco-e75f90c8.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/cascade_mask_rcnn_x101_32x4d_fpn_dconv_c3-c5_1x_coco/cascade_mask_rcnn_x101_32x4d_fpn_dconv_c3-c5_1x_coco-20200606_183737.log.json) |

| R-50-FPN (FP16) | Mask | pytorch | dconv(c3-c5) | - | 1x | 3.0 | | 41.9 | 37.5 |[config](https://github.com/open-mmlab/mmdetection/tree/master/configs/fp16/mask_rcnn_r50_fpn_fp16_dconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/fp16/mask_rcnn_r50_fpn_fp16_dconv_c3-c5_1x_coco/mask_rcnn_r50_fpn_fp16_dconv_c3-c5_1x_coco_20210520_180247-c06429d2.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/fp16/mask_rcnn_r50_fpn_fp16_dconv_c3-c5_1x_coco/mask_rcnn_r50_fpn_fp16_dconv_c3-c5_1x_coco_20210520_180247.log.json) |

-| R-50-FPN (FP16) | Mask | pytorch | mdconv(c3-c5)| - | 1x | 3.1 | | 42.0 | 37.6 |[config](https://github.com/open-mmlab/mmdetection/tree/master/configs/fp16/mask_rcnn_r50_fpn_fp16_mdconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/fp16/mask_rcnn_r50_fpn_fp16_mdconv_c3-c5_1x_coco/mask_rcnn_r50_fpn_fp16_mdconv_c3-c5_1x_coco_20210520_180434-cf8fefa5.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/fp16/mask_rcnn_r50_fpn_fp16_mdconv_c3-c5_1x_coco/mask_rcnn_r50_fpn_fp16_mdconv_c3-c5_1x_coco_20210520_180434.log.json) |

**Notes:**

-- `dconv` and `mdconv` denote (modulated) deformable convolution, `c3-c5` means adding dconv in resnet stage 3 to 5. `dpool` and `mdpool` denote (modulated) deformable roi pooling.

+- `dconv` denotes deformable convolution, `c3-c5` means adding dconv in resnet stage 3 to 5. `dpool` denotes deformable roi pooling.

- The dcn ops are modified from https://github.com/chengdazhi/Deformable-Convolution-V2-PyTorch, which should be more memory efficient and slightly faster.

- (*) For R-50-FPN (dg=4), dg is short for deformable_group. This model is trained and tested on Amazon EC2 p3dn.24xlarge instance.

- **Memory, Train/Inf time is outdated.**

+

+## Citation

+

+```latex

+@inproceedings{dai2017deformable,

+ title={Deformable Convolutional Networks},

+ author={Dai, Jifeng and Qi, Haozhi and Xiong, Yuwen and Li, Yi and Zhang, Guodong and Hu, Han and Wei, Yichen},

+ booktitle={Proceedings of the IEEE international conference on computer vision},

+ year={2017}

+}

+```

diff --git a/configs/dcn/metafile.yml b/configs/dcn/metafile.yml

index 7919b842226..36f38871446 100644

--- a/configs/dcn/metafile.yml

+++ b/configs/dcn/metafile.yml

@@ -9,8 +9,8 @@ Collections:

Architecture:

- Deformable Convolution

Paper:

- URL: https://arxiv.org/abs/1811.11168

- Title: "Deformable ConvNets v2: More Deformable, Better Results"

+ URL: https://arxiv.org/abs/1703.06211

+ Title: "Deformable Convolutional Networks"

README: configs/dcn/README.md

Code:

URL: https://github.com/open-mmlab/mmdetection/blob/v2.0.0/mmdet/ops/dcn/deform_conv.py#L15

@@ -37,46 +37,6 @@ Models:

box AP: 41.3

Weights: https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_dconv_c3-c5_1x_coco/faster_rcnn_r50_fpn_dconv_c3-c5_1x_coco_20200130-d68aed1e.pth

- - Name: faster_rcnn_r50_fpn_mdconv_c3-c5_1x_coco

- In Collection: Deformable Convolutional Networks

- Config: configs/dcn/faster_rcnn_r50_fpn_mdconv_c3-c5_1x_coco.py

- Metadata:

- Training Memory (GB): 4.1

- inference time (ms/im):

- - value: 56.82

- hardware: V100

- backend: PyTorch

- batch size: 1

- mode: FP32

- resolution: (800, 1333)

- Epochs: 12

- Results:

- - Task: Object Detection

- Dataset: COCO

- Metrics:

- box AP: 41.4

- Weights: https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_mdconv_c3-c5_1x_coco/faster_rcnn_r50_fpn_mdconv_c3-c5_1x_coco_20200130-d099253b.pth

-

- - Name: faster_rcnn_r50_fpn_mdconv_c3-c5_group4_1x_coco

- In Collection: Deformable Convolutional Networks

- Config: configs/dcn/faster_rcnn_r50_fpn_mdconv_c3-c5_group4_1x_coco.py

- Metadata:

- Training Memory (GB): 4.2

- inference time (ms/im):

- - value: 57.47

- hardware: V100

- backend: PyTorch

- batch size: 1

- mode: FP32

- resolution: (800, 1333)

- Epochs: 12

- Results:

- - Task: Object Detection

- Dataset: COCO

- Metrics:

- box AP: 41.5

- Weights: https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_mdconv_c3-c5_group4_1x_coco/faster_rcnn_r50_fpn_mdconv_c3-c5_group4_1x_coco_20200130-01262257.pth

-

- Name: faster_rcnn_r50_fpn_dpool_1x_coco

In Collection: Deformable Convolutional Networks

Config: configs/dcn/faster_rcnn_r50_fpn_dpool_1x_coco.py

@@ -97,26 +57,6 @@ Models:

box AP: 38.9

Weights: https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_dpool_1x_coco/faster_rcnn_r50_fpn_dpool_1x_coco_20200307-90d3c01d.pth

- - Name: faster_rcnn_r50_fpn_mdpool_1x_coco

- In Collection: Deformable Convolutional Networks

- Config: configs/dcn/faster_rcnn_r50_fpn_mdpool_1x_coco.py

- Metadata:

- Training Memory (GB): 5.8

- inference time (ms/im):

- - value: 60.24

- hardware: V100

- backend: PyTorch

- batch size: 1

- mode: FP32

- resolution: (800, 1333)

- Epochs: 12

- Results:

- - Task: Object Detection

- Dataset: COCO

- Metrics:

- box AP: 38.7

- Weights: https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_mdpool_1x_coco/faster_rcnn_r50_fpn_mdpool_1x_coco_20200307-c0df27ff.pth

-

- Name: faster_rcnn_r101_fpn_dconv_c3-c5_1x_coco

In Collection: Deformable Convolutional Networks

Config: configs/dcn/faster_rcnn_r101_fpn_dconv_c3-c5_1x_coco.py

@@ -181,30 +121,6 @@ Models:

mask AP: 37.4

Weights: https://download.openmmlab.com/mmdetection/v2.0/dcn/mask_rcnn_r50_fpn_dconv_c3-c5_1x_coco/mask_rcnn_r50_fpn_dconv_c3-c5_1x_coco_20200203-4d9ad43b.pth

- - Name: mask_rcnn_r50_fpn_mdconv_c3-c5_1x_coco

- In Collection: Deformable Convolutional Networks

- Config: configs/dcn/mask_rcnn_r50_fpn_mdconv_c3-c5_1x_coco.py

- Metadata:

- Training Memory (GB): 4.5

- inference time (ms/im):

- - value: 66.23

- hardware: V100

- backend: PyTorch

- batch size: 1

- mode: FP32

- resolution: (800, 1333)

- Epochs: 12

- Results:

- - Task: Object Detection

- Dataset: COCO

- Metrics:

- box AP: 41.5

- - Task: Instance Segmentation

- Dataset: COCO

- Metrics:

- mask AP: 37.1

- Weights: https://download.openmmlab.com/mmdetection/v2.0/dcn/mask_rcnn_r50_fpn_mdconv_c3-c5_1x_coco/mask_rcnn_r50_fpn_mdconv_c3-c5_1x_coco_20200203-ad97591f.pth

-

- Name: mask_rcnn_r50_fpn_fp16_dconv_c3-c5_1x_coco

In Collection: Deformable Convolutional Networks

Config: configs/dcn/mask_rcnn_r50_fpn_fp16_dconv_c3-c5_1x_coco.py

@@ -226,27 +142,6 @@ Models:

mask AP: 37.5

Weights: https://download.openmmlab.com/mmdetection/v2.0/fp16/mask_rcnn_r50_fpn_fp16_dconv_c3-c5_1x_coco/mask_rcnn_r50_fpn_fp16_dconv_c3-c5_1x_coco_20210520_180247-c06429d2.pth

- - Name: mask_rcnn_r50_fpn_fp16_mdconv_c3-c5_1x_coco

- In Collection: Deformable Convolutional Networks

- Config: configs/dcn/mask_rcnn_r50_fpn_fp16_mdconv_c3-c5_1x_coco.py

- Metadata:

- Training Memory (GB): 3.1

- Training Techniques:

- - SGD with Momentum

- - Weight Decay

- - Mixed Precision Training

- Epochs: 12

- Results:

- - Task: Object Detection

- Dataset: COCO

- Metrics:

- box AP: 42.0

- - Task: Instance Segmentation

- Dataset: COCO

- Metrics:

- mask AP: 37.6

- Weights: https://download.openmmlab.com/mmdetection/v2.0/fp16/mask_rcnn_r50_fpn_fp16_mdconv_c3-c5_1x_coco/mask_rcnn_r50_fpn_fp16_mdconv_c3-c5_1x_coco_20210520_180434-cf8fefa5.pth

-

- Name: mask_rcnn_r101_fpn_dconv_c3-c5_1x_coco

In Collection: Deformable Convolutional Networks

Config: configs/dcn/mask_rcnn_r101_fpn_dconv_c3-c5_1x_coco.py

diff --git a/configs/dcnv2/README.md b/configs/dcnv2/README.md

new file mode 100644

index 00000000000..1e7e3201b56

--- /dev/null

+++ b/configs/dcnv2/README.md

@@ -0,0 +1,37 @@

+# DCNv2

+

+> [Deformable ConvNets v2: More Deformable, Better Results](https://arxiv.org/abs/1811.11168)

+

+

+

+## Abstract

+

+The superior performance of Deformable Convolutional Networks arises from its ability to adapt to the geometric variations of objects. Through an examination of its adaptive behavior, we observe that while the spatial support for its neural features conforms more closely than regular ConvNets to object structure, this support may nevertheless extend well beyond the region of interest, causing features to be influenced by irrelevant image content. To address this problem, we present a reformulation of Deformable ConvNets that improves its ability to focus on pertinent image regions, through increased modeling power and stronger training. The modeling power is enhanced through a more comprehensive integration of deformable convolution within the network, and by introducing a modulation mechanism that expands the scope of deformation modeling. To effectively harness this enriched modeling capability, we guide network training via a proposed feature mimicking scheme that helps the network to learn features that reflect the object focus and classification power of RCNN features. With the proposed contributions, this new version of Deformable ConvNets yields significant performance gains over the original model and produces leading results on the COCO benchmark for object detection and instance segmentation.

+

+## Results and Models

+

+| Backbone | Model | Style | Conv | Pool | Lr schd | Mem (GB) | Inf time (fps) | box AP | mask AP | Config | Download |

+|:----------------:|:------------:|:-------:|:-------------:|:------:|:-------:|:--------:|:--------------:|:------:|:-------:|:------:|:--------:|

+| R-50-FPN | Faster | pytorch | mdconv(c3-c5) | - | 1x | 4.1 | 17.6 | 41.4 | | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcnv2/faster_rcnn_r50_fpn_mdconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_mdconv_c3-c5_1x_coco/faster_rcnn_r50_fpn_mdconv_c3-c5_1x_coco_20200130-d099253b.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_mdconv_c3-c5_1x_coco/faster_rcnn_r50_fpn_mdconv_c3-c5_1x_coco_20200130_222144.log.json) |

+| *R-50-FPN (dg=4) | Faster | pytorch | mdconv(c3-c5) | - | 1x | 4.2 | 17.4 | 41.5 | | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcnv2/faster_rcnn_r50_fpn_mdconv_c3-c5_group4_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_mdconv_c3-c5_group4_1x_coco/faster_rcnn_r50_fpn_mdconv_c3-c5_group4_1x_coco_20200130-01262257.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_mdconv_c3-c5_group4_1x_coco/faster_rcnn_r50_fpn_mdconv_c3-c5_group4_1x_coco_20200130_222058.log.json) |

+| R-50-FPN | Faster | pytorch | - | mdpool | 1x | 5.8 | 16.6 | 38.7 | | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcnv2/faster_rcnn_r50_fpn_mdpool_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_mdpool_1x_coco/faster_rcnn_r50_fpn_mdpool_1x_coco_20200307-c0df27ff.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_mdpool_1x_coco/faster_rcnn_r50_fpn_mdpool_1x_coco_20200307_203304.log.json) |

+| R-50-FPN | Mask | pytorch | mdconv(c3-c5) | - | 1x | 4.5 | 15.1 | 41.5 | 37.1 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/dcnv2/mask_rcnn_r50_fpn_mdconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/dcn/mask_rcnn_r50_fpn_mdconv_c3-c5_1x_coco/mask_rcnn_r50_fpn_mdconv_c3-c5_1x_coco_20200203-ad97591f.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/dcn/mask_rcnn_r50_fpn_mdconv_c3-c5_1x_coco/mask_rcnn_r50_fpn_mdconv_c3-c5_1x_coco_20200203_063443.log.json) |

+| R-50-FPN (FP16) | Mask | pytorch | mdconv(c3-c5)| - | 1x | 3.1 | | 42.0 | 37.6 |[config](https://github.com/open-mmlab/mmdetection/tree/master/configs/fp16/mask_rcnn_r50_fpn_fp16_mdconv_c3-c5_1x_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/fp16/mask_rcnn_r50_fpn_fp16_mdconv_c3-c5_1x_coco/mask_rcnn_r50_fpn_fp16_mdconv_c3-c5_1x_coco_20210520_180434-cf8fefa5.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/fp16/mask_rcnn_r50_fpn_fp16_mdconv_c3-c5_1x_coco/mask_rcnn_r50_fpn_fp16_mdconv_c3-c5_1x_coco_20210520_180434.log.json) |

+

+**Notes:**

+

+- `mdconv` denotes modulated deformable convolution, `c3-c5` means adding dconv in resnet stage 3 to 5. `mdpool` denotes modulated deformable roi pooling.

+- The dcn ops are modified from https://github.com/chengdazhi/Deformable-Convolution-V2-PyTorch, which should be more memory efficient and slightly faster.

+- (*) For R-50-FPN (dg=4), dg is short for deformable_group. This model is trained and tested on Amazon EC2 p3dn.24xlarge instance.

+- **Memory, Train/Inf time is outdated.**

+

+## Citation

+

+```latex

+@article{zhu2018deformable,

+ title={Deformable ConvNets v2: More Deformable, Better Results},

+ author={Zhu, Xizhou and Hu, Han and Lin, Stephen and Dai, Jifeng},

+ journal={arXiv preprint arXiv:1811.11168},

+ year={2018}

+}

+```

diff --git a/configs/dcn/faster_rcnn_r50_fpn_mdconv_c3-c5_1x_coco.py b/configs/dcnv2/faster_rcnn_r50_fpn_mdconv_c3-c5_1x_coco.py

similarity index 100%

rename from configs/dcn/faster_rcnn_r50_fpn_mdconv_c3-c5_1x_coco.py

rename to configs/dcnv2/faster_rcnn_r50_fpn_mdconv_c3-c5_1x_coco.py

diff --git a/configs/dcn/faster_rcnn_r50_fpn_mdconv_c3-c5_group4_1x_coco.py b/configs/dcnv2/faster_rcnn_r50_fpn_mdconv_c3-c5_group4_1x_coco.py

similarity index 100%

rename from configs/dcn/faster_rcnn_r50_fpn_mdconv_c3-c5_group4_1x_coco.py

rename to configs/dcnv2/faster_rcnn_r50_fpn_mdconv_c3-c5_group4_1x_coco.py

diff --git a/configs/dcn/faster_rcnn_r50_fpn_mdpool_1x_coco.py b/configs/dcnv2/faster_rcnn_r50_fpn_mdpool_1x_coco.py

similarity index 100%

rename from configs/dcn/faster_rcnn_r50_fpn_mdpool_1x_coco.py

rename to configs/dcnv2/faster_rcnn_r50_fpn_mdpool_1x_coco.py

diff --git a/configs/dcn/mask_rcnn_r50_fpn_fp16_mdconv_c3-c5_1x_coco.py b/configs/dcnv2/mask_rcnn_r50_fpn_fp16_mdconv_c3-c5_1x_coco.py

similarity index 100%

rename from configs/dcn/mask_rcnn_r50_fpn_fp16_mdconv_c3-c5_1x_coco.py

rename to configs/dcnv2/mask_rcnn_r50_fpn_fp16_mdconv_c3-c5_1x_coco.py

diff --git a/configs/dcn/mask_rcnn_r50_fpn_mdconv_c3-c5_1x_coco.py b/configs/dcnv2/mask_rcnn_r50_fpn_mdconv_c3-c5_1x_coco.py

similarity index 100%

rename from configs/dcn/mask_rcnn_r50_fpn_mdconv_c3-c5_1x_coco.py

rename to configs/dcnv2/mask_rcnn_r50_fpn_mdconv_c3-c5_1x_coco.py

diff --git a/configs/dcnv2/metafile.yml b/configs/dcnv2/metafile.yml

new file mode 100644

index 00000000000..90494215d64

--- /dev/null

+++ b/configs/dcnv2/metafile.yml

@@ -0,0 +1,123 @@

+Collections:

+ - Name: Deformable Convolutional Networks v2

+ Metadata:

+ Training Data: COCO

+ Training Techniques:

+ - SGD with Momentum

+ - Weight Decay

+ Training Resources: 8x V100 GPUs

+ Architecture:

+ - Deformable Convolution

+ Paper:

+ URL: https://arxiv.org/abs/1811.11168

+ Title: "Deformable ConvNets v2: More Deformable, Better Results"

+ README: configs/dcnv2/README.md

+ Code:

+ URL: https://github.com/open-mmlab/mmdetection/blob/v2.0.0/mmdet/ops/dcn/deform_conv.py#L15

+ Version: v2.0.0

+

+Models:

+ - Name: faster_rcnn_r50_fpn_mdconv_c3-c5_1x_coco

+ In Collection: Deformable Convolutional Networks v2

+ Config: configs/dcn/faster_rcnn_r50_fpn_mdconv_c3-c5_1x_coco.py

+ Metadata:

+ Training Memory (GB): 4.1

+ inference time (ms/im):

+ - value: 56.82

+ hardware: V100

+ backend: PyTorch

+ batch size: 1

+ mode: FP32

+ resolution: (800, 1333)

+ Epochs: 12

+ Results:

+ - Task: Object Detection

+ Dataset: COCO

+ Metrics:

+ box AP: 41.4

+ Weights: https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_mdconv_c3-c5_1x_coco/faster_rcnn_r50_fpn_mdconv_c3-c5_1x_coco_20200130-d099253b.pth

+

+ - Name: faster_rcnn_r50_fpn_mdconv_c3-c5_group4_1x_coco

+ In Collection: Deformable Convolutional Networks v2

+ Config: configs/dcn/faster_rcnn_r50_fpn_mdconv_c3-c5_group4_1x_coco.py

+ Metadata:

+ Training Memory (GB): 4.2

+ inference time (ms/im):

+ - value: 57.47

+ hardware: V100

+ backend: PyTorch

+ batch size: 1

+ mode: FP32

+ resolution: (800, 1333)

+ Epochs: 12

+ Results:

+ - Task: Object Detection

+ Dataset: COCO

+ Metrics:

+ box AP: 41.5

+ Weights: https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_mdconv_c3-c5_group4_1x_coco/faster_rcnn_r50_fpn_mdconv_c3-c5_group4_1x_coco_20200130-01262257.pth

+

+ - Name: faster_rcnn_r50_fpn_mdpool_1x_coco

+ In Collection: Deformable Convolutional Networks v2

+ Config: configs/dcn/faster_rcnn_r50_fpn_mdpool_1x_coco.py

+ Metadata:

+ Training Memory (GB): 5.8

+ inference time (ms/im):

+ - value: 60.24

+ hardware: V100

+ backend: PyTorch

+ batch size: 1

+ mode: FP32

+ resolution: (800, 1333)

+ Epochs: 12

+ Results:

+ - Task: Object Detection

+ Dataset: COCO

+ Metrics:

+ box AP: 38.7

+ Weights: https://download.openmmlab.com/mmdetection/v2.0/dcn/faster_rcnn_r50_fpn_mdpool_1x_coco/faster_rcnn_r50_fpn_mdpool_1x_coco_20200307-c0df27ff.pth

+

+ - Name: mask_rcnn_r50_fpn_mdconv_c3-c5_1x_coco

+ In Collection: Deformable Convolutional Networks v2

+ Config: configs/dcn/mask_rcnn_r50_fpn_mdconv_c3-c5_1x_coco.py

+ Metadata:

+ Training Memory (GB): 4.5

+ inference time (ms/im):

+ - value: 66.23

+ hardware: V100

+ backend: PyTorch

+ batch size: 1

+ mode: FP32

+ resolution: (800, 1333)

+ Epochs: 12

+ Results:

+ - Task: Object Detection

+ Dataset: COCO

+ Metrics:

+ box AP: 41.5

+ - Task: Instance Segmentation

+ Dataset: COCO

+ Metrics:

+ mask AP: 37.1

+ Weights: https://download.openmmlab.com/mmdetection/v2.0/dcn/mask_rcnn_r50_fpn_mdconv_c3-c5_1x_coco/mask_rcnn_r50_fpn_mdconv_c3-c5_1x_coco_20200203-ad97591f.pth

+

+ - Name: mask_rcnn_r50_fpn_fp16_mdconv_c3-c5_1x_coco

+ In Collection: Deformable Convolutional Networks v2

+ Config: configs/dcn/mask_rcnn_r50_fpn_fp16_mdconv_c3-c5_1x_coco.py

+ Metadata:

+ Training Memory (GB): 3.1

+ Training Techniques:

+ - SGD with Momentum

+ - Weight Decay

+ - Mixed Precision Training

+ Epochs: 12

+ Results:

+ - Task: Object Detection

+ Dataset: COCO

+ Metrics:

+ box AP: 42.0

+ - Task: Instance Segmentation

+ Dataset: COCO

+ Metrics:

+ mask AP: 37.6

+ Weights: https://download.openmmlab.com/mmdetection/v2.0/fp16/mask_rcnn_r50_fpn_fp16_mdconv_c3-c5_1x_coco/mask_rcnn_r50_fpn_fp16_mdconv_c3-c5_1x_coco_20210520_180434-cf8fefa5.pth

diff --git a/configs/deepfashion/README.md b/configs/deepfashion/README.md

index e2c042f56a9..dd4f012bfa3 100644

--- a/configs/deepfashion/README.md

+++ b/configs/deepfashion/README.md

@@ -1,23 +1,19 @@

-# DeepFashion: Powering Robust Clothes Recognition and Retrieval With Rich Annotations

+# DeepFashion

-## Abstract

+> [DeepFashion: Powering Robust Clothes Recognition and Retrieval With Rich Annotations](https://openaccess.thecvf.com/content_cvpr_2016/html/Liu_DeepFashion_Powering_Robust_CVPR_2016_paper.html)

-

+

+

+## Abstract

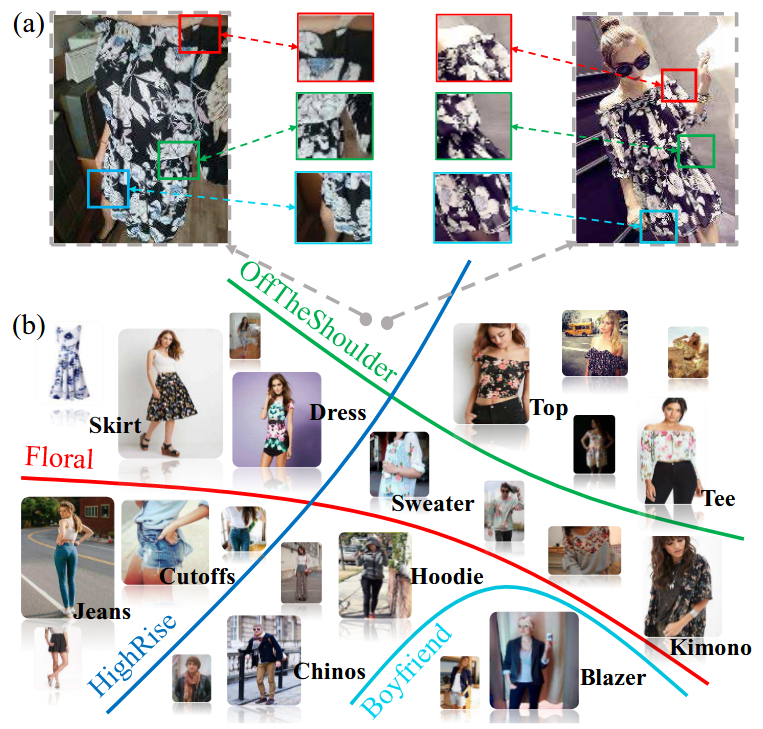

Recent advances in clothes recognition have been driven by the construction of clothes datasets. Existing datasets are limited in the amount of annotations and are difficult to cope with the various challenges in real-world applications. In this work, we introduce DeepFashion, a large-scale clothes dataset with comprehensive annotations. It contains over 800,000 images, which are richly annotated with massive attributes, clothing landmarks, and correspondence of images taken under different scenarios including store, street snapshot, and consumer. Such rich annotations enable the development of powerful algorithms in clothes recognition and facilitating future researches. To demonstrate the advantages of DeepFashion, we propose a new deep model, namely FashionNet, which learns clothing features by jointly predicting clothing attributes and landmarks. The estimated landmarks are then employed to pool or gate the learned features. It is optimized in an iterative manner. Extensive experiments demonstrate the effectiveness of FashionNet and the usefulness of DeepFashion.

-

-

-

-

## Introduction

-

-

[MMFashion](https://github.com/open-mmlab/mmfashion) develops "fashion parsing and segmentation" module

based on the dataset

[DeepFashion-Inshop](https://drive.google.com/drive/folders/0B7EVK8r0v71pVDZFQXRsMDZCX1E?usp=sharing).

@@ -55,9 +51,15 @@ mmdetection

After that you can train the Mask RCNN r50 on DeepFashion-In-shop dataset by launching training with the `mask_rcnn_r50_fpn_1x.py` config

or creating your own config file.

+## Results and Models

+

+| Backbone | Model type | Dataset | bbox detection Average Precision | segmentation Average Precision | Config | Download (Google) |

+| :---------: | :----------: | :-----------------: | :--------------------------------: | :----------------------------: | :---------:| :-------------------------: |

+| ResNet50 | Mask RCNN | DeepFashion-In-shop | 0.599 | 0.584 |[config](https://github.com/open-mmlab/mmdetection/blob/master/configs/deepfashion/mask_rcnn_r50_fpn_15e_deepfashion.py)| [model](https://download.openmmlab.com/mmdetection/v2.0/deepfashion/mask_rcnn_r50_fpn_15e_deepfashion/mask_rcnn_r50_fpn_15e_deepfashion_20200329_192752.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/deepfashion/mask_rcnn_r50_fpn_15e_deepfashion/20200329_192752.log.json) |

+

## Citation

-```

+```latex

@inproceedings{liuLQWTcvpr16DeepFashion,

author = {Liu, Ziwei and Luo, Ping and Qiu, Shi and Wang, Xiaogang and Tang, Xiaoou},

title = {DeepFashion: Powering Robust Clothes Recognition and Retrieval with Rich Annotations},

@@ -66,9 +68,3 @@ or creating your own config file.

year = {2016}

}

```

-

-## Model Zoo

-

-| Backbone | Model type | Dataset | bbox detection Average Precision | segmentation Average Precision | Config | Download (Google) |

-| :---------: | :----------: | :-----------------: | :--------------------------------: | :----------------------------: | :---------:| :-------------------------: |

-| ResNet50 | Mask RCNN | DeepFashion-In-shop | 0.599 | 0.584 |[config](https://github.com/open-mmlab/mmdetection/blob/master/configs/deepfashion/mask_rcnn_r50_fpn_15e_deepfashion.py)| [model](https://download.openmmlab.com/mmdetection/v2.0/deepfashion/mask_rcnn_r50_fpn_15e_deepfashion/mask_rcnn_r50_fpn_15e_deepfashion_20200329_192752.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/deepfashion/mask_rcnn_r50_fpn_15e_deepfashion/20200329_192752.log.json) |

diff --git a/configs/deformable_detr/README.md b/configs/deformable_detr/README.md

index e3b8e41d27c..f415be350b9 100644

--- a/configs/deformable_detr/README.md

+++ b/configs/deformable_detr/README.md

@@ -1,26 +1,35 @@

-# Deformable DETR: Deformable Transformers for End-to-End Object Detection

+# Deformable DETR

-## Abstract

+> [Deformable DETR: Deformable Transformers for End-to-End Object Detection](https://arxiv.org/abs/2010.04159)

+

+

-

+## Abstract

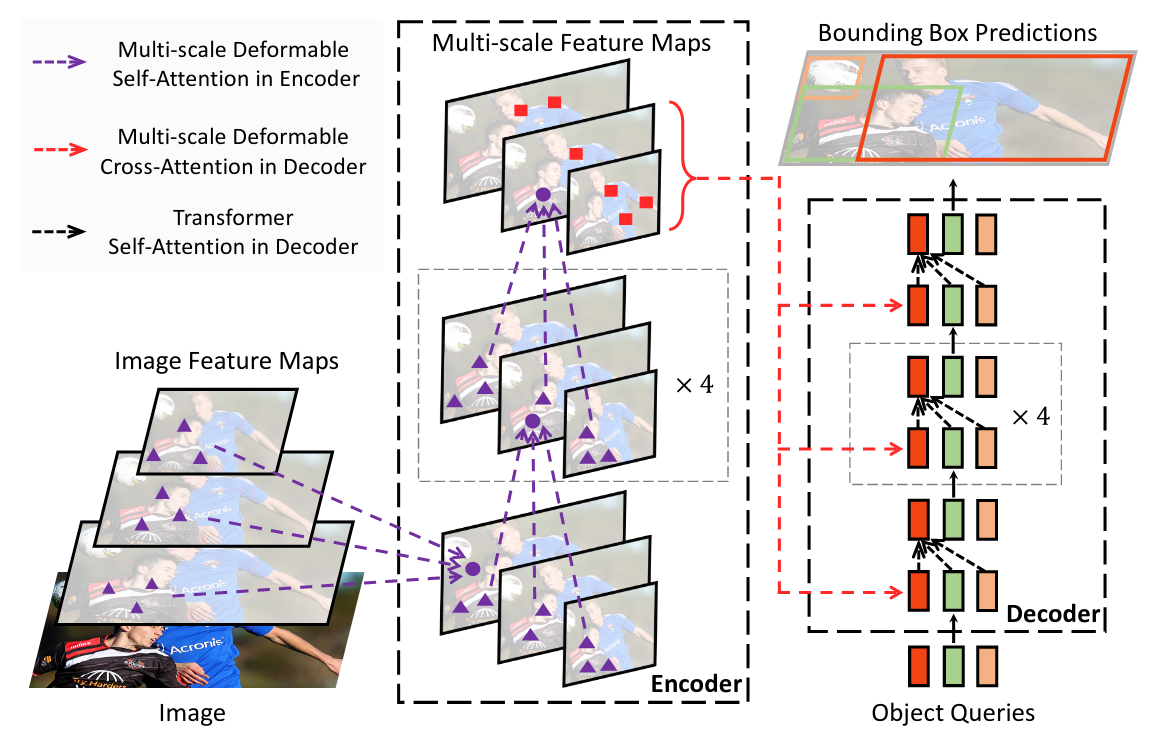

DETR has been recently proposed to eliminate the need for many hand-designed components in object detection while demonstrating good performance. However, it suffers from slow convergence and limited feature spatial resolution, due to the limitation of Transformer attention modules in processing image feature maps. To mitigate these issues, we proposed Deformable DETR, whose attention modules only attend to a small set of key sampling points around a reference. Deformable DETR can achieve better performance than DETR (especially on small objects) with 10 times less training epochs. Extensive experiments on the COCO benchmark demonstrate the effectiveness of our approach.

-

-

-

+## Results and Models

-## Citation

+| Backbone | Model | Lr schd | box AP | Config | Download |

+|:------:|:--------:|:--------------:|:------:|:------:|:--------:|

+| R-50 | Deformable DETR |50e | 44.5 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/deformable_detr/deformable_detr_r50_16x2_50e_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/deformable_detr/deformable_detr_r50_16x2_50e_coco/deformable_detr_r50_16x2_50e_coco_20210419_220030-a12b9512.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/deformable_detr/deformable_detr_r50_16x2_50e_coco/deformable_detr_r50_16x2_50e_coco_20210419_220030-a12b9512.log.json) |

+| R-50 | + iterative bounding box refinement |50e | 46.1 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/deformable_detr/deformable_detr_refine_r50_16x2_50e_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/deformable_detr/deformable_detr_refine_r50_16x2_50e_coco/deformable_detr_refine_r50_16x2_50e_coco_20210419_220503-5f5dff21.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/deformable_detr/deformable_detr_refine_r50_16x2_50e_coco/deformable_detr_refine_r50_16x2_50e_coco_20210419_220503-5f5dff21.log.json) |

+| R-50 | ++ two-stage Deformable DETR |50e | 46.8 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/deformable_detr/deformable_detr_twostage_refine_r50_16x2_50e_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/deformable_detr/deformable_detr_twostage_refine_r50_16x2_50e_coco/deformable_detr_twostage_refine_r50_16x2_50e_coco_20210419_220613-9d28ab72.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/deformable_detr/deformable_detr_twostage_refine_r50_16x2_50e_coco/deformable_detr_twostage_refine_r50_16x2_50e_coco_20210419_220613-9d28ab72.log.json) |

-

+# NOTE

+

+1. All models are trained with batch size 32.

+2. The performance is unstable. `Deformable DETR` and `iterative bounding box refinement` may fluctuate about 0.3 mAP. `two-stage Deformable DETR` may fluctuate about 0.2 mAP.

+

+## Citation

We provide the config files for Deformable DETR: [Deformable DETR: Deformable Transformers for End-to-End Object Detection](https://arxiv.org/abs/2010.04159).

-```

+```latex

@inproceedings{

zhu2021deformable,

title={Deformable DETR: Deformable Transformers for End-to-End Object Detection},

@@ -30,16 +39,3 @@ year={2021},

url={https://openreview.net/forum?id=gZ9hCDWe6ke}

}

```

-

-## Results and Models

-

-| Backbone | Model | Lr schd | box AP | Config | Download |

-|:------:|:--------:|:--------------:|:------:|:------:|:--------:|

-| R-50 | Deformable DETR |50e | 44.5 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/deformable_detr/deformable_detr_r50_16x2_50e_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/deformable_detr/deformable_detr_r50_16x2_50e_coco/deformable_detr_r50_16x2_50e_coco_20210419_220030-a12b9512.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/deformable_detr/deformable_detr_r50_16x2_50e_coco/deformable_detr_r50_16x2_50e_coco_20210419_220030-a12b9512.log.json) |

-| R-50 | + iterative bounding box refinement |50e | 46.1 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/deformable_detr/deformable_detr_refine_r50_16x2_50e_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/deformable_detr/deformable_detr_refine_r50_16x2_50e_coco/deformable_detr_refine_r50_16x2_50e_coco_20210419_220503-5f5dff21.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/deformable_detr/deformable_detr_refine_r50_16x2_50e_coco/deformable_detr_refine_r50_16x2_50e_coco_20210419_220503-5f5dff21.log.json) |

-| R-50 | ++ two-stage Deformable DETR |50e | 46.8 | [config](https://github.com/open-mmlab/mmdetection/tree/master/configs/deformable_detr/deformable_detr_twostage_refine_r50_16x2_50e_coco.py) | [model](https://download.openmmlab.com/mmdetection/v2.0/deformable_detr/deformable_detr_twostage_refine_r50_16x2_50e_coco/deformable_detr_twostage_refine_r50_16x2_50e_coco_20210419_220613-9d28ab72.pth) | [log](https://download.openmmlab.com/mmdetection/v2.0/deformable_detr/deformable_detr_twostage_refine_r50_16x2_50e_coco/deformable_detr_twostage_refine_r50_16x2_50e_coco_20210419_220613-9d28ab72.log.json) |

-

-# NOTE

-

-1. All models are trained with batch size 32.

-2. The performance is unstable. `Deformable DETR` and `iterative bounding box refinement` may fluctuate about 0.3 mAP. `two-stage Deformable DETR` may fluctuate about 0.2 mAP.

diff --git a/configs/detectors/README.md b/configs/detectors/README.md

index c90302b2f6c..3504ee2731a 100644

--- a/configs/detectors/README.md

+++ b/configs/detectors/README.md

@@ -1,35 +1,18 @@

-# DetectoRS: Detecting Objects with Recursive Feature Pyramid and Switchable Atrous Convolution

+# DetectoRS

-## Abstract

+> [DetectoRS: Detecting Objects with Recursive Feature Pyramid and Switchable Atrous Convolution](https://arxiv.org/abs/2006.02334)

+

+

-

+## Abstract