ASN was nominated as a finalist for the Best Paper Award in Cognitive Robotics at the IEEE International Conference on Robotics and Automation (ICRA) 2020.

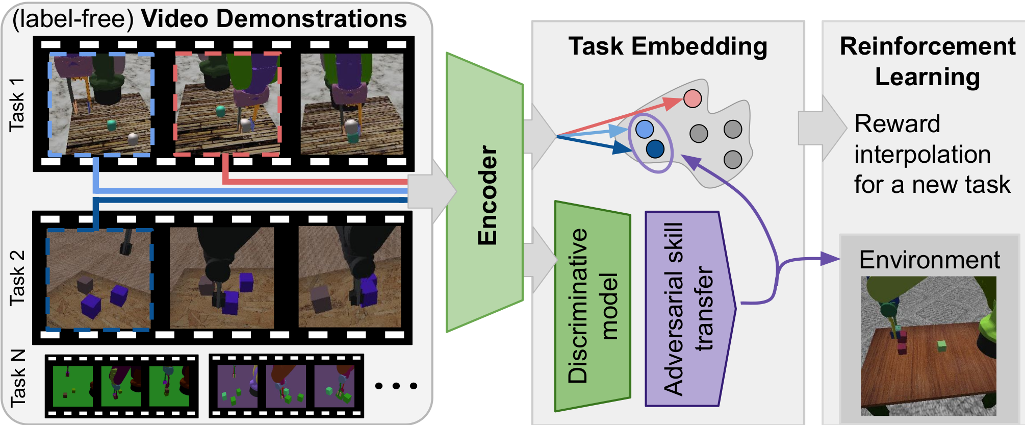

This repository is a PyTorch implementation of Adversarial Skill Networks (ASN), an approach for unsupervised skill learning from video. Concretely, our approach learns a task-agnostic skill embedding space from unlabeled multi-view videos. We combine a metric learning loss, which uses temporal video coherence to learn a state representation, with an entropy-regularized adversarial skill-transfer loss. The learned embedding enables training of continuous control policies to solve novel tasks that require the interpolation of previously seen skills. More information is available on our project page.
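To make the two ingredients above more concrete, here is a minimal, dependency-free Python sketch of (a) a generic triplet-style time-contrastive loss, where frames showing the same moment should embed closer together than temporally distant frames, and (b) the Shannon entropy of a categorical distribution, which is the quantity an entropy regularizer acts on. This is an illustration under stated assumptions, not the loss implemented in this repository: the triplet formulation, the `margin` default, and all function names are hypothetical, and how ASN weights and applies the entropy term is not shown here.

```python
import math
from typing import Sequence

Vector = Sequence[float]


def squared_distance(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two embedding vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def time_contrastive_triplet_loss(anchor: Vector, positive: Vector,
                                  negative: Vector, margin: float = 1.0) -> float:
    """Triplet hinge loss that pulls temporally coherent frames together.

    anchor, positive: embeddings of the same moment (e.g. seen from two views).
    negative: embedding of a temporally distant frame of the same video.
    margin: hypothetical default, not the value used by ASN.
    """
    d_pos = squared_distance(anchor, positive)
    d_neg = squared_distance(anchor, negative)
    return max(0.0, d_pos - d_neg + margin)


def entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (in nats) of a categorical distribution, e.g. over skills."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


# A well-separated triplet incurs no loss; a uniform distribution over
# four skills has the maximal entropy log(4).
print(time_contrastive_triplet_loss([0.0, 0.0], [0.1, 0.0], [2.0, 0.0]))
print(entropy([0.25, 0.25, 0.25, 0.25]))
```

Maximizing an entropy term like this pushes a predicted distribution toward uniform, while the hinge only penalizes triplets whose positive is not at least `margin` closer than the negative.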

If you find the code helpful, please consider citing the corresponding paper:

```bibtex
@INPROCEEDINGS{mees20icra_asn,
  author = {Oier Mees and Markus Merklinger and Gabriel Kalweit and Wolfram Burgard},
  title = {Adversarial Skill Networks: Unsupervised Robot Skill Learning from Videos},
  booktitle = {Proceedings of the IEEE International Conference on Robotics and Automation (ICRA)},
  year = 2020,
  address = {Paris, France}
}
```

We provide a simple package to install ASN, preferably in a virtual environment. We have tested the code with Ubuntu 18.04 and PyTorch 1.4.0.
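Because the code was tested only with the setup above, it can help to check which PyTorch version is installed in the active environment. The following Python sketch is a hypothetical helper (not part of the ASN package); it uses the standard library's `importlib.metadata`, which requires Python 3.8 or newer.

```python
from importlib import metadata

TESTED_TORCH_VERSION = "1.4.0"  # tested version stated in this README


def check_environment() -> list:
    """Return warnings if the installed PyTorch differs from the tested version."""
    try:
        torch_version = metadata.version("torch")
    except metadata.PackageNotFoundError:
        return ["PyTorch is not installed in this environment"]
    # startswith() also accepts local build tags such as "1.4.0+cu92"
    if not torch_version.startswith(TESTED_TORCH_VERSION):
        return [f"found PyTorch {torch_version}, tested with {TESTED_TORCH_VERSION}"]
    return []


if __name__ == "__main__":
    for warning in check_environment():
        print("warning:", warning)
```

A different version may well work; the helper only reports a mismatch and does not change anything.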

If they are not already installed, install virtualenv and virtualenvwrapper with the `--user` option:

```bash
pip install --user virtualenv virtualenvwrapper
```

Add the following virtualenvwrapper settings to your .bashrc:

```bash
export WORKON_HOME=$HOME/.virtualenvs
export PROJECT_HOME=$HOME/.venvproject
export VIRTUALENVWRAPPER_VIRTUALENV_ARGS='--no-site-packages'
source $HOME/.local/bin/virtualenvwrapper.sh
```

Create a virtual environment with Python 3:

```bash
mkvirtualenv -p /usr/bin/python3 asn
```

Now clone our repo:

```bash
git clone git@github.com:mees/Adversarial-Skill-Networks.git
```

After changing into the repository directory, install it with:

```bash
pip install -e .
```

First, download and extract the dataset containing the block tasks into your `/tmp/` directory. To start training, you need to specify the location of the dataset and which tasks you want to hold out. For example, to train the skill embedding on the 2-block stacking, color pushing, and separate-to-stack tasks, and to evaluate it via video alignment on the unseen color stacking task (`cstack`):

```bash
python train_asn.py --train-dir /tmp/real_combi_task3/videos/train/ --val-dir-metric /tmp/real_combi_task3/videos/val/ --train-filter-tasks cstack
```
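Conceptually, holding out a task means that its videos are excluded from training and kept for evaluation. The Python sketch below shows this split under a hypothetical file-naming scheme, `<task>_<episode>_view<k>.mov`. That scheme, the tags other than `cstack`, and the helper names are assumptions for illustration; they are not taken from the dataset or from `train_asn.py`.

```python
import os
from typing import Iterable, List, Tuple


def task_of(path: str) -> str:
    """Task tag of a video under a HYPOTHETICAL naming scheme
    '<task>_<episode>_view<k>.mov' (not the real dataset layout)."""
    return os.path.basename(path).split("_", 1)[0]


def split_by_task(paths: Iterable[str],
                  held_out_tasks: Iterable[str]) -> Tuple[List[str], List[str]]:
    """Split video paths into (training, held-out) lists by task tag."""
    held_out = set(held_out_tasks)
    train, held = [], []
    for path in paths:
        (held if task_of(path) in held_out else train).append(path)
    return train, held


videos = ["train/cstack_0_view0.mov", "train/cpush_0_view0.mov",
          "train/sep2stack_0_view0.mov"]
train, held = split_by_task(videos, ["cstack"])
print("train on:", train)
print("held out:", held)
```

Matching on the whole tag rather than a substring avoids accidentally holding out, for example, every task whose name merely contains "stack".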

Evaluate the trained ASN model on video alignment for a novel task and visualize t-SNE plots of the learned embedding:

```bash
python eval_asn.py --load-model pretrained_model/model_best.pth.tar --val-dir-metric /tmp/real_combi_task3/videos/val/ --task cstack
```
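One common way to score video alignment in embedding space uses nearest neighbors. For every frame of one video, find the frame of a second video with the closest embedding and measure how far apart the two frames are in normalized time. The Python sketch below implements that idea. It is an assumed definition for illustration only: `eval_asn.py` may normalize, pair views, or average differently, so its numbers are not guaranteed to match this function.

```python
from typing import Sequence

Embedding = Sequence[float]


def _sq_dist(a: Embedding, b: Embedding) -> float:
    """Squared Euclidean distance between two frame embeddings."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def alignment_loss(video_a: Sequence[Embedding],
                   video_b: Sequence[Embedding]) -> float:
    """Mean normalized temporal error of nearest-neighbor alignment.

    For frame i of video_a, pick the frame j of video_b with the closest
    embedding and compare normalized times i/(len_a-1) and j/(len_b-1).
    0.0 means perfect alignment; 1.0 is the worst possible value.
    """
    if len(video_a) < 2 or len(video_b) < 2:
        raise ValueError("each video needs at least two frames")
    total = 0.0
    for i, emb in enumerate(video_a):
        j = min(range(len(video_b)), key=lambda k: _sq_dist(emb, video_b[k]))
        total += abs(i / (len(video_a) - 1) - j / (len(video_b) - 1))
    return total / len(video_a)


frames = [[0.0], [1.0], [2.0]]
print(alignment_loss(frames, frames))        # identical videos align perfectly
print(alignment_loss(frames, frames[::-1]))  # a reversed video aligns poorly
```

Normalizing frame indices by video length lets videos of different durations be compared, which is why lower values indicate that the embedding orders task progress consistently.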

We provide the weights, a log file, and a t-SNE visualization of a pretrained model for the default setting here. This model achieves an alignment loss of 0.1638 on the unseen color stacking task, which is very close to the 0.165 reported in the paper.

Our Block Task dataset contains multi-view RGB data of several block manipulation tasks and can be found here.

We provide a Python script to record and synchronize several webcams. First, check that your webcams are recognized:

```bash
ls -ltrh /dev/video*
```

Now start the recording for `n` webcams (here two, on ports 0 and 1):

```bash
python utils/webcam_dataset_creater.py --ports 0,1 --tag test --display
```
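The `--ports` value above is a comma-separated list of device indices, such as `0,1`. The hypothetical Python helper below builds such a string from the `/dev/videoN` device nodes found on the machine. On recent Linux kernels, one webcam may expose more than one `/dev/video` node (for example, a metadata node), so the printed list is only a starting point to check by hand.

```python
import glob
import re
from typing import Iterable, List


def video_device_indices(paths: Iterable[str]) -> List[int]:
    """Extract N from device paths like '/dev/videoN', sorted ascending."""
    indices = []
    for path in paths:
        match = re.fullmatch(r"/dev/video(\d+)", path)
        if match:
            indices.append(int(match.group(1)))
    return sorted(indices)


def ports_argument(indices: Iterable[int]) -> str:
    """Join device indices in the comma-separated form used for --ports."""
    return ",".join(str(i) for i in indices)


if __name__ == "__main__":
    print(ports_argument(video_device_indices(glob.glob("/dev/video*"))))
```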

Press Ctrl-C when you are done collecting; the script will then compile a video for each view.
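This README does not describe how the recording script synchronizes the views. A common approach, assumed here purely for illustration, is to pair each frame of a reference camera with the closest-in-time frame of every other camera and to drop pairs that are too far apart. In the Python sketch below, the `max_offset` tolerance of 0.05 s and the function name are hypothetical.

```python
import bisect
from typing import List, Sequence, Tuple


def synchronize(reference: Sequence[float], other: Sequence[float],
                max_offset: float = 0.05) -> List[Tuple[int, int]]:
    """Pair each reference frame with the nearest-in-time frame of another view.

    Both timestamp lists (in seconds) must be sorted ascending. Pairs whose
    time difference exceeds max_offset are dropped.
    """
    pairs = []
    for i, t in enumerate(reference):
        k = bisect.bisect_left(other, t)
        # Only the frames just before and just after t can be the closest.
        candidates = [c for c in (k - 1, k) if 0 <= c < len(other)]
        if not candidates:
            continue
        j = min(candidates, key=lambda c: abs(other[c] - t))
        if abs(other[j] - t) <= max_offset:
            pairs.append((i, j))
    return pairs


print(synchronize([0.0, 0.033, 0.066], [0.01, 0.04, 0.2]))
```

Binary search keeps matching fast for long recordings. Note that one frame of the other view can be matched to several reference frames when that camera drops frames.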

For academic use, the code is released under the GPLv3 license. For any commercial purpose, please contact the authors. For PyTorch, see its respective license.