diff --git a/docs/MatrixOne/Develop/Ecological-Tools/Computing-Engine/DataX-write.md b/docs/MatrixOne/Develop/Ecological-Tools/Computing-Engine/DataX-write.md

deleted file mode 100644

index fa3bc57eae..0000000000

--- a/docs/MatrixOne/Develop/Ecological-Tools/Computing-Engine/DataX-write.md

+++ /dev/null

@@ -1,633 +0,0 @@

-# 使用 DataX 将数据写入 MatrixOne

-

-## 概述

-

-本文介绍如何使用 DataX 工具将数据离线写入 MatrixOne 数据库。

-

-DataX 是一款由阿里开源的异构数据源离线同步工具,提供了稳定和高效的数据同步功能,旨在实现各种异构数据源之间的高效数据同步。

-

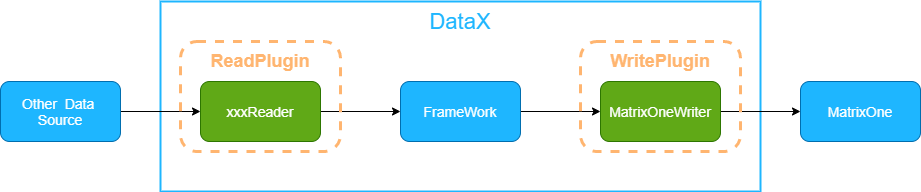

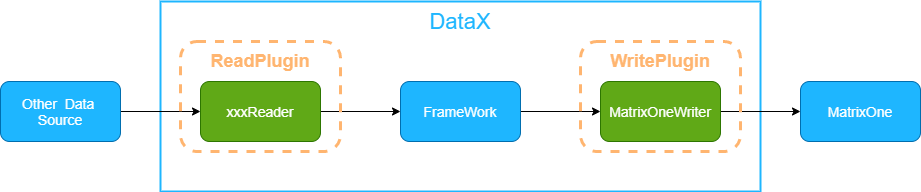

-DataX 将不同数据源的同步分为两个主要组件:**Reader(读取数据源)

-**和 **Writer(写入目标数据源)**。DataX 框架理论上支持任何数据源类型的数据同步工作。

-

-MatrixOne 与 MySQL 8.0 高度兼容,但由于 DataX 自带的 MySQL Writer 插件适配的是 MySQL 5.1 的 JDBC 驱动,为了提升兼容性,社区单独改造了基于 MySQL 8.0 驱动的 MatrixOneWriter 插件。MatrixOneWriter 插件实现了将数据写入 MatrixOne 数据库目标表的功能。在底层实现中,MatrixOneWriter 通过 JDBC 连接到远程 MatrixOne 数据库,并执行相应的 `insert into ...` SQL 语句将数据写入 MatrixOne,同时支持批量提交。

-

-MatrixOneWriter 利用 DataX 框架从 Reader 获取生成的协议数据,并根据您配置的 `writeMode` 生成相应的 `insert into...` 语句。在遇到主键或唯一性索引冲突时,会排除冲突的行并继续写入。出于性能优化的考虑,我们采用了 `PreparedStatement + Batch` 的方式,并设置了 `rewriteBatchedStatements=true` 选项,以将数据缓冲到线程上下文的缓冲区中。只有当缓冲区的数据量达到预定的阈值时,才会触发写入请求。

-

-

-

-!!! note

- 执行整个任务至少需要拥有 `insert into ...` 的权限,是否需要其他权限取决于你在任务配置中的 `preSql` 和 `postSql`。

-

-MatrixOneWriter 主要面向 ETL 开发工程师,他们使用 MatrixOneWriter 将数据从数据仓库导入到 MatrixOne。同时,MatrixOneWriter 也可以作为数据迁移工具为 DBA 等用户提供服务。

-

-## 开始前准备

-

-在开始使用 DataX 将数据写入 MatrixOne 之前,需要完成安装以下软件:

-

-- 安装 [JDK 8+ version](https://www.oracle.com/sg/java/technologies/javase/javase8-archive-downloads.html)。

-- 安装 [Python 3.8(or plus)](https://www.python.org/downloads/)。

-- 下载 [DataX](https://datax-opensource.oss-cn-hangzhou.aliyuncs.com/202210/datax.tar.gz) 安装包,并解压。

-- 下载 [matrixonewriter.zip](https://community-shared-data-1308875761.cos.ap-beijing.myqcloud.com/artwork/docs/develop/Computing-Engine/datax-write/matrixonewriter.zip),解压至 DataX 项目根目录的 `plugin/writer/` 目录下。

-- 安装 <a href="https://dev.mysql.com/downloads/mysql" target="_blank">MySQL Client</a>。

-- [安装和启动 MatrixOne](../../../Get-Started/install-standalone-matrixone.md)。

-

-## 操作步骤

-

-### 创建 MatrixOne 测试表

-

-使用 Mysql Client 连接 MatrixOne,在 MatrixOne 中创建一个测试表:

-

-```sql

-CREATE DATABASE mo_demo;

-USE mo_demo;

-CREATE TABLE m_user(

- M_ID INT NOT NULL,

- M_NAME CHAR(25) NOT NULL

-);

-```

-

-### 配置数据源

-

-本例中,我们使用**内存**中生成的数据作为数据源:

-

-```json

-"reader": {

- "name": "streamreader",

- "parameter": {

- "column" : [ #可以写多个列

- {

- "value": 20210106, #表示该列的值

- "type": "long" #表示该列的类型

- },

- {

- "value": "matrixone",

- "type": "string"

- }

- ],

- "sliceRecordCount": 1000 #表示要打印多少次

- }

-}

-```

-

-### 编写作业配置文件

-

-使用以下命令查看配置模板:

-

-```

-python datax.py -r {YOUR_READER} -w matrixonewriter

-```

-

-编写作业的配置文件 `stream2matrixone.json`:

-

-```json

-{

- "job": {

- "setting": {

- "speed": {

- "channel": 1

- }

- },

- "content": [

- {

- "reader": {

- "name": "streamreader",

- "parameter": {

- "column" : [

- {

- "value": 20210106,

- "type": "long"

- },

- {

- "value": "matrixone",

- "type": "string"

- }

- ],

- "sliceRecordCount": 1000

- }

- },

- "writer": {

- "name": "matrixonewriter",

- "parameter": {

- "writeMode": "insert",

- "username": "root",

- "password": "111",

- "column": [

- "M_ID",

- "M_NAME"

- ],

- "preSql": [

- "delete from m_user"

- ],

- "connection": [

- {

- "jdbcUrl": "jdbc:mysql://127.0.0.1:6001/mo_demo",

- "table": [

- "m_user"

- ]

- }

- ]

- }

- }

- }

- ]

- }

-}

-```

-

-### 启动 DataX

-

-执行以下命令启动 DataX:

-

-```shell

-$ cd {YOUR_DATAX_DIR_BIN}

-$ python datax.py stream2matrixone.json

-```

-

-### 查看运行结果

-

-使用 Mysql Client 连接 MatrixOne,使用 `select` 查询插入的结果。内存中的 1000 条数据已成功写入 MatrixOne。

-

-```sql

-mysql> select * from m_user limit 5;

-+----------+-----------+

-| m_id | m_name |

-+----------+-----------+

-| 20210106 | matrixone |

-| 20210106 | matrixone |

-| 20210106 | matrixone |

-| 20210106 | matrixone |

-| 20210106 | matrixone |

-+----------+-----------+

-5 rows in set (0.01 sec)

-

-mysql> select count(*) from m_user limit 5;

-+----------+

-| count(*) |

-+----------+

-| 1000 |

-+----------+

-1 row in set (0.00 sec)

-```

-

-## 参数说明

-

-以下是 MatrixOneWriter 的一些常用参数说明:

-

-|参数名称 | 参数描述 | 是否必选 | 默认值|

-|---|---|---|---|

-|**jdbcUrl** |目标数据库的 JDBC 连接信息。DataX 在运行时会在提供的 `jdbcUrl` 后面追加一些属性,例如:`yearIsDateType=false&zeroDateTimeBehavior=CONVERT_TO_NULL&rewriteBatchedStatements=true&tinyInt1isBit=false&serverTimezone=Asia/Shanghai`。 |是 |无 |

-|**username** | 目标数据库的用户名。|是 |无 |

-|**password** |目标数据库的密码。 |是 |无 |

-|**table** |目标表的名称。支持写入一个或多个表,如果配置多张表,必须确保它们的结构保持一致。 |是 |无 |

-|**column** | 目标表中需要写入数据的字段,字段之间用英文逗号分隔。例如:`"column": ["id","name","age"]`。如果要写入所有列,可以使用 `*` 表示,例如:`"column": ["*"]`。|是 |无 |

-|**preSql** |写入数据到目标表之前,会执行这里配置的标准 SQL 语句。 |否 |无 |

-|**postSql** |写入数据到目标表之后,会执行这里配置的标准 SQL 语句。 |否 |无 |

-|**writeMode** |控制写入数据到目标表时使用的 SQL 语句,可以选择 `insert` 或 `update`。 | `insert` 或 `update`| `insert`|

-|**batchSize** |一次性批量提交的记录数大小,可以显著减少 DataX 与 MatrixOne 的网络交互次数,提高整体吞吐量。但是设置过大可能导致 DataX 运行进程内存溢出 | 否 | 1024 |

-

-## 类型转换

-

-MatrixOneWriter 支持大多数 MatrixOne 数据类型,但也有少数类型尚未支持,需要特别注意你的数据类型。

-

-以下是 MatrixOneWriter 针对 MatrixOne 数据类型的转换列表:

-

-| DataX 内部类型 | MatrixOne 数据类型 |

-| --------------- | ------------------ |

-| Long | int, tinyint, smallint, bigint |

-| Double | float, double, decimal |

-| String | varchar, char, text |

-| Date | date, datetime, timestamp, time |

-| Boolean | bool |

-| Bytes | blob |

-

-## 参考其他说明

-

-- MatrixOne 兼容 MySQL 协议,MatrixOneWriter 实际上是对 MySQL Writer 进行了一些 JDBC 驱动版本上的调整后的改造版本,你仍然可以使用 MySQL Writer 来写入 MatrixOne。

-

-- 在 DataX 中添加 MatrixOne Writer,那么你需要下载 [matrixonewriter.zip](https://community-shared-data-1308875761.cos.ap-beijing.myqcloud.com/artwork/docs/develop/Computing-Engine/datax-write/matrixonewriter.zip),然后将其解压缩到 DataX 项目根目录的 `plugin/writer/` 目录下,即可开始使用。

-

-## 最佳实践:实现 MatrixOne 与 ElasticSearch 间的数据迁移

-

-MatrixOne 擅长 HTAP 场景的事务处理和低延迟分析计算,ElasticSearch 擅长全文检索,两者做为流行的搜索和分析引擎,结合起来可形成更完善的全场景分析解决方案。为了在不同场景间进行数据的高效流转,我们可通过 DataX 进行 MatrixOne 与 ElasticSearch 间的数据迁移。

-

-### 环境准备

-

-- MatrixOne 版本:1.1.3

-

-- Elasticsearch 版本:7.10.2

-

-- DataX 版本:[DataX_v202309](https://datax-opensource.oss-cn-hangzhou.aliyuncs.com/202309/datax.tar.gz)

-

-### 在 MatrixOne 中创建库和表

-

-创建数据库 `mo`,并在该库创建数据表 person:

-

-```sql

-create database mo;

-CREATE TABLE mo.`person` (

-`id` INT DEFAULT NULL,

-`name` VARCHAR(255) DEFAULT NULL,

-`birthday` DATE DEFAULT NULL

-);

-```

-

-### 在 ElasticSearch 中创建索引

-

-创建名称为 person 的索引(下文 `-u` 参数后为 ElasticSearch 中的用户名和密码,本地测试时可按需进行修改或删除):

-

-```shell

-curl -X PUT "http://127.0.0.1:9200/person" -u elastic:elastic

-```

-

-输出如下信息表示创建成功:

-

-```shell

-{"acknowledged":true,"shards_acknowledged":true,"index":"person"}

-```

-

-给索引 person 添加字段:

-

-```shell

-curl -X PUT "127.0.0.1:9200/person/_mapping" -H 'Content-Type: application/json' -u elastic:elastic -d'

-{

- "properties": {

- "id": { "type": "integer" },

- "name": { "type": "text" },

- "birthday": {"type": "date"}

- }

-}

-'

-```

-

-输出如下信息表示设置成功:

-

-```shell

-{"acknowledged":true}

-```

-

-### 为 ElasticSearch 索引添加数据

-

-通过 curl 命令添加三条数据:

-

-```shell

-curl -X POST '127.0.0.1:9200/person/_bulk' -H 'Content-Type: application/json' -u elastic:elastic -d '

-{"index":{"_index":"person","_type":"_doc","_id":1}}

-{"id": 1,"name": "MatrixOne","birthday": "1992-08-08"}

-{"index":{"_index":"person","_type":"_doc","_id":2}}

-{"id": 2,"name": "MO","birthday": "1993-08-08"}

-{"index":{"_index":"person","_type":"_doc","_id":3}}

-{"id": 3,"name": "墨墨","birthday": "1994-08-08"}

-'

-```

-

-输出如下信息表示执行成功:

-

-```shell

-{"took":5,"errors":false,"items":[{"index":{"_index":"person","_type":"_doc","_id":"1","_version":1,"result":"created","_shards":{"total":2,"successful":1,"failed":0},"_seq_no":0,"_primary_term":1,"status":201}},{"index":{"_index":"person","_type":"_doc","_id":"2","_version":1,"result":"created","_shards":{"total":2,"successful":1,"failed":0},"_seq_no":1,"_primary_term":1,"status":201}},{"index":{"_index":"person","_type":"_doc","_id":"3","_version":1,"result":"created","_shards":{"total":2,"successful":1,"failed":0},"_seq_no":2,"_primary_term":1,"status":201}}]}

-```

-

-查看索引中所有内容:

-

-```shell

-curl -u elastic:elastic -X GET http://127.0.0.1:9200/person/_search?pretty -H 'Content-Type: application/json' -d'

-{

- "query" : {

- "match_all": {}

- }

-}'

-```

-

-可正常看到索引中新增的数据即表示执行成功。

-

-### 使用 DataX 导入数据

-

-#### 1. 下载并解压 DataX

-

-DataX 解压后目录如下:

-

-```shell

-[root@node01 datax]# ll

-total 4

-drwxr-xr-x. 2 root root 59 Nov 28 13:48 bin

-drwxr-xr-x. 2 root root 68 Oct 11 09:55 conf

-drwxr-xr-x. 2 root root 22 Oct 11 09:55 job

-drwxr-xr-x. 2 root root 4096 Oct 11 09:55 lib

-drwxr-xr-x. 4 root root 42 Oct 12 18:42 log

-drwxr-xr-x. 4 root root 42 Oct 12 18:42 log_perf

-drwxr-xr-x. 4 root root 34 Oct 11 09:55 plugin

-drwxr-xr-x. 2 root root 23 Oct 11 09:55 script

-drwxr-xr-x. 2 root root 24 Oct 11 09:55 tmp

-```

-

-为保证迁移的易用性和高效性,MatrixOne 社区开发了 `elasticsearchreader` 以及 `matrixonewriter` 两个插件,将 [elasticsearchreader.zip](https://community-shared-data-1308875761.cos.ap-beijing.myqcloud.com/datax_es_mo/elasticsearchreader.zip) 下载后使用 `unzip` 命令解压至 `datax/plugin/reader` 目录下(注意不要在该目录中保留插件 zip 包,关于 elasticsearchreader 的详细介绍可参考插件包内的 elasticsearchreader.md 文档),同样,将 [matrixonewriter.zip](https://community-shared-data-1308875761.cos.ap-beijing.myqcloud.com/artwork/docs/develop/Computing-Engine/datax-write/matrixonewriter.zip) 下载后解压至 `datax/plugin/writer` 目录下,matrixonewriter 是社区基于 mysqlwriter 的改造版,使用 mysql-connector-j-8.0.33.jar 驱动来保证更好的性能和兼容性,writer 部分的其语法可参考上文“参数说明”章节。

-

-在进行后续的操作前,请先检查插件是否已正确分发在对应的位置中。

-

-#### 2. 编写 ElasticSearch 至 MatrixOne 的迁移作业文件

-

-DataX 使用 json 文件来配置作业信息,编写作业文件例如 **es2mo.json**,习惯性的可以将其存放在 `datax/job` 目录中:

-

-```json

-{

- "job":{

- "setting":{

- "speed":{

- "channel":1

- },

- "errorLimit":{

- "record":0,

- "percentage":0.02

- }

- },

- "content":[

- {

- "reader":{

- "name":"elasticsearchreader",

- "parameter":{

- "endpoint":"http://127.0.0.1:9200",

- "accessId":"elastic",

- "accessKey":"elastic",

- "index":"person",

- "type":"_doc",

- "headers":{

-

- },

- "scroll":"3m",

- "search":[

- {

- "query":{

- "match_all":{

-

- }

- }

- }

- ],

- "table":{

- "filter":"",

- "nameCase":"UPPERCASE",

- "column":[

- {

- "name":"id",

- "type":"integer"

- },

- {

- "name":"name",

- "type":"text"

- },

- {

- "name":"birthday",

- "type":"date"

- }

- ]

- }

- }

- },

- "writer":{

- "name":"matrixonewriter",

- "parameter":{

- "username":"root",

- "password":"111",

- "column":[

- "id",

- "name",

- "birthday"

- ],

- "connection":[

- {

- "table":[

- "person"

- ],

- "jdbcUrl":"jdbc:mysql://127.0.0.1:6001/mo"

- }

- ]

- }

- }

- }

- ]

- }

-}

-```

-

-#### 3. 执行迁移任务

-

-进入 datax 安装目录,执行以下命令启动迁移作业:

-

-```shell

-cd datax

-python bin/datax.py job/es2mo.json

-```

-

-作业执行完成后,输出结果如下:

-

-```shell

-2023-11-28 15:55:45.642 [job-0] INFO StandAloneJobContainerCommunicator - Total 3 records, 67 bytes | Speed 6B/s, 0 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.456s | Percentage 100.00%

-2023-11-28 15:55:45.644 [job-0] INFO JobContainer -

-任务启动时刻 : 2023-11-28 15:55:31

-任务结束时刻 : 2023-11-28 15:55:45

-任务总计耗时 : 14s

-任务平均流量 : 6B/s

-记录写入速度 : 0rec/s

-读出记录总数 : 3

-读写失败总数 : 0

-```

-

-#### 4. 在 MatrixOne 中查看迁移后数据

-

-在 MatrixOne 数据库中查看目标表中的结果,确认迁移已完成:

-

-```shell

-mysql> select * from mo.person;

-+------+-----------+------------+

-| id | name | birthday |

-+------+-----------+------------+

-| 1 | MatrixOne | 1992-08-08 |

-| 2 | MO | 1993-08-08 |

-| 3 | 墨墨 | 1994-08-08 |

-+------+-----------+------------+

-3 rows in set (0.00 sec)

-```

-

-#### 5. 编写 MatrixOne 至 ElasticSearch 的作业文件

-

-编写 datax 作业文件 **mo2es.json**,同样放在 `datax/job` 目录,MatrixOne 高度兼容 MySQL 协议,我们可以直接使用 mysqlreader 来通过 jdbc 方式读取 MatrixOne 中的数据:

-

-```json

-{

- "job": {

- "setting": {

- "speed": {

- "channel": 1

- },

- "errorLimit": {

- "record": 0,

- "percentage": 0.02

- }

- },

- "content": [{

- "reader": {

- "name": "mysqlreader",

- "parameter": {

- "username": "root",

- "password": "111",

- "column": [

- "id",

- "name",

- "birthday"

- ],

- "splitPk": "id",

- "connection": [{

- "table": [

- "person"

- ],

- "jdbcUrl": [

- "jdbc:mysql://127.0.0.1:6001/mo"

- ]

- }]

- }

- },

- "writer": {

- "name": "elasticsearchwriter",

- "parameter": {

- "endpoint": "http://127.0.0.1:9200",

- "accessId": "elastic",

- "accessKey": "elastic",

- "index": "person",

- "type": "_doc",

- "cleanup": true,

- "settings": {

- "index": {

- "number_of_shards": 1,

- "number_of_replicas": 1

- }

- },

- "discovery": false,

- "batchSize": 1000,

- "splitter": ",",

- "column": [{

- "name": "id",

- "type": "integer"

- },

- {

- "name": "name",

- "type": "text"

- },

- {

- "name": "birthday",

- "type": "date"

- }

- ]

-

- }

-

- }

- }]

- }

-}

-```

-

-#### 6.MatrixOne 数据准备

-

-```sql

-truncate table mo.person;

-INSERT into mo.person (id, name, birthday)

-VALUES(1, 'mo101', '2023-07-09'),(2, 'mo102', '2023-07-08'),(3, 'mo103', '2023-07-12');

-```

-

-#### 7. 执行 MatrixOne 向 ElasticSearch 的迁移任务

-

-进入 datax 安装目录,执行以下命令

-

-```shell

-cd datax

-python bin/datax.py job/mo2es.json

-```

-

-执行完成后,输出结果如下:

-

-```shell

-2023-11-28 17:38:04.795 [job-0] INFO StandAloneJobContainerCommunicator - Total 3 records, 42 bytes | Speed 4B/s, 0 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.000s | Percentage 100.00%

-2023-11-28 17:38:04.799 [job-0] INFO JobContainer -

-任务启动时刻 : 2023-11-28 17:37:49

-任务结束时刻 : 2023-11-28 17:38:04

-任务总计耗时 : 15s

-任务平均流量 : 4B/s

-记录写入速度 : 0rec/s

-读出记录总数 : 3

-读写失败总数 : 0

-```

-

-#### 8. 查看执行结果

-

-在 Elasticsearch 中查看结果

-

-```shell

-curl -u elastic:elastic -X GET http://127.0.0.1:9200/person/_search?pretty -H 'Content-Type: application/json' -d'

-{

- "query" : {

- "match_all": {}

- }

-}'

-```

-

-结果显示如下,表示迁移作业已正常完成:

-

-```json

-{

- "took" : 7,

- "timed_out" : false,

- "_shards" : {

- "total" : 1,

- "successful" : 1,

- "skipped" : 0,

- "failed" : 0

- },

- "hits" : {

- "total" : {

- "value" : 3,

- "relation" : "eq"

- },

- "max_score" : 1.0,

- "hits" : [

- {

- "_index" : "person",

- "_type" : "_doc",

- "_id" : "dv9QFYwBPwIzfbNQfgG1",

- "_score" : 1.0,

- "_source" : {

- "birthday" : "2023-07-09T00:00:00.000+08:00",

- "name" : "mo101",

- "id" : 1

- }

- },

- {

- "_index" : "person",

- "_type" : "_doc",

- "_id" : "d_9QFYwBPwIzfbNQfgG1",

- "_score" : 1.0,

- "_source" : {

- "birthday" : "2023-07-08T00:00:00.000+08:00",

- "name" : "mo102",

- "id" : 2

- }

- },

- {

- "_index" : "person",

- "_type" : "_doc",

- "_id" : "eP9QFYwBPwIzfbNQfgG1",

- "_score" : 1.0,

- "_source" : {

- "birthday" : "2023-07-12T00:00:00.000+08:00",

- "name" : "mo103",

- "id" : 3

- }

- }

- ]

- }

-}

-```

-

-## 常见问题

-

-**Q: 在运行时,我遇到了“配置信息错误,您提供的配置文件/{YOUR_MATRIXONE_WRITER_PATH}/plugin.json 不存在”的问题该怎么处理?**

-

-A: DataX 在启动时会尝试查找相似的文件夹以寻找 plugin.json 文件。如果 matrixonewriter.zip 文件也存在于相同的目录下,DataX 将尝试从 `.../datax/plugin/writer/matrixonewriter.zip/plugin.json` 中查找。在 MacOS 环境下,DataX 还会尝试从 `.../datax/plugin/writer/.DS_Store/plugin.json` 中查找。此时,您需要删除这些多余的文件或文件夹。

diff --git a/docs/MatrixOne/Develop/Ecological-Tools/Computing-Engine/Flink.md b/docs/MatrixOne/Develop/Ecological-Tools/Computing-Engine/Flink.md

deleted file mode 100644

index 9cc896e2a4..0000000000

--- a/docs/MatrixOne/Develop/Ecological-Tools/Computing-Engine/Flink.md

+++ /dev/null

@@ -1,812 +0,0 @@

-# 使用 Flink 将实时数据写入 MatrixOne

-

-## 概述

-

-Apache Flink 是一个强大的框架和分布式处理引擎,专注于进行有状态计算,适用于处理无边界和有边界的数据流。Flink 能够在各种常见集群环境中高效运行,并以内存速度执行计算,支持处理任意规模的数据。

-

-### 应用场景

-

-* 事件驱动型应用

-

- 事件驱动型应用通常具备状态,并且它们从一个或多个事件流中提取数据,根据到达的事件触发计算、状态更新或执行其他外部动作。典型的事件驱动型应用包括反欺诈系统、异常检测、基于规则的报警系统和业务流程监控。

-

-* 数据分析应用

-

- 数据分析任务的主要目标是从原始数据中提取有价值的信息和指标。Flink 支持流式和批量分析应用,适用于各种场景,例如电信网络质量监控、移动应用中的产品更新和实验评估分析、消费者技术领域的实时数据即席分析以及大规模图分析。

-

-* 数据管道应用

-

- 提取 - 转换 - 加载(ETL)是在不同存储系统之间进行数据转换和迁移的常见方法。数据管道和 ETL 作业有相似之处,都可以进行数据转换和丰富,然后将数据从一个存储系统移动到另一个存储系统。不同之处在于数据管道以持续流模式运行,而不是周期性触发。典型的数据管道应用包括电子商务中的实时查询索引构建和持续 ETL。

-

-本篇文档将介绍两种示例,一种是使用计算引擎 Flink 实现将实时数据写入到 MatrixOne,另一种是使用计算引擎 Flink 将流式数据写入到 MatrixOne 数据库。

-

-## 前期准备

-

-### 硬件环境

-

-本次实践对于机器的硬件要求如下:

-

-| 服务器名称 | 服务器 IP | 安装软件 | 操作系统 |

-| ---------- | -------------- | ----------- | -------------- |

-| node1 | 192.168.146.10 | MatrixOne | Debian11.1 x86 |

-| node2 | 192.168.146.12 | kafka | Centos7.9 |

-| node3 | 192.168.146.11 | IDEA、MYSQL | win10 |

-

-### 软件环境

-

-本次实践需要安装部署以下软件环境:

-

-- 完成[单机部署 MatrixOne](https://docs.matrixorigin.cn/1.2.1/MatrixOne/Get-Started/install-standalone-matrixone/)。

-- 下载安装 [lntelliJ IDEA(2022.2.1 or later version)](https://www.jetbrains.com/idea/download/)。

-- 根据你的系统环境选择 [JDK 8+ version](https://www.oracle.com/sg/java/technologies/javase/javase8-archive-downloads.html) 版本进行下载安装。

-- 下载并安装 [Kafka](https://archive.apache.org/dist/kafka/3.5.0/kafka_2.13-3.5.0.tgz),推荐版本为 2.13 - 3.5.0。

-- 下载并安装 [Flink](https://archive.apache.org/dist/flink/flink-1.17.0/flink-1.17.0-bin-scala_2.12.tgz),推荐版本为 1.17.0。

-- 下载并安装 [MySQL](https://downloads.mysql.com/archives/get/p/23/file/mysql-server_8.0.33-1ubuntu23.04_amd64.deb-bundle.tar),推荐版本为 8.0.33。

-

-## 示例 1:从 MySQL 迁移数据至 MatrixOne

-

-### 步骤一:初始化项目

-

-1. 打开 IDEA,点击 **File > New > Project**,选择 **Spring Initializer**,并填写以下配置参数:

-

- - **Name**:matrixone-flink-demo

- - **Location**:~\Desktop

- - **Language**:Java

- - **Type**:Maven

- - **Group**:com.example

- - **Artifact**:matrixone-flink-demo

- - **Package name**:com.matrixone.flink.demo

- - **JDK** 1.8

-

- 配置示例如下图所示:

-

- <div align="center">

- <img src=https://community-shared-data-1308875761.cos.ap-beijing.myqcloud.com/artwork/docs/develop/flink/matrixone-flink-demo.png width=50% heigth=50%/>

- </div>

-

-2. 添加项目依赖,编辑项目根目录下的 `pom.xml` 文件,将以下内容添加到文件中:

-

-```xml

-<?xml version="1.0" encoding="UTF-8"?>

-<project xmlns="http://maven.apache.org/POM/4.0.0"

- xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

- xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

- <modelVersion>4.0.0</modelVersion>

-

- <groupId>com.matrixone.flink</groupId>

- <artifactId>matrixone-flink-demo</artifactId>

- <version>1.0-SNAPSHOT</version>

-

- <properties>

- <scala.binary.version>2.12</scala.binary.version>

- <java.version>1.8</java.version>

- <flink.version>1.17.0</flink.version>

- <scope.mode>compile</scope.mode>

- </properties>

-

- <dependencies>

-

- <!-- Flink Dependency -->

- <dependency>

- <groupId>org.apache.flink</groupId>

- <artifactId>flink-connector-hive_2.12</artifactId>

- <version>${flink.version}</version>

- </dependency>

-

- <dependency>

- <groupId>org.apache.flink</groupId>

- <artifactId>flink-java</artifactId>

- <version>${flink.version}</version>

- </dependency>

-

- <dependency>

- <groupId>org.apache.flink</groupId>

- <artifactId>flink-streaming-java</artifactId>

- <version>${flink.version}</version>

- </dependency>

-

- <dependency>

- <groupId>org.apache.flink</groupId>

- <artifactId>flink-clients</artifactId>

- <version>${flink.version}</version>

- </dependency>

-

- <dependency>

- <groupId>org.apache.flink</groupId>

- <artifactId>flink-table-api-java-bridge</artifactId>

- <version>${flink.version}</version>

- </dependency>

-

- <dependency>

- <groupId>org.apache.flink</groupId>

- <artifactId>flink-table-planner_2.12</artifactId>

- <version>${flink.version}</version>

- </dependency>

-

- <!-- JDBC相关依赖包 -->

- <dependency>

- <groupId>org.apache.flink</groupId>

- <artifactId>flink-connector-jdbc</artifactId>

- <version>1.15.4</version>

- </dependency>

- <dependency>

- <groupId>mysql</groupId>

- <artifactId>mysql-connector-java</artifactId>

- <version>8.0.33</version>

- </dependency>

-

- <!-- Kafka相关依赖 -->

- <dependency>

- <groupId>org.apache.kafka</groupId>

- <artifactId>kafka_2.13</artifactId>

- <version>3.5.0</version>

- </dependency>

- <dependency>

- <groupId>org.apache.flink</groupId>

- <artifactId>flink-connector-kafka</artifactId>

- <version>3.0.0-1.17</version>

- </dependency>

-

- <!-- JSON -->

- <dependency>

- <groupId>com.alibaba.fastjson2</groupId>

- <artifactId>fastjson2</artifactId>

- <version>2.0.34</version>

- </dependency>

-

- </dependencies>

-

-

-

-

- <build>

- <plugins>

- <plugin>

- <groupId>org.apache.maven.plugins</groupId>

- <artifactId>maven-compiler-plugin</artifactId>

- <version>3.8.0</version>

- <configuration>

- <source>${java.version}</source>

- <target>${java.version}</target>

- <encoding>UTF-8</encoding>

- </configuration>

- </plugin>

- <plugin>

- <artifactId>maven-assembly-plugin</artifactId>

- <version>2.6</version>

- <configuration>

- <descriptorRefs>

- <descriptor>jar-with-dependencies</descriptor>

- </descriptorRefs>

- </configuration>

- <executions>

- <execution>

- <id>make-assembly</id>

- <phase>package</phase>

- <goals>

- <goal>single</goal>

- </goals>

- </execution>

- </executions>

- </plugin>

-

- </plugins>

- </build>

-

-</project>

-```

-

-### 步骤二:读取 MatrixOne 数据

-

-使用 MySQL 客户端连接 MatrixOne 后,创建演示所需的数据库以及数据表。

-

-1. 在 MatrixOne 中创建数据库、数据表,并导入数据:

-

- ```SQL

- CREATE DATABASE test;

- USE test;

- CREATE TABLE `person` (`id` INT DEFAULT NULL, `name` VARCHAR(255) DEFAULT NULL, `birthday` DATE DEFAULT NULL);

- INSERT INTO test.person (id, name, birthday) VALUES(1, 'zhangsan', '2023-07-09'),(2, 'lisi', '2023-07-08'),(3, 'wangwu', '2023-07-12');

- ```

-

-2. 在 IDEA 中创建 `MoRead.java` 类,以使用 Flink 读取 MatrixOne 数据:

-

- ```java

- package com.matrixone.flink.demo;

-

- import org.apache.flink.api.common.functions.MapFunction;

- import org.apache.flink.api.common.typeinfo.BasicTypeInfo;

- import org.apache.flink.api.java.ExecutionEnvironment;

- import org.apache.flink.api.java.operators.DataSource;

- import org.apache.flink.api.java.operators.MapOperator;

- import org.apache.flink.api.java.typeutils.RowTypeInfo;

- import org.apache.flink.connector.jdbc.JdbcInputFormat;

- import org.apache.flink.types.Row;

-

- import java.text.SimpleDateFormat;

-

- /**

- * @author MatrixOne

- * @description

- */

- public class MoRead {

-

- private static String srcHost = "192.168.146.10";

- private static Integer srcPort = 6001;

- private static String srcUserName = "root";

- private static String srcPassword = "111";

- private static String srcDataBase = "test";

-

- public static void main(String[] args) throws Exception {

-

- ExecutionEnvironment environment = ExecutionEnvironment.getExecutionEnvironment();

- // 设置并行度

- environment.setParallelism(1);

- SimpleDateFormat sdf = new SimpleDateFormat("yyyy-MM-dd");

-

- // 设置查询的字段类型

- RowTypeInfo rowTypeInfo = new RowTypeInfo(

- new BasicTypeInfo[]{

- BasicTypeInfo.INT_TYPE_INFO,

- BasicTypeInfo.STRING_TYPE_INFO,

- BasicTypeInfo.DATE_TYPE_INFO

- },

- new String[]{

- "id",

- "name",

- "birthday"

- }

- );

-

- DataSource<Row> dataSource = environment.createInput(JdbcInputFormat.buildJdbcInputFormat()

- .setDrivername("com.mysql.cj.jdbc.Driver")

- .setDBUrl("jdbc:mysql://" + srcHost + ":" + srcPort + "/" + srcDataBase)

- .setUsername(srcUserName)

- .setPassword(srcPassword)

- .setQuery("select * from person")

- .setRowTypeInfo(rowTypeInfo)

- .finish());

-

- // 将 Wed Jul 12 00:00:00 CST 2023 日期格式转换为 2023-07-12

- MapOperator<Row, Row> mapOperator = dataSource.map((MapFunction<Row, Row>) row -> {

- row.setField("birthday", sdf.format(row.getField("birthday")));

- return row;

- });

-

- mapOperator.print();

- }

- }

- ```

-

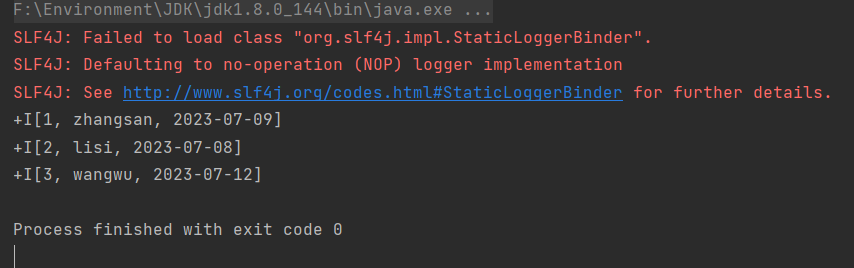

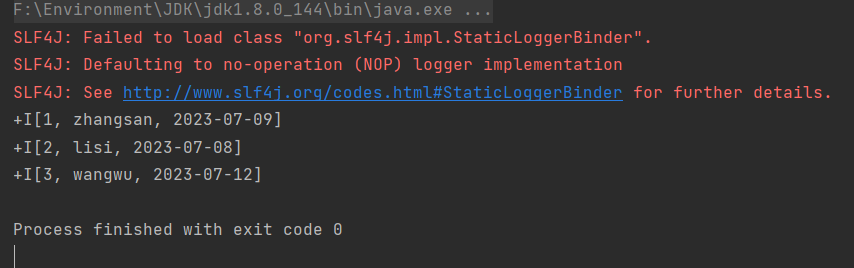

-3. 在 IDEA 中运行 `MoRead.Main()`,执行结果如下:

-

-

-

-### 步骤三:将 MySQL 数据写入 MatrixOne

-

-现在可以开始使用 Flink 将 MySQL 数据迁移到 MatrixOne。

-

-1. 准备 MySQL 数据:在 node3 上,使用 Mysql 客户端连接本地 Mysql,创建所需数据库、数据表、并插入数据:

-

- ```sql

- mysql -h127.0.0.1 -P3306 -uroot -proot

- mysql> CREATE DATABASE motest;

- mysql> USE motest;

- mysql> CREATE TABLE `person` (`id` int DEFAULT NULL, `name` varchar(255) DEFAULT NULL, `birthday` date DEFAULT NULL);

- mysql> INSERT INTO motest.person (id, name, birthday) VALUES(2, 'lisi', '2023-07-09'),(3, 'wangwu', '2023-07-13'),(4, 'zhaoliu', '2023-08-08');

- ```

-

-2. 清空 MatrixOne 表数据:

-

- 在 node3 上,使用 MySQL 客户端连接 node1 的 MatrixOne。由于本示例继续使用前面读取 MatrixOne 数据的示例中的 `test` 数据库,因此我们需要首先清空 `person` 表的数据。

-

- ```sql

- -- 在 node3 上,使用 Mysql 客户端连接 node1 的 MatrixOne

- mysql -h192.168.146.10 -P6001 -uroot -p111

- mysql> TRUNCATE TABLE test.person;

- ```

-

-3. 在 IDEA 中编写代码:

-

- 创建 `Person.java` 和 `Mysql2Mo.java` 类,使用 Flink 读取 MySQL 数据,执行简单的 ETL 操作(将 Row 转换为 Person 对象),最终将数据写入 MatrixOne 中。

-

-```java

-package com.matrixone.flink.demo.entity;

-

-

-import java.util.Date;

-

-public class Person {

-

- private int id;

- private String name;

- private Date birthday;

-

- public int getId() {

- return id;

- }

-

- public void setId(int id) {

- this.id = id;

- }

-

- public String getName() {

- return name;

- }

-

- public void setName(String name) {

- this.name = name;

- }

-

- public Date getBirthday() {

- return birthday;

- }

-

- public void setBirthday(Date birthday) {

- this.birthday = birthday;

- }

-}

-```

-

-```java

-package com.matrixone.flink.demo;

-

-import com.matrixone.flink.demo.entity.Person;

-import org.apache.flink.api.common.functions.MapFunction;

-import org.apache.flink.api.common.typeinfo.BasicTypeInfo;

-import org.apache.flink.api.java.typeutils.RowTypeInfo;

-import org.apache.flink.connector.jdbc.*;

-import org.apache.flink.streaming.api.datastream.DataStreamSink;

-import org.apache.flink.streaming.api.datastream.DataStreamSource;

-import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

-import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

-import org.apache.flink.types.Row;

-

-import java.sql.Date;

-

-/**

- * @author MatrixOne

- * @description

- */

-public class Mysql2Mo {

-

- private static String srcHost = "127.0.0.1";

- private static Integer srcPort = 3306;

- private static String srcUserName = "root";

- private static String srcPassword = "root";

- private static String srcDataBase = "motest";

-

- private static String destHost = "192.168.146.10";

- private static Integer destPort = 6001;

- private static String destUserName = "root";

- private static String destPassword = "111";

- private static String destDataBase = "test";

- private static String destTable = "person";

-

-

- public static void main(String[] args) throws Exception {

-

- StreamExecutionEnvironment environment = StreamExecutionEnvironment.getExecutionEnvironment();

- //设置并行度

- environment.setParallelism(1);

- //设置查询的字段类型

- RowTypeInfo rowTypeInfo = new RowTypeInfo(

- new BasicTypeInfo[]{

- BasicTypeInfo.INT_TYPE_INFO,

- BasicTypeInfo.STRING_TYPE_INFO,

- BasicTypeInfo.DATE_TYPE_INFO

- },

- new String[]{

- "id",

- "name",

- "birthday"

- }

- );

-

- //添加 srouce

- DataStreamSource<Row> dataSource = environment.createInput(JdbcInputFormat.buildJdbcInputFormat()

- .setDrivername("com.mysql.cj.jdbc.Driver")

- .setDBUrl("jdbc:mysql://" + srcHost + ":" + srcPort + "/" + srcDataBase)

- .setUsername(srcUserName)

- .setPassword(srcPassword)

- .setQuery("select * from person")

- .setRowTypeInfo(rowTypeInfo)

- .finish());

-

- //进行 ETL

- SingleOutputStreamOperator<Person> mapOperator = dataSource.map((MapFunction<Row, Person>) row -> {

- Person person = new Person();

- person.setId((Integer) row.getField("id"));

- person.setName((String) row.getField("name"));

- person.setBirthday((java.util.Date)row.getField("birthday"));

- return person;

- });

-

- //设置 matrixone sink 信息

- mapOperator.addSink(

- JdbcSink.sink(

- "insert into " + destTable + " values(?,?,?)",

- (ps, t) -> {

- ps.setInt(1, t.getId());

- ps.setString(2, t.getName());

- ps.setDate(3, new Date(t.getBirthday().getTime()));

- },

- new JdbcConnectionOptions.JdbcConnectionOptionsBuilder()

- .withDriverName("com.mysql.cj.jdbc.Driver")

- .withUrl("jdbc:mysql://" + destHost + ":" + destPort + "/" + destDataBase)

- .withUsername(destUserName)

- .withPassword(destPassword)

- .build()

- )

- );

-

- environment.execute();

- }

-

-}

-```

-

-### 步骤四:查看执行结果

-

-在 MatrixOne 中执行如下 SQL 查询结果:

-

-```sql

-mysql> select * from test.person;

-+------+---------+------------+

-| id | name | birthday |

-+------+---------+------------+

-| 2 | lisi | 2023-07-09 |

-| 3 | wangwu | 2023-07-13 |

-| 4 | zhaoliu | 2023-08-08 |

-+------+---------+------------+

-3 rows in set (0.01 sec)

-```

-

-## 示例 2:将 Kafka 数据写入 MatrixOne

-

-### 步骤一:启动 Kafka 服务

-

-Kafka 集群协调和元数据管理可以通过 KRaft 或 ZooKeeper 来实现。在这里,我们将使用 Kafka 3.5.0 版本,无需依赖独立的 ZooKeeper 软件,而是使用 Kafka 自带的 **KRaft** 来进行元数据管理。请按照以下步骤配置配置文件,该文件位于 Kafka 软件根目录下的 `config/kraft/server.properties`。

-

-配置文件内容如下:

-

-```properties

-# Licensed to the Apache Software Foundation (ASF) under one or more

-# contributor license agreements. See the NOTICE file distributed with

-# this work for additional information regarding copyright ownership.

-# The ASF licenses this file to You under the Apache License, Version 2.0

-# (the "License"); you may not use this file except in compliance with

-# the License. You may obtain a copy of the License at

-#

-# http://www.apache.org/licenses/LICENSE-2.0

-#

-# Unless required by applicable law or agreed to in writing, software

-# distributed under the License is distributed on an "AS IS" BASIS,

-# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-# See the License for the specific language governing permissions and

-# limitations under the License.

-

-#

-# This configuration file is intended for use in KRaft mode, where

-# Apache ZooKeeper is not present. See config/kraft/README.md for details.

-#

-

-############################# Server Basics #############################

-

-# The role of this server. Setting this puts us in KRaft mode

-process.roles=broker,controller

-

-# The node id associated with this instance's roles

-node.id=1

-

-# The connect string for the controller quorum

-controller.quorum.voters=1@192.168.146.12:9093

-

-############################# Socket Server Settings #############################

-

-# The address the socket server listens on.

-# Combined nodes (i.e. those with `process.roles=broker,controller`) must list the controller listener here at a minimum.

-# If the broker listener is not defined, the default listener will use a host name that is equal to the value of java.net.InetAddress.getCanonicalHostName(),

-# with PLAINTEXT listener name, and port 9092.

-# FORMAT:

-# listeners = listener_name://host_name:port

-# EXAMPLE:

-# listeners = PLAINTEXT://your.host.name:9092

-#listeners=PLAINTEXT://:9092,CONTROLLER://:9093

-listeners=PLAINTEXT://192.168.146.12:9092,CONTROLLER://192.168.146.12:9093

-

-# Name of listener used for communication between brokers.

-inter.broker.listener.name=PLAINTEXT

-

-# Listener name, hostname and port the broker will advertise to clients.

-# If not set, it uses the value for "listeners".

-#advertised.listeners=PLAINTEXT://localhost:9092

-

-# A comma-separated list of the names of the listeners used by the controller.

-# If no explicit mapping set in `listener.security.protocol.map`, default will be using PLAINTEXT protocol

-# This is required if running in KRaft mode.

-controller.listener.names=CONTROLLER

-

-# Maps listener names to security protocols, the default is for them to be the same. See the config documentation for more details

-listener.security.protocol.map=CONTROLLER:PLAINTEXT,PLAINTEXT:PLAINTEXT,SSL:SSL,SASL_PLAINTEXT:SASL_PLAINTEXT,SASL_SSL:SASL_SSL

-

-# The number of threads that the server uses for receiving requests from the network and sending responses to the network

-num.network.threads=3

-

-# The number of threads that the server uses for processing requests, which may include disk I/O

-num.io.threads=8

-

-# The send buffer (SO_SNDBUF) used by the socket server

-socket.send.buffer.bytes=102400

-

-# The receive buffer (SO_RCVBUF) used by the socket server

-socket.receive.buffer.bytes=102400

-

-# The maximum size of a request that the socket server will accept (protection against OOM)

-socket.request.max.bytes=104857600

-

-

-############################# Log Basics #############################

-

-# A comma separated list of directories under which to store log files

-log.dirs=/home/software/kafka_2.13-3.5.0/kraft-combined-logs

-

-# The default number of log partitions per topic. More partitions allow greater

-# parallelism for consumption, but this will also result in more files across

-# the brokers.

-num.partitions=1

-

-# The number of threads per data directory to be used for log recovery at startup and flushing at shutdown.

-# This value is recommended to be increased for installations with data dirs located in RAID array.

-num.recovery.threads.per.data.dir=1

-

-############################# Internal Topic Settings #############################

-# The replication factor for the group metadata internal topics "__consumer_offsets" and "__transaction_state"

-# For anything other than development testing, a value greater than 1 is recommended to ensure availability such as 3.

-offsets.topic.replication.factor=1

-transaction.state.log.replication.factor=1

-transaction.state.log.min.isr=1

-

-############################# Log Flush Policy #############################

-

-# Messages are immediately written to the filesystem but by default we only fsync() to sync

-# the OS cache lazily. The following configurations control the flush of data to disk.

-# There are a few important trade-offs here:

-# 1. Durability: Unflushed data may be lost if you are not using replication.

-# 2. Latency: Very large flush intervals may lead to latency spikes when the flush does occur as there will be a lot of data to flush.

-# 3. Throughput: The flush is generally the most expensive operation, and a small flush interval may lead to excessive seeks.

-# The settings below allow one to configure the flush policy to flush data after a period of time or

-# every N messages (or both). This can be done globally and overridden on a per-topic basis.

-

-# The number of messages to accept before forcing a flush of data to disk

-#log.flush.interval.messages=10000

-

-# The maximum amount of time a message can sit in a log before we force a flush

-#log.flush.interval.ms=1000

-

-############################# Log Retention Policy #############################

-

-# The following configurations control the disposal of log segments. The policy can

-# be set to delete segments after a period of time, or after a given size has accumulated.

-# A segment will be deleted whenever *either* of these criteria are met. Deletion always happens

-# from the end of the log.

-

-# The minimum age of a log file to be eligible for deletion due to age

-log.retention.hours=72

-

-# A size-based retention policy for logs. Segments are pruned from the log unless the remaining

-# segments drop below log.retention.bytes. Functions independently of log.retention.hours.

-#log.retention.bytes=1073741824

-

-# The maximum size of a log segment file. When this size is reached a new log segment will be created.

-log.segment.bytes=1073741824

-

-# The interval at which log segments are checked to see if they can be deleted according

-# to the retention policies

-log.retention.check.interval.ms=300000

-```

-

-文件配置完成后,执行如下命令,启动 Kafka 服务:

-

-```shell

-#生成集群ID

-$ KAFKA_CLUSTER_ID="$(bin/kafka-storage.sh random-uuid)"

-#设置日志目录格式

-$ bin/kafka-storage.sh format -t $KAFKA_CLUSTER_ID -c config/kraft/server.properties

-#启动Kafka服务

-$ bin/kafka-server-start.sh config/kraft/server.properties

-```

-

-### 步骤二:创建 Kafka 主题

-

-为了使 Flink 能够从中读取数据并写入到 MatrixOne,我们需要首先创建一个名为 "matrixone" 的 Kafka 主题。在下面的命令中,使用 `--bootstrap-server` 参数指定 Kafka 服务的监听地址为 `192.168.146.12:9092`:

-

-```shell

-$ bin/kafka-topics.sh --create --topic matrixone --bootstrap-server 192.168.146.12:9092

-```

-

-### 步骤三:读取 MatrixOne 数据

-

-在连接到 MatrixOne 数据库之后,需要执行以下操作以创建所需的数据库和数据表:

-

-1. 在 MatrixOne 中创建数据库和数据表,并导入数据:

-

- ```sql

- CREATE TABLE `users` (

- `id` INT DEFAULT NULL,

- `name` VARCHAR(255) DEFAULT NULL,

- `age` INT DEFAULT NULL

- )

- ```

-

-2. 在 IDEA 集成开发环境中编写代码:

-

- 在 IDEA 中,创建两个类:`User.java` 和 `Kafka2Mo.java`。这些类用于使用 Flink 从 Kafka 读取数据,并将数据写入 MatrixOne 数据库中。

-

-```java

-package com.matrixone.flink.demo.entity;

-

-public class User {

-

- private int id;

- private String name;

- private int age;

-

- public int getId() {

- return id;

- }

-

- public void setId(int id) {

- this.id = id;

- }

-

- public String getName() {

- return name;

- }

-

- public void setName(String name) {

- this.name = name;

- }

-

- public int getAge() {

- return age;

- }

-

- public void setAge(int age) {

- this.age = age;

- }

-}

-```

-

-```java

-package com.matrixone.flink.demo;

-

-import com.alibaba.fastjson2.JSON;

-import com.matrixone.flink.demo.entity.User;

-import org.apache.flink.api.common.eventtime.WatermarkStrategy;

-import org.apache.flink.api.common.serialization.AbstractDeserializationSchema;

-import org.apache.flink.connector.jdbc.JdbcExecutionOptions;

-import org.apache.flink.connector.jdbc.JdbcSink;

-import org.apache.flink.connector.jdbc.JdbcStatementBuilder;

-import org.apache.flink.connector.jdbc.internal.options.JdbcConnectorOptions;

-import org.apache.flink.connector.kafka.source.KafkaSource;

-import org.apache.flink.connector.kafka.source.enumerator.initializer.OffsetsInitializer;

-import org.apache.flink.streaming.api.datastream.DataStreamSource;

-import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

-import org.apache.kafka.clients.consumer.OffsetResetStrategy;

-

-import java.nio.charset.StandardCharsets;

-

-/**

- * @author MatrixOne

- * @desc

- */

-public class Kafka2Mo {

-

- private static String srcServer = "192.168.146.12:9092";

- private static String srcTopic = "matrixone";

- private static String consumerGroup = "matrixone_group";

-

- private static String destHost = "192.168.146.10";

- private static Integer destPort = 6001;

- private static String destUserName = "root";

- private static String destPassword = "111";

- private static String destDataBase = "test";

- private static String destTable = "person";

-

- public static void main(String[] args) throws Exception {

-

- //初始化环境

- StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

- //设置并行度

- env.setParallelism(1);

-

- //设置 kafka source 信息

- KafkaSource<User> source = KafkaSource.<User>builder()

- //Kafka 服务

- .setBootstrapServers(srcServer)

- //消息主题

- .setTopics(srcTopic)

- //消费组

- .setGroupId(consumerGroup)

- //偏移量 当没有提交偏移量则从最开始开始消费

- .setStartingOffsets(OffsetsInitializer.committedOffsets(OffsetResetStrategy.LATEST))

- //自定义解析消息内容

- .setValueOnlyDeserializer(new AbstractDeserializationSchema<User>() {

- @Override

- public User deserialize(byte[] message) {

- return JSON.parseObject(new String(message, StandardCharsets.UTF_8), User.class);

- }

- })

- .build();

- DataStreamSource<User> kafkaSource = env.fromSource(source, WatermarkStrategy.noWatermarks(), "kafka_maxtixone");

- //kafkaSource.print();

-

- //设置 matrixone sink 信息

- kafkaSource.addSink(JdbcSink.sink(

- "insert into users (id,name,age) values(?,?,?)",

- (JdbcStatementBuilder<User>) (preparedStatement, user) -> {

- preparedStatement.setInt(1, user.getId());

- preparedStatement.setString(2, user.getName());

- preparedStatement.setInt(3, user.getAge());

- },

- JdbcExecutionOptions.builder()

- //默认值 5000

- .withBatchSize(1000)

- //默认值为 0

- .withBatchIntervalMs(200)

- //最大尝试次数

- .withMaxRetries(5)

- .build(),

- JdbcConnectorOptions.builder()

- .setDBUrl("jdbc:mysql://"+destHost+":"+destPort+"/"+destDataBase)

- .setUsername(destUserName)

- .setPassword(destPassword)

- .setDriverName("com.mysql.cj.jdbc.Driver")

- .setTableName(destTable)

- .build()

- ));

- env.execute();

- }

-}

-```

-

-代码编写完成后,你可以运行 Flink 任务,即在 IDEA 中选择 `Kafka2Mo.java` 文件,然后执行 `Kafka2Mo.Main()`。

-

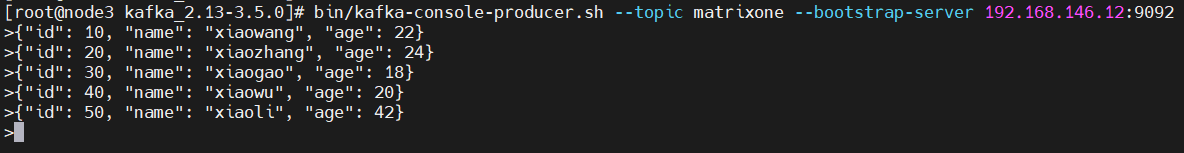

-### 步骤四:生成数据

-

-使用 Kafka 提供的命令行生产者工具,您可以向 Kafka 的 "matrixone" 主题中添加数据。在下面的命令中,使用 `--topic` 参数指定要添加到的主题,而 `--bootstrap-server` 参数指定了 Kafka 服务的监听地址。

-

-```shell

-bin/kafka-console-producer.sh --topic matrixone --bootstrap-server 192.168.146.12:9092

-```

-

-执行上述命令后,您将在控制台上等待输入消息内容。只需直接输入消息值 (value),每行表示一条消息(以换行符分隔),如下所示:

-

-```shell

-{"id": 10, "name": "xiaowang", "age": 22}

-{"id": 20, "name": "xiaozhang", "age": 24}

-{"id": 30, "name": "xiaogao", "age": 18}

-{"id": 40, "name": "xiaowu", "age": 20}

-{"id": 50, "name": "xiaoli", "age": 42}

-```

-

-

-

-### 步骤五:查看执行结果

-

-在 MatrixOne 中执行如下 SQL 查询结果:

-

-```sql

-mysql> select * from test.users;

-+------+-----------+------+

-| id | name | age |

-+------+-----------+------+

-| 10 | xiaowang | 22 |

-| 20 | xiaozhang | 24 |

-| 30 | xiaogao | 18 |

-| 40 | xiaowu | 20 |

-| 50 | xiaoli | 42 |

-+------+-----------+------+

-5 rows in set (0.01 sec)

-```

diff --git a/docs/MatrixOne/Develop/Ecological-Tools/Computing-Engine/Flink/flink-kafka-matrixone.md b/docs/MatrixOne/Develop/Ecological-Tools/Computing-Engine/Flink/flink-kafka-matrixone.md

new file mode 100644

index 0000000000..6cea267c8e

--- /dev/null

+++ b/docs/MatrixOne/Develop/Ecological-Tools/Computing-Engine/Flink/flink-kafka-matrixone.md

@@ -0,0 +1,363 @@

+# 使用 Flink 将 Kafka 数据写入 MatrixOne

+

+本章节将介绍如何使用 Flink 将 Kafka 数据写入到 MatrixOne。

+

+## 前期准备

+

+本次实践需要安装部署以下软件环境:

+

+- 完成[单机部署 MatrixOne](https://docs.matrixorigin.cn/1.2.1/MatrixOne/Get-Started/install-standalone-matrixone/)。

+- 下载安装 [lntelliJ IDEA(2022.2.1 or later version)](https://www.jetbrains.com/idea/download/)。

+- 根据你的系统环境选择 [JDK 8+ version](https://www.oracle.com/sg/java/technologies/javase/javase8-archive-downloads.html) 版本进行下载安装。

+- 下载并安装 [Kafka](https://archive.apache.org/dist/kafka/3.5.0/kafka_2.13-3.5.0.tgz)。

+- 下载并安装 [Flink](https://archive.apache.org/dist/flink/flink-1.17.0/flink-1.17.0-bin-scala_2.12.tgz),最低支持版本为 1.11。

+- 下载并安装 [MySQL Client](https://dev.mysql.com/downloads/mysql)。

+

+## 操作步骤

+

+### 步骤一:启动 Kafka 服务

+

+Kafka 集群协调和元数据管理可以通过 KRaft 或 ZooKeeper 来实现。在这里,我们将使用 Kafka 3.5.0 版本,无需依赖独立的 ZooKeeper 软件,而是使用 Kafka 自带的 **KRaft** 来进行元数据管理。请按照以下步骤配置配置文件,该文件位于 Kafka 软件根目录下的 `config/kraft/server.properties`。

+

+配置文件内容如下:

+

+```properties

+# Licensed to the Apache Software Foundation (ASF) under one or more

+# contributor license agreements. See the NOTICE file distributed with

+# this work for additional information regarding copyright ownership.

+# The ASF licenses this file to You under the Apache License, Version 2.0

+# (the "License"); you may not use this file except in compliance with

+# the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+#

+# This configuration file is intended for use in KRaft mode, where

+# Apache ZooKeeper is not present. See config/kraft/README.md for details.

+#

+

+############################# Server Basics #############################

+

+# The role of this server. Setting this puts us in KRaft mode

+process.roles=broker,controller

+

+# The node id associated with this instance's roles

+node.id=1

+

+# The connect string for the controller quorum

+controller.quorum.voters=1@xx.xx.xx.xx:9093

+

+############################# Socket Server Settings #############################

+

+# The address the socket server listens on.

+# Combined nodes (i.e. those with `process.roles=broker,controller`) must list the controller listener here at a minimum.

+# If the broker listener is not defined, the default listener will use a host name that is equal to the value of java.net.InetAddress.getCanonicalHostName(),

+# with PLAINTEXT listener name, and port 9092.

+# FORMAT:

+# listeners = listener_name://host_name:port

+# EXAMPLE:

+# listeners = PLAINTEXT://your.host.name:9092

+#listeners=PLAINTEXT://:9092,CONTROLLER://:9093

+listeners=PLAINTEXT://xx.xx.xx.xx:9092,CONTROLLER://xx.xx.xx.xx:9093

+

+# Name of listener used for communication between brokers.

+inter.broker.listener.name=PLAINTEXT

+

+# Listener name, hostname and port the broker will advertise to clients.

+# If not set, it uses the value for "listeners".

+#advertised.listeners=PLAINTEXT://localhost:9092

+

+# A comma-separated list of the names of the listeners used by the controller.

+# If no explicit mapping set in `listener.security.protocol.map`, default will be using PLAINTEXT protocol

+# This is required if running in KRaft mode.

+controller.listener.names=CONTROLLER

+

+# Maps listener names to security protocols, the default is for them to be the same. See the config documentation for more details

+listener.security.protocol.map=CONTROLLER:PLAINTEXT,PLAINTEXT:PLAINTEXT,SSL:SSL,SASL_PLAINTEXT:SASL_PLAINTEXT,SASL_SSL:SASL_SSL

+

+# The number of threads that the server uses for receiving requests from the network and sending responses to the network

+num.network.threads=3

+

+# The number of threads that the server uses for processing requests, which may include disk I/O

+num.io.threads=8

+

+# The send buffer (SO_SNDBUF) used by the socket server

+socket.send.buffer.bytes=102400

+

+# The receive buffer (SO_RCVBUF) used by the socket server

+socket.receive.buffer.bytes=102400

+

+# The maximum size of a request that the socket server will accept (protection against OOM)

+socket.request.max.bytes=104857600

+

+

+############################# Log Basics #############################

+

+# A comma separated list of directories under which to store log files

+log.dirs=/home/software/kafka_2.13-3.5.0/kraft-combined-logs

+

+# The default number of log partitions per topic. More partitions allow greater

+# parallelism for consumption, but this will also result in more files across

+# the brokers.

+num.partitions=1

+

+# The number of threads per data directory to be used for log recovery at startup and flushing at shutdown.

+# This value is recommended to be increased for installations with data dirs located in RAID array.

+num.recovery.threads.per.data.dir=1

+

+############################# Internal Topic Settings #############################

+# The replication factor for the group metadata internal topics "__consumer_offsets" and "__transaction_state"

+# For anything other than development testing, a value greater than 1 is recommended to ensure availability such as 3.

+offsets.topic.replication.factor=1

+transaction.state.log.replication.factor=1

+transaction.state.log.min.isr=1

+

+############################# Log Flush Policy #############################

+

+# Messages are immediately written to the filesystem but by default we only fsync() to sync

+# the OS cache lazily. The following configurations control the flush of data to disk.

+# There are a few important trade-offs here:

+# 1. Durability: Unflushed data may be lost if you are not using replication.

+# 2. Latency: Very large flush intervals may lead to latency spikes when the flush does occur as there will be a lot of data to flush.

+# 3. Throughput: The flush is generally the most expensive operation, and a small flush interval may lead to excessive seeks.

+# The settings below allow one to configure the flush policy to flush data after a period of time or

+# every N messages (or both). This can be done globally and overridden on a per-topic basis.

+

+# The number of messages to accept before forcing a flush of data to disk

+#log.flush.interval.messages=10000

+

+# The maximum amount of time a message can sit in a log before we force a flush

+#log.flush.interval.ms=1000

+

+############################# Log Retention Policy #############################

+

+# The following configurations control the disposal of log segments. The policy can

+# be set to delete segments after a period of time, or after a given size has accumulated.

+# A segment will be deleted whenever *either* of these criteria are met. Deletion always happens

+# from the end of the log.

+

+# The minimum age of a log file to be eligible for deletion due to age

+log.retention.hours=72

+

+# A size-based retention policy for logs. Segments are pruned from the log unless the remaining

+# segments drop below log.retention.bytes. Functions independently of log.retention.hours.

+#log.retention.bytes=1073741824

+

+# The maximum size of a log segment file. When this size is reached a new log segment will be created.

+log.segment.bytes=1073741824

+

+# The interval at which log segments are checked to see if they can be deleted according

+# to the retention policies

+log.retention.check.interval.ms=300000

+```

+

+文件配置完成后,执行如下命令,启动 Kafka 服务:

+

+```shell

+#生成集群ID

+$ KAFKA_CLUSTER_ID="$(bin/kafka-storage.sh random-uuid)"

+#设置日志目录格式

+$ bin/kafka-storage.sh format -t $KAFKA_CLUSTER_ID -c config/kraft/server.properties

+#启动Kafka服务

+$ bin/kafka-server-start.sh config/kraft/server.properties

+```

+

+### 步骤二:创建 Kafka 主题

+

+为了使 Flink 能够从中读取数据并写入到 MatrixOne,我们需要首先创建一个名为 "matrixone" 的 Kafka 主题。在下面的命令中,使用 `--bootstrap-server` 参数指定 Kafka 服务的监听地址为 `xx.xx.xx.xx:9092`:

+

+```shell

+$ bin/kafka-topics.sh --create --topic matrixone --bootstrap-server xx.xx.xx.xx:9092

+```

+

+### 步骤三:读取 MatrixOne 数据

+

+在连接到 MatrixOne 数据库之后,需要执行以下操作以创建所需的数据库和数据表:

+

+1. 在 MatrixOne 中创建数据库和数据表,并导入数据:

+

+ ```sql

+ CREATE TABLE `users` (

+ `id` INT DEFAULT NULL,

+ `name` VARCHAR(255) DEFAULT NULL,

+ `age` INT DEFAULT NULL

+ )

+ ```

+

+2. 在 IDEA 集成开发环境中编写代码:

+

+ 在 IDEA 中,创建两个类:`User.java` 和 `Kafka2Mo.java`。这些类用于使用 Flink 从 Kafka 读取数据,并将数据写入 MatrixOne 数据库中。

+

+```java

+package com.matrixone.flink.demo.entity;

+

+public class User {

+

+ private int id;

+ private String name;

+ private int age;

+

+ public int getId() {

+ return id;

+ }

+

+ public void setId(int id) {

+ this.id = id;

+ }

+

+ public String getName() {

+ return name;

+ }

+

+ public void setName(String name) {

+ this.name = name;

+ }

+

+ public int getAge() {

+ return age;

+ }

+

+ public void setAge(int age) {

+ this.age = age;

+ }

+}

+```

+

+```java

+package com.matrixone.flink.demo;

+

+import com.alibaba.fastjson2.JSON;

+import com.matrixone.flink.demo.entity.User;

+import org.apache.flink.api.common.eventtime.WatermarkStrategy;

+import org.apache.flink.api.common.serialization.AbstractDeserializationSchema;

+import org.apache.flink.connector.jdbc.JdbcExecutionOptions;

+import org.apache.flink.connector.jdbc.JdbcSink;

+import org.apache.flink.connector.jdbc.JdbcStatementBuilder;

+import org.apache.flink.connector.jdbc.internal.options.JdbcConnectorOptions;

+import org.apache.flink.connector.kafka.source.KafkaSource;

+import org.apache.flink.connector.kafka.source.enumerator.initializer.OffsetsInitializer;

+import org.apache.flink.streaming.api.datastream.DataStreamSource;

+import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

+import org.apache.kafka.clients.consumer.OffsetResetStrategy;

+

+import java.nio.charset.StandardCharsets;

+

+/**

+ * @author MatrixOne

+ * @desc

+ */

+public class Kafka2Mo {

+

+ private static String srcServer = "xx.xx.xx.xx:9092";

+ private static String srcTopic = "matrixone";

+ private static String consumerGroup = "matrixone_group";

+

+ private static String destHost = "xx.xx.xx.xx";

+ private static Integer destPort = 6001;

+ private static String destUserName = "root";

+ private static String destPassword = "111";

+ private static String destDataBase = "test";

+ private static String destTable = "person";

+

+ public static void main(String[] args) throws Exception {

+

+ //初始化环境

+ StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

+ //设置并行度

+ env.setParallelism(1);

+

+ //设置 kafka source 信息

+ KafkaSource<User> source = KafkaSource.<User>builder()

+ //Kafka 服务

+ .setBootstrapServers(srcServer)

+ //消息主题

+ .setTopics(srcTopic)

+ //消费组

+ .setGroupId(consumerGroup)

+ //偏移量 当没有提交偏移量则从最开始开始消费

+ .setStartingOffsets(OffsetsInitializer.committedOffsets(OffsetResetStrategy.LATEST))

+ //自定义解析消息内容

+ .setValueOnlyDeserializer(new AbstractDeserializationSchema<User>() {

+ @Override

+ public User deserialize(byte[] message) {

+ return JSON.parseObject(new String(message, StandardCharsets.UTF_8), User.class);

+ }

+ })

+ .build();

+ DataStreamSource<User> kafkaSource = env.fromSource(source, WatermarkStrategy.noWatermarks(), "kafka_maxtixone");

+ //kafkaSource.print();

+

+ //设置 matrixone sink 信息

+ kafkaSource.addSink(JdbcSink.sink(

+ "insert into users (id,name,age) values(?,?,?)",

+ (JdbcStatementBuilder<User>) (preparedStatement, user) -> {

+ preparedStatement.setInt(1, user.getId());

+ preparedStatement.setString(2, user.getName());

+ preparedStatement.setInt(3, user.getAge());

+ },

+ JdbcExecutionOptions.builder()

+ //默认值 5000

+ .withBatchSize(1000)

+ //默认值为 0

+ .withBatchIntervalMs(200)

+ //最大尝试次数

+ .withMaxRetries(5)

+ .build(),

+ JdbcConnectorOptions.builder()

+ .setDBUrl("jdbc:mysql://"+destHost+":"+destPort+"/"+destDataBase)

+ .setUsername(destUserName)

+ .setPassword(destPassword)

+ .setDriverName("com.mysql.cj.jdbc.Driver")

+ .setTableName(destTable)

+ .build()

+ ));

+ env.execute();

+ }

+}

+```

+

+代码编写完成后,你可以运行 Flink 任务,即在 IDEA 中选择 `Kafka2Mo.java` 文件,然后执行 `Kafka2Mo.Main()`。

+

+### 步骤四:生成数据

+

+使用 Kafka 提供的命令行生产者工具,您可以向 Kafka 的 "matrixone" 主题中添加数据。在下面的命令中,使用 `--topic` 参数指定要添加到的主题,而 `--bootstrap-server` 参数指定了 Kafka 服务的监听地址。

+

+```shell

+bin/kafka-console-producer.sh --topic matrixone --bootstrap-server xx.xx.xx.xx:9092

+```

+

+执行上述命令后,您将在控制台上等待输入消息内容。只需直接输入消息值 (value),每行表示一条消息(以换行符分隔),如下所示:

+

+```shell

+{"id": 10, "name": "xiaowang", "age": 22}

+{"id": 20, "name": "xiaozhang", "age": 24}

+{"id": 30, "name": "xiaogao", "age": 18}

+{"id": 40, "name": "xiaowu", "age": 20}

+{"id": 50, "name": "xiaoli", "age": 42}

+```

+

+

+

+### 步骤五:查看执行结果

+

+在 MatrixOne 中执行如下 SQL 查询结果:

+

+```sql

+mysql> select * from test.users;

++------+-----------+------+

+| id | name | age |

++------+-----------+------+

+| 10 | xiaowang | 22 |

+| 20 | xiaozhang | 24 |

+| 30 | xiaogao | 18 |

+| 40 | xiaowu | 20 |

+| 50 | xiaoli | 42 |

++------+-----------+------+

+5 rows in set (0.01 sec)

+```

diff --git a/docs/MatrixOne/Develop/Ecological-Tools/Computing-Engine/Flink/flink-mongo-matrixone.md b/docs/MatrixOne/Develop/Ecological-Tools/Computing-Engine/Flink/flink-mongo-matrixone.md

new file mode 100644

index 0000000000..44d131f634

--- /dev/null

+++ b/docs/MatrixOne/Develop/Ecological-Tools/Computing-Engine/Flink/flink-mongo-matrixone.md

@@ -0,0 +1,155 @@

+# 使用 Flink 将 MongoDB 数据写入 MatrixOne

+

+本章节将介绍如何使用 Flink 将 MongoDB 数据写入到 MatrixOne。

+

+## 前期准备

+

+本次实践需要安装部署以下软件环境:

+

+- 完成[单机部署 MatrixOne](https://docs.matrixorigin.cn/1.2.1/MatrixOne/Get-Started/install-standalone-matrixone/)。

+- 下载安装 [lntelliJ IDEA(2022.2.1 or later version)](https://www.jetbrains.com/idea/download/)。

+- 根据你的系统环境选择 [JDK 8+ version](https://www.oracle.com/sg/java/technologies/javase/javase8-archive-downloads.html) 版本进行下载安装。

+- 下载并安装 [Flink](https://archive.apache.org/dist/flink/flink-1.17.0/flink-1.17.0-bin-scala_2.12.tgz),最低支持版本为 1.11。

+- 下载并安装 [MongoDB](https://www.mongodb.com/)。

+- 下载并安装 [MySQL](https://downloads.mysql.com/archives/get/p/23/file/mysql-server_8.0.33-1ubuntu23.04_amd64.deb-bundle.tar),推荐版本为 8.0.33。

+

+## 操作步骤

+

+### 开启 Mongodb 副本集模式

+

+关闭命令:

+

+```bash

+mongod -f /opt/software/mongodb/conf/config.conf --shutdown

+```

+

+在 /opt/software/mongodb/conf/config.conf 中增加以下参数

+

+```shell

+replication:

+replSetName: rs0 #复制集名称

+```

+

+重新开启 mangod

+

+```bash

+mongod -f /opt/software/mongodb/conf/config.conf

+```

+

+然后进入 mongo 执行 `rs.initiate()` 然后执行 `rs.status()`

+

+```shell

+> rs.initiate()

+{

+"info2" : "no configuration specified. Using a default configuration for the set",

+"me" : "xx.xx.xx.xx:27017",

+"ok" : 1

+}

+rs0:SECONDARY> rs.status()

+```

+

+看到以下相关信息说明复制集启动成功

+

+```bash

+"members" : [

+{

+"_id" : 0,

+"name" : "xx.xx.xx.xx:27017",

+"health" : 1,

+"state" : 1,

+"stateStr" : "PRIMARY",

+"uptime" : 77,

+"optime" : {

+"ts" : Timestamp(1665998544, 1),

+"t" : NumberLong(1)

+},

+"optimeDate" : ISODate("2022-10-17T09:22:24Z"),

+"syncingTo" : "",

+"syncSourceHost" : "",

+"syncSourceId" : -1,

+"infoMessage" : "could not find member to sync from",

+"electionTime" : Timestamp(1665998504, 2),

+"electionDate" : ISODate("2022-10-17T09:21:44Z"),

+"configVersion" : 1,

+"self" : true,

+"lastHeartbeatMessage" : ""

+}

+],

+"ok" : 1,

+

+rs0:PRIMARY> show dbs

+admin 0.000GB

+config 0.000GB

+local 0.000GB

+test 0.000GB

+```

+

+### 在 flinkcdc sql 界面建立 source 表(mongodb)

+

+在 flink 目录下的 lib 目录下执行,下载 mongodb 的 cdcjar 包

+

+```bash

+wget <https://repo1.maven.org/maven2/com/ververica/flink-sql-connector-mongodb-cdc/2.2.1/flink-sql-connector-mongodb-cdc-2.2.1.jar>

+```

+

+建立数据源 mongodb 的映射表,列名也必须一模一样

+

+```sql

+CREATE TABLE products (

+ _id STRING,#必须有这一列,也必须为主键,因为mongodb会给每行数据自动生成一个id

+ `name` STRING,

+ age INT,

+ PRIMARY KEY(_id) NOT ENFORCED

+) WITH (

+ 'connector' = 'mongodb-cdc',

+ 'hosts' = 'xx.xx.xx.xx:27017',

+ 'username' = 'root',

+ 'password' = '',

+ 'database' = 'test',

+ 'collection' = 'test'

+);

+```

+

+建立完成后可以执行 `select * from products;` 查下是否连接成功

+

+### 在 flinkcdc sql 界面建立 sink 表(MatrixOne)

+

+建立 matrixone 的映射表,表结构需相同,不需要带 id 列

+

+```sql

+CREATE TABLE cdc_matrixone (

+ `name` STRING,

+ age INT,

+ PRIMARY KEY (`name`) NOT ENFORCED

+)WITH (

+'connector' = 'jdbc',

+'url' = 'jdbc:mysql://xx.xx.xx.xx:6001/test',

+'driver' = 'com.mysql.cj.jdbc.Driver',

+'username' = 'root',

+'password' = '111',

+'table-name' = 'mongodbtest'

+);

+```

+

+### 开启 cdc 同步任务

+

+这里同步任务开启后,mongodb 增删改的操作均可同步

+

+```sql

+INSERT INTO cdc_matrixone SELECT `name`,age FROM products;

+

+#增加

+rs0:PRIMARY> db.test.insert({"name" : "ddd", "age" : 90})

+WriteResult({ "nInserted" : 1 })

+rs0:PRIMARY> db.test.find()

+{ "_id" : ObjectId("6347e3c6229d6017c82bf03d"), "name" : "aaa", "age" : 20 }

+{ "_id" : ObjectId("6347e64a229d6017c82bf03e"), "name" : "bbb", "age" : 18 }

+{ "_id" : ObjectId("6347e652229d6017c82bf03f"), "name" : "ccc", "age" : 28 }

+{ "_id" : ObjectId("634d248f10e21b45c73b1a36"), "name" : "ddd", "age" : 90 }

+#修改

+rs0:PRIMARY> db.test.update({'name':'ddd'},{$set:{'age':'99'}})

+WriteResult({ "nMatched" : 1, "nUpserted" : 0, "nModified" : 1 })

+#删除

+rs0:PRIMARY> db.test.remove({'name':'ddd'})

+WriteResult({ "nRemoved" : 1 })

+```

\ No newline at end of file

diff --git a/docs/MatrixOne/Develop/Ecological-Tools/Computing-Engine/Flink/flink-mysql-matrixone.md b/docs/MatrixOne/Develop/Ecological-Tools/Computing-Engine/Flink/flink-mysql-matrixone.md

new file mode 100644

index 0000000000..26e727addb

--- /dev/null

+++ b/docs/MatrixOne/Develop/Ecological-Tools/Computing-Engine/Flink/flink-mysql-matrixone.md

@@ -0,0 +1,432 @@

+# 使用 Flink 将 MySQL 数据写入 MatrixOne

+

+本章节将介绍如何使用 Flink 将 MySQL 数据写入到 MatrixOne。

+

+## 前期准备

+

+本次实践需要安装部署以下软件环境:

+

+- 完成[单机部署 MatrixOne](https://docs.matrixorigin.cn/1.2.1/MatrixOne/Get-Started/install-standalone-matrixone/)。

+- 下载安装 [lntelliJ IDEA(2022.2.1 or later version)](https://www.jetbrains.com/idea/download/)。

+- 根据你的系统环境选择 [JDK 8+ version](https://www.oracle.com/sg/java/technologies/javase/javase8-archive-downloads.html) 版本进行下载安装。

+- 下载并安装 [Flink](https://archive.apache.org/dist/flink/flink-1.17.0/flink-1.17.0-bin-scala_2.12.tgz),最低支持版本为 1.11。

+- 下载并安装 [MySQL](https://downloads.mysql.com/archives/get/p/23/file/mysql-server_8.0.33-1ubuntu23.04_amd64.deb-bundle.tar),推荐版本为 8.0.33。

+

+## 操作步骤

+

+### 步骤一:初始化项目

+

+1. 打开 IDEA,点击 **File > New > Project**,选择 **Spring Initializer**,并填写以下配置参数:

+

+ - **Name**:matrixone-flink-demo

+ - **Location**:~\Desktop

+ - **Language**:Java

+ - **Type**:Maven

+ - **Group**:com.example

+ - **Artifact**:matrixone-flink-demo

+ - **Package name**:com.matrixone.flink.demo

+ - **JDK** 1.8

+

+ 配置示例如下图所示:

+

+ <div align="center">

+ <img src=https://community-shared-data-1308875761.cos.ap-beijing.myqcloud.com/artwork/docs/develop/flink/matrixone-flink-demo.png width=50% heigth=50%/>

+ </div>

+

+2. 添加项目依赖,编辑项目根目录下的 `pom.xml` 文件,将以下内容添加到文件中:

+

+```xml

+<?xml version="1.0" encoding="UTF-8"?>

+<project xmlns="http://maven.apache.org/POM/4.0.0"

+ xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

+ xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

+ <modelVersion>4.0.0</modelVersion>

+

+ <groupId>com.matrixone.flink</groupId>

+ <artifactId>matrixone-flink-demo</artifactId>

+ <version>1.0-SNAPSHOT</version>

+

+ <properties>

+ <scala.binary.version>2.12</scala.binary.version>

+ <java.version>1.8</java.version>

+ <flink.version>1.17.0</flink.version>

+ <scope.mode>compile</scope.mode>

+ </properties>

+

+ <dependencies>

+

+ <!-- Flink Dependency -->

+ <dependency>

+ <groupId>org.apache.flink</groupId>

+ <artifactId>flink-connector-hive_2.12</artifactId>

+ <version>${flink.version}</version>

+ </dependency>

+

+ <dependency>

+ <groupId>org.apache.flink</groupId>

+ <artifactId>flink-java</artifactId>

+ <version>${flink.version}</version>

+ </dependency>

+

+ <dependency>

+ <groupId>org.apache.flink</groupId>

+ <artifactId>flink-streaming-java</artifactId>

+ <version>${flink.version}</version>

+ </dependency>

+

+ <dependency>

+ <groupId>org.apache.flink</groupId>

+ <artifactId>flink-clients</artifactId>

+ <version>${flink.version}</version>

+ </dependency>

+

+ <dependency>

+ <groupId>org.apache.flink</groupId>

+ <artifactId>flink-table-api-java-bridge</artifactId>

+ <version>${flink.version}</version>

+ </dependency>

+

+ <dependency>

+ <groupId>org.apache.flink</groupId>

+ <artifactId>flink-table-planner_2.12</artifactId>

+ <version>${flink.version}</version>

+ </dependency>

+

+ <!-- JDBC相关依赖包 -->

+ <dependency>

+ <groupId>org.apache.flink</groupId>

+ <artifactId>flink-connector-jdbc</artifactId>

+ <version>1.15.4</version>

+ </dependency>

+ <dependency>

+ <groupId>mysql</groupId>

+ <artifactId>mysql-connector-java</artifactId>

+ <version>8.0.33</version>

+ </dependency>

+

+ <!-- Kafka相关依赖 -->

+ <dependency>

+ <groupId>org.apache.kafka</groupId>

+ <artifactId>kafka_2.13</artifactId>

+ <version>3.5.0</version>

+ </dependency>

+ <dependency>

+ <groupId>org.apache.flink</groupId>

+ <artifactId>flink-connector-kafka</artifactId>

+ <version>3.0.0-1.17</version>

+ </dependency>

+

+ <!-- JSON -->

+ <dependency>

+ <groupId>com.alibaba.fastjson2</groupId>

+ <artifactId>fastjson2</artifactId>

+ <version>2.0.34</version>

+ </dependency>

+

+ </dependencies>

+

+

+

+

+ <build>

+ <plugins>

+ <plugin>

+ <groupId>org.apache.maven.plugins</groupId>

+ <artifactId>maven-compiler-plugin</artifactId>

+ <version>3.8.0</version>

+ <configuration>

+ <source>${java.version}</source>

+ <target>${java.version}</target>

+ <encoding>UTF-8</encoding>

+ </configuration>

+ </plugin>

+ <plugin>

+ <artifactId>maven-assembly-plugin</artifactId>

+ <version>2.6</version>

+ <configuration>

+ <descriptorRefs>

+ <descriptor>jar-with-dependencies</descriptor>

+ </descriptorRefs>

+ </configuration>

+ <executions>

+ <execution>

+ <id>make-assembly</id>

+ <phase>package</phase>

+ <goals>

+ <goal>single</goal>

+ </goals>

+ </execution>

+ </executions>

+ </plugin>

+

+ </plugins>

+ </build>

+

+</project>

+```

+

+### 步骤二:读取 MatrixOne 数据

+

+使用 MySQL 客户端连接 MatrixOne 后,创建演示所需的数据库以及数据表。

+

+1. 在 MatrixOne 中创建数据库、数据表,并导入数据:

+

+ ```SQL

+ CREATE DATABASE test;

+ USE test;

+ CREATE TABLE `person` (`id` INT DEFAULT NULL, `name` VARCHAR(255) DEFAULT NULL, `birthday` DATE DEFAULT NULL);

+ INSERT INTO test.person (id, name, birthday) VALUES(1, 'zhangsan', '2023-07-09'),(2, 'lisi', '2023-07-08'),(3, 'wangwu', '2023-07-12');

+ ```

+

+2. 在 IDEA 中创建 `MoRead.java` 类,以使用 Flink 读取 MatrixOne 数据:

+

+ ```java

+ package com.matrixone.flink.demo;

+

+ import org.apache.flink.api.common.functions.MapFunction;

+ import org.apache.flink.api.common.typeinfo.BasicTypeInfo;

+ import org.apache.flink.api.java.ExecutionEnvironment;

+ import org.apache.flink.api.java.operators.DataSource;

+ import org.apache.flink.api.java.operators.MapOperator;

+ import org.apache.flink.api.java.typeutils.RowTypeInfo;

+ import org.apache.flink.connector.jdbc.JdbcInputFormat;

+ import org.apache.flink.types.Row;

+

+ import java.text.SimpleDateFormat;

+

+ /**

+ * @author MatrixOne

+ * @description

+ */

+ public class MoRead {

+

+ private static String srcHost = "xx.xx.xx.xx";

+ private static Integer srcPort = 6001;

+ private static String srcUserName = "root";

+ private static String srcPassword = "111";

+ private static String srcDataBase = "test";

+

+ public static void main(String[] args) throws Exception {

+

+ ExecutionEnvironment environment = ExecutionEnvironment.getExecutionEnvironment();

+ // 设置并行度

+ environment.setParallelism(1);

+ SimpleDateFormat sdf = new SimpleDateFormat("yyyy-MM-dd");

+

+ // 设置查询的字段类型

+ RowTypeInfo rowTypeInfo = new RowTypeInfo(

+ new BasicTypeInfo[]{

+ BasicTypeInfo.INT_TYPE_INFO,

+ BasicTypeInfo.STRING_TYPE_INFO,

+ BasicTypeInfo.DATE_TYPE_INFO

+ },

+ new String[]{

+ "id",

+ "name",

+ "birthday"

+ }

+ );

+

+ DataSource<Row> dataSource = environment.createInput(JdbcInputFormat.buildJdbcInputFormat()

+ .setDrivername("com.mysql.cj.jdbc.Driver")

+ .setDBUrl("jdbc:mysql://" + srcHost + ":" + srcPort + "/" + srcDataBase)

+ .setUsername(srcUserName)

+ .setPassword(srcPassword)

+ .setQuery("select * from person")

+ .setRowTypeInfo(rowTypeInfo)

+ .finish());

+

+ // 将 Wed Jul 12 00:00:00 CST 2023 日期格式转换为 2023-07-12

+ MapOperator<Row, Row> mapOperator = dataSource.map((MapFunction<Row, Row>) row -> {

+ row.setField("birthday", sdf.format(row.getField("birthday")));

+ return row;

+ });

+

+ mapOperator.print();

+ }

+ }

+ ```

+

+3. 在 IDEA 中运行 `MoRead.Main()`,执行结果如下:

+

+

+

+### 步骤三:将 MySQL 数据写入 MatrixOne

+

+现在可以开始使用 Flink 将 MySQL 数据迁移到 MatrixOne。

+

+1. 准备 MySQL 数据:在 node3 上,使用 Mysql 客户端连接本地 Mysql,创建所需数据库、数据表、并插入数据:

+

+ ```sql

+ mysql -h127.0.0.1 -P3306 -uroot -proot

+ mysql> CREATE DATABASE motest;

+ mysql> USE motest;

+ mysql> CREATE TABLE `person` (`id` int DEFAULT NULL, `name` varchar(255) DEFAULT NULL, `birthday` date DEFAULT NULL);