# -*- coding: utf-8 -*-
from __future__ import print_function

import math
import torch
import torchvision.transforms as transforms
from torch.distributed import get_world_size, get_rank
from torch.utils.data.sampler import Sampler
from torchvision import datasets
from tqdm import tqdm
from torch.autograd import Variable
import torch.nn as nn
import os

print('MASTER_ADDR', os.environ['MASTER_ADDR'])
print('MASTER_PORT', os.environ['MASTER_PORT'])
print('RANK', os.environ['RANK'])
print('WORLD_SIZE', os.environ['WORLD_SIZE'])

torch.distributed.init_process_group(backend='nccl')

class DistributedSampler(Sampler):
    """Sampler that restricts data loading to a subset of the dataset.

    It is especially useful in conjunction with
    :class:`torch.nn.parallel.DistributedDataParallel`. In such case, each
    process can pass a DistributedSampler instance as a DataLoader sampler,
    and load a subset of the original dataset that is exclusive to it.

    .. note::
        Dataset is assumed to be of constant size.

    Arguments:
        dataset: Dataset used for sampling.
        num_replicas (optional): Number of processes participating in
            distributed training.
        rank (optional): Rank of the current process within num_replicas.
    """

    def __init__(self, dataset, num_replicas=None, rank=None):
        if num_replicas is None:
            num_replicas = get_world_size()
        if rank is None:
            rank = get_rank()
        self.dataset = dataset
        self.num_replicas = num_replicas
        self.rank = rank
        self.epoch = 0
        self.num_samples = int(math.ceil(len(self.dataset) * 1.0 / self.num_replicas))
        self.total_size = self.num_samples * self.num_replicas

    def __iter__(self):
        # deterministically shuffle based on epoch
        g = torch.Generator()
        g.manual_seed(self.epoch)
        indices = list(torch.randperm(len(self.dataset), generator=g))

        # add extra samples to make it evenly divisible
        indices += indices[:(self.total_size - len(indices))]
        assert len(indices) == self.total_size

        # subsample
        offset = self.num_samples * self.rank
        indices = indices[offset:offset + self.num_samples]
        assert len(indices) == self.num_samples

        return iter(indices)

    def __len__(self):
        return self.num_samples

    def set_epoch(self, epoch):
        self.epoch = epoch


transform = transforms.Compose(
    [transforms.ToTensor(),
     transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])

trainset = datasets.CIFAR10(
    root="./data/volume1/", train=True, download=True, transform=transform)
train_sampler = DistributedSampler(trainset)
trainloader = torch.utils.data.DataLoader(
    trainset, batch_size=32, sampler=train_sampler, num_workers=2, drop_last=True)

testset = datasets.CIFAR10(
    root="./data/volume1/", train=False, download=True, transform=transform)
testloader = torch.utils.data.DataLoader(
    testset, batch_size=4, shuffle=False, num_workers=2, drop_last=True)

classes = (
    'plane', 'car', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck')

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(3, 6, 5)
        self.bn1 = nn.BatchNorm2d(6)
        self.pool = nn.MaxPool2d(2, 2)
        self.conv2 = nn.Conv2d(6, 16, 5)
        self.fc1 = nn.Linear(16 * 5 * 5, 1200)
        self.fc2 = nn.Linear(1200, 840)
        self.fc3 = nn.Linear(840, 10)
        self.relu = nn.ReLU()

    def forward(self, x):
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)
        x = self.pool(x)
        x = self.pool(self.relu(self.conv2(x)))
        x = x.view(-1, 16 * 5 * 5)
        x = self.relu(self.fc1(x))
        x = self.relu(self.fc2(x))
        x = self.fc3(x)
        return x


net = Net().cuda()  # GPU
# net = Net()
net = torch.nn.parallel.DistributedDataParallel(net)

import torch.optim as optim

criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(net.parameters(), lr=0.01, momentum=0.9)

for epoch in range(1000):  # loop over the dataset multiple times
    trainloader.sampler.set_epoch(epoch)
    running_loss = 0.0
    for i, data in enumerate(tqdm(trainloader), 0):
        # get the inputs
        inputs, labels = data

        # wrap them in Variable
        inputs, labels = Variable(inputs.cuda()), Variable(labels.cuda())  # GPU
        # inputs, labels = Variable(inputs), Variable(labels)

        # zero the parameter gradients
        optimizer.zero_grad()

        # forward + backward + optimize
        outputs = net(inputs)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()

        # print statistics
        running_loss += loss.item()
        if i % 200 == 199:  # print every 200 mini-batches
            print('[%d, %5d] loss: %.3f' %
                  (epoch + 1, i + 1, running_loss / 200))
            running_loss = 0.0

print('Finished Training')

########################################################################
# The results seem pretty good.
#
# Let us look at how the network performs on the whole dataset.

correct = 0
total = 0
for data in testloader:
    images, labels = data
    images, labels = images.cuda(), labels.cuda()  # GPU
    outputs = net(Variable(images))
    _, predicted = torch.max(outputs.data, 1)
    total += labels.size(0)
    correct += (predicted == labels).sum().item()

print('Accuracy of the network on the 10000 test images: %d %%' % (
    100 * correct / total))

########################################################################
# That looks waaay better than chance, which is 10% accuracy (randomly picking
# a class out of 10 classes).
# Seems like the network learnt something.
#
# Hmmm, what are the classes that performed well, and the classes that did
# not perform well:

class_correct = list(0. for i in range(10))
class_total = list(0. for i in range(10))
for data in testloader:
    images, labels = data
    images, labels = images.cuda(), labels.cuda()  # GPU
    outputs = net(Variable(images))
    _, predicted = torch.max(outputs.data, 1)
    c = (predicted == labels).squeeze()
    for i in range(4):
        label = labels[i]
        class_correct[label] += c[i].item()
        class_total[label] += 1

for i in range(10):
    print('Accuracy of %5s : %2d %%' % (
        classes[i], 100 * class_correct[i] / class_total[i]))

example code
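To make the sampler's pad-and-slice logic easier to follow, here is a minimal sketch that reproduces it without PyTorch. The function name `shard_indices` is hypothetical, and it swaps `torch.randperm` for the standard library's `random.Random(epoch).shuffle`, so the actual permutation differs from what the script produces; only the arithmetic is the same.

```python
import math
import random


def shard_indices(dataset_len, num_replicas, rank, epoch):
    """Return the indices one rank would see (hypothetical helper, not from the script)."""
    num_samples = int(math.ceil(dataset_len / num_replicas))
    total_size = num_samples * num_replicas

    # Every rank seeds with the same epoch, so all ranks agree on the permutation.
    # Assumption: random.Random stands in for torch.Generator + torch.randperm.
    indices = list(range(dataset_len))
    random.Random(epoch).shuffle(indices)

    # Pad by repeating the head of the list so it divides evenly across ranks.
    indices += indices[: total_size - len(indices)]

    # Each rank takes one contiguous slice of the padded list.
    offset = num_samples * rank
    return indices[offset : offset + num_samples]


if __name__ == "__main__":
    for r in range(3):
        print(r, shard_indices(10, 3, r, epoch=0))
```

With 10 samples and 3 replicas, each rank gets 4 indices and two indices appear twice because of the padding. Calling `set_epoch` with a new value changes the seed and therefore the shuffle on every rank at once.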

arena command:

arena submit pytorchjob --name=torch-simple --workers=3 --gpus=4 --image=registry.cn-beijing.aliyuncs.com/pai-dlc/pytorch-training:1.4.0PAI-gpu-py37-cu100-ubuntu16.04 --data=pai-dlc:/pai-dlc "cd /pai-dlc; python train.py"

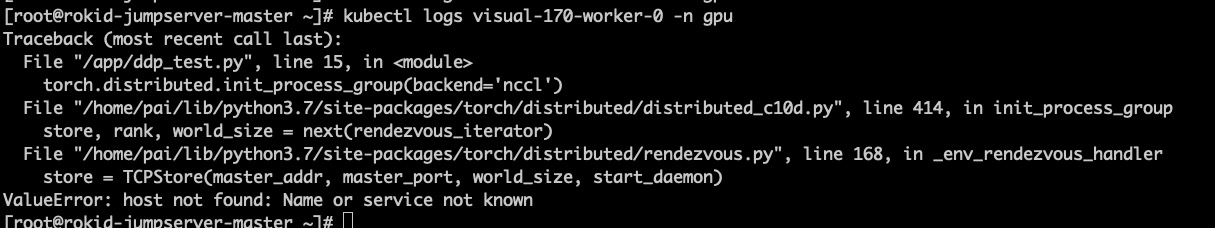

The error occurs when the master pod starts later than the worker pods.

Changing restartPolicy to OnFailure in ~/charts/pytorchjob/templates/pytorchjob.yaml works around the problem.
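The restartPolicy change amounts to retrying the whole process until the master is reachable. As a rough in-process analogue, a retry helper could look like the sketch below. The name `retry` and its parameters are hypothetical, and whether `torch.distributed.init_process_group` can be retried safely inside one process is an assumption that has not been verified against the failure reported here.

```python
import time


def retry(fn, attempts=5, delay_s=1.0, exceptions=(Exception,)):
    """Call fn() until it succeeds or attempts run out, then re-raise the last error.

    Hypothetical helper for illustration; not part of arena or PyTorch.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return fn()
        except exceptions:
            if attempt == attempts:
                raise  # out of attempts: surface the original error
            time.sleep(delay_s)  # fixed delay before the next try
```

One possible use would be `retry(lambda: torch.distributed.init_process_group(backend='nccl'), attempts=10, delay_s=5.0)` in place of the bare call. The restartPolicy workaround remains the only fix confirmed in this report.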

Suggestions:
