
Proposal: RefCount allocation to host many rooms in the same pod #1197

Comments

I've heard a few requests for this sort of enhancement, so it's definitely worth exploring and seeing how it would work. There are a few things I'm concerned about:

There are probably other things that will come up, but thinking through these will be a good place to start.

Definitely need this. I wrote a multi-room system within a single server instance for Unity/Mirror.

@logixworx - Are there any details about that system that you can share (or point to any public documentation)? Do you have any requirements that weren't described in the original post? Do you have any insights that we can leverage in Agones? Do you have any thoughts about the questions I posed above?

My requirements are exactly as described in the original post, as well as in your response. I can't think of anything more to contribute to the discussion at the moment.

So I have quite a few thoughts, and they fall into several categories. This is a topic that has come up quite regularly over the years. I'm going to use the term "Game Session", as it's the term I've grown comfortable with, for a separate session / room within a Game Server. There are some implementation details below, but I'm mostly using them to describe my ideas - please consider them sacrificial drafts.

Configuration

Data Communication Routing

Allocation

Other Thoughts

Would love comments, thoughts and questions on the above.

From an API standpoint, it would be nice to just be able to call something like RequestSession() as often as I need. I have a system that allows us to fully clean up the server after each session, so being able to run the server for as long as possible is better than having to wait for a new container to boot. We could still have a config option that caps the total number of sessions the instance is allowed to create, which would make the sidecar return an error when a session is requested once the limit has been reached. This could fit into draining/updating as well, by returning the error once the instance has been told to drain/update.
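A minimal sketch of the behavior described above, assuming a hypothetical per-instance `SessionManager` with a `RequestSession()` method (neither exists in the Agones SDK). It hands out session IDs until a configured total is reached, and rejects every request once the instance has been told to drain.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

// Errors returned by the hypothetical RequestSession call.
var (
	ErrSessionLimit = errors.New("maximum total sessions reached")
	ErrDraining     = errors.New("instance is draining")
)

// SessionManager is a hypothetical per-instance session counter; it is not part of the Agones SDK.
type SessionManager struct {
	mu          sync.Mutex
	maxSessions int  // configured cap on sessions this instance may ever create
	created     int  // sessions created so far
	draining    bool // set once the instance has been told to drain/update
}

// NewSessionManager returns a manager that allows at most maxSessions sessions in total.
func NewSessionManager(maxSessions int) *SessionManager {
	return &SessionManager{maxSessions: maxSessions}
}

// RequestSession returns a new session ID, or an error once draining or once the cap is reached.
func (m *SessionManager) RequestSession() (int, error) {
	m.mu.Lock()
	defer m.mu.Unlock()
	if m.draining {
		return 0, ErrDraining
	}
	if m.created >= m.maxSessions {
		return 0, ErrSessionLimit
	}
	m.created++
	return m.created, nil
}

// Drain makes every later RequestSession call fail with ErrDraining.
func (m *SessionManager) Drain() {
	m.mu.Lock()
	defer m.mu.Unlock()
	m.draining = true
}

func main() {
	m := NewSessionManager(2)
	for i := 0; i < 3; i++ {
		id, err := m.RequestSession()
		fmt.Println(id, err)
	}
}
```

Checking the drain flag before the cap means a draining instance always reports "draining", which is probably the more useful signal for whoever is asking for a session.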

What is "it" in the above? What exactly would "RequestSession()" be doing here? Is this an SDK-level API, or is it more something like Allocation? One thing I didn't mention above is that I think we would also need a way to self-allocate a Then you can move the Actually I think we are on the same page that the assumption is that in this instance, a

Is there an ETA on this feature? I need it ASAP. Thanks!

@logixworx no ETA as of yet. We've got a variety of users who are interested in this as well, so I would expect it to come at some point this year. We still need to do a complete design document on this, as well as implementation - it's a pretty substantial piece of work, so I expect it will take some months to complete.

Any updates on this feature?

Hi. First of all, our GameServer process has multiple Rooms. Our custom allocator works as follows:

Room Assignment

Shutdown

This approach is simple because the custom allocator only knows about the RefCount and one cached GameServer. Even if the custom allocator loses its state, Agones will assign it a new GameServer, so the entire service will not go down. However, this approach requires the GameServer to implement multiple rooms itself.
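The "RefCount plus one cached GameServer" idea can be sketched roughly as follows. This is a minimal sketch, not the commenter's actual allocator: `allocate` is a hypothetical stand-in for a real Agones allocation request, and `roomsPerServer` is a hypothetical per-server room limit. It covers room assignment only. When the cache is empty (for example after the allocator restarts and loses its state) or the cached server is full, it simply asks for a fresh GameServer.

```go
package main

import (
	"fmt"
	"sync"
)

// RoomAllocator is a hypothetical custom allocator that remembers only one GameServer.
type RoomAllocator struct {
	mu             sync.Mutex
	roomsPerServer int                    // hypothetical per-GameServer room limit
	allocate       func() (string, error) // stand-in for an Agones allocation request
	cached         string                 // GameServer currently receiving new rooms
	refCount       int                    // rooms assigned to the cached GameServer
}

// AssignRoom returns the GameServer that should host the next room.
func (a *RoomAllocator) AssignRoom() (string, error) {
	a.mu.Lock()
	defer a.mu.Unlock()
	// An empty cache (lost state) or a full server both trigger a new allocation.
	if a.cached == "" || a.refCount >= a.roomsPerServer {
		gs, err := a.allocate()
		if err != nil {
			return "", err
		}
		a.cached, a.refCount = gs, 0
	}
	a.refCount++
	return a.cached, nil
}

func main() {
	n := 0
	a := &RoomAllocator{
		roomsPerServer: 2,
		allocate: func() (string, error) {
			n++
			return fmt.Sprintf("gameserver-%d", n), nil
		},
	}
	for i := 0; i < 5; i++ {
		gs, err := a.AssignRoom()
		if err != nil {
			panic(err)
		}
		fmt.Println(gs)
	}
}
```

Because the allocator never needs more than this one cached entry, losing it only costs one extra allocation, which matches the resilience argument above.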

Thanks for the feedback - we're curious: would the updated "Re-Allocation" design we have been working on in #1239 (comment) be useful for your needs?

Yeah, I'm not sure if Agones can help with the internals of the multi-room implementation inside the game server binary. We're an orchestration platform, so this seems like something that would be game specific and best left outside of Agones.

@markmandel I just read it, the idea of re-allocation sounds great! Once Agones implements re-allocation, we may be able to fulfill the request without custom allocator service. There are a couple of things to worry about.

The whole structure is built so that you can safely allocate while doing rolling updates across Fleets (in fact, how Kubernetes handles resources makes this quite simple) -- so none of that changes. We also have lots of tests to ensure this stays safe.

We're doing a bunch of allocation request performance tweaks (#1852 and #1856), but we're already easily seeing throughput of 100+ allocations per second in load testing. We have to update the GameServer when Allocating anyway, so we make label/annotation changes part of that update request -- so it's all the same operation. As for label changes from the SDK -- this is a newer operation. It will be applied eventually, as it's backed by a queue, so there could be some delay if the K8s API gets backed up for some reason, but it will be eventually consistent. So having something that is "count" based may be faster - but we could potentially go down that path as we gather real-world data on the speeds people actually need. Does that answer your question?
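To illustrate why a queued label change is eventually consistent rather than immediate, here is a generic sketch of the pattern (it is not Agones' actual SDK implementation). `SetLabel` only enqueues the change; a single worker applies updates in order through a hypothetical `apply` callback standing in for the Kubernetes API update.

```go
package main

import "fmt"

// labelUpdate is one queued label change.
type labelUpdate struct{ key, value string }

// LabelQueue applies label updates asynchronously, in order, on a single worker goroutine.
type LabelQueue struct {
	updates chan labelUpdate
	done    chan struct{}
}

// NewLabelQueue starts a worker that calls apply for each queued update.
func NewLabelQueue(size int, apply func(key, value string)) *LabelQueue {
	q := &LabelQueue{updates: make(chan labelUpdate, size), done: make(chan struct{})}
	go func() {
		defer close(q.done)
		for u := range q.updates {
			// In a real system this would be an API update that can be slow or retried.
			apply(u.key, u.value)
		}
	}()
	return q
}

// SetLabel enqueues a change without waiting for it to be applied (it blocks only if the buffer is full).
func (q *LabelQueue) SetLabel(key, value string) {
	q.updates <- labelUpdate{key, value}
}

// Close stops accepting updates and waits until every queued update has been applied.
func (q *LabelQueue) Close() {
	close(q.updates)
	<-q.done
}

func main() {
	labels := map[string]string{}
	// Only the worker goroutine writes to labels; reading after Close is safe.
	q := NewLabelQueue(16, func(k, v string) { labels[k] = v })
	q.SetLabel("rooms", "1")
	q.SetLabel("rooms", "2")
	q.Close()
	fmt.Println(labels["rooms"])
}
```

Between `SetLabel` returning and the worker catching up, anything reading the API still sees the old value; that window is the delay mentioned above when the K8s API is backed up.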

@markmandel Thank you for the quick answer. I have no further concerns at the moment.

A similar use case is to run multiple game server processes in the same pod, each one exposing its own port as a game server. This would be an easy out-of-the-box solution if you want to pack more games per node, as it doesn't require any game server change, just the port. It would especially help work around the limit on pods per node (110 in GKE) when you want to scale vertically. Ideally, it would create a 1-N relationship between pod and game server; in other words, each pod could have multiple game servers. As for the original request, you just need to configure your listener to listen on multiple ports for the same server, one for each "room".
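The last suggestion above (one port per room inside a single server process) could look roughly like this in Go. It is a minimal sketch, assuming rooms are identified by hypothetical string IDs and that the OS picks free ports (`:0`). Under Agones, the ports would instead have to be declared on the GameServer so they are actually exposed, which this sketch does not cover.

```go
package main

import (
	"fmt"
	"net"
)

// listenPerRoom opens one TCP listener per room on host, letting the OS choose each port.
func listenPerRoom(host string, rooms []string) (map[string]net.Listener, error) {
	listeners := make(map[string]net.Listener, len(rooms))
	for _, room := range rooms {
		ln, err := net.Listen("tcp", net.JoinHostPort(host, "0"))
		if err != nil {
			// Release anything already opened before reporting the error.
			for _, l := range listeners {
				l.Close()
			}
			return nil, err
		}
		listeners[room] = ln
	}
	return listeners, nil
}

// portOf returns the TCP port a listener is bound to.
func portOf(ln net.Listener) int {
	return ln.Addr().(*net.TCPAddr).Port
}

func main() {
	listeners, err := listenPerRoom("127.0.0.1", []string{"room-a", "room-b"})
	if err != nil {
		panic(err)
	}
	for room, ln := range listeners {
		// Each room's traffic arrives on its own port; a real server would call ln.Accept() here.
		fmt.Println(room, portOf(ln))
		ln.Close()
	}
}
```

Routing by port keeps the rooms separate at the network level while they still share one process, one runtime and one copy of any loaded data.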

Another thing comes to mind. Let me know if I'm mixing up the concepts; I'm still new here. I can already run multiple game sessions on the same server by switching back to Ready after being allocated, until I cannot accept a new session. Obviously, the game server can then be evicted. But if this proves to be a solution, a new state such as AllocatedAndReady could handle the case of a server that is both ready and in use.

As you pointed out, a Rather than adding a whole new state, see the linked design above for using labels to manage whether the GameServer is in the pool to be allocated from - which adds the same functionality, but is very flexible.
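The label-based alternative to a new state can be modeled as a selection rule. This is a simplified plain-Go model, not the Agones allocation API: the `GameServer` struct and the `rooms-available` label are hypothetical. It prefers an Allocated GameServer whose label says it still has room, and only falls back to a Ready one, so sessions get packed onto servers that are already in use.

```go
package main

import "fmt"

// GameServer is a simplified stand-in for the Agones resource: a name, a state and labels.
type GameServer struct {
	Name   string
	State  string // "Ready" or "Allocated"
	Labels map[string]string
}

// pickServer prefers an Allocated server carrying label=value, then falls back to any Ready server.
func pickServer(servers []GameServer, label, value string) (string, bool) {
	for _, gs := range servers {
		if gs.State == "Allocated" && gs.Labels[label] == value {
			return gs.Name, true
		}
	}
	for _, gs := range servers {
		if gs.State == "Ready" {
			return gs.Name, true
		}
	}
	return "", false
}

func main() {
	servers := []GameServer{
		{Name: "gs-1", State: "Allocated", Labels: map[string]string{"rooms-available": "false"}},
		{Name: "gs-2", State: "Allocated", Labels: map[string]string{"rooms-available": "true"}},
		{Name: "gs-3", State: "Ready"},
	}
	name, ok := pickServer(servers, "rooms-available", "true")
	fmt.Println(name, ok)
}
```

The game server itself flips the label when it fills up or frees a slot, so the state machine stays unchanged and the policy lives in labels.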

Just reviewing this issue - I think we can close this ticket now, since we have:

This has been stale for a month, so I'll close this issue.

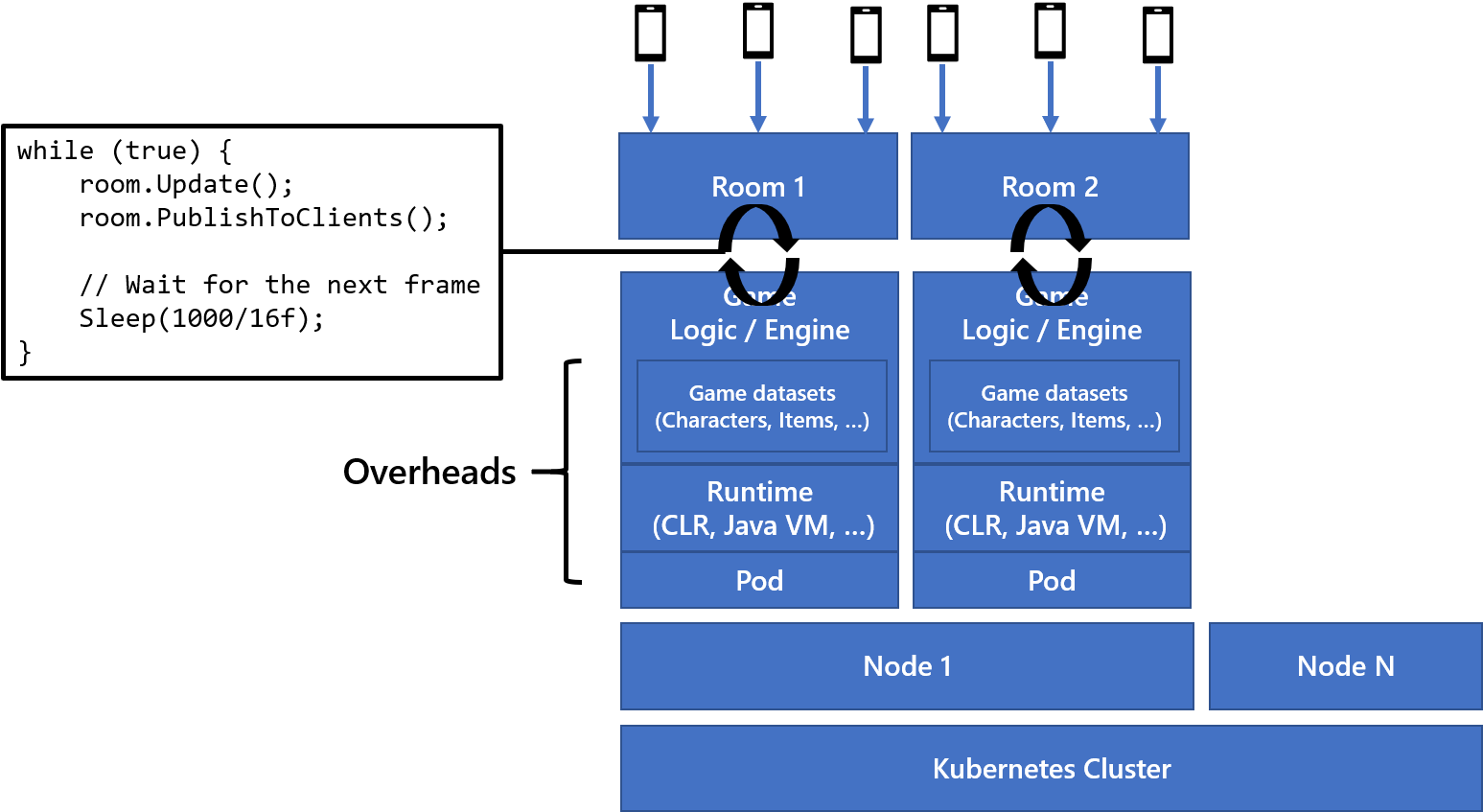

Is your feature request related to a problem? Please describe.

The current Agones allocation model only supports assigning a single room per pod.

This provides complete isolation, but at the cost of runtime and game-engine overhead: a lot of memory that could be shared is lost, and a lot of time is spent idle.

If a room does not host a large number of users (as a Battle Royale does), but only a few (2-8) with a low command frequency, we are able to host multiple rooms on one game engine (pod).

The rooms do not share state with each other, but they do share the execution engine (immutable game data loaded in memory, the runtime JIT, dynamic assemblies, etc.).

This can result in significant cost savings.
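The sharing model described above (no shared room state, but one copy of immutable game data per process) might look like this. It is a Go sketch for illustration only: the proposer's server is written in .NET with MagicOnion, and the `GameData` and `Room` types here are hypothetical.

```go
package main

import "fmt"

// GameData is loaded once per process and treated as read-only by every room.
type GameData struct {
	UnitHP map[string]int
}

// Room holds its own mutable state plus a pointer to the shared, immutable data.
type Room struct {
	ID   string
	data *GameData      // shared across rooms; never mutated
	hp   map[string]int // per-room state; never shared
}

// NewRoom creates a room that reads from the shared data but keeps its own state.
func NewRoom(id string, data *GameData) *Room {
	return &Room{ID: id, data: data, hp: map[string]int{}}
}

// Spawn creates a unit from the shared definition, storing its HP in this room only.
func (r *Room) Spawn(unit string) {
	r.hp[unit] = r.data.UnitHP[unit]
}

// Damage changes only this room's state.
func (r *Room) Damage(unit string, amount int) {
	r.hp[unit] -= amount
}

func main() {
	shared := &GameData{UnitHP: map[string]int{"knight": 100}}
	a, b := NewRoom("a", shared), NewRoom("b", shared)
	a.Spawn("knight")
	b.Spawn("knight")
	a.Damage("knight", 30)
	fmt.Println(a.hp["knight"], b.hp["knight"])
}
```

With N rooms in one process, the game data is held once instead of N times, which is where the memory savings come from.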

We're creating a mobile game that runs on a .NET Core server and a Unity client.

In .NET Core, we're using MagicOnion, our open-source network engine built on .NET Core/gRPC/HTTP/2.

The cost of a one-room game loop on the server is relatively small, and many rooms can coexist on a single engine.

We want to host this real-time server on Agones, but we need to host many rooms on the same pod to reduce costs.

Many mobile games require a stateful, real-time server but do not generate heavy traffic per room.

Therefore, since the runtime cost is relatively high, we want to host multiple rooms.

(If the runtime cost is relatively low, single room is fine.)

Describe the solution you'd like

I suggest RefCount (virtual allocation).

An allocation request returns the same pod and increments its reference count.

When a game finishes, a shutdown request decrements the reference count.

If the reference count exceeds the value set in the configuration, the allocation returns a server created in a new pod instead.
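The proposed RefCount (virtual) allocation can be sketched as below. This is a minimal sketch, not an existing Agones API: `maxRefCount` is a hypothetical configuration value for the maximum rooms per pod, and `newPod` is a hypothetical callback standing in for Agones providing a fresh pod. Allocation reuses the oldest pod with a free slot and increments its count; a finished game decrements it; only when every tracked pod is full is a new pod used. Shutting down idle pods is left out.

```go
package main

import (
	"errors"
	"fmt"
)

// RefCountAllocator hands out the same pod until its reference count reaches maxRefCount.
// It is not safe for concurrent use; a real allocator would need locking.
type RefCountAllocator struct {
	maxRefCount int            // hypothetical config: maximum rooms per pod
	newPod      func() string  // stand-in for Agones providing a new pod
	counts      map[string]int // pod name -> active rooms
	order       []string       // pods in creation order, so older pods fill first
}

// NewRefCountAllocator creates an allocator with no pods yet.
func NewRefCountAllocator(maxRefCount int, newPod func() string) *RefCountAllocator {
	return &RefCountAllocator{maxRefCount: maxRefCount, newPod: newPod, counts: map[string]int{}}
}

// Allocate returns a pod with spare capacity, using a new pod only when all are full.
func (a *RefCountAllocator) Allocate() string {
	for _, pod := range a.order {
		if a.counts[pod] < a.maxRefCount {
			a.counts[pod]++
			return pod
		}
	}
	pod := a.newPod()
	a.order = append(a.order, pod)
	a.counts[pod] = 1
	return pod
}

// Release is called when a game finishes; it decrements the pod's reference count.
func (a *RefCountAllocator) Release(pod string) error {
	if a.counts[pod] == 0 {
		return errors.New("release of pod with no active rooms: " + pod)
	}
	a.counts[pod]--
	return nil
}

func main() {
	n := 0
	a := NewRefCountAllocator(2, func() string {
		n++
		return fmt.Sprintf("pod-%d", n)
	})
	fmt.Println(a.Allocate()) // pod-1
	fmt.Println(a.Allocate()) // pod-1
	fmt.Println(a.Allocate()) // pod-2: pod-1 is full
	if err := a.Release("pod-1"); err != nil {
		panic(err)
	}
	fmt.Println(a.Allocate()) // pod-1 again, since a slot was freed
}
```

Filling older pods first keeps newer pods emptier, so they drain sooner and can be scaled down.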
