\n```\n\nNesse exemplo, estamos combinando flexbox (classe `flex`) com largura percentual (`width: 100%`, aplicada pela classe `w-full`). Isso pode funcionar em navegadores mais recentes, mas causa problemas em versões mais antigas do Safari, como as encontradas nos iPhones 10, 11 e 12.\n\nPara solucionar esse problema, recomendamos substituir `w-full` por classes relacionadas ao flexbox:\n\n ```html\n

\n

\n

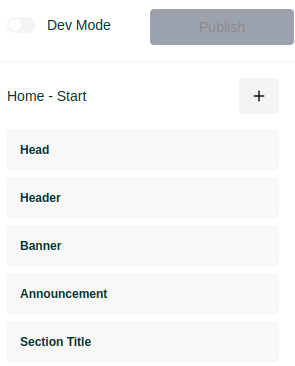

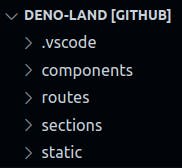

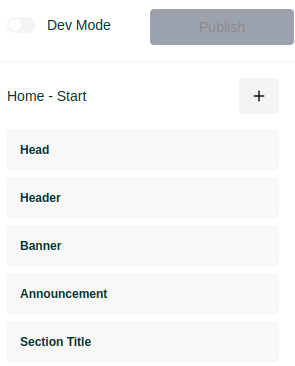

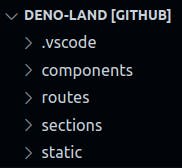

\n```\n\nIsso garantirá que o layout funcione corretamente em todos os navegadores.\n\n\nEstamos sempre acompanhando os novos projetos, coletando os apresentados e melhorando o template [Storefront](https://github.com/deco-sites/storefront).","seo":{"image":"https://github.com/deco-cx/blog/assets/1753396/20fb996b-4d04-417c-af69-5beb2a7e7969","title":"6 meses de lojas em produção - Uma jornada de aprendizados","description":"Nos últimos seis meses acumulamos um valioso conjunto de lições ao levar lojas para produção. Nesse período, aprendemos o que deve ser evitado, o que não funciona e a melhor maneira de iniciar o desenvolvimento de uma loja online. A seguir, compartilhamos um resumo das lições que adquirimos durante essa jornada."}}},"date":"10/10/2023","path":"licoes-6-meses.md","author":"Gui Tavano, Tiago Gimenes"},{"body":{"pt":{"title":"Partials","descr":"Revolucionando o Desenvolvimento Web","content":"[Fresh](https://fresh.deno.dev/), o framework web utilizado pela Deco, é conhecido por possibilitar a criação de páginas de altíssimo desempenho. Uma das razões pelas quais as páginas criadas na Deco são tão eficientes é devido à arquitetura de ilhas. Essa arquitetura permite que os desenvolvedores removam partes não interativas do pacote final de JavaScript, reduzindo a quantidade total de JavaScript na página e aliviando o navegador para realizar outras tarefas.\n\nNo entanto, uma das limitações dessa arquitetura é que páginas muito complexas, com muita interatividade e, portanto, muitas ilhas, ainda exigem uma grande quantidade de JavaScript. Felizmente, essa limitação agora é coisa do passado, graças à introdução dos Partials.\n\n## Como Funciona?\n\nOs Partials, inspirados no [htmx](https://htmx.org/docs/), operam com um runtime que intercepta as interações do usuário com elementos de botão, âncora e formulário. Essas interações são enviadas para nosso servidor, que calcula o novo estado da página e responde ao navegador. 
O navegador recebe o novo estado da IU em HTML puro, que é então processado e as diferenças são aplicadas, alterando a página para o seu estado final. Para obter informações mais detalhadas sobre os Partials, consulte a [documentação](https://github.com/denoland/fresh/issues/1609) do Fresh.\n\n## Partials em Ação\n\nEstamos migrando os componentes da loja da Deco para a nova solução de Partials. Até o momento, migramos o seletor de SKU, que pode ser visualizado em ação [aqui](http://storefront-vtex.deco.site/camisa-masculina-xadrez-sun-e-azul/p?skuId=120). Mais mudanças, como `infinite scroll` e melhorias nos filtros, estão por vir.\n\nOutra funcionalidade desbloqueada é a possibilidade de criar dobras na página. As páginas de comércio eletrônico geralmente são longas e contêm muitos elementos. Os navegadores costumam enfrentar problemas quando há muitos elementos no HTML. Para lidar com isso, foi inventada a técnica de dobra.\n\nA ideia básica dessa técnica é dividir o conteúdo da página em duas partes: o conteúdo acima e o conteúdo abaixo da dobra. O conteúdo acima da dobra é carregado na primeira solicitação ao servidor. O conteúdo abaixo da dobra é carregado assim que a primeira solicitação é concluída. Esse tipo de funcionalidade costumava ser difícil de implementar em arquiteturas mais antigas. Felizmente, embutimos essa lógica em uma nova seção chamada `Deferred`. Esta seção aceita uma lista de seções como parâmetro que devem ter seu carregamento adiado para um momento posterior.\n\nPara usar essa nova seção:\n\n1. Acesse o painel de administração da Deco e adicione a seção `Rendering > Deferred` à sua página.\n2. Mova as seções que deseja adiar para a seção `Deferred`.\n3. Salve e publique a página.\n4. Pronto! 
As seções agora estão adiadas sem a necessidade de alterar o código!\n\nVeja `Deferred` em ação neste [link](https://storefront-vtex.deco.site/home-partial).\n\n> Observe que, para a seção `Deferred` aparecer, você deve estar na versão mais recente do `fresh`, `apps` e deco!\n\nUma pergunta que você pode estar se fazendo agora é: Como escolher as seções que devo incluir no deferred? Para isso, use a aba de desempenho e comece pelas seções mais pesadas que oferecem o maior retorno!\n\nPara mais informações, veja nossas [docs](https://www.deco.cx/docs/en/developing-capabilities/interactive-sections/partial)"},"en":{"title":"Partials","descr":"Revolutionizing Web Development","content":"[Fresh](https://fresh.deno.dev/), the web framework used by Deco, is known for enabling the creation of high-performance pages. One of the reasons why pages created in Deco are so efficient is due to the island architecture. This architecture allows developers to remove non-interactive parts of the final JavaScript package, reducing the total amount of JavaScript on the page and freeing up the browser to perform other tasks.\n\nHowever, one of the limitations of this architecture is that very complex pages, with a lot of interactivity and therefore many islands, still require a large amount of JavaScript. Fortunately, this limitation is now a thing of the past, thanks to the introduction of Partials.\n\n## How It Works\n\nPartials, inspired by [htmx](https://htmx.org/docs/), operate with a runtime that intercepts user interactions with button, anchor, and form elements. These interactions are sent to our server, which calculates the new state of the page and responds to the browser. The browser receives the new UI state in pure HTML, which is then processed and the differences are applied, changing the page to its final state. 
For more detailed information about Partials, see the [Fresh documentation](https://github.com/denoland/fresh/issues/1609).\n\n## Partials in Action\n\nWe are migrating the components of the Deco store to the new Partials solution. So far, we have migrated the SKU selector, which can be viewed in action [here](http://storefront-vtex.deco.site/camisa-masculina-xadrez-sun-e-azul/p?skuId=120). More changes, such as `infinite scroll` and improvements to filters, are coming soon.\n\nAnother unlocked feature is the ability to create folds on the page. E-commerce pages are usually long and contain many elements. Browsers often face problems when there are many elements in the HTML. To deal with this, the fold technique was invented.\n\nThe basic idea of this technique is to divide the content of the page into two parts: the content above and the content below the fold. The content above the fold is loaded on the first request to the server. The content below the fold is loaded as soon as the first request is completed. This type of functionality used to be difficult to implement in older architectures. Fortunately, we have embedded this logic in a new section called `Deferred`. This section accepts a list of sections as a parameter that should have their loading delayed until a later time.\n\nTo use this new section:\n\n1. Access the Deco administration panel and add the `Rendering > Deferred` section to your page.\n2. Move the sections you want to defer to the `Deferred` section.\n3. Save and publish the page.\n4. Done! The sections are now deferred without the need to change the code!\n\nSee `Deferred` in action at this [link](https://storefront-vtex.deco.site/home-partial).\n\n> Note that, for the `Deferred` section to appear, you must be on the latest version of `fresh`, `apps`, and deco!\n\nA question you may be asking yourself now is: How do I choose the sections I should include in the deferred? 
To do this, use the performance tab and start with the heaviest sections that offer the greatest return!\n\nFor more information, see our [docs](https://www.deco.cx/docs/en/developing-capabilities/interactive-sections/partial)"}},"path":"partials.md","img":"https://user-images.githubusercontent.com/121065272/278023987-b7ab4ae9-6669-4e38-94b8-268049fa6569.png","date":"10/06/2023","author":"Marcos Candeia, Tiago Gimenes"},{"img":"https://user-images.githubusercontent.com/121065272/278021874-91a4ffea-3a22-4631-a4b3-c21bbc056386.png","body":{"en":{"descr":"How Lojas Torra doubled its conversion rate after migrating its e-commerce frontend to deco.cx","title":"The new phase of Lojas Torra with deco.cx."},"pt":{"descr":"Como a Lojas Torra dobrou sua taxa de conversão após migrar o front do seu ecommerce para a deco.cx","title":"A nova fase da Lojas Torra com a deco.cx."}},"date":"08/31/2023","path":"case-lojas-torra","author":"Por Maria Cecilia Marques"},{"body":{"pt":{"title":"AR&Co kickstarts digital shift: Baw goes headless with deco.cx","descr":"AR&Co. is getting its headless strategy off the ground, with both quality and speed by trusting deco.cx as its frontend solution."},"en":{"title":"AR&Co kickstarts digital shift: Baw goes headless with deco.cx","descr":"AR&Co. is getting its headless strategy off the ground, with both quality and speed by trusting deco.cx as its frontend solution."}},"path":"customer-story-baw","date":"04/25/2023","author":"Maria Cecilia Marques","img":"https://ozksgdmyrqcxcwhnbepg.supabase.co/storage/v1/object/public/assets/530/ec116700-62ee-408d-8621-3f8b2db72e8b","tags":[]},{"body":{"pt":{"content":"Com dezenas de milhões de e-commerces, o varejo digital nunca enfrentou tanta competição. Por outro lado, com o grande aumento do número de consumidores online, nunca houve maior oportunidade pra crescer. 
Neste contexto, **oferecer experiências distintas é crucial.**\n\nContudo, mesmo as maiores marcas têm dificuldade em construir algo além do previsível - páginas lentas e estáticas para todos os usuários. O resultado todos já sabem: **baixa conversão** -- pessoas deixam de comprar na loja porque não viram um conteúdo que lhes agrada ou porque as páginas demoraram pra carregar em seus celulares.\n\nVamos entender primeiro por que isso acontece.\n\n\n## Por que seu site performa mal?\n\n### 1. Sua loja demora para construir\n\nO time de tecnologia para ecommerce é pouco produtivo pois trabalha com frameworks e tech stacks complexas de aprender e fazer manutenção - e que vão ficando ainda mais complexas com o tempo, além do talento em tech ser escasso.\n\nPessoas desenvolvedoras gastam horas e horas cada mês com a complexidade acidental das tecnologias usadas: esperando o tempo de deploy, cuidando de milhares de linhas de código, ou lidando com dependências no npm - sobra pouco tempo para codar de verdade.\n\nResultado: o custo de novas funcionalidades é caro e a entrega é demorada, além de ser operacionalmente complexo contratar e treinar devs.\n\n\n### 2. Sua loja demora para carregar\n\nSabe-se que a velocidade de carregamento de uma página afeta drasticamente a taxa de conversão e o SEO. Em um site \"médio\", é esperado que cada 100 ms a menos no tempo de carregamento de uma página aumente em 1% a conversão.\n\nAinda assim, a maior parte das lojas tem storefronts (frentes de loja de ecommerce) com nota abaixo de 50 no [Google Lighthouse](https://developer.chrome.com/docs/lighthouse/overview/). E poucos parecem saber resolver.\n\nCom isso, o ecommerce global perde trilhões (!) de dólares em vendas.\n\nParte do problema é a escolha do framework e das tecnologias envolvidas:\n\n- a. SPA (Single Page Application) não é a tecnologia ideal para ecommerce, especialmente para performance e SEO.\n\n- b. 
A complexidade de criar código com esse paradigma cria bases de código difíceis de manter e adicionar novas funcionalidades.\n\n\n### 3. Sua loja demora para evoluir\n\nNos negócios e na vida, a evolução contínua e o aprendizado diário é o que gera sucesso. Especialmente no varejo, que vive de otimização contínua de margem.\n\nContudo, times de ecommerce dependem de devs para editar, testar, aprender e evoluir suas lojas.\n\nDevs, por sua vez, estão geralmente sobrecarregadas de demandas, e demoram demais para entregar o que foi pedido, ainda que sejam tasks simples.\n\nPara piorar, as ferramentas necessárias para realizar o ciclo completo de CRO (conversion rate optimization) como teste A/B, analytics, personalização e content management system (CMS) estão fragmentadas, o que exige ter que lidar com várias integrações e diversos serviços terceirizados diferentes.\n\nTudo isso aumenta drasticamente a complexidade operacional, especialmente em storefronts de alta escala, onde o ciclo de evolução é lento e ineficaz.\n\n\n## Nossa solução\n\nNosso time ficou mais de 10 anos lidando com estes mesmo problemas. Sabemos que existem tecnologias para criar sites e lojas de alto desempenho, mas a maioria parece não ter a coragem de utilizá-las.\n\nFoi quando decidimos criar a deco.cx.\n\nPara habilitarmos a criação de experiências de alto desempenho foi necessário um novo paradigma, que simplifique a criação de lojas rápidas, sem sacrificar a capacidade de **personalização e de evolução contínua.**\n\nCriamos uma plataforma headless que integra tudo o que uma marca ou agência precisa para gerenciar e otimizar sua experiência digital, tornando fácil tomar decisões em cima de dados reais, e aumentando continuamente as taxas de conversão.\n\n## Como fazemos isso:\n\n### 1. Com deco.cx fica rápido de construir\n\nAceleramos desenvolvimento de lojas, apostando em uma combinação de tecnologias de ponta que oferecem uma experiência de desenvolvimento frontend otimizada e eficiente. 
A stack inclui:\n\n- [**Deno**](https://deno.land)\n Um runtime seguro para JavaScript e TypeScript, criado para resolver problemas do Node.js, como gerenciamento de dependências e configurações TypeScript.\n\n- [**Fresh**](https://fresh.deno.dev)\nUm framework web minimalista e rápido, que proporciona uma experiência de desenvolvimento mais dinâmica e eficiente, com tempos de compilação rápidos ou inexistentes.\n\n- [**Twind**](https://twind.dev)\nUma biblioteca de CSS-in-JS que permite a criação de estilos dinâmicos, sem sacrificar o desempenho, e oferece compatibilidade com o popular framework Tailwind CSS.\n\n- [**Preact**](https://preactjs.com)\nUma alternativa leve ao React, que oferece desempenho superior e menor tamanho de pacote para aplicações web modernas.\n\nEssa combinação de tecnologias permite que a deco.cx ofereça uma plataforma de desenvolvimento frontend que melhora a produtividade e reduz o tempo de implementação, além de proporcionar sites de alto desempenho.\n\nOs principais ganhos de produtividade da plataforma e tecnologia deco são:\n\n- O deploy (e rollback) instantâneo na edge oferecido pelo [Deno Deploy](https://deno.com/deploy)\n\n- A biblioteca de componentes universais e extensíveis ([Sections](https://www.deco.cx/docs/en/concepts/section)), além de funções pré-construídas e totalmente customizáveis ([Loaders](https://www.deco.cx/docs/en/concepts/loader)).\n\n- A facilidade de aprendizado. Qualquer desenvolvedor júnior que sabe codar em React, JavaScript, HTML e CSS consegue aprender rapidamente a nossa stack, que foi desenhada pensando na melhor experiência de desenvolvimento.\n\n\n### 2. Com deco.cx fica ultrarrápido de navegar\n\nAs tecnologias escolhidas pela deco também facilitam drasticamente a criação de lojas ultrarrápidas.\n\nNormalmente os storefronts são criados com React e Next.js - frameworks JavaScript que usam a abordagem de Single-Page Applications (SPA) para construir aplicativos web. 
Ou seja, o site é carregado em uma única página e o conteúdo é transportado dinamicamente com JavaScript.\n\nDeno e Fresh, tecnologias que usamos aqui, são frameworks de JavaScript que trabalham com outro paradigma: o server-side-rendering, ou seja, o processamento dos componentes da página é feito em servidores antes de ser enviado para o navegador, ao invés de ser processado apenas no lado do cliente.\n\nIsso significa que a página já vem pronta para ser exibida quando é carregada no navegador, o que resulta em um carregamento mais rápido e uma melhor experiência do usuário.\n\nAlém disso, essas tecnologias trabalham nativamente com computação na edge, o que significa que a maior parte da lógica é executada em servidores perto do usuário, reduzindo a latência e melhorando a velocidade de resposta. Como o processamento é feito em servidores na edge, ele é capaz de realizar tarefas mais pesadas, como buscar e processar dados de fontes externas, de forma mais eficiente.\n\nAo usar essas ferramentas em conjunto, é possível construir e-commerces de alto desempenho, com tempos de carregamento de página mais rápidos e uma experiência geral melhorada.\n\n\n### 3. 
Com deco.cx fica fácil de evoluir\n\nA plataforma deco oferece, em um mesmo produto, tudo o que as agências e marcas precisam para rodar o ciclo completo de evolução da loja:\n\n- **Library**: Onde os desenvolvedores criam (ou customizam) [loaders](https://www.deco.cx/docs/en/concepts/loader) que puxam dados de qualquer API externa, e usam os dados coletados para criar componentes de interface totalmente editáveis pelos usuários de negócio ([Sections](https://www.deco.cx/docs/en/concepts/section)).\n\n- **Pages**: Nosso CMS integrado, para que o time de negócio consiga [compor e editar experiências rapidamente](https://www.deco.cx/docs/en/concepts/page), usando as funções e componentes de alto desempenho pré-construídos pelos seus desenvolvedores, sem precisar de uma linha de código.\n\n- **Campanhas**: Nossa ferramenta de personalização que te permite agendar ou customizar a mudança que quiser por data ou audiência. Seja uma landing page para a Black Friday ou uma seção para um público específico, você decide como e quando seu site se comporta para cada usuário.\n\n- **Experimentos**: Testes A/B ou multivariados em 5 segundos - nossa ferramenta te permite acompanhar, em tempo real, a performance dos seus experimentos em relação à versão de controle.\n\n- **Analytics**: Dados em tempo real sobre toda a jornada de compra e a performance da loja. Aqui você cria relatórios personalizados, que consolidam dados de qualquer API externa.\n\n\n## O que está por vir\n\nEstamos só começando. Nossa primeira linha de código na deco foi em setembro de 2022. 
De lá pra cá montamos [uma comunidade no Discord](http://deco.cx/discord), que já conta com 1.050+ membros, entre agências, estudantes, marcas e experts.\n\nSe você está atrás de uma forma de criar sites e lojas ultrarrápidos, relevantes para cada audiência, e que evoluem diariamente através de experimentos e dados, [agende uma demo](http://deco.cx).","title":"Como a deco.cx faz sua loja converter mais","descr":"Descubra como a nossa plataforma headless ajuda lojas virtuais a converter mais clientes, simplificando a criação e manutenção de lojas rápidas e personalizáveis. "},"en":{"title":"How deco.cx makes your store convert more","descr":"Discover how our headless platform helps online stores convert more customers by simplifying the creation and maintenance of fast, customizable stores.","content":"With tens of millions of e-commerce stores, digital retail has never faced so much competition. On the other hand, with the sharp rise in the number of online shoppers, there has never been a greater opportunity to grow. In this context, **offering distinctive experiences is crucial.**\n\nHowever, even the biggest brands struggle to build anything beyond the predictable: slow, static pages that are the same for every user. Everyone already knows the result: **low conversion**. People give up buying from the store because they did not see content that appeals to them or because the pages took too long to load on their phones.\n\nLet's first understand why this happens.\n\n\n## Why does your site perform poorly?\n\n### 1. 
Your store takes too long to build\n\nE-commerce technology teams are unproductive because they work with frameworks and tech stacks that are complex to learn and maintain, and that grow even more complex over time, while tech talent remains scarce.\n\nDevelopers spend hours every month on the accidental complexity of the technologies they use: waiting for deploys, looking after thousands of lines of code, or dealing with npm dependencies. Little time is left for actual coding.\n\nThe result: new features are expensive and slow to deliver, and hiring and training developers is operationally complex.\n\n\n### 2. Your store takes too long to load\n\nPage load speed is known to drastically affect conversion rate and SEO. On an \"average\" site, every 100 ms shaved off a page's load time is expected to increase conversion by 1%.\n\nEven so, most stores have storefronts with a score below 50 on [Google Lighthouse](https://developer.chrome.com/docs/lighthouse/overview/). And few seem to know how to fix it.\n\nAs a result, global e-commerce loses trillions (!) of dollars in sales.\n\nPart of the problem is the choice of framework and technologies involved:\n\n- a. SPA (Single Page Application) is not the ideal technology for e-commerce, especially for performance and SEO.\n\n- b. The complexity of writing code in this paradigm produces codebases that are hard to maintain and hard to extend with new features.\n\n\n### 3. Your store takes too long to evolve\n\nIn business and in life, continuous evolution and daily learning are what drive success. 
This is especially true in retail, which lives on continuous margin optimization.\n\nHowever, e-commerce teams depend on developers to edit, test, learn, and evolve their stores.\n\nDevelopers, in turn, are usually overloaded with requests and take too long to deliver what was asked, even when the tasks are simple.\n\nTo make matters worse, the tools needed to run the full CRO (conversion rate optimization) cycle, such as A/B testing, analytics, personalization, and a content management system (CMS), are fragmented, which means dealing with multiple integrations and several different third-party services.\n\nAll of this drastically increases operational complexity, especially for high-scale storefronts, where the evolution cycle is slow and ineffective.\n\n\n## Our solution\n\nOur team spent more than 10 years dealing with these same problems. We know that technologies for building high-performance sites and stores exist, but most teams do not seem to have the courage to use them.\n\nThat is when we decided to create deco.cx.\n\nEnabling high-performance experiences required a new paradigm, one that simplifies building fast stores without sacrificing the capacity for **personalization and continuous evolution.**\n\nWe built a headless platform that integrates everything a brand or agency needs to manage and optimize its digital experience, making it easy to take decisions based on real data and continuously increasing conversion rates.\n\n## How we do it:\n\n### 1. With deco.cx, building is fast\n\nWe accelerate store development by betting on a combination of cutting-edge technologies that offer an optimized and efficient frontend development experience. 
The stack includes:\n\n- [**Deno**](https://deno.land)\n A secure runtime for JavaScript and TypeScript, created to solve Node.js problems such as dependency management and TypeScript configuration.\n\n- [**Fresh**](https://fresh.deno.dev)\nA fast, minimalist web framework that provides a more dynamic and efficient development experience, with fast or non-existent build times.\n\n- [**Twind**](https://twind.dev)\nA CSS-in-JS library that enables dynamic styling without sacrificing performance and is compatible with the popular Tailwind CSS framework.\n\n- [**Preact**](https://preactjs.com)\nA lightweight alternative to React that offers better performance and a smaller bundle size for modern web applications.\n\nThis combination of technologies allows deco.cx to offer a frontend development platform that improves productivity and reduces implementation time, while also delivering high-performance sites.\n\nThe main productivity gains of the deco platform and technology are:\n\n- Instant deploys (and rollbacks) at the edge, provided by [Deno Deploy](https://deno.com/deploy).\n\n- A library of universal, extensible components ([Sections](https://www.deco.cx/docs/en/concepts/section)), along with pre-built, fully customizable functions ([Loaders](https://www.deco.cx/docs/en/concepts/loader)).\n\n- Ease of learning. Any junior developer who can code in React, JavaScript, HTML, and CSS can quickly learn our stack, which was designed with the best developer experience in mind.\n\n\n### 2. With deco.cx, browsing is ultra-fast\n\nThe technologies chosen by deco also make it drastically easier to build ultra-fast stores.\n\nStorefronts are usually built with React and Next.js, JavaScript frameworks that use the Single-Page Application (SPA) approach to build web applications. 
In other words, the site is loaded as a single page and the content is delivered dynamically with JavaScript.\n\nDeno and Fresh, the runtime and framework we use here, work with a different paradigm: server-side rendering. The page's components are processed on servers before being sent to the browser, instead of being processed only on the client side.\n\nThis means the page arrives ready to be displayed when it is loaded in the browser, which results in faster loading and a better user experience.\n\nIn addition, these technologies work natively with edge computing, which means that most of the logic runs on servers close to the user, reducing latency and improving response time. Because processing happens on edge servers, heavier tasks, such as fetching and processing data from external sources, can be carried out more efficiently.\n\nUsing these tools together makes it possible to build high-performance e-commerce sites, with faster page load times and a better overall experience.\n\n\n### 3. 
With deco.cx, evolving is easy\n\nThe deco platform offers, in a single product, everything agencies and brands need to run the full store evolution cycle:\n\n- **Library**: Where developers create (or customize) [loaders](https://www.deco.cx/docs/en/concepts/loader) that pull data from any external API and use that data to build interface components that business users can fully edit ([Sections](https://www.deco.cx/docs/en/concepts/section)).\n\n- **Pages**: Our integrated CMS, so the business team can [compose and edit experiences quickly](https://www.deco.cx/docs/en/concepts/page) using the high-performance functions and components pre-built by your developers, without writing a single line of code.\n\n- **Campaigns**: Our personalization tool, which lets you schedule or customize any change you want by date or audience. Whether it is a landing page for Black Friday or a section for a specific audience, you decide how and when your site behaves for each user.\n\n- **Experiments**: A/B or multivariate tests in 5 seconds. Our tool lets you track, in real time, how your experiments perform relative to the control version.\n\n- **Analytics**: Real-time data on the entire purchase journey and on store performance. Here you create custom reports that consolidate data from any external API.\n\n\n## What's next\n\nWe are just getting started. Our first line of code at deco was written in September 2022. 
Since then, we have built [a community on Discord](http://deco.cx/discord), which already has 1,050+ members, including agencies, students, brands, and experts.\n\nIf you are looking for a way to create ultra-fast sites and stores that are relevant to each audience and evolve daily through experiments and data, [book a demo](http://deco.cx)."}},"path":"como-a-deco-faz-sua-loja-converter-mais.md","date":"04/14/2023","author":"Rafael Crespo","img":"https://ozksgdmyrqcxcwhnbepg.supabase.co/storage/v1/object/public/assets/530/450efa63-082e-427a-b218-e8b3690823cc"},{"body":{"pt":{"content":"## Desafio\n\nRecentemente aceitei um desafio e quero compartilhar como foi a minha experiência.\n\nO desafio era reconstruir a Home da [https://deno.land/](https://deno.land/) utilizando a plataforma deco.cx como CMS.\n\n## O que é deco.cx?\n\nÉ uma plataforma criada por um time de pessoas que trabalharam na Vtex, para construir páginas e lojas virtuais performáticas e em um curto período de tempo.\n\nCom o slogan \"Crie, teste e evolua sua loja. Todos os dias.\", a plataforma foca na simplicidade no desenvolvimento, autonomia para edições/testes e foco em performance.\n\n## Primeira etapa - Conhecendo a Stack\n\nAntes de iniciar o desenvolvimento, precisei correr atrás das tecnologias que a deco.cx sugere para utilização.\n\nSão elas:\n\n### Preact\n\nPreact é uma biblioteca JS baseada no React, mas que promete ser mais leve e mais performática.\n\nA adaptação aqui, para quem já tem o React, não tende a ser complicada.\n\n### Deno\n\nDeno é um ambiente de execução de Javascript e Typescript, criado pelo mesmo criador do Node.js.\n\nCom uma boa aceitação entre os devs, a alternativa melhorada do Node.js promete se destacar no futuro.\n\n### Fresh\n\nFresh é um Framework que me deixou bem curioso. 
Nessa stack, podemos dizer que ele atua como um Next.\n\nApesar de não ter me aprofundado no Framework, não tive problemas aqui, afinal um dos pilares do Fresh é não precisar de configuração, além de não ter etapa de build.\n\n### Twind\n\nTwind é uma alternativa mais leve, rápida e completa do tão conhecido Tailwind.\n\nA adaptação é extremamente fácil para quem já trabalha com Tailwind, mas não era o meu caso.\n\nPequenos gargalos no começo, mas a curva de aprendizagem é curta e nas últimas seções eu já estava sentindo os efeitos (positivos).\n\n## Segunda Etapa - Conhecendo a ferramenta da deco.cx\n\nMeu objetivo aqui, era entender como a ferramenta me ajudaria a recriar a página deno.land, tornando os componentes editáveis pelo painel CMS.\n\n### Criação da Conta\n\nO Login foi bem simples, pode ser feito com Github ou Google.\n\n### Criação do Projeto\n\nNa primeira página, após logado, temos a opção de Criar um Novo Site.\n\nTive que dar um nome ao projeto e escolher o template padrão para ser iniciado.\n\n\n\nAssim que o site é criado, **automaticamente** um repositório é montado no GitHub da [deco-sites](https://github.com/deco-sites) e um link da sua página na internet também.\n\nA partir dai, cada push que você fizer resultará no Deploy da aplicação no servidor e rapidamente será refletido em produção\n\n### Entendendo páginas e seções\n\nDentro do painel, podemos acessar a aba de \"Pages\"\n\n\n\nNessa parte, cada página do seu site estará listada, e assim que você clicar, você pode ver as seções que estão sendo utilizadas.\n\n\n\nDessa forma, fica fácil para uma pessoa não técnica conseguir criar novas páginas e adicionar/editar as seções.\n\nMas para entender como criar um modelo de seção, vamos para a terceira etapa.\n\n## Terceira Etapa - Integração do Código com a deco.cx\n\nComo eu tinha o objetivo de tornar as seções da página editáveis, eu precisei entender como isso deveria ser escrito no código.\n\nE é tão simples, quanto tipagem em Typescript, 
literalmente.\n\nDentro da estrutura no GitHub, temos uma pasta chamada _sections_, e cada arquivo _.tsx_ lá dentro se tornará uma daquelas seções no painel.\n\n\n\nEm cada arquivo, deve ser exportado uma função default, que retorna um código HTML.\n\nDentro desse HTML, podemos utilizar dados que vem do painel, e para definir quais dados deverão vir de lá, fazemos dessa forma:\n\nexport interface Props {\n image: LiveImage;\n imageMobile: LiveImage;\n altText: string;\n title: string;\n subTitle: string;\n}\n\nEsses são os campos que configurei para a seção _Banner_, e o resultado é esse no painel:\n\n\n\nDepois recebi esses dados como Props, assim:\n\nexport default function Banner({ image, imageMobile, altText, subTitle, title }: Props,) {\n return (\n <>\n //Your HTML code here\n \n );\n}\n\nE o resultado final da seção é:\n\n\n\n### Dev Mode\n\nÉ importante ressaltar, que durante o desenvolvimento, a ferramenta oferece uma função \"Dev Mode\".\n\nIsso faz com que os campos editáveis do painel se baseiem no código feito no localhost e não do repositório no Github.\n\nGarantindo a possibilidade de testes durante a criação.\n\n## Resultado Final + Conclusão\n\nO resultado final, pode ser conferido em: [https://deno-land.deco.site/](https://deno-land.deco.site/)\n\nConsegui atingir nota 97 no Pagespeed Mobile e isso me impressionou bastante.\n\nParticularmente, gostei muito dos pilares que a deco.cx está buscando entregar, em especial a autonomia de edição e o foco na velocidade de carregamento.\n\nA ferramenta é nova e já entrega muita coisa legal. 
Este desafio foi realizado 3 meses após o início do desenvolvimento da plataforma da deco.\n\nMinha recomendação a todos é que entrem na [Comunidade no Discord](https://discord.gg/RZkvt5AE) e acompanhem as novidades para não ficarem para trás.\n\nSe possível, criem um site na ferramenta e façam os seus próprios testes.\n\nEm parceria com [TavanoBlog](https://tavanoblog.com.br/).","title":"Minha experiência com deco.cx","descr":"Recriando a página da deno-land utilizando a plataforma da deco.cx com as tecnologias: Deno, Fresh, Preact e Twind."},"en":{"title":"My experience with deco.cx","descr":"Recreating the deno.land page on the deco.cx platform with Deno, Fresh, Preact, and Twind.","content":"## Challenge\n\nI recently accepted a challenge and want to share how the experience went.\n\nThe challenge was to rebuild the home page of [https://deno.land/](https://deno.land/) using the deco.cx platform as the CMS.\n\n## What is deco.cx?\n\nIt is a platform created by a team of people who worked at VTEX, designed for building high-performance pages and online stores in a short amount of time.\n\nWith the slogan \"Create, test, and evolve your store. Every day.\", the platform focuses on simple development, autonomy for edits and tests, and performance.\n\n## First Step - Getting to Know the Stack\n\nBefore starting development, I had to get up to speed on the technologies deco.cx recommends.\n\nThey are:\n\n### Preact\n\nPreact is a JS library based on React that promises to be lighter and faster.\n\nFor anyone who already knows React, the adjustment should not be complicated.\n\n### Deno\n\nDeno is a runtime for JavaScript and TypeScript, created by the same person who created Node.js.\n\nWell received among developers, this improved alternative to Node.js promises to stand out in the future.\n\n### Fresh\n\nFresh is a framework that made me quite curious. 
In this stack, we can say it plays the role of Next.js.\n\nAlthough I did not dig deep into the framework, I had no problems here; after all, one of Fresh's pillars is requiring no configuration, on top of having no build step.\n\n### Twind\n\nTwind is a lighter, faster, and more complete alternative to the well-known Tailwind.\n\nThe adjustment is extremely easy for anyone who already works with Tailwind, which was not my case.\n\nI hit a few small bottlenecks at the start, but the learning curve is short, and by the last sections I was already feeling the (positive) effects.\n\n## Second Step - Getting to Know the deco.cx Tool\n\nMy goal here was to understand how the tool would help me recreate the deno.land page while making the components editable through the CMS panel.\n\n### Creating the Account\n\nLogging in was very simple: it can be done with GitHub or Google.\n\n### Creating the Project\n\nOn the first page after logging in, there is an option to create a new site.\n\nI had to name the project and choose the default template to start from.\n\n\n\nAs soon as the site is created, a repository is **automatically** set up under the [deco-sites](https://github.com/deco-sites) GitHub organization, along with a public link to your page.\n\nFrom then on, every push you make triggers a deploy of the application to the server and is quickly reflected in production.\n\n### Understanding Pages and Sections\n\nInside the panel, we can open the \"Pages\" tab.\n\n\n\nEvery page of your site is listed there, and as soon as you click one, you can see the sections it uses.\n\n\n\nThis makes it easy for a non-technical person to create new pages and add or edit sections.\n\nBut to understand how to create a section template, let's move on to the third step.\n\n## Third Step - Integrating the Code with deco.cx\n\nSince my goal was to make the page's sections editable, I needed to understand how that should be written in code.\n\nAnd it is as simple as typing in TypeScript, 
literally.\n\nInside the repository structure on GitHub, there is a folder called _sections_, and each _.tsx_ file in it becomes one of those sections in the panel.\n\n\n\nEach file must export a default function that returns the section's HTML (written as JSX).\n\nInside that HTML, we can use data that comes from the panel, and to define which data should come from there, we do this:\n\nexport interface Props {\n  image: LiveImage;\n  imageMobile: LiveImage;\n  altText: string;\n  title: string;\n  subTitle: string;\n}\n\nThese are the fields I configured for the _Banner_ section, and this is the result in the panel:\n\n\n\nThen I received that data as Props, like this:\n\nexport default function Banner(\n  { image, imageMobile, altText, subTitle, title }: Props,\n) {\n  return (\n    <>\n      {/* Your HTML code here */}\n    </>\n  );\n}\n\nAnd the final result of the section is:\n\n\n\n### Dev Mode\n\nIt is worth highlighting that, during development, the tool offers a \"Dev Mode\" feature.\n\nIt makes the editable fields in the panel reflect the code running on localhost rather than the code in the GitHub repository.\n\nThis makes it possible to test while you build.\n\n## Final Result + Conclusion\n\nThe final result can be seen at: [https://deno-land.deco.site/](https://deno-land.deco.site/)\n\nI managed to reach a score of 97 on PageSpeed Mobile, which impressed me a lot.\n\nPersonally, I really liked the pillars deco.cx is aiming to deliver, especially editing autonomy and the focus on loading speed.\n\nThe tool is new and already delivers a lot of cool stuff. 
I took on this challenge 3 months after development of the deco platform began.\n\nMy recommendation to everyone is to join the [Discord community](https://discord.gg/RZkvt5AE) and follow the news so you don't fall behind.\n\nIf possible, create a site with the tool and run your own tests.\n\nIn partnership with [TavanoBlog](https://tavanoblog.com.br/)."}},"path":"criando-uma-pagina-com-decocx.md","date":"03/01/2023","author":"Guilherme Tavano","img":"https://ozksgdmyrqcxcwhnbepg.supabase.co/storage/v1/object/public/assets/530/179d2e1f-8214-46f4-bc85-497f2313145e"},{"body":{"pt":{"content":"Nesta última versão, a deco está revolucionando a forma como você adiciona funcionalidades em seus **sites**. Dê as boas-vindas à era dos Apps.\n\n**O que são Apps?**\n\nOs Apps são poderosos conjuntos de capacidades de negócios que podem ser importados e configurados em sites deco. Um App na deco é essencialmente uma coleção de vários componentes, como **Actions**, **Sections**, **Loaders**, **Workflows**, **Handlers**, ou quaisquer outros tipos de blocks que podem ser usados para estender a funcionalidade dos seus sites.\n\nCom os apps, você tem o poder de definir propriedades necessárias para a funcionalidade como um todo, como chaves de aplicação, nomes de contas, entre outros.\n\n**Templates de Loja**\n\nIntroduzimos vários templates de lojas integradas com plataformas como Vnda., VTEX e Shopify. Agora, você tem a flexibilidade de escolher o template que melhor se adapta às suas necessidades e pode criar facilmente sua loja a partir desses templates.\n\n

\n\n**DecoHub: Seu Hub de Apps**\n\nCom a estreia do DecoHub, você pode descobrir e instalar uma variedade de apps com base nas necessidades do seu negócio. Se você é um desenvolvedor, pode criar seus próprios apps fora do repositório do [deco-cx/apps](http://github.com/deco-cx/apps) e enviar um PR para adicioná-los ao DecoHub.\n\n**Transição de Deco-Sites/Std para Apps**\n\nMigramos do hub central de integrações do [deco-sites/std](https://github.com/deco-sites/std) para uma abordagem mais modular com o [deco-cx/apps](http://github.com/deco-cx/apps). O STD agora está em modo de manutenção, portanto, certifique-se de migrar sua loja e seguir as etapas de instalação em nossa [documentação](https://www.deco.cx/docs/en/getting-started/installing-an-app) para aproveitar o novo ecossistema de Apps.\n\n**Comece a Usar os Apps Agora Mesmo!**\n\nDesenvolvedores, mergulhem em nosso [guia](https://www.deco.cx/docs/en/developing-capabilities/apps/creating-an-app) sobre como criar seu primeiro App na deco. Para lojas existentes, faça a migração executando `deno run -A -r https://deco.cx/upgrade`.\n\nIsso é apenas o começo. Estamos trabalhando continuamente para aprimorar nosso ecossistema de Apps e explorar novos recursos, como transições de visualização e soluções de estilo.\n\nFiquem ligados e vamos construir juntos o futuro do desenvolvimento web!","title":"Como utilizar apps para adicionar funcionalidades aos seus sites.","descr":"Descubra como a nossa plataforma headless ajuda lojas virtuais a converter mais clientes, simplificando a criação e manutenção de lojas rápidas e personalizáveis."},"en":{"title":"How to use apps to add functionality to your sites.","descr":"Discover how our headless platform helps e-commerce stores convert more customers by simplifying the creation and maintenance of fast, customizable stores.","content":"In this latest release, deco is revolutionizing the way you add functionality to your **sites**. 
Welcome to the era of Apps.\n\n**What are Apps?**\n\nApps are powerful sets of business capabilities that can be imported and configured in deco sites. An App in deco is essentially a collection of various components, such as **Actions**, **Sections**, **Loaders**, **Workflows**, **Handlers**, or any other type of block that can be used to extend the functionality of your sites.\n\nWith apps, you can define the properties that the functionality as a whole needs, such as application keys, account names, and more.\n\n**Store Templates**\n\nWe've introduced several store templates integrated with platforms like Vnda., VTEX, and Shopify. You can now choose the template that best suits your needs and easily create your store from it.\n\n

\n\n**DecoHub: Your App Hub**\n\nWith the debut of DecoHub, you can discover and install a variety of apps based on your business needs. If you're a developer, you can create your own apps outside of the [deco-cx/apps](http://github.com/deco-cx/apps) repository and submit a PR to add them to DecoHub.\n\n**Transition from Deco-Sites/Std to Apps**\n\nWe've migrated from the central integration hub of [deco-sites/std](https://github.com/deco-sites/std) to a more modular approach with [deco-cx/apps](http://github.com/deco-cx/apps). STD is now in maintenance mode, so be sure to migrate your store and follow the installation steps in our [documentation](https://www.deco.cx/docs/en/getting-started/installing-an-app) to take advantage of the new Apps ecosystem.\n\n**Start Using Apps Now!**\n\nDevelopers, dive into our [guide](https://www.deco.cx/docs/en/developing-capabilities/apps/creating-an-app) on how to create your first App in Deco. For existing stores, make the migration by running `deno run -A -r https://deco.cx/upgrade`.\n\nThis is just the beginning. We're continuously working to enhance our Apps ecosystem and explore new features, such as view transitions and styling solutions.\n\nStay tuned and let's build the future of web development together!"}},"path":"apps-era.md","date":"01/23/2023","author":"Marcos Candeia, Tiago Gimenes","img":"https://ozksgdmyrqcxcwhnbepg.supabase.co/storage/v1/object/public/assets/530/5a07de58-ba83-4c6c-92e1-bea033350318","descr":"A era dos Apps!"},{"body":{"pt":{"title":"A vida é curta","descr":"Por Paul Graham, traduzido por ChatGPT.","content":"A vida é curta, como todos sabem. Quando era criança, costumava me perguntar sobre isso. A vida realmente é curta ou estamos realmente nos queixando de sua finitude? Seríamos tão propensos a sentir que a vida é curta se vivêssemos 10 vezes mais tempo?\n\nComo não parecia haver maneira de responder a essa pergunta, parei de me perguntar sobre isso. Depois tive filhos. 
Isso me deu uma maneira de responder à pergunta e a resposta é que a vida realmente é curta.\n\nTer filhos me mostrou como converter uma quantidade contínua, o tempo, em quantidades discretas. Você só tem 52 fins de semana com seu filho de 2 anos. Se o Natal-como-mágica durar, digamos, dos 3 aos 10 anos, só poderá ver seu filho experimentá-lo 8 vezes. E enquanto é impossível dizer o que é muito ou pouco de uma quantidade contínua como o tempo, 8 não é muito de alguma coisa. Se você tivesse um punhado de 8 amendoins ou uma prateleira de 8 livros para escolher, a quantidade definitivamente pareceria limitada, independentemente da sua expectativa de vida.\n\nOk, então a vida realmente é curta. Faz alguma diferença saber disso?\n\nFez para mim. Isso significa que argumentos do tipo \"A vida é muito curta para x\" têm muita força. Não é apenas uma figura de linguagem dizer que a vida é muito curta para alguma coisa. Não é apenas um sinônimo de chato. Se você achar que a vida é muito curta para alguma coisa, deve tentar eliminá-la se puder.\n\nQuando me pergunto para o que descobri que a vida é curta demais, a palavra que me vem à cabeça é \"besteira\". Eu percebo que essa resposta é um pouco tautológica. É quase a definição de besteira: aquilo para o qual a vida é curta demais. E ainda assim a besteira tem um caráter distintivo. Há algo falso nela. É a junk food da experiência. [1]\n\nSe você perguntar a si mesmo em que besteiras gasta o seu tempo, provavelmente já sabe a resposta. Reuniões desnecessárias, disputas sem sentido, burocracia, postura, lidar com os erros de outras pessoas, engarrafamentos, passatempos viciantes mas pouco gratificantes.\n\nHá duas maneiras pelas quais esse tipo de coisa entra em sua vida: ou é imposto a você ou o engana. Até certo ponto, você tem que suportar as besteiras impostas por circunstâncias. Você precisa ganhar dinheiro e ganhar dinheiro consiste principalmente em realizar tarefas. 
De fato, a lei da oferta e da demanda garante isso: quanto mais gratificante é algum tipo de trabalho, mais barato as pessoas o farão. Pode ser que menos tarefas sejam impostas a você do que você pensa, no entanto. Sempre houve uma corrente de pessoas que optam por sair da rotina padrão e vão viver em algum lugar onde as oportunidades são menores no sentido convencional, mas a vida parece mais autêntica. Isso pode se tornar mais comum.\n\nVocê pode reduzir a quantidade de besteira em sua vida sem precisar se mudar. A quantidade de tempo que você precisa gastar com besteiras varia entre empregadores. A maioria das grandes organizações (e muitas pequenas) está imersa nelas. Mas se você priorizar conscientemente a redução de besteiras acima de outros fatores, como dinheiro e prestígio, pode encontrar empregadores que irão desperdiçar menos do seu tempo.\n\nSe você é freelancer ou uma pequena empresa, pode fazer isso no nível de clientes individuais. Se você demitir ou evitar clientes tóxicos, pode diminuir a quantidade de besteira em sua vida mais do que diminui sua renda.\n\nMas, enquanto alguma quantidade de besteira é inevitavelmente imposta a você, a besteira que se infiltra em sua vida enganando-o não é culpa de ninguém, mas sua própria. E ainda assim, a besteira que você escolhe pode ser mais difícil de eliminar do que a besteira que é imposta a você. Coisas que o atraem para desperdiçar seu tempo precisam ser muito boas em enganá-lo. Um exemplo que será familiar para muitas pessoas é discutir online. Quando alguém contradiz você, está, de certa forma, atacando você. Às vezes bastante abertamente. Seu instinto quando é atacado é se defender. Mas, assim como muitos instintos, esse não foi projetado para o mundo em que vivemos agora. Contrariamente ao que parece, é melhor, na maioria das vezes, não se defender. Caso contrário, essas pessoas estão literalmente tomando sua vida. [2]\n\nDiscutir online é apenas incidentalmente viciante. 
Há coisas mais perigosas do que isso. Como já escrevi antes, um subproduto do progresso técnico é que as coisas de que gostamos tendem a se tornar mais viciantes. Isso significa que precisaremos fazer um esforço consciente para evitar vícios, para nos colocarmos fora de nós mesmos e perguntarmos: \"é assim que quero estar gastando meu tempo?\"\n\nAlém de evitar besteiras, devemos procurar ativamente coisas que importam. Mas coisas diferentes importam para pessoas diferentes e a maioria precisa aprender o que importa para elas. Alguns são afortunados e percebem cedo que adoram matemática, cuidar de animais ou escrever e, então, descobrem uma maneira de passar muito tempo fazendo isso. Mas a maioria das pessoas começa com uma vida que é uma mistura de coisas que importam e coisas que não importam e só gradualmente aprende a distinguir entre elas.\n\nPara os jovens, especialmente, muita dessa confusão é induzida pelas situações artificiais em que se encontram. Na escola intermediária e na escola secundária, o que os outros estudantes acham de você parece ser a coisa mais importante do mundo. Mas quando você pergunta aos adultos o que eles fizeram de errado nessa idade, quase todos dizem que se importavam demais com o que os outros estudantes achavam deles.\n\nUma heurística para distinguir coisas que importam é perguntar a si mesmo se você se importará com elas no futuro. O que importa apenas na aparência geralmente tem um pico agudo de aparente importância. É assim que engana você. A área sob a curva é pequena, mas sua forma se crava em sua consciência como um alfinete.\n\nAs coisas que importam nem sempre são aquelas que as pessoas chamariam de \"importantes\". Tomar café com um amigo importa. Você não vai sentir depois que isso foi um desperdício de tempo.\n\nUma coisa ótima em ter crianças pequenas é que elas fazem você gastar tempo em coisas que importam: elas. Elas puxam sua manga enquanto você está olhando para o seu celular e dizem: \"você vai brincar comigo?\". 
E é provável que essa seja, de fato, a opção de minimização de besteira.\n\nSe a vida é curta, devemos esperar que sua brevidade nos surpreenda. E é exatamente isso que costuma acontecer. Você assume as coisas como garantidas e, então, elas desaparecem. Você acha que sempre pode escrever aquele livro, ou subir aquela montanha, ou o que quer que seja, e então percebe que a janela se fechou. As janelas mais tristes fecham quando outras pessoas morrem. Suas vidas também são curtas. Depois que minha mãe morreu, eu desejei ter passado mais tempo com ela. Eu vivi como se ela sempre estivesse lá. E, de maneira típica e discreta, ela incentivou essa ilusão. Mas era uma ilusão. Acho que muitas pessoas cometem o mesmo erro que eu cometi.\n\nA maneira usual de evitar ser surpreendido por algo é estar consciente disso. Quando a vida era mais precária, as pessoas costumavam ser conscientes da morte em um grau que agora pareceria um pouco mórbido. Não tenho certeza do porquê, mas não parece ser a resposta certa lembrar-se constantemente da caveira pairando no ombro de todos. Talvez uma solução melhor seja olhar o problema pelo outro lado. Cultive o hábito de ser impaciente com as coisas que mais quer fazer. Não espere antes de subir aquela montanha ou escrever aquele livro ou visitar sua mãe. Você não precisa se lembrar constantemente por que não deve esperar. Apenas não espere.\n\nPosso pensar em mais duas coisas que alguém faz quando não tem muito de algo: tentar obter mais disso e saborear o que tem. Ambas fazem sentido aqui.\n\nComo você vive afeta por quanto tempo você vive. A maioria das pessoas poderia fazer melhor. Eu entre elas.\n\nMas você provavelmente pode obter ainda mais efeito prestando mais atenção ao tempo que tem. É fácil deixar os dias passarem voando. O \"fluxo\" que as pessoas imaginativas adoram tanto tem um parente mais sombrio que impede você de pausar para saborear a vida no meio da rotina diária de tarefas e alarmes. 
Uma das coisas mais impressionantes que já li não estava em um livro, mas no título de um: Burning the Days (Queimando os Dias), de James Salter.\n\nÉ possível diminuir um pouco o tempo. Eu melhorei nisso. As crianças ajudam. Quando você tem crianças pequenas, há muitos momentos tão perfeitos que você não consegue deixar de notar.\n\nTambém ajuda sentir que você tirou tudo de alguma experiência. O motivo pelo qual estou triste por minha mãe não é apenas porque sinto falta dela, mas porque penso em tudo o que poderíamos ter feito e não fizemos. Meu filho mais velho vai fazer 7 anos em breve. E, embora sinta falta da versão dele de 3 anos, pelo menos não tenho nenhum arrependimento pelo que poderia ter sido. Tivemos o melhor tempo que um pai e um filho de 3 anos poderiam ter.\n\nCorte implacavelmente as besteiras, não espere para fazer coisas que importam e saboreie o tempo que tem. É isso o que você faz quando a vida é curta. \n\n---\n\nNotas\n\n[1] No começo, eu não gostei que a palavra que me veio à mente tivesse outros significados. Mas então percebi que os outros significados estão bastante relacionados. Besteira no sentido de coisas em que você desperdiça seu tempo é bastante parecida com besteira intelectual.\n\n[2] Escolhi esse exemplo de forma deliberada como uma nota para mim mesmo. Eu sou atacado muito online. As pessoas contam as mentiras mais loucas sobre mim. E até agora eu fiz um trabalho bastante medíocre de suprimir a inclinação natural do ser humano de dizer \"Ei, isso não é verdade!\".\n\nObrigado a Jessica Livingston e Geoff Ralston por ler rascunhos deste."},"en":{"title":"A vida é curta","descr":"Por Paul Graham, traduzido por ChatGPT.","content":"A vida é curta, como todos sabem. Quando era criança, costumava me perguntar sobre isso. A vida realmente é curta ou estamos realmente nos queixando de sua finitude? 
Seríamos tão propensos a sentir que a vida é curta se vivêssemos 10 vezes mais tempo?\n\nComo não parecia haver maneira de responder a essa pergunta, parei de me perguntar sobre isso. Depois tive filhos. Isso me deu uma maneira de responder à pergunta e a resposta é que a vida realmente é curta.\n\nTer filhos me mostrou como converter uma quantidade contínua, o tempo, em quantidades discretas. Você só tem 52 fins de semana com seu filho de 2 anos. Se o Natal-como-mágica dure, digamos, dos 3 aos 10 anos, só poderá ver seu filho experimentá-lo 8 vezes. E enquanto é impossível dizer o que é muito ou pouco de uma quantidade contínua como o tempo, 8 não é muito de alguma coisa. Se você tivesse uma punhado de 8 amendoins ou uma prateleira de 8 livros para escolher, a quantidade definitivamente pareceria limitada, independentemente da sua expectativa de vida.\n\nOk, então a vida realmente é curta. Isso faz alguma diferença saber disso?\n\nFez para mim. Isso significa que argumentos do tipo \"A vida é muito curta para x\" têm muita força. Não é apenas uma figura de linguagem dizer que a vida é muito curta para alguma coisa. Não é apenas um sinônimo de chato. Se você achar que a vida é muito curta para alguma coisa, deve tentar eliminá-la se puder.\n\nQuando me pergunto o que descobri que a vida é muito curta, a palavra que me vem à cabeça é \"besteira\". Eu percebo que essa resposta é um pouco tautológica. Quase é a definição de besteira que é a coisa de que a vida é muito curta. E ainda assim a besteira tem um caráter distintivo. Há algo falso nela. É o alimento junk da experiência. [1]\n\nSe você perguntar a si mesmo o que gasta o seu tempo em besteira, provavelmente já sabe a resposta. Reuniões desnecessárias, disputas sem sentido, burocracia, postura, lidar com os erros de outras pessoas, engarrafamentos, passatempos viciantes mas pouco gratificantes.\n\nHá duas maneiras pelas quais esse tipo de coisa entra em sua vida: ou é imposto a você ou o engana. 
Até certo ponto, você tem que suportar as besteiras impostas por circunstâncias. Você precisa ganhar dinheiro e ganhar dinheiro consiste principalmente em realizar tarefas. De fato, a lei da oferta e da demanda garante isso: quanto mais gratificante é algum tipo de trabalho, mais barato as pessoas o farão. Pode ser que menos tarefas sejam impostas a você do que você pensa, no entanto. Sempre houve uma corrente de pessoas que optam por sair da rotina padrão e vão viver em algum lugar onde as oportunidades são menores no sentido convencional, mas a vida parece mais autêntica. Isso pode se tornar mais comum.\n\nVocê pode reduzir a quantidade de besteira em sua vida sem precisar se mudar. A quantidade de tempo que você precisa gastar com besteiras varia entre empregadores. A maioria das grandes organizações (e muitas pequenas) está imersa nelas. Mas se você priorizar conscientemente a redução de besteiras acima de outros fatores, como dinheiro e prestígio, pode encontrar empregadores que irão desperdiçar menos do seu tempo.\n\nSe você é freelancer ou uma pequena empresa, pode fazer isso no nível de clientes individuais. Se você demitir ou evitar clientes tóxicos, pode diminuir a quantidade de besteira em sua vida mais do que diminui sua renda.\n\nMas, enquanto alguma quantidade de besteira é inevitavelmente imposta a você, a besteira que se infiltra em sua vida enganando-o não é culpa de ninguém, mas sua própria. E ainda assim, a besteira que você escolhe pode ser mais difícil de eliminar do que a besteira que é imposta a você. Coisas que o atraem para desperdiçar seu tempo precisam ser muito boas em enganá-lo. Um exemplo que será familiar para muitas pessoas é discutir online. Quando alguém contradiz você, está, de certa forma, atacando você. Às vezes bastante abertamente. Seu instinto quando é atacado é se defender. Mas, assim como muitos instintos, esse não foi projetado para o mundo em que vivemos agora. 
Contrariamente ao que parece, é melhor, na maioria das vezes, não se defender. Caso contrário, essas pessoas estão literalmente tomando sua vida. [2]\n\nDiscutir online é apenas incidentalmente viciante. Há coisas mais perigosas do que isso. Como já escrevi antes, um subproduto do progresso técnico é que as coisas que gostamos tendem a se tornar mais viciantes. Isso significa que precisaremos fazer um esforço consciente para evitar vícios, para nos colocarmos fora de nós mesmos e perguntarmos: \"é assim que quero estar gastando meu tempo?\"\n\nAlém de evitar besteiras, devemos procurar ativamente coisas que importam. Mas coisas diferentes importam para pessoas diferentes e a maioria precisa aprender o que importa para elas. Alguns são afortunados e percebem cedo que adoram matemática, cuidar de animais ou escrever e, então, descobrem uma maneira de passar muito tempo fazendo isso. Mas a maioria das pessoas começa com uma vida que é uma mistura de coisas que importam e coisas que não importam e só gradualmente aprende a distinguir entre elas.\n\nPara os jovens, especialmente, muita dessa confusão é induzida pelas situações artificiais em que se encontram. Na escola intermediária e na escola secundária, o que os outros estudantes acham de você parece ser a coisa mais importante do mundo. Mas quando você pergunta aos adultos o que eles fizeram de errado nessa idade, quase todos dizem que se importavam demais com o que os outros estudantes achavam deles.\n\nUma heurística para distinguir coisas que importam é perguntar a si mesmo se você se importará com elas no futuro. A coisa falsa que importa geralmente tem um pico afiado de parecer importar. É assim que o engana. A área sob a curva é pequena, mas sua forma se crava em sua consciência como uma alfinete.\n\nAs coisas que importam nem sempre são aquelas que as pessoas chamariam de \"importantes\". Tomar café com um amigo importa. 
Você não vai sentir depois que isso foi um desperdício de tempo.\n\nUma coisa ótima em ter crianças pequenas é que elas fazem você gastar tempo em coisas que importam: elas. Elas puxam sua manga enquanto você está olhando para o seu celular e dizem: \"você vai brincar comigo?\". E as chances são de que essa seja, de fato, a opção de minimização de besteira.\n\nSe a vida é curta, devemos esperar que sua brevidade nos surpreenda. E é exatamente isso que costuma acontecer. Você assume as coisas como garantidas e, então, elas desaparecem. Você acha que sempre pode escrever aquele livro, ou subir aquela montanha, ou o que quer que seja, e então percebe que a janela se fechou. As janelas mais tristes fecham quando outras pessoas morrem. Suas vidas também são curtas. Depois que minha mãe morreu, eu desejei ter passado mais tempo com ela. Eu vivi como se ela sempre estivesse lá. E, de maneira típica e discreta, ela incentivou essa ilusão. Mas era uma ilusão. Acho que muitas pessoas cometem o mesmo erro que eu cometi.\n\nA maneira usual de evitar ser surpreendido por algo é estar consciente disso. Quando a vida era mais precária, as pessoas costumavam ser conscientes da morte em um grau que agora pareceria um pouco morboso. Não tenho certeza do porquê, mas não parece ser a resposta certa lembrar constantemente da caveira pairando no ombro de todos. Talvez uma solução melhor seja olhar o problema pelo outro lado. Cultive o hábito de ser impaciente com as coisas que mais quer fazer. Não espere antes de subir aquela montanha ou escrever aquele livro ou visitar sua mãe. Você não precisa se lembrar constantemente por que não deve esperar. Apenas não espere.\n\nPosso pensar em mais duas coisas que alguém faz quando não tem muito de algo: tentar obter mais disso e saborear o que tem. Ambos fazem sentido aqui.\n\nComo você vive afeta por quanto tempo você vive. A maioria das pessoas poderia fazer melhor. 
Eu entre elas.\n\nMas você provavelmente pode obter ainda mais efeito prestando mais atenção ao tempo que tem. É fácil deixar os dias passarem voando. O \"fluxo\" que as pessoas imaginativas adoram tanto tem um parente mais sombrio que impede você de pausar para saborear a vida no meio da rotina diária de tarefas e alarmes. Uma das coisas mais impressionantes que já li não estava em um livro, mas no título de um: Burning the Days (Queimando os Dias), de James Salter.\n\nÉ possível diminuir um pouco o tempo. Eu melhorei nisso. As crianças ajudam. Quando você tem crianças pequenas, há muitos momentos tão perfeitos que você não consegue deixar de notar.\n\nTambém ajuda sentir que você tirou tudo de alguma experiência. O motivo pelo qual estou triste por minha mãe não é apenas porque sinto falta dela, mas porque penso em tudo o que poderíamos ter feito e não fizemos. Meu filho mais velho vai fazer 7 anos em breve. E, embora sinta falta da versão dele de 3 anos, pelo menos não tenho nenhum arrependimento pelo que poderia ter sido. Tivemos o melhor tempo que um pai e um filho de 3 anos poderiam ter.\n\nCorte implacavelmente as besteiras, não espere para fazer coisas que importam e saboreie o tempo que tem. É isso o que você faz quando a vida é curta. \n\n---\n\nNotas\n\n[1] No começo, eu não gostei que a palavra que me veio à mente tivesse outros significados. Mas então percebi que os outros significados estão bastante relacionados. Besteira no sentido de coisas em que você desperdiça seu tempo é bastante parecida com besteira intelectual.\n\n[2] Escolhi esse exemplo de forma deliberada como uma nota para mim mesmo. Eu sou atacado muito online. As pessoas contam as mentiras mais loucas sobre mim. 
E até agora eu fiz um trabalho bastante medíocre de suprimir a inclinação natural do ser humano de dizer \"Ei, isso não é verdade!\".\n\nObrigado a Jessica Livingston e Geoff Ralston por ler rascunhos deste."}},"path":"pg-vb-pt-br.md","author":"Chat GPT","img":"https://i.insider.com/5e6bb1bd84159f39f736ee32?width=2000&format=jpeg&auto=webp","date":"11/29/2022"},{"tags":[],"body":{"en":{"content":"Maximus Tecidos, a prominent name in the textile industry, faced critical challenges with their existing e-commerce platform that hindered their operational efficiency and customer experience. This article outlines how Maximus Tecidos, in collaboration with Agência 2B Digital, successfully transitioned to deco.cx, resulting in significant improvements in site performance and management ease.\n\n### The Challenges\n\nPrior to the migration, Maximus Tecidos encountered several issues:\n\n- **Difficulty in Managing Site Content:** The existing CMS platform didn't allow for quick content updates.\n- **Performance Issues:** The website's performance, particularly page load times, was subpar, affecting user experience and engagement.\n\n### The Solution\n\nAgência 2B Digital, leveraging their longstanding partnership with Maximus Tecidos, facilitated a strategic transition to deco.cx. 
This move was designed to address the critical pain points and provide a more efficient platform.\n\n- **Enhanced Manageability:** deco.cx offers a user-friendly platform, simplifying the management of configurations and site maintenance.\n- **Improved Performance:** The new platform significantly boosted site performance, ensuring faster load times and a smoother user experience.\n\nThe migration occurred on December 5, 2023.\n\n### The Results\n\n- **Keyword Index Stability:** Post-migration, there was no drop in the number of indexed keywords.\n- **Improved SEO Metrics:** There was an increase in the Top 3 keyword rankings and the total number of indexed keywords.\n- **Increased Conversion Rates:** The improved site performance contributed to a notable rise in conversion rates.\n\n### Conclusion\n\nFor businesses seeking to optimize their digital operations and improve site performance, the experience of Maximus Tecidos offers valuable insights and a proven path to success. The partnership with Agência 2B Digital and the transition to deco.cx have empowered Maximus Tecidos to overcome their e-commerce challenges and achieve substantial performance gains.","title":"Maximus Tecidos, 2B Digital & deco.cx","descr":"A Seamless Transition to Enhanced E-commerce Performance","seo":{"title":"Maximus Tecidos, 2B Digital & deco.cx: A Seamless Transition to Enhanced E-commerce Performance","description":"This article outlines how Maximus Tecidos, in collaboration with Agência 2B Digital, successfully transitioned to deco.cx, resulting in significant improvements in site performance and management ease.","image":"https://ozksgdmyrqcxcwhnbepg.supabase.co/storage/v1/object/public/assets/530/341e8759-7fbd-42fd-821b-5382f7cc053a"}},"pt":{"title":"Maximus Tecidos, 2B Digital & deco.cx","descr":"Uma Transição Suave para Melhor Desempenho no E-commerce","content":"A Maximus Tecidos, um nome proeminente na indústria têxtil, enfrentava desafios críticos com sua plataforma de 
e-commerce existente, que prejudicavam sua eficiência operacional e a experiência do cliente. Este artigo descreve como a Maximus Tecidos, em colaboração com a Agência 2B Digital, realizou com sucesso a transição para a deco.cx, resultando em melhorias significativas no desempenho do site e na facilidade de gestão.\n\n### Os Desafios\n\nAntes da migração, a Maximus Tecidos encontrava vários problemas:\n\n- **Dificuldade em Gerenciar o Conteúdo do Site:** A plataforma CMS existente não permitia atualizações rápidas de conteúdo.\n- **Problemas de Desempenho:** O desempenho do site, especialmente os tempos de carregamento de página, era insatisfatório, afetando a experiência do usuário e o engajamento.\n\n### A Solução\n\nA Agência 2B Digital, aproveitando sua parceria de longa data com a Maximus Tecidos, facilitou uma transição estratégica para a deco.cx. Este movimento foi projetado para abordar os pontos críticos e fornecer uma plataforma mais eficiente.\n\n- **Maior Facilidade de Gestão:** A deco.cx oferece uma plataforma amigável, simplificando a gestão de configurações e a manutenção do site.\n- **Desempenho Melhorado:** A nova plataforma melhorou significativamente o desempenho do site, garantindo tempos de carregamento mais rápidos e uma experiência de usuário mais suave.\n\nA migração ocorreu em 5 de dezembro de 2023.\n\n### Os Resultados\n\n- **Estabilidade no Índice de Palavras-Chave:** Após a migração, não houve queda no número de palavras-chave indexadas.\n- **Melhoria nas Métricas de SEO:** Houve um aumento nas classificações das palavras-chave no Top 3 e no número total de palavras-chave indexadas.\n- **Aumento nas Taxas de Conversão:** O melhor desempenho do site contribuiu para um aumento notável nas taxas de conversão.\n\n### Conclusão\n\nPara empresas que buscam otimizar suas operações digitais e melhorar o desempenho do site, a experiência da Maximus Tecidos oferece insights valiosos e um caminho comprovado para o sucesso. 
A parceria com a Agência 2B Digital e a transição para a deco.cx permitiram que a Maximus Tecidos superasse seus desafios no e-commerce e alcançasse ganhos substanciais de desempenho.","seo":{"title":"Maximus Tecidos, 2B Digital & deco.cx: Uma Transição Suave para Melhor Desempenho no E-commerce","description":"Este artigo descreve como a Maximus Tecidos, em colaboração com a Agência 2B Digital, realizou com sucesso a transição para a deco.cx, resultando em melhorias significativas no desempenho do site e na facilidade de gestão","image":"https://ozksgdmyrqcxcwhnbepg.supabase.co/storage/v1/object/public/assets/530/72426ea0-ebee-45a1-9701-79c7cd007bb4"}}},"path":"maximus-tecidos","date":"05/08/2024","author":"Maria Cecília Marques","img":"https://ozksgdmyrqcxcwhnbepg.supabase.co/storage/v1/object/public/assets/530/8903bdfe-fda3-4a79-8b3b-544f64e8f9d6"}]},"__resolveType":"site/loaders/blogPostLoader.ts"}

\ No newline at end of file

diff --git a/manifest.gen.ts b/manifest.gen.ts

index 222d6f5e..59380044 100644

--- a/manifest.gen.ts

+++ b/manifest.gen.ts

@@ -80,117 +80,117 @@ import * as $$$$$$54 from "./sections/FreePlan.tsx";

import * as $$$$$$55 from "./sections/General/Spacer.tsx";

import * as $$$$$$56 from "./sections/Glossary/GlossaryPost.tsx";

import * as $$$$$$57 from "./sections/Glossary/GlossaryPostList.tsx";

-import * as $$$$$$58 from "./sections/Glossary/Search.tsx";

-import * as $$$$$$168 from "./sections/Glossary/Hero.tsx";

-import * as $$$$$$59 from "./sections/Hackathon/Challenge.tsx";

-import * as $$$$$$60 from "./sections/Hackathon/Footer.tsx";

-import * as $$$$$$61 from "./sections/Hackathon/HeroAndHeader.tsx";

-import * as $$$$$$62 from "./sections/Hackathon/Intro.tsx";

-import * as $$$$$$63 from "./sections/Hackathon/Partners.tsx";

-import * as $$$$$$64 from "./sections/Hackathon/Times.tsx";

-import * as $$$$$$65 from "./sections/Head.tsx";

-import * as $$$$$$66 from "./sections/Header.tsx";

-import * as $$$$$$67 from "./sections/HelpBlogPosts.tsx";

-import * as $$$$$$68 from "./sections/HelpFaq.tsx";

-import * as $$$$$$69 from "./sections/HeroBanner.tsx";

-import * as $$$$$$70 from "./sections/Home/Brands.tsx";

-import * as $$$$$$71 from "./sections/Home/BuildShowcase.tsx";

-import * as $$$$$$72 from "./sections/Home/DoubleBrands.tsx";

-import * as $$$$$$73 from "./sections/Home/FAQ.tsx";

-import * as $$$$$$74 from "./sections/Home/Features.tsx";

-import * as $$$$$$75 from "./sections/Home/Header.tsx";

-import * as $$$$$$76 from "./sections/Home/Hero.tsx";

-import * as $$$$$$77 from "./sections/Home/MainVideo.tsx";

-import * as $$$$$$78 from "./sections/Home/Results.tsx";

-import * as $$$$$$79 from "./sections/Home/Sale.tsx";

-import * as $$$$$$80 from "./sections/Home/SitesCarousel.tsx";

-import * as $$$$$$81 from "./sections/Home/StartingCards.tsx";

-import * as $$$$$$82 from "./sections/Home/Testimonials.tsx";

-import * as $$$$$$83 from "./sections/Home/TextHero.tsx";

-import * as $$$$$$84 from "./sections/Home/TextSection.tsx";

-import * as $$$$$$85 from "./sections/Home/TextSection2.tsx";

-import * as $$$$$$86 from "./sections/Home/TrustSignals.tsx";

-import * as $$$$$$87 from "./sections/HowCanWeHelp.tsx";

-import * as $$$$$$88 from "./sections/Hub/Advantage.tsx";

-import * as $$$$$$89 from "./sections/Hub/Agencies.tsx";

-import * as $$$$$$90 from "./sections/Hub/Creator.tsx";

-import * as $$$$$$91 from "./sections/Hub/Heading.tsx";

-import * as $$$$$$92 from "./sections/Hub/Integrations.tsx";

-import * as $$$$$$93 from "./sections/Hub/SectionSubtitle.tsx";

-import * as $$$$$$94 from "./sections/Hub/SectionTitle.tsx";

-import * as $$$$$$95 from "./sections/Hub/TemplatePreview.tsx";

-import * as $$$$$$96 from "./sections/Hub/TemplatesGrid.tsx";

-import * as $$$$$$97 from "./sections/ImpactCalculator.tsx";

-import * as $$$$$$98 from "./sections/Integration.tsx";

-import * as $$$$$$99 from "./sections/JoinOurCommunity.tsx";

-import * as $$$$$$100 from "./sections/Links.tsx";

-import * as $$$$$$101 from "./sections/Live Projects/Hero.tsx";

-import * as $$$$$$102 from "./sections/Live Projects/LiveProjects.tsx";

-import * as $$$$$$103 from "./sections/Live Projects/ProjectsGrid.tsx";

-import * as $$$$$$104 from "./sections/Live Projects/ProjectsSlider.tsx";

-import * as $$$$$$105 from "./sections/Live Projects/TextHero.tsx";

-import * as $$$$$$106 from "./sections/MainBanner.tsx";

-import * as $$$$$$107 from "./sections/Markdown.tsx";

-import * as $$$$$$108 from "./sections/MarkdownContent.tsx";

-import * as $$$$$$130 from "./sections/New Landing/CarouselLinks.tsx";

-import * as $$$$$$131 from "./sections/New Landing/GetSiteDone.tsx";

-import * as $$$$$$132 from "./sections/New Landing/Investors.tsx";

-import * as $$$$$$109 from "./sections/NRF/BlogPosts.tsx";

-import * as $$$$$$110 from "./sections/NRF/Brands.tsx";

-import * as $$$$$$111 from "./sections/NRF/CallToAction.tsx";

-import * as $$$$$$112 from "./sections/NRF/Contact.tsx";

-import * as $$$$$$113 from "./sections/NRF/DecoFooter.tsx";

-import * as $$$$$$114 from "./sections/NRF/Editor.tsx";

-import * as $$$$$$115 from "./sections/NRF/EditorMobile.tsx";

-import * as $$$$$$116 from "./sections/NRF/Features.tsx";

-import * as $$$$$$117 from "./sections/NRF/FeaturesWithImage.tsx";

-import * as $$$$$$118 from "./sections/NRF/Grid.tsx";

-import * as $$$$$$119 from "./sections/NRF/Header.tsx";

-import * as $$$$$$120 from "./sections/NRF/Hero.tsx";

-import * as $$$$$$121 from "./sections/NRF/HeroFlat.tsx";

-import * as $$$$$$122 from "./sections/NRF/ImageSection.tsx";

-import * as $$$$$$123 from "./sections/NRF/PlatformContact.tsx";

-import * as $$$$$$124 from "./sections/NRF/Pricing.tsx";

-import * as $$$$$$125 from "./sections/NRF/PricingTable.tsx";

-import * as $$$$$$126 from "./sections/NRF/PricingValue.tsx";

-import * as $$$$$$127 from "./sections/NRF/Questions.tsx";

-import * as $$$$$$128 from "./sections/NRF/TextLines.tsx";

-import * as $$$$$$129 from "./sections/NRF/Thanks.tsx";

-import * as $$$$$$133 from "./sections/OnThisPage.tsx";

-import * as $$$$$$134 from "./sections/Page.tsx";

-import * as $$$$$$135 from "./sections/PageContest/CallToAction.tsx";

-import * as $$$$$$136 from "./sections/PageContest/Faq.tsx";

-import * as $$$$$$137 from "./sections/PageContest/Features.tsx";

-import * as $$$$$$138 from "./sections/PageContest/Hero.tsx";

-import * as $$$$$$139 from "./sections/PageContest/HowItWorks.tsx";

-import * as $$$$$$140 from "./sections/PageContest/SectionHeader.tsx";

-import * as $$$$$$141 from "./sections/Partner.tsx";

-import * as $$$$$$142 from "./sections/PopularDocuments.tsx";

-import * as $$$$$$143 from "./sections/PopularTopics.tsx";

-import * as $$$$$$144 from "./sections/Pricing.tsx";

-import * as $$$$$$145 from "./sections/PricingTiers.tsx";

-import * as $$$$$$146 from "./sections/PricingValue.tsx";

-import * as $$$$$$147 from "./sections/ProductHuntCTA.tsx";

-import * as $$$$$$148 from "./sections/QuillText.tsx";

-import * as $$$$$$149 from "./sections/RankingAnalyze.tsx";

-import * as $$$$$$150 from "./sections/RankingHeader.tsx";

-import * as $$$$$$151 from "./sections/RankingList.tsx";

-import * as $$$$$$152 from "./sections/ReCAPTCHA.tsx";

-import * as $$$$$$153 from "./sections/RichLetter.tsx";

-import * as $$$$$$154 from "./sections/Roadmap.tsx";

-import * as $$$$$$155 from "./sections/SectionList.tsx";

-import * as $$$$$$156 from "./sections/Sidebar.tsx";

-import * as $$$$$$157 from "./sections/Test.tsx";

-import * as $$$$$$158 from "./sections/Thanks.tsx";

-import * as $$$$$$159 from "./sections/Theme/Theme.tsx";

-import * as $$$$$$160 from "./sections/Theme/ThemeHints.tsx";

-import * as $$$$$$161 from "./sections/TripletBanner.tsx";

-import * as $$$$$$167 from "./sections/tuju/Form.tsx";

-import * as $$$$$$162 from "./sections/WasThisPageHelpful.tsx";

-import * as $$$$$$163 from "./sections/Webinar/Content.tsx";

-import * as $$$$$$164 from "./sections/Webinar/Hero.tsx";

-import * as $$$$$$165 from "./sections/Whatsapp.tsx";

-import * as $$$$$$166 from "./sections/Why.tsx";

+import * as $$$$$$58 from "./sections/Glossary/Hero.tsx";

+import * as $$$$$$59 from "./sections/Glossary/Search.tsx";

+import * as $$$$$$60 from "./sections/Hackathon/Challenge.tsx";

+import * as $$$$$$61 from "./sections/Hackathon/Footer.tsx";

+import * as $$$$$$62 from "./sections/Hackathon/HeroAndHeader.tsx";

+import * as $$$$$$63 from "./sections/Hackathon/Intro.tsx";

+import * as $$$$$$64 from "./sections/Hackathon/Partners.tsx";

+import * as $$$$$$65 from "./sections/Hackathon/Times.tsx";

+import * as $$$$$$66 from "./sections/Head.tsx";

+import * as $$$$$$67 from "./sections/Header.tsx";

+import * as $$$$$$68 from "./sections/HelpBlogPosts.tsx";

+import * as $$$$$$69 from "./sections/HelpFaq.tsx";

+import * as $$$$$$70 from "./sections/HeroBanner.tsx";

+import * as $$$$$$71 from "./sections/Home/Brands.tsx";

+import * as $$$$$$72 from "./sections/Home/BuildShowcase.tsx";

+import * as $$$$$$73 from "./sections/Home/DoubleBrands.tsx";

+import * as $$$$$$74 from "./sections/Home/FAQ.tsx";

+import * as $$$$$$75 from "./sections/Home/Features.tsx";

+import * as $$$$$$76 from "./sections/Home/Header.tsx";

+import * as $$$$$$77 from "./sections/Home/Hero.tsx";

+import * as $$$$$$78 from "./sections/Home/MainVideo.tsx";

+import * as $$$$$$79 from "./sections/Home/Results.tsx";

+import * as $$$$$$80 from "./sections/Home/Sale.tsx";

+import * as $$$$$$81 from "./sections/Home/SitesCarousel.tsx";