diff --git a/README.md b/README.md

index d46be6600..dd5fbeb46 100644

--- a/README.md

+++ b/README.md

@@ -1,13 +1,13 @@

-* [Overview](#overview)

-* [Where to Start](#where-to-start)

-* [Confluent Cloud](#confluent-cloud)

-* [Stream Processing](#stream-processing)

-* [Data Pipelines](#data-pipelines)

-* [Confluent Platform](#confluent-platform)

-* [Build Your Own](#build-your-own)

-* [Additional Demos](#additional-demos)

+- [Overview](#overview)

+- [Where to start](#where-to-start)

+- [Confluent Cloud](#confluent-cloud)

+- [Stream Processing](#stream-processing)

+- [Data Pipelines](#data-pipelines)

+- [Confluent Platform](#confluent-platform)

+- [Build Your Own](#build-your-own)

+- [Additional Demos](#additional-demos)

# Overview

@@ -61,17 +61,17 @@ You can find the documentation and instructions for all Confluent Cloud demos at

# Confluent Platform

-| Demo | Local | Docker | Description

-| ------------------------------------------ | ----- | ------ | ---------------------------------------------------------------------------

-| [Avro](clients/README.md) | [Y](clients/README.md) | N | Client applications using Avro and Confluent Schema Registry

-| [CP Demo](https://github.com/confluentinc/cp-demo) | N | [Y](https://github.com/confluentinc/cp-demo) | [Confluent Platform demo](https://docs.confluent.io/platform/current/tutorials/cp-demo/docs/index.html?utm_source=github&utm_medium=demo&utm_campaign=ch.examples_type.community_content.top) (`cp-demo`) with a playbook for Kafka event streaming ETL deployments

-| [CP Demo](https://github.com/confluentinc/cp-demo) | N | [Y](https://github.com/confluentinc/cp-demo) | [Confluent Platform demo](https://docs.confluent.io/platform/current/tutorials/cp-demo/docs/index.html?utm_source=github&utm_medium=demo&utm_campaign=ch.examples_type.community_content.top) (`cp-demo`) with a playbook for Kafka event streaming ETL deployments

-| [Kubernetes](kubernetes/README.md) | N | [Y](kubernetes/README.md) | Demonstrations of Confluent Platform deployments using the [Confluent Operator](https://docs.confluent.io/operator/current/overview.html?utm_source=github&utm_medium=demo&utm_campaign=ch.examples_type.community_content.top)

-| [Kubernetes](kubernetes/README.md) | N | [Y](kubernetes/README.md) | Demonstrations of Confluent Platform deployments using the [Confluent Operator](https://docs.confluent.io/operator/current/overview.html?utm_source=github&utm_medium=demo&utm_campaign=ch.examples_type.community_content.top)

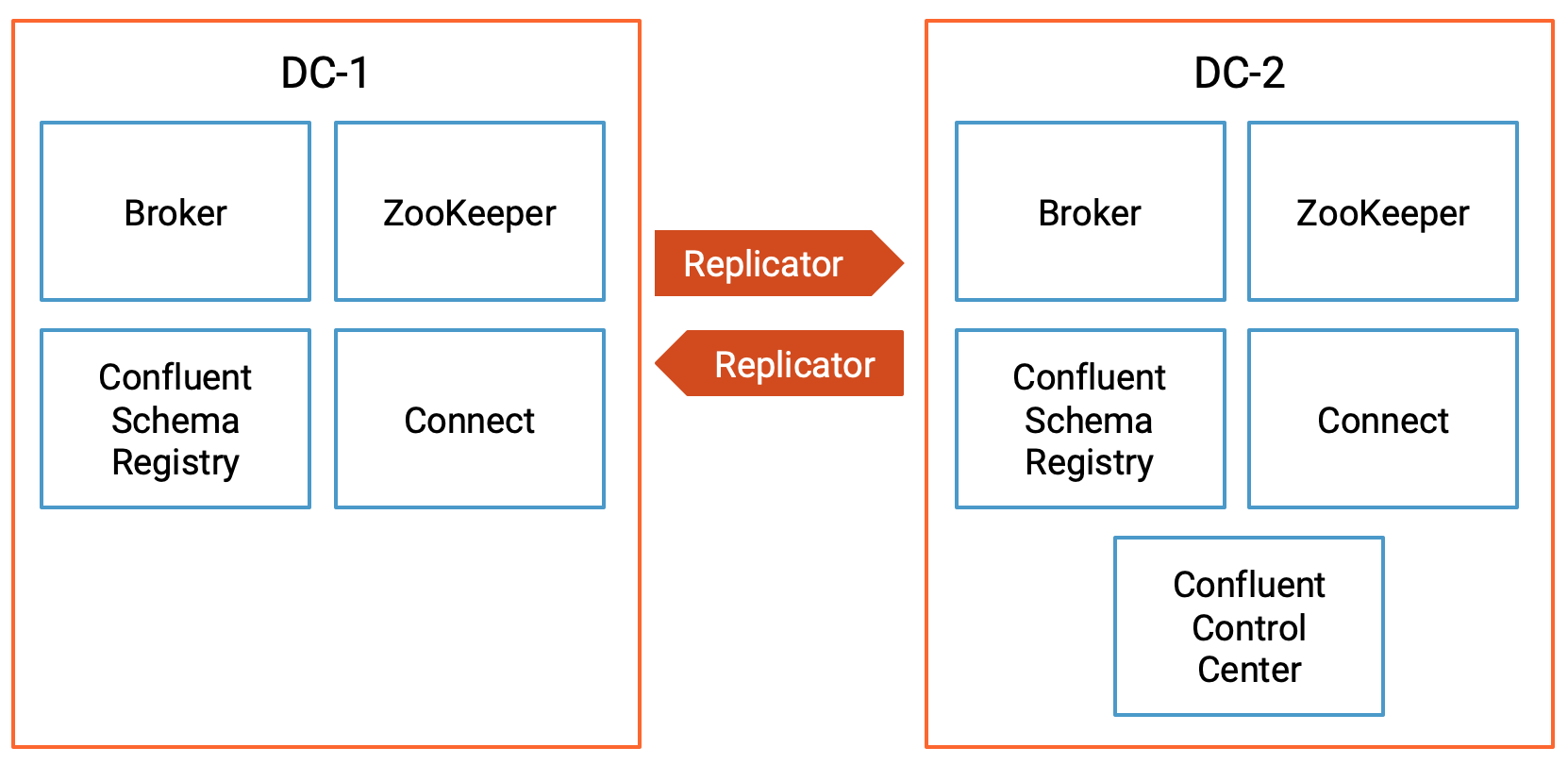

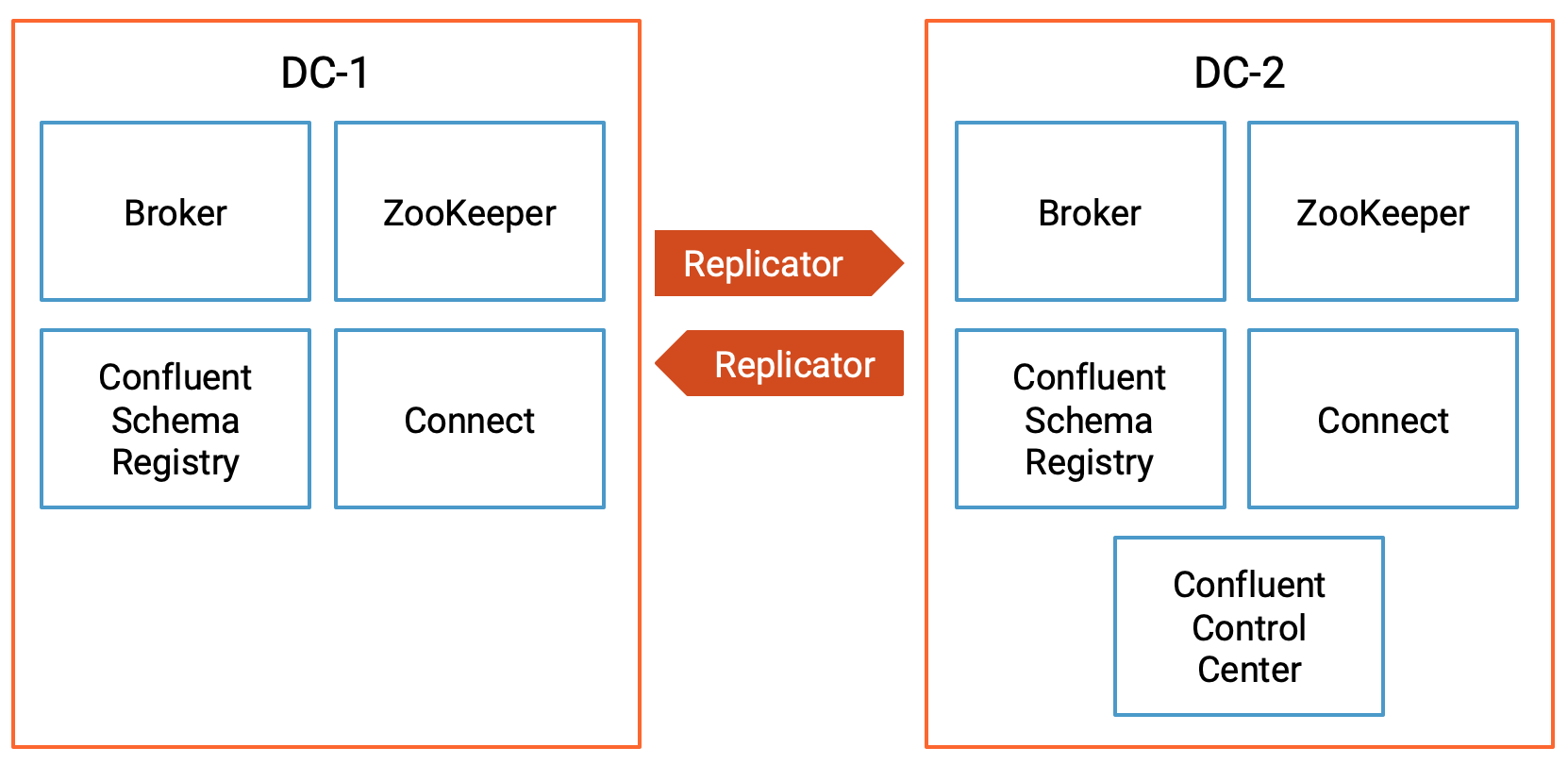

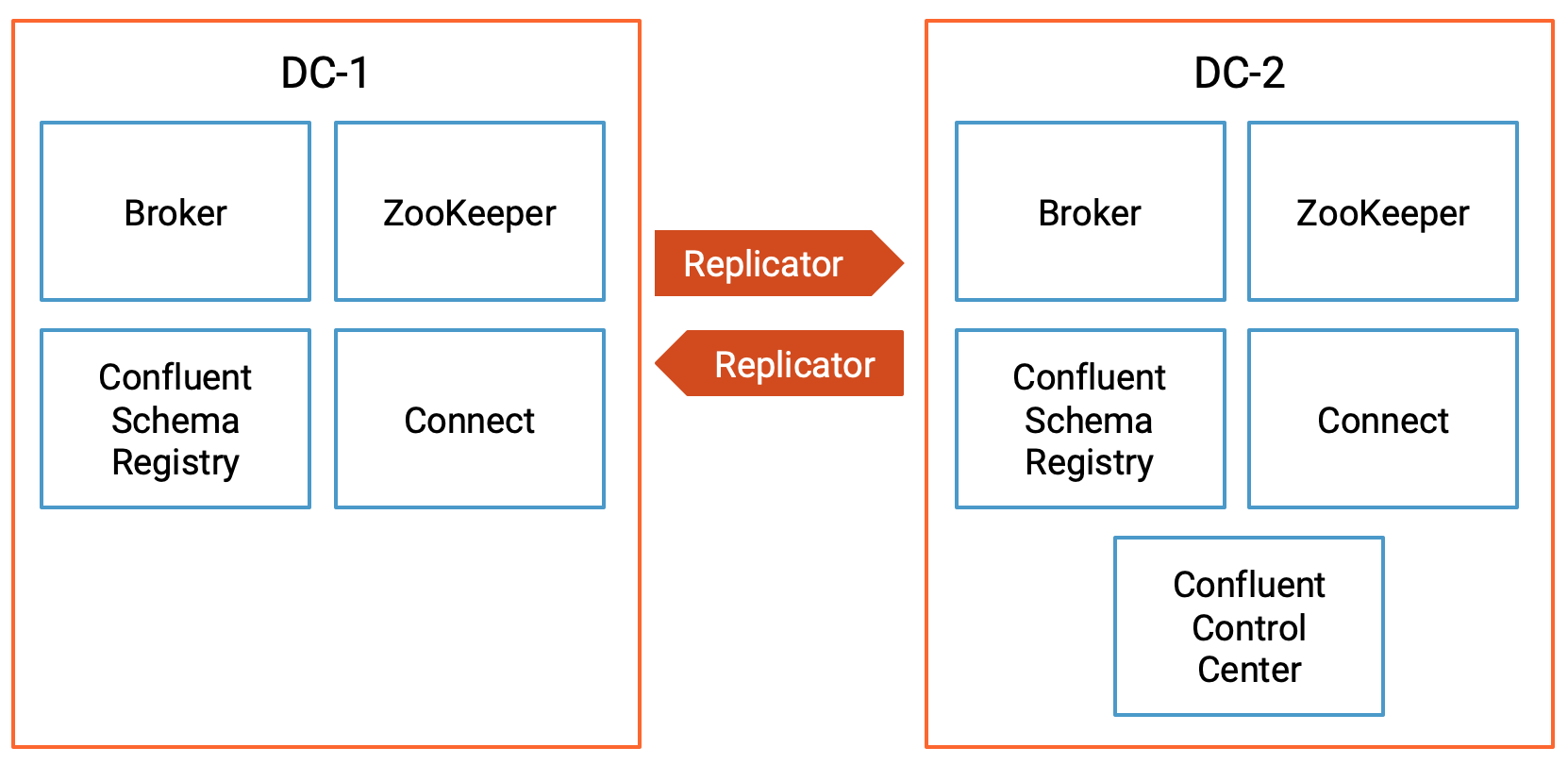

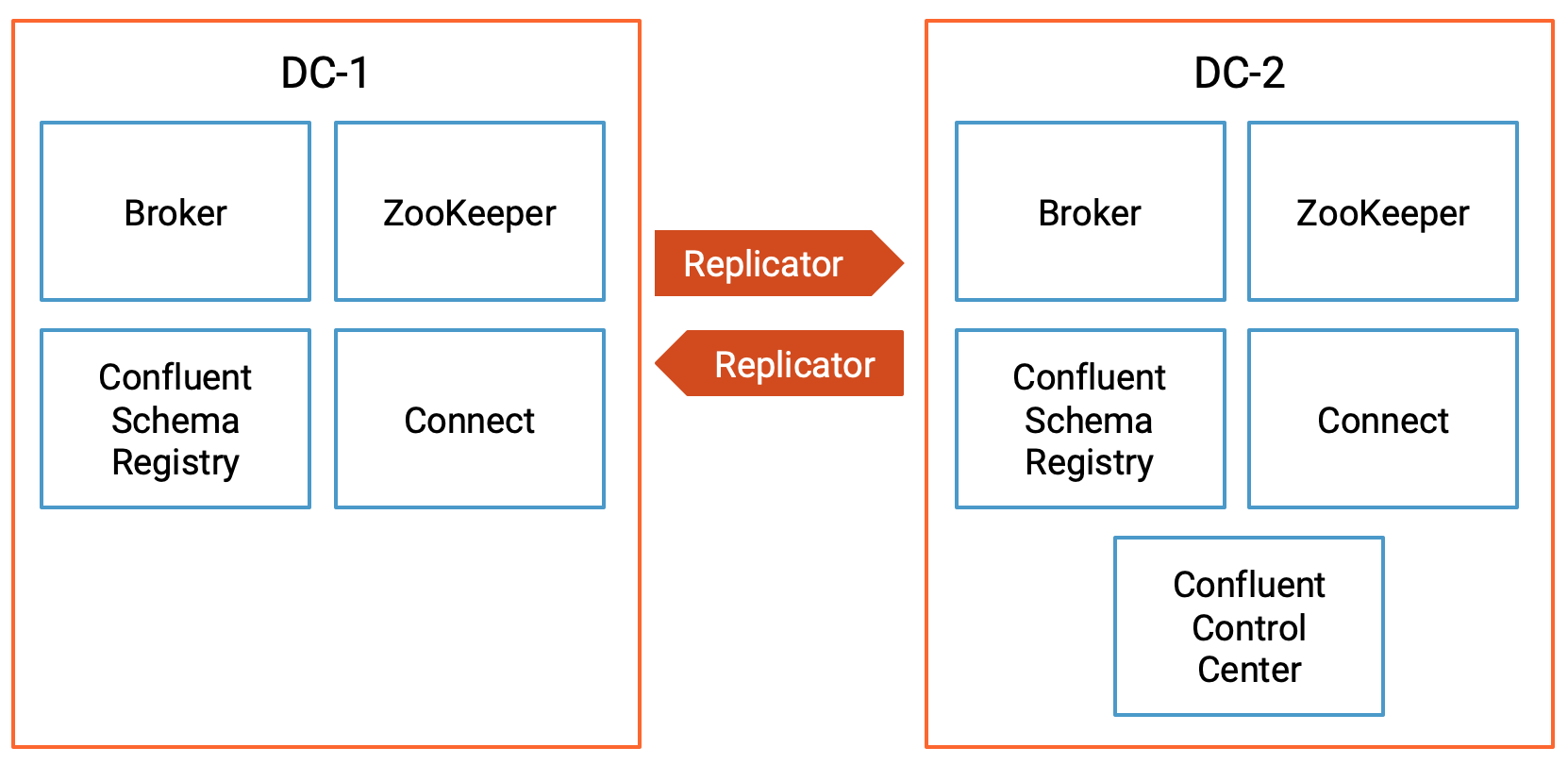

-| [Multi Datacenter](multi-datacenter/README.md) | N | [Y](multi-datacenter/README.md) | Active-active multi-datacenter design with two instances of Confluent Replicator copying data bidirectionally between the datacenters

-| [Multi Datacenter](multi-datacenter/README.md) | N | [Y](multi-datacenter/README.md) | Active-active multi-datacenter design with two instances of Confluent Replicator copying data bidirectionally between the datacenters

-| [Multi-Region Clusters](multiregion/README.md) | N | [Y](multiregion/README.md) | Multi-Region clusters (MRC) with follower fetching, observers, and replica placement

-| [Multi-Region Clusters](multiregion/README.md) | N | [Y](multiregion/README.md) | Multi-Region clusters (MRC) with follower fetching, observers, and replica placement

-| [Quickstart](cp-quickstart/README.md) | [Y](cp-quickstart/README.md) | [Y](cp-quickstart/README.md) | Automated version of the [Confluent Quickstart](https://docs.confluent.io/platform/current/quickstart/index.html?utm_source=github&utm_medium=demo&utm_campaign=ch.examples_type.community_content.top): for Confluent Platform on local install or Docker, community version, and Confluent Cloud

-| [Quickstart](cp-quickstart/README.md) | [Y](cp-quickstart/README.md) | [Y](cp-quickstart/README.md) | Automated version of the [Confluent Quickstart](https://docs.confluent.io/platform/current/quickstart/index.html?utm_source=github&utm_medium=demo&utm_campaign=ch.examples_type.community_content.top): for Confluent Platform on local install or Docker, community version, and Confluent Cloud

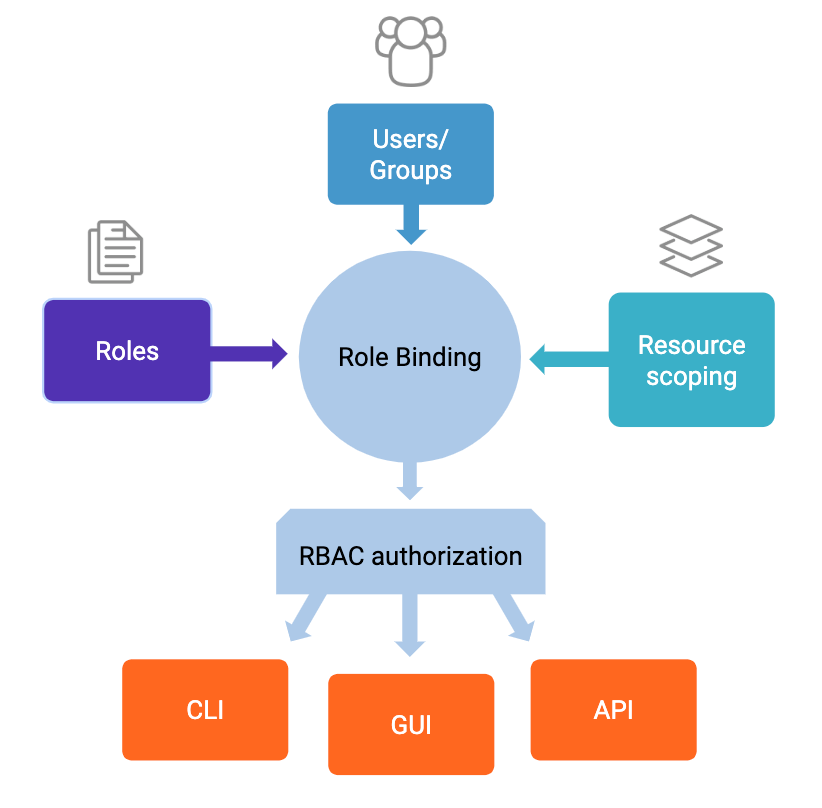

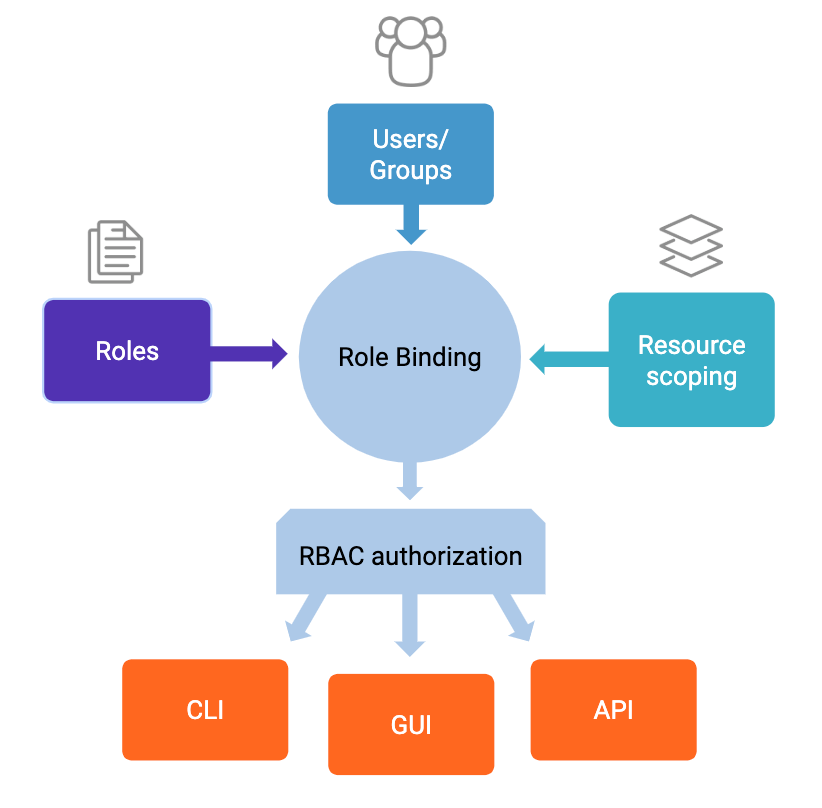

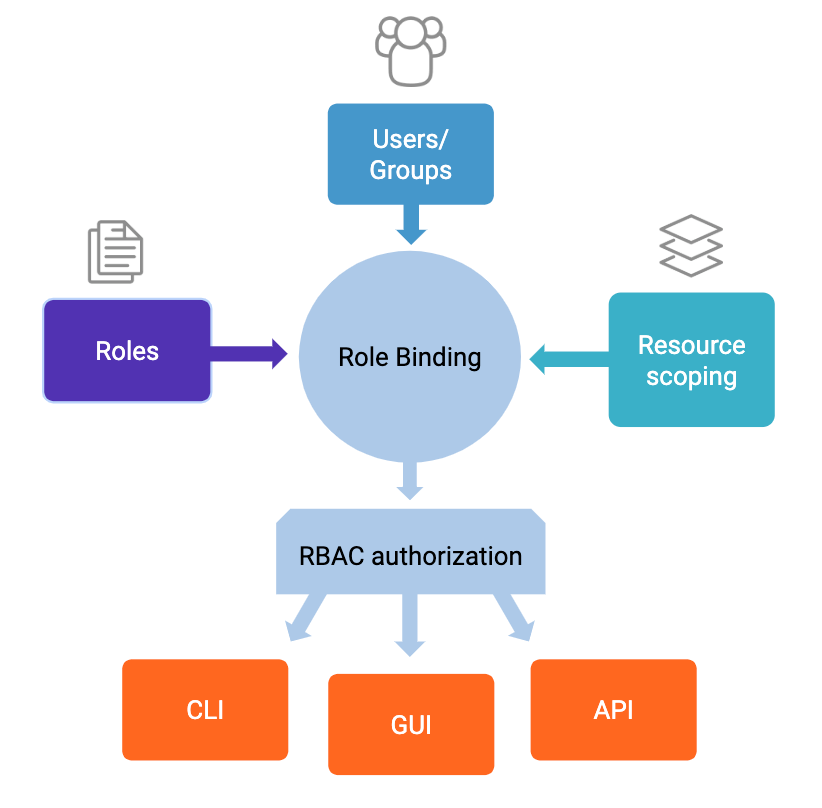

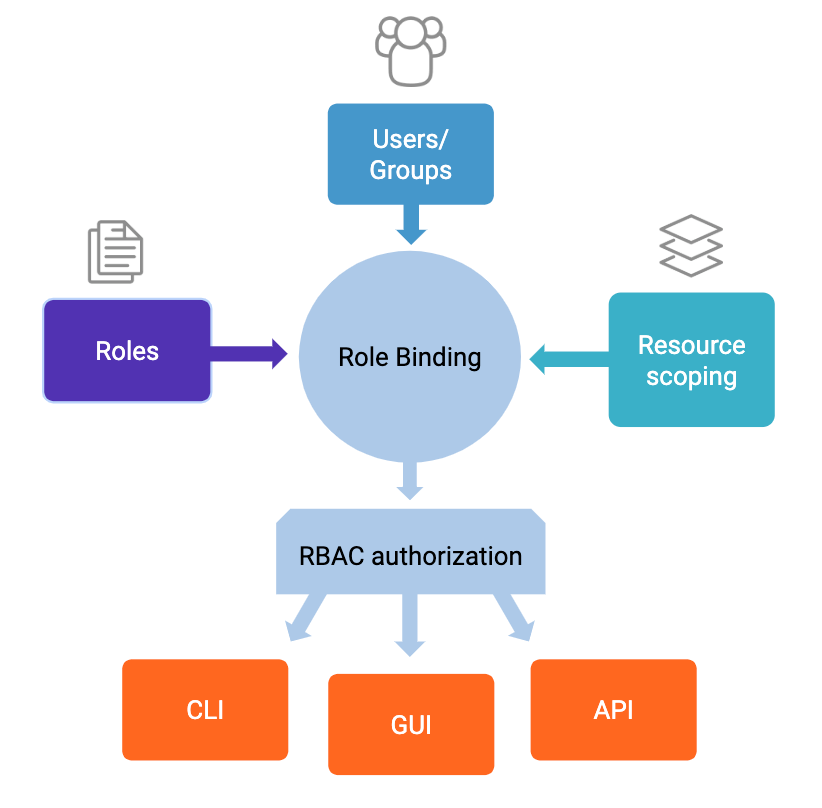

-| [Role-Based Access Control](security/rbac/README.md) | Y | Y | Role-based Access Control (RBAC) provides granular privileges for users and service accounts

-| [Role-Based Access Control](security/rbac/README.md) | Y | Y | Role-based Access Control (RBAC) provides granular privileges for users and service accounts

-| [Replicator Security](replicator-security/README.md) | N | [Y](replicator-security/README.md) | Demos of various security configurations supported by Confluent Replicator and examples of how to implement them

-| [Replicator Security](replicator-security/README.md) | N | [Y](replicator-security/README.md) | Demos of various security configurations supported by Confluent Replicator and examples of how to implement them

-

+| Demo | Local | Docker | Description |

+|------------------------------------------------------|------------------------------|----------------------------------------------|------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

+| [Avro](clients/README.md) | [Y](clients/README.md) | N | Client applications using Avro and Confluent Schema Registry

-

+| Demo | Local | Docker | Description |

+|------------------------------------------------------|------------------------------|----------------------------------------------|------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

+| [Avro](clients/README.md) | [Y](clients/README.md) | N | Client applications using Avro and Confluent Schema Registry

|

+| [CP Demo](https://github.com/confluentinc/cp-demo) | N | [Y](https://github.com/confluentinc/cp-demo) | [Confluent Platform demo](https://docs.confluent.io/platform/current/tutorials/cp-demo/docs/index.html?utm_source=github&utm_medium=demo&utm_campaign=ch.examples_type.community_content.top) (`cp-demo`) with a playbook for Kafka event streaming ETL deployments

|

+| [CP Demo](https://github.com/confluentinc/cp-demo) | N | [Y](https://github.com/confluentinc/cp-demo) | [Confluent Platform demo](https://docs.confluent.io/platform/current/tutorials/cp-demo/docs/index.html?utm_source=github&utm_medium=demo&utm_campaign=ch.examples_type.community_content.top) (`cp-demo`) with a playbook for Kafka event streaming ETL deployments

|

+| [Kubernetes](kubernetes/README.md) | N | [Y](kubernetes/README.md) | Demonstrations of Confluent Platform deployments using the [Confluent Operator](https://docs.confluent.io/operator/current/overview.html?utm_source=github&utm_medium=demo&utm_campaign=ch.examples_type.community_content.top)

|

+| [Kubernetes](kubernetes/README.md) | N | [Y](kubernetes/README.md) | Demonstrations of Confluent Platform deployments using the [Confluent Operator](https://docs.confluent.io/operator/current/overview.html?utm_source=github&utm_medium=demo&utm_campaign=ch.examples_type.community_content.top)

|

+| [Multi Datacenter](multi-datacenter/README.md) | N | [Y](multi-datacenter/README.md) | Active-active multi-datacenter design with two instances of Confluent Replicator copying data bidirectionally between the datacenters

|

+| [Multi Datacenter](multi-datacenter/README.md) | N | [Y](multi-datacenter/README.md) | Active-active multi-datacenter design with two instances of Confluent Replicator copying data bidirectionally between the datacenters

|

+| [Multi-Region Clusters](multiregion/README.md) | N | [Y](multiregion/README.md) | Multi-Region clusters (MRC) with follower fetching, observers, and replica placement

|

+| [Multi-Region Clusters](multiregion/README.md) | N | [Y](multiregion/README.md) | Multi-Region clusters (MRC) with follower fetching, observers, and replica placement

|

+| [Quickstart](cp-quickstart/README.md) | [Y](cp-quickstart/README.md) | [Y](cp-quickstart/README.md) | Automated version of the [Confluent Quickstart](https://docs.confluent.io/platform/current/quickstart/index.html?utm_source=github&utm_medium=demo&utm_campaign=ch.examples_type.community_content.top): for Confluent Platform on local install or Docker, community version, and Confluent Cloud

|

+| [Quickstart](cp-quickstart/README.md) | [Y](cp-quickstart/README.md) | [Y](cp-quickstart/README.md) | Automated version of the [Confluent Quickstart](https://docs.confluent.io/platform/current/quickstart/index.html?utm_source=github&utm_medium=demo&utm_campaign=ch.examples_type.community_content.top): for Confluent Platform on local install or Docker, community version, and Confluent Cloud

|

+| [Role-Based Access Control](security/rbac/README.md) | Y | Y | Role-based Access Control (RBAC) provides granular privileges for users and service accounts

|

+| [Role-Based Access Control](security/rbac/README.md) | Y | Y | Role-based Access Control (RBAC) provides granular privileges for users and service accounts

|

+| [Replicator Security](replicator-security/README.md) | N | [Y](replicator-security/README.md) | Demos of various security configurations supported by Confluent Replicator and examples of how to implement them

|

+| [Replicator Security](replicator-security/README.md) | N | [Y](replicator-security/README.md) | Demos of various security configurations supported by Confluent Replicator and examples of how to implement them

|

+| [Instant KRaft](instant-kraft/README.md) | Y | [Y](instant-kraft/README.md) | Get started with KRaft mode in Kafka in just a few minutes. Explore KRaft metadata. Note that KRaft is not yet supported in Confluent Platform.

# Build Your Own

diff --git a/instant-kraft/README.md b/instant-kraft/README.md

new file mode 100644

index 000000000..24fe848d9

--- /dev/null

+++ b/instant-kraft/README.md

@@ -0,0 +1,56 @@

+# Instant KRaft Demo

+Let's make some delicious instant KRaft!

+ ___

+ .'O o'-._

+ / O o_.-`|

+ /O_.-' O |

+ |O o O .-`

+ |o O_.-'

+ '--`

+

+## Step 1

+```

+$ git clone https://github.com/confluentinc/examples.git && cd examples/instant-kraft

+

+$ docker compose up

+```

+

+That's it! One container, one process, doing both the broker and controller work. No ZooKeeper.

+The key server configuration property for enabling KRaft is `process.roles`. Here, the same node acts as both the broker and the quorum controller. This is fine for local development, but in production you should run separate nodes for the brokers and the controller quorum.

+

+```

+KAFKA_PROCESS_ROLES: 'broker,controller'

+```

+

+## Step 2

+Start streaming.

+

+```

+$ docker compose exec broker kafka-topics --create --topic zookeeper-vacation-ideas --bootstrap-server localhost:9092

+

+$ docker compose exec broker kafka-producer-perf-test --topic zookeeper-vacation-ideas \

+ --num-records 200000 \

+ --record-size 50 \

+ --throughput -1 \

+ --producer-props \

+ acks=all \

+ bootstrap.servers=localhost:9092 \

+ compression.type=none \

+ batch.size=8196

+```

+

+## Step 3

+Explore the cluster using the new Kafka metadata shell command-line tool, `kafka-metadata-shell`.

+

+```

+$ docker compose exec broker kafka-metadata-shell --snapshot /tmp/kraft-combined-logs/__cluster_metadata-0/00000000000000000000.log

+

+# use the metadata shell to explore the quorum

+>> ls metadataQuorum

+

+>> cat metadataQuorum/leader

+```

+

+Note: `update_run.sh` works around some ZooKeeper-related checks in the `cp-kafka` Docker image. There are plans to change those checks in the future.

+

+The script also formats the storage directory with a random UUID as the cluster ID. Formatting Kafka storage is a required step in KRaft mode and is very important in production environments. See the Apache Kafka (AK) documentation for more information.

\ No newline at end of file
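The snapshot path passed to `kafka-metadata-shell` in Step 3 follows Kafka's on-disk log layout: a `<topic>-<partition>` directory under the log directory (`KAFKA_LOG_DIRS` in `docker-compose.yml`), holding segment files named after their base offset, zero-padded to 20 digits. A minimal sketch that assembles such a path, assuming that layout; `segment_path` is a hypothetical helper, not part of any Kafka tool:

```shell
#!/bin/sh
# Sketch: build the path of a log segment file inside a Kafka log directory.
# segment_path is a hypothetical helper, not part of any Kafka tool.
# Assumed layout: <log dir>/<topic>-<partition>/<base offset, 20 digits>.log
segment_path() {
  log_dir=$1      # e.g. the KAFKA_LOG_DIRS value from docker-compose.yml
  topic=$2        # e.g. __cluster_metadata, the KRaft metadata topic
  partition=$3    # the metadata topic has a single partition, 0
  base_offset=$4  # offset of the first record stored in the segment
  printf '%s/%s-%s/%020d.log\n' "$log_dir" "$topic" "$partition" "$base_offset"
}

# The first segment of the metadata log starts at offset 0.
segment_path /tmp/kraft-combined-logs __cluster_metadata 0 0
```

Only the first segment has base offset 0; after the log rolls, later segments are named after their own base offsets.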

diff --git a/instant-kraft/docker-compose.yml b/instant-kraft/docker-compose.yml

new file mode 100644

index 000000000..76050d4da

--- /dev/null

+++ b/instant-kraft/docker-compose.yml

@@ -0,0 +1,30 @@

+---

+version: '2'

+services:

+ broker:

+ image: confluentinc/cp-kafka:latest

+ hostname: broker

+ container_name: broker

+ ports:

+ - "9092:9092"

+ - "9101:9101"

+ environment:

+ KAFKA_BROKER_ID: 1

+ KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: 'CONTROLLER:PLAINTEXT,PLAINTEXT:PLAINTEXT,PLAINTEXT_HOST:PLAINTEXT'

+ KAFKA_ADVERTISED_LISTENERS: 'PLAINTEXT://broker:29092,PLAINTEXT_HOST://localhost:9092'

+ KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 1

+ KAFKA_GROUP_INITIAL_REBALANCE_DELAY_MS: 0

+ KAFKA_TRANSACTION_STATE_LOG_MIN_ISR: 1

+ KAFKA_TRANSACTION_STATE_LOG_REPLICATION_FACTOR: 1

+ KAFKA_JMX_PORT: 9101

+ KAFKA_JMX_HOSTNAME: localhost

+ KAFKA_PROCESS_ROLES: 'broker,controller'

+ KAFKA_NODE_ID: 1

+ KAFKA_CONTROLLER_QUORUM_VOTERS: '1@broker:29093'

+ KAFKA_LISTENERS: 'PLAINTEXT://broker:29092,CONTROLLER://broker:29093,PLAINTEXT_HOST://0.0.0.0:9092'

+ KAFKA_INTER_BROKER_LISTENER_NAME: 'PLAINTEXT'

+ KAFKA_CONTROLLER_LISTENER_NAMES: 'CONTROLLER'

+ KAFKA_LOG_DIRS: '/tmp/kraft-combined-logs'

+ volumes:

+ - ./update_run.sh:/tmp/update_run.sh

+ command: "bash -c 'if [ ! -f /tmp/update_run.sh ]; then echo \"ERROR: Did you forget the update_run.sh file that came with this docker-compose.yml file?\" && exit 1 ; else /tmp/update_run.sh && /etc/confluent/docker/run ; fi'"
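The README names the KRaft switch as the `process.roles` property, while `docker-compose.yml` sets it through `KAFKA_PROCESS_ROLES`. A minimal sketch of that name translation, assuming the simple case that covers every variable in this file (drop `KAFKA_`, lowercase, turn `_` into `.`). `env_to_prop` is hypothetical and is not the image's own code; the Confluent images also use extra escaping rules for property names that contain `-` or `_`, which this sketch does not model.

```shell
#!/bin/sh
# Sketch: translate a KAFKA_* environment variable name into a Kafka property name.
# env_to_prop is hypothetical. It only handles the simple case:
#   strip the KAFKA_ prefix, lowercase, and replace "_" with ".".
env_to_prop() {
  printf '%s\n' "$1" \
    | sed 's/^KAFKA_//' \
    | tr '[:upper:]' '[:lower:]' \
    | tr '_' '.'
}

# Show the property names behind the KRaft-related settings in docker-compose.yml.
for var in KAFKA_PROCESS_ROLES KAFKA_NODE_ID KAFKA_CONTROLLER_QUORUM_VOTERS \
           KAFKA_CONTROLLER_LISTENER_NAMES KAFKA_LOG_DIRS; do
  echo "$var -> $(env_to_prop "$var")"
done
```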

diff --git a/instant-kraft/update_run.sh b/instant-kraft/update_run.sh

new file mode 100755

index 000000000..7c9510acd

--- /dev/null

+++ b/instant-kraft/update_run.sh

@@ -0,0 +1,10 @@

+#!/bin/sh

+

+# Docker workaround: Remove check for KAFKA_ZOOKEEPER_CONNECT parameter

+sed -i '/KAFKA_ZOOKEEPER_CONNECT/d' /etc/confluent/docker/configure

+

+# Docker workaround: Ignore cub zk-ready

+sed -i 's/cub zk-ready/echo ignore zk-ready/' /etc/confluent/docker/ensure

+

+# KRaft required step: Format the storage directory with a new cluster ID

+echo "kafka-storage format --ignore-formatted -t $(kafka-storage random-uuid) -c /etc/kafka/kafka.properties" >> /etc/confluent/docker/ensure
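`update_run.sh` edits the image's startup scripts in place before `/etc/confluent/docker/run` executes them. The sketch below replays the same three commands against throwaway files so their effect is visible without Docker. The sample file contents and the `kafka_storage_stub` function are hypothetical stand-ins, not the real `cp-kafka` scripts or the real `kafka-storage random-uuid`. It also illustrates one detail of the last line: because the `echo` argument is in double quotes, the command substitution runs when `update_run.sh` runs, so a literal cluster ID is appended to `ensure`.

```shell
#!/bin/sh
# Sketch: replay the update_run.sh edits on throwaway sample files.
# The file contents and kafka_storage_stub are hypothetical stand-ins.
set -e
work=$(mktemp -d)
trap 'rm -rf "$work"' EXIT

# Hypothetical fragments resembling the ZooKeeper checks the workarounds target.
printf '%s\n' 'dub ensure KAFKA_ZOOKEEPER_CONNECT' 'dub ensure KAFKA_ADVERTISED_LISTENERS' > "$work/configure"
printf '%s\n' 'cub zk-ready "$KAFKA_ZOOKEEPER_CONNECT" 40' > "$work/ensure"

# Stand-in for `kafka-storage random-uuid`, which prints a random cluster ID.
kafka_storage_stub() { echo "TEST-CLUSTER-ID"; }

# Equivalent to: sed -i '/KAFKA_ZOOKEEPER_CONNECT/d' .../configure (delete matching lines)
sed '/KAFKA_ZOOKEEPER_CONNECT/d' "$work/configure" > "$work/tmp" && mv "$work/tmp" "$work/configure"
# Equivalent to: sed -i 's/cub zk-ready/echo ignore zk-ready/' .../ensure (neutralize the check)
sed 's/cub zk-ready/echo ignore zk-ready/' "$work/ensure" > "$work/tmp" && mv "$work/tmp" "$work/ensure"
# Double quotes: $(...) is expanded now, so the appended line holds a literal ID.
echo "kafka-storage format --ignore-formatted -t $(kafka_storage_stub) -c /etc/kafka/kafka.properties" >> "$work/ensure"

cat "$work/configure" "$work/ensure"
```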

|

+| [Instant KRaft](instant-kraft/README.md) | Y | [Y](instant-kraft/README.md) | Get started with KRaft mode in Kafka in just a few minutes. Explore KRaft metadata. Note that KRaft is not yet supported in Confluent Platform.

# Build Your Own

diff --git a/instant-kraft/README.md b/instant-kraft/README.md

new file mode 100644

index 000000000..24fe848d9

--- /dev/null

+++ b/instant-kraft/README.md

@@ -0,0 +1,56 @@

+# Instant KRaft Demo

+Let's make some delicious instant KRaft

+ ___

+ .'O o'-._

+ / O o_.-`|

+ /O_.-' O |

+ |O o O .-`

+ |o O_.-'

+ '--`

+

+## Step 1

+```

+$ git clone https://github.com/confluentinc/examples.git && cd examples/instant-kraft

+

+$ docker compose up

+```

+

+That's it! One container, one process, doing the the broker and controller work. No Zookeeper.

+The key server configure to enable KRaft is the `process.roles`. Here we have the same node doing both the broker and quorum conroller work. Note that this is ok for local development, but in production you should have seperate nodes for the broker and controller quorum nodes.

+

+```

+KAFKA_PROCESS_ROLES: 'broker,controller'

+```

+

+## Step 2

+Start streaming

+

+```

+$ docker compose exec broker kafka-topics --create --topic zookeeper-vacation-ideas --bootstrap-server localhost:9092

+

+$ docker-compose exec broker kafka-producer-perf-test --topic zookeeper-vacation-ideas \

+ --num-records 200000 \

+ --record-size 50 \

+ --throughput -1 \

+ --producer-props \

+ acks=all \

+ bootstrap.servers=localhost:9092 \

+ compression.type=none \

+ batch.size=8196

+```

+

+## Step 3

+Explore the cluster using the new kafka metadata command line tool.

+

+```

+ $ docker-compose exec broker kafka-metadata-shell --snapshot /tmp/kraft-combined-logs/__cluster_metadata-0/00000000000000000000.log

+

+# use the metadata shell to explore the quorum

+>> ls metadataQuorum

+

+>> cat metadataQuorum/leader

+```

+

+Note: the `update_run.sh` is to get around some checks in the cp-kafka docker image. There are plans to change those checks in the future.

+

+The scipt also formats the storage volumes with a random uuid for the cluster. Formatting kafka storage is very important in production environments. See the AK documentation for more information.

\ No newline at end of file

diff --git a/instant-kraft/docker-compose.yml b/instant-kraft/docker-compose.yml

new file mode 100644

index 000000000..76050d4da

--- /dev/null

+++ b/instant-kraft/docker-compose.yml

@@ -0,0 +1,30 @@

+---

+version: '2'

+services:

+ broker:

+ image: confluentinc/cp-kafka:latest

+ hostname: broker

+ container_name: broker

+ ports:

+ - "9092:9092"

+ - "9101:9101"

+ environment:

+ KAFKA_BROKER_ID: 1

+ KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: 'CONTROLLER:PLAINTEXT,PLAINTEXT:PLAINTEXT,PLAINTEXT_HOST:PLAINTEXT'

+ KAFKA_ADVERTISED_LISTENERS: 'PLAINTEXT://broker:29092,PLAINTEXT_HOST://localhost:9092'

+ KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 1

+ KAFKA_GROUP_INITIAL_REBALANCE_DELAY_MS: 0

+ KAFKA_TRANSACTION_STATE_LOG_MIN_ISR: 1

+ KAFKA_TRANSACTION_STATE_LOG_REPLICATION_FACTOR: 1

+ KAFKA_JMX_PORT: 9101

+ KAFKA_JMX_HOSTNAME: localhost

+ KAFKA_PROCESS_ROLES: 'broker,controller'

+ KAFKA_NODE_ID: 1

+ KAFKA_CONTROLLER_QUORUM_VOTERS: '1@broker:29093'

+ KAFKA_LISTENERS: 'PLAINTEXT://broker:29092,CONTROLLER://broker:29093,PLAINTEXT_HOST://0.0.0.0:9092'

+ KAFKA_INTER_BROKER_LISTENER_NAME: 'PLAINTEXT'

+ KAFKA_CONTROLLER_LISTENER_NAMES: 'CONTROLLER'

+ KAFKA_LOG_DIRS: '/tmp/kraft-combined-logs'

+ volumes:

+ - ./update_run.sh:/tmp/update_run.sh

+ command: "bash -c 'if [ ! -f /tmp/update_run.sh ]; then echo \"ERROR: Did you forget the update_run.sh file that came with this docker-compose.yml file?\" && exit 1 ; else /tmp/update_run.sh && /etc/confluent/docker/run ; fi'"

diff --git a/instant-kraft/update_run.sh b/instant-kraft/update_run.sh

new file mode 100755

index 000000000..7c9510acd

--- /dev/null

+++ b/instant-kraft/update_run.sh

@@ -0,0 +1,10 @@

+#!/bin/sh

+

+# Docker workaround: Remove check for KAFKA_ZOOKEEPER_CONNECT parameter

+sed -i '/KAFKA_ZOOKEEPER_CONNECT/d' /etc/confluent/docker/configure

+

+# Docker workaround: Ignore cub zk-ready

+sed -i 's/cub zk-ready/echo ignore zk-ready/' /etc/confluent/docker/ensure

+

+# KRaft required step: Format the storage directory with a new cluster ID

+echo "kafka-storage format --ignore-formatted -t $(kafka-storage random-uuid) -c /etc/kafka/kafka.properties" >> /etc/confluent/docker/ensure

-| [CP Demo](https://github.com/confluentinc/cp-demo) | N | [Y](https://github.com/confluentinc/cp-demo) | [Confluent Platform demo](https://docs.confluent.io/platform/current/tutorials/cp-demo/docs/index.html?utm_source=github&utm_medium=demo&utm_campaign=ch.examples_type.community_content.top) (`cp-demo`) with a playbook for Kafka event streaming ETL deployments

-| [CP Demo](https://github.com/confluentinc/cp-demo) | N | [Y](https://github.com/confluentinc/cp-demo) | [Confluent Platform demo](https://docs.confluent.io/platform/current/tutorials/cp-demo/docs/index.html?utm_source=github&utm_medium=demo&utm_campaign=ch.examples_type.community_content.top) (`cp-demo`) with a playbook for Kafka event streaming ETL deployments  -| [Kubernetes](kubernetes/README.md) | N | [Y](kubernetes/README.md) | Demonstrations of Confluent Platform deployments using the [Confluent Operator](https://docs.confluent.io/operator/current/overview.html?utm_source=github&utm_medium=demo&utm_campaign=ch.examples_type.community_content.top)

-| [Kubernetes](kubernetes/README.md) | N | [Y](kubernetes/README.md) | Demonstrations of Confluent Platform deployments using the [Confluent Operator](https://docs.confluent.io/operator/current/overview.html?utm_source=github&utm_medium=demo&utm_campaign=ch.examples_type.community_content.top)  -| [Multi Datacenter](multi-datacenter/README.md) | N | [Y](multi-datacenter/README.md) | Active-active multi-datacenter design with two instances of Confluent Replicator copying data bidirectionally between the datacenters

-| [Multi Datacenter](multi-datacenter/README.md) | N | [Y](multi-datacenter/README.md) | Active-active multi-datacenter design with two instances of Confluent Replicator copying data bidirectionally between the datacenters  -| [Multi-Region Clusters](multiregion/README.md) | N | [Y](multiregion/README.md) | Multi-Region clusters (MRC) with follower fetching, observers, and replica placement

-| [Multi-Region Clusters](multiregion/README.md) | N | [Y](multiregion/README.md) | Multi-Region clusters (MRC) with follower fetching, observers, and replica placement -| [Quickstart](cp-quickstart/README.md) | [Y](cp-quickstart/README.md) | [Y](cp-quickstart/README.md) | Automated version of the [Confluent Quickstart](https://docs.confluent.io/platform/current/quickstart/index.html?utm_source=github&utm_medium=demo&utm_campaign=ch.examples_type.community_content.top): for Confluent Platform on local install or Docker, community version, and Confluent Cloud

-| [Quickstart](cp-quickstart/README.md) | [Y](cp-quickstart/README.md) | [Y](cp-quickstart/README.md) | Automated version of the [Confluent Quickstart](https://docs.confluent.io/platform/current/quickstart/index.html?utm_source=github&utm_medium=demo&utm_campaign=ch.examples_type.community_content.top): for Confluent Platform on local install or Docker, community version, and Confluent Cloud  -| [Role-Based Access Control](security/rbac/README.md) | Y | Y | Role-based Access Control (RBAC) provides granular privileges for users and service accounts

-| [Role-Based Access Control](security/rbac/README.md) | Y | Y | Role-based Access Control (RBAC) provides granular privileges for users and service accounts  -| [Replicator Security](replicator-security/README.md) | N | [Y](replicator-security/README.md) | Demos of various security configurations supported by Confluent Replicator and examples of how to implement them

-| [Replicator Security](replicator-security/README.md) | N | [Y](replicator-security/README.md) | Demos of various security configurations supported by Confluent Replicator and examples of how to implement them  -

+| Demo | Local | Docker | Description |

+|------------------------------------------------------|------------------------------|----------------------------------------------|------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

+| [Avro](clients/README.md) | [Y](clients/README.md) | N | Client applications using Avro and Confluent Schema Registry

-

+| Demo | Local | Docker | Description |

+|------------------------------------------------------|------------------------------|----------------------------------------------|------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

+| [Avro](clients/README.md) | [Y](clients/README.md) | N | Client applications using Avro and Confluent Schema Registry  |

+| [CP Demo](https://github.com/confluentinc/cp-demo) | N | [Y](https://github.com/confluentinc/cp-demo) | [Confluent Platform demo](https://docs.confluent.io/platform/current/tutorials/cp-demo/docs/index.html?utm_source=github&utm_medium=demo&utm_campaign=ch.examples_type.community_content.top) (`cp-demo`) with a playbook for Kafka event streaming ETL deployments

|

+| [CP Demo](https://github.com/confluentinc/cp-demo) | N | [Y](https://github.com/confluentinc/cp-demo) | [Confluent Platform demo](https://docs.confluent.io/platform/current/tutorials/cp-demo/docs/index.html?utm_source=github&utm_medium=demo&utm_campaign=ch.examples_type.community_content.top) (`cp-demo`) with a playbook for Kafka event streaming ETL deployments  |

+| [Kubernetes](kubernetes/README.md) | N | [Y](kubernetes/README.md) | Demonstrations of Confluent Platform deployments using the [Confluent Operator](https://docs.confluent.io/operator/current/overview.html?utm_source=github&utm_medium=demo&utm_campaign=ch.examples_type.community_content.top)

|

+| [Kubernetes](kubernetes/README.md) | N | [Y](kubernetes/README.md) | Demonstrations of Confluent Platform deployments using the [Confluent Operator](https://docs.confluent.io/operator/current/overview.html?utm_source=github&utm_medium=demo&utm_campaign=ch.examples_type.community_content.top)  |

+| [Multi Datacenter](multi-datacenter/README.md) | N | [Y](multi-datacenter/README.md) | Active-active multi-datacenter design with two instances of Confluent Replicator copying data bidirectionally between the datacenters

|

+| [Multi Datacenter](multi-datacenter/README.md) | N | [Y](multi-datacenter/README.md) | Active-active multi-datacenter design with two instances of Confluent Replicator copying data bidirectionally between the datacenters  |

+| [Multi-Region Clusters](multiregion/README.md) | N | [Y](multiregion/README.md) | Multi-Region clusters (MRC) with follower fetching, observers, and replica placement

|

+| [Multi-Region Clusters](multiregion/README.md) | N | [Y](multiregion/README.md) | Multi-Region clusters (MRC) with follower fetching, observers, and replica placement |

+| [Quickstart](cp-quickstart/README.md) | [Y](cp-quickstart/README.md) | [Y](cp-quickstart/README.md) | Automated version of the [Confluent Quickstart](https://docs.confluent.io/platform/current/quickstart/index.html?utm_source=github&utm_medium=demo&utm_campaign=ch.examples_type.community_content.top): for Confluent Platform on local install or Docker, community version, and Confluent Cloud

|

+| [Quickstart](cp-quickstart/README.md) | [Y](cp-quickstart/README.md) | [Y](cp-quickstart/README.md) | Automated version of the [Confluent Quickstart](https://docs.confluent.io/platform/current/quickstart/index.html?utm_source=github&utm_medium=demo&utm_campaign=ch.examples_type.community_content.top): for Confluent Platform on local install or Docker, community version, and Confluent Cloud  |

+| [Role-Based Access Control](security/rbac/README.md) | Y | Y | Role-based Access Control (RBAC) provides granular privileges for users and service accounts

|

+| [Role-Based Access Control](security/rbac/README.md) | Y | Y | Role-based Access Control (RBAC) provides granular privileges for users and service accounts  |

+| [Replicator Security](replicator-security/README.md) | N | [Y](replicator-security/README.md) | Demos of various security configurations supported by Confluent Replicator and examples of how to implement them

|

+| [Replicator Security](replicator-security/README.md) | N | [Y](replicator-security/README.md) | Demos of various security configurations supported by Confluent Replicator and examples of how to implement them  |

+| [Instant KRaft](instant-kraft/README.md) | Y | [Y](instant-kraft/README.md) | Get started with KRaft mode in Kafka in just a few minutes. Explore KRaft metadata. Note that KRaft is not yet supported in Confluent Platform.

# Build Your Own

diff --git a/instant-kraft/README.md b/instant-kraft/README.md

new file mode 100644

index 000000000..24fe848d9

--- /dev/null

+++ b/instant-kraft/README.md

@@ -0,0 +1,56 @@

+# Instant KRaft Demo

+Let's make some delicious instant KRaft

+ ___

+ .'O o'-._

+ / O o_.-`|

+ /O_.-' O |

+ |O o O .-`

+ |o O_.-'

+ '--`

+

+## Step 1

+```

+$ git clone https://github.com/confluentinc/examples.git && cd examples/instant-kraft

+

+$ docker compose up

+```

+

+That's it! One container, one process, doing the the broker and controller work. No Zookeeper.

+The key server configure to enable KRaft is the `process.roles`. Here we have the same node doing both the broker and quorum conroller work. Note that this is ok for local development, but in production you should have seperate nodes for the broker and controller quorum nodes.

+

+```

+KAFKA_PROCESS_ROLES: 'broker,controller'

+```

+

+## Step 2

+Start streaming

+

+```

+$ docker compose exec broker kafka-topics --create --topic zookeeper-vacation-ideas --bootstrap-server localhost:9092

+

+$ docker-compose exec broker kafka-producer-perf-test --topic zookeeper-vacation-ideas \

+ --num-records 200000 \

+ --record-size 50 \

+ --throughput -1 \

+ --producer-props \

+ acks=all \

+ bootstrap.servers=localhost:9092 \

+ compression.type=none \

+ batch.size=8196

+```

+

+## Step 3

+Explore the cluster using the new kafka metadata command line tool.

+

+```

+ $ docker-compose exec broker kafka-metadata-shell --snapshot /tmp/kraft-combined-logs/__cluster_metadata-0/00000000000000000000.log

+

+# use the metadata shell to explore the quorum

+>> ls metadataQuorum

+

+>> cat metadataQuorum/leader

+```

+

+Note: the `update_run.sh` is to get around some checks in the cp-kafka docker image. There are plans to change those checks in the future.

+

+The scipt also formats the storage volumes with a random uuid for the cluster. Formatting kafka storage is very important in production environments. See the AK documentation for more information.

\ No newline at end of file

|

+| [Instant KRaft](instant-kraft/README.md) | Y | [Y](instant-kraft/README.md) | Get started with KRaft mode in Kafka in just a few minutes. Explore KRaft metadata. Note that KRaft is not yet supported in Confluent Platform.

# Build Your Own

diff --git a/instant-kraft/README.md b/instant-kraft/README.md

new file mode 100644

index 000000000..24fe848d9

--- /dev/null

+++ b/instant-kraft/README.md

@@ -0,0 +1,56 @@

+# Instant KRaft Demo

+Let's make some delicious instant KRaft.

+ ___

+ .'O o'-._

+ / O o_.-`|

+ /O_.-' O |

+ |O o O .-`

+ |o O_.-'

+ '--`

+

+## Step 1

+```

+$ git clone https://github.com/confluentinc/examples.git && cd examples/instant-kraft

+

+$ docker compose up

+```

+

+That's it! One container, one process, doing both the broker and the controller work. No ZooKeeper.
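After `docker compose up`, the broker can take a few seconds before it accepts client connections. If you script the next steps, a small retry loop helps. This is a minimal sketch. `retry` and `RETRY_DELAY` are hypothetical names and are not part of the demo:

```shell
#!/bin/sh
# Hypothetical helper: run a command up to N times, pausing between failures.
# RETRY_DELAY (seconds, default 2) is an assumption of this sketch, not a demo setting.
retry() {
  attempts=$1
  shift
  i=1
  while [ "$i" -le "$attempts" ]; do
    # Stop as soon as the command succeeds.
    if "$@"; then
      return 0
    fi
    # Sleep only if another attempt remains.
    if [ "$i" -lt "$attempts" ]; then
      sleep "${RETRY_DELAY:-2}"
    fi
    i=$((i + 1))
  done
  return 1
}

# Example usage (assumes the broker container from docker-compose.yml is running):
#   retry 30 docker compose exec broker kafka-topics --list --bootstrap-server localhost:9092
```

Polling with `kafka-topics --list` is only one readiness check. Any cheap command that needs a live broker works the same way.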

+The key server configuration that enables KRaft is `process.roles`, which this demo sets through the `KAFKA_PROCESS_ROLES` environment variable. Here the same node does both the broker and the quorum controller work. That is fine for local development, but in production you should run the brokers and the controller quorum on separate nodes.

+

+```

+KAFKA_PROCESS_ROLES: 'broker,controller'

+```
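Each `KAFKA_*` environment variable in `docker-compose.yml` ends up as a broker property. Here is a rough sketch of the basic rule, using a hypothetical `env_to_prop` function that is not part of the image:

1. Drop the `KAFKA_` prefix.
2. Lowercase the rest.
3. Turn each underscore into a dot.

The `cp-kafka` image also has escape rules for property names that themselves contain dashes or underscores. This sketch does not cover them:

```shell
#!/bin/sh
# Sketch of the basic env-var-to-property naming rule only.
# Escape rules for properties containing '-' or '_' are deliberately not handled.
env_to_prop() {
  printf '%s\n' "${1#KAFKA_}" | tr '[:upper:]' '[:lower:]' | tr '_' '.'
}

env_to_prop KAFKA_PROCESS_ROLES             # process.roles
env_to_prop KAFKA_CONTROLLER_QUORUM_VOTERS  # controller.quorum.voters
```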

+

+## Step 2

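+Start streaming. In a second terminal (the `docker compose up` above stays in the foreground), create a topic and then produce 200,000 test records to it: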

+

+```

+$ docker compose exec broker kafka-topics --create --topic zookeeper-vacation-ideas --bootstrap-server localhost:9092

+

+$ docker compose exec broker kafka-producer-perf-test --topic zookeeper-vacation-ideas \

+ --num-records 200000 \

+ --record-size 50 \

+ --throughput -1 \

+ --producer-props \

+ acks=all \

+ bootstrap.servers=localhost:9092 \

+ compression.type=none \

+ batch.size=8196

+```
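For a sense of scale, the perf test above sends 200,000 records of 50 bytes each. A one-line sketch of the value payload it writes, using a hypothetical `payload_bytes` helper. The count ignores keys, headers, and batch and protocol overhead:

```shell
#!/bin/sh
# Hypothetical helper: total record-value bytes = number of records * record size.
# Keys, headers, batch framing and protocol overhead are not included.
payload_bytes() {
  echo $(( $1 * $2 ))
}

payload_bytes 200000 50   # 10000000 (roughly 10 MB)
```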

+

+## Step 3

+Explore the cluster metadata with the new `kafka-metadata-shell` command-line tool, pointing it at the KRaft metadata log.

+

+```

+$ docker compose exec broker kafka-metadata-shell --snapshot /tmp/kraft-combined-logs/__cluster_metadata-0/00000000000000000000.log

+

+# use the metadata shell to explore the quorum

+>> ls metadataQuorum

+

+>> cat metadataQuorum/leader

+```

+

+Note: `update_run.sh` works around some ZooKeeper-related checks in the `cp-kafka` Docker image: it removes the `KAFKA_ZOOKEEPER_CONNECT` check and skips the `cub zk-ready` wait. There are plans to change those checks in the future.

+

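+The script also formats the storage directory with a randomly generated cluster ID (`kafka-storage random-uuid`). In KRaft mode every node's storage must be formatted with a cluster ID before Kafka starts, and in production all nodes of a cluster must share the same ID. See the Apache Kafka (AK) documentation for more information.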

\ No newline at end of file
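`update_run.sh` generates a fresh ID on every run. That is fine for this single-node demo because `--ignore-formatted` skips storage that is already formatted. In a multi-node cluster you would instead generate one ID and reuse it on every node.

A minimal sketch of a pre-flight check follows. The `is_cluster_id` helper is hypothetical. It assumes the IDs printed by `kafka-storage random-uuid` are 16-byte UUIDs encoded as 22-character URL-safe Base64 strings:

```shell
#!/bin/sh
# Hypothetical pre-flight check before running `kafka-storage format`.
# Assumption: a valid ID is exactly 22 characters from [A-Za-z0-9_-].
is_cluster_id() {
  [ "${#1}" -eq 22 ] || return 1
  case "$1" in
    *[!A-Za-z0-9_-]*) return 1 ;;
  esac
  return 0
}

# Multi-node idea (not what update_run.sh does): generate the ID once, then
# reuse the same value on every broker and controller, for example:
#   CLUSTER_ID="$(kafka-storage random-uuid)"
#   is_cluster_id "$CLUSTER_ID" && kafka-storage format -t "$CLUSTER_ID" -c /etc/kafka/kafka.properties
```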

diff --git a/instant-kraft/docker-compose.yml b/instant-kraft/docker-compose.yml

new file mode 100644

index 000000000..76050d4da

--- /dev/null

+++ b/instant-kraft/docker-compose.yml

@@ -0,0 +1,30 @@

+---

+version: '2'

+services:

+  broker:

+    image: confluentinc/cp-kafka:latest

+    hostname: broker

+    container_name: broker

+    ports:

+      - "9092:9092"

+      - "9101:9101"

+    environment:

+      KAFKA_BROKER_ID: 1

+      KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: 'CONTROLLER:PLAINTEXT,PLAINTEXT:PLAINTEXT,PLAINTEXT_HOST:PLAINTEXT'

+      KAFKA_ADVERTISED_LISTENERS: 'PLAINTEXT://broker:29092,PLAINTEXT_HOST://localhost:9092'

+      KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 1

+      KAFKA_GROUP_INITIAL_REBALANCE_DELAY_MS: 0

+      KAFKA_TRANSACTION_STATE_LOG_MIN_ISR: 1

+      KAFKA_TRANSACTION_STATE_LOG_REPLICATION_FACTOR: 1

+      KAFKA_JMX_PORT: 9101

+      KAFKA_JMX_HOSTNAME: localhost

+      KAFKA_PROCESS_ROLES: 'broker,controller'

+      KAFKA_NODE_ID: 1

+      KAFKA_CONTROLLER_QUORUM_VOTERS: '1@broker:29093'

+      KAFKA_LISTENERS: 'PLAINTEXT://broker:29092,CONTROLLER://broker:29093,PLAINTEXT_HOST://0.0.0.0:9092'

+      KAFKA_INTER_BROKER_LISTENER_NAME: 'PLAINTEXT'

+      KAFKA_CONTROLLER_LISTENER_NAMES: 'CONTROLLER'

+      KAFKA_LOG_DIRS: '/tmp/kraft-combined-logs'

+    volumes:

+      - ./update_run.sh:/tmp/update_run.sh

+    command: "bash -c 'if [ ! -f /tmp/update_run.sh ]; then echo \"ERROR: Did you forget the update_run.sh file that came with this docker-compose.yml file?\" && exit 1 ; else /tmp/update_run.sh && /etc/confluent/docker/run ; fi'"
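`KAFKA_CONTROLLER_QUORUM_VOTERS` lists the controller quorum as comma-separated `{id}@{host}:{port}` entries. In this file the single voter `1@broker:29093` matches `KAFKA_NODE_ID: 1` and the `CONTROLLER` listener on port 29093. A sketch that splits such a value into its parts, using a hypothetical `parse_voters` helper:

```shell
#!/bin/sh
# Hypothetical helper: split a controller.quorum.voters value into
# "id host port" lines, one per voter.
parse_voters() {
  printf '%s\n' "$1" | tr ',' '\n' | while IFS= read -r voter; do
    id=${voter%%@*}          # text before '@'
    hostport=${voter#*@}     # text after '@'
    printf '%s %s %s\n' "$id" "${hostport%:*}" "${hostport##*:}"
  done
}

parse_voters '1@broker:29093'   # 1 broker 29093
```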

diff --git a/instant-kraft/update_run.sh b/instant-kraft/update_run.sh

new file mode 100755

index 000000000..7c9510acd

--- /dev/null

+++ b/instant-kraft/update_run.sh

@@ -0,0 +1,10 @@

+#!/bin/sh

+

+# Docker workaround: Remove check for KAFKA_ZOOKEEPER_CONNECT parameter

+sed -i '/KAFKA_ZOOKEEPER_CONNECT/d' /etc/confluent/docker/configure

+

+# Docker workaround: Ignore cub zk-ready

+sed -i 's/cub zk-ready/echo ignore zk-ready/' /etc/confluent/docker/ensure

+

+# KRaft required step: Format the storage directory with a new cluster ID

+echo "kafka-storage format --ignore-formatted -t $(kafka-storage random-uuid) -c /etc/kafka/kafka.properties" >> /etc/confluent/docker/ensure
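To see what the two `sed` workarounds do without touching a real image, you can run the same expressions against throwaway files. This is a sketch. The sample lines are hypothetical stand-ins for the much longer `/etc/confluent/docker/configure` and `ensure` scripts:

```shell
#!/bin/sh
# Dry run of the two sed edits from update_run.sh against temporary files.
# The sample lines below are hypothetical, not the image's real script contents.
tmp=$(mktemp -d)
trap 'rm -rf "$tmp"' EXIT

printf '%s\n' 'dub ensure KAFKA_ZOOKEEPER_CONNECT' 'dub ensure KAFKA_ADVERTISED_LISTENERS' > "$tmp/configure"
printf '%s\n' 'cub zk-ready "$KAFKA_ZOOKEEPER_CONNECT" 40' > "$tmp/ensure"

# Same expressions as update_run.sh, writing new files instead of editing in place (-i).
sed '/KAFKA_ZOOKEEPER_CONNECT/d' "$tmp/configure" > "$tmp/configure.out"
sed 's/cub zk-ready/echo ignore zk-ready/' "$tmp/ensure" > "$tmp/ensure.out"

cat "$tmp/configure.out"   # dub ensure KAFKA_ADVERTISED_LISTENERS
cat "$tmp/ensure.out"      # echo ignore zk-ready "$KAFKA_ZOOKEEPER_CONNECT" 40
```

The last line of `update_run.sh` behaves differently from the `sed` edits. It expands `$(kafka-storage random-uuid)` when the script runs, so a literal cluster ID is appended to `ensure` rather than the command itself.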