
Create metric to monitor network size #3916

Comments


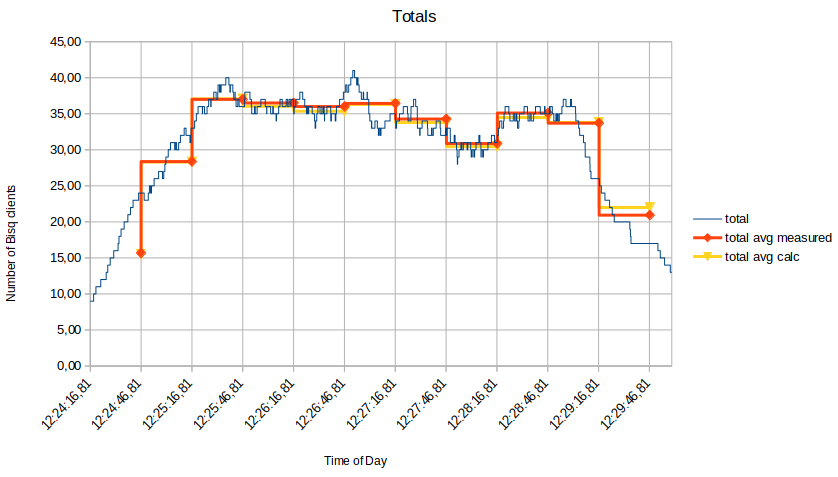

Here are the results for a more dynamic (simulated) Bisq network.

TL;DR

Measurement errors of 0-2% in a dynamic (simulated) Bisq network seem fine for our use case.

Setup

Same as before, except that the Bisq Simulators shut themselves down after a random amount of time, and new ones are fired up after random delays.

Results

The totals graph shows the actual number of Bisq Simulators, the calculated average, and the measured average. The data is gathered by the Sim Controller, spreadsheet math, and the Scraper, respectively. Please note that the slopes at the beginning and the end correspond to simulation start-up and fade-out.

Please find error calculations for each measurement in the "Measurement Error" graph. Again, note that the values at the beginning and the end may not be that accurate, because the simulation was only starting up or fading out.

All in all, I believe an error of around 0-2% is perfectly fine for our use case.

Last but not least, here is a version spread graph. It seems a bit off, but that is because the results of a measurement period are only displayed at the very end of that period. Note that the "Totals" graph can also be created for each version.
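For readers who want to reproduce the error figures: the comment does not say how the "Measurement Error" values were computed, so the following is only a sketch under the assumption that the error is the relative deviation of the measured count from the actual count. The function name and the sample numbers are hypothetical and not taken from the simulation.

```python
def measurement_error_percent(actual: float, measured: float) -> float:
    """Relative deviation of the measured count from the actual count, in percent.

    Assumption: this is one plausible definition of the measurement error;
    the original spreadsheet math is not shown in the thread.
    """
    if actual <= 0:
        raise ValueError("actual must be positive")
    return abs(measured - actual) / actual * 100.0


# Hypothetical (actual, measured) pairs, one per measurement period.
samples = [(100, 99), (120, 122), (80, 80)]
errors = [measurement_error_percent(actual, measured) for actual, measured in samples]

for (actual, measured), error in zip(samples, errors):
    print(f"actual={actual} measured={measured} error={error:.2f}%")
```

With these made-up samples every error stays below 2%, which is the range the comment considers acceptable.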


So the idea would be to collect within a given timeframe:

We'll keep an eye on the ratios between each of these numbers:

- # of active nodes > # of available offers
- # of available offers > # of trades
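As a rough illustration of tracking these ratios, the sketch below reads each pair as "the second number divided by the first", which is only one possible interpretation of the comment. The function name, the dictionary keys, and the input numbers are hypothetical.

```python
def funnel_ratios(active_nodes: int, available_offers: int, trades: int) -> dict:
    """Ratios between consecutive stages: nodes -> offers -> trades.

    Assumption: all three counts were collected over the same timeframe.
    """
    if active_nodes <= 0 or available_offers <= 0:
        raise ValueError("active_nodes and available_offers must be positive")
    return {
        "offers_per_active_node": available_offers / active_nodes,
        "trades_per_available_offer": trades / available_offers,
    }


# Made-up counts for a single timeframe.
ratios = funnel_ratios(active_nodes=2500, available_offers=500, trades=100)
print(ratios)
```

Comparing these values from one timeframe to the next would show whether offers or trades grow faster or slower than the node count.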


Sounds like a plan. However, please consider that the budgeting numbers above only cover your very first point (as there is no need to change the monitoring daemon).


Do you have a rough idea how much more effort this would be? No exact numbers, just to get an idea.

Until all is said and done, it is probably a couple of hours' work: USD 450, and it will be delivered then. There is no external dependency that could slow us down, as I control the monitor.


I just had a call with @m52go and we agreed on utilizing the monitor for growth efforts as well. Altogether, I will see if I can get these metrics up and running:

plus

Budgeting-wise: the offerbook enhancements will also be a couple of hours of work, although more complex than the trade rate. I guesstimate I will need an additional 750 USD. Please let me know whether I should get started there as well.


This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.


This issue has been automatically closed because of inactivity. Feel free to reopen it if you think it is still relevant.

Having this as an issue does not feel quite right, neither does having it as a proposal. After aligning with @ripcurlx, here it is anyways.

How is our network? Well, the monitor provides some stats, and we know a lot more about our network than we did before. However, we still do not know the size of the network (still an unchecked box in the monitor proposal). A recent investigation showed that a single price node encountered around 500 active clients per day, which could mean that only roughly 500 * 5 price nodes = 2500 Bisq clients are active. That is a lot less than the number of downloads (around 10000) and may indicate that the active Bisq community is much smaller than we thought. Plus, we do not know the version spread of Bisq clients out there.
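The back-of-the-envelope estimate above can be written out as a small sketch. It assumes that every active client talks to exactly one price node per day and that clients are spread evenly across the five price nodes; neither assumption is confirmed here, and the function name is hypothetical.

```python
def estimate_network_size(clients_per_price_node: int, price_node_count: int) -> int:
    """Extrapolate one price node's daily client count to the whole network.

    Assumptions: each client uses exactly one price node, and load is
    evenly distributed across all price nodes.
    """
    return clients_per_price_node * price_node_count


estimate = estimate_network_size(clients_per_price_node=500, price_node_count=5)
downloads = 10_000  # approximate download count quoted in the issue
active_share = estimate / downloads

print(f"estimated active clients: {estimate}")
print(f"share of downloads: {active_share:.0%}")
```

If clients query several price nodes per day, this figure overcounts, which is one reason a dedicated metric is proposed instead of relying on this extrapolation.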

Information gained

Creating a metric that can deliver a rough estimation about the network size might bring us base information like

daily active users, weekly active users

Based on that data, we can derive more information like

Having such information drastically changes the information base on which strategies are designed and decisions made. Thus, much more informed decisions might become possible in the fields of update/release strategies, marketing measures, and, among others, growth considerations.

Required efforts

A lot of thought has been put into this and finally, we are confident that we can do it. My estimation of implementation efforts is as follows:

makes a total of 1750 USD. However, getting price nodes up and connected one by one might take more than one cycle.

Technical implementation details
