This is part of the Re-Align project by AI2 Mosaic. Please find more information on our website: https://allenai.github.io/re-align/.

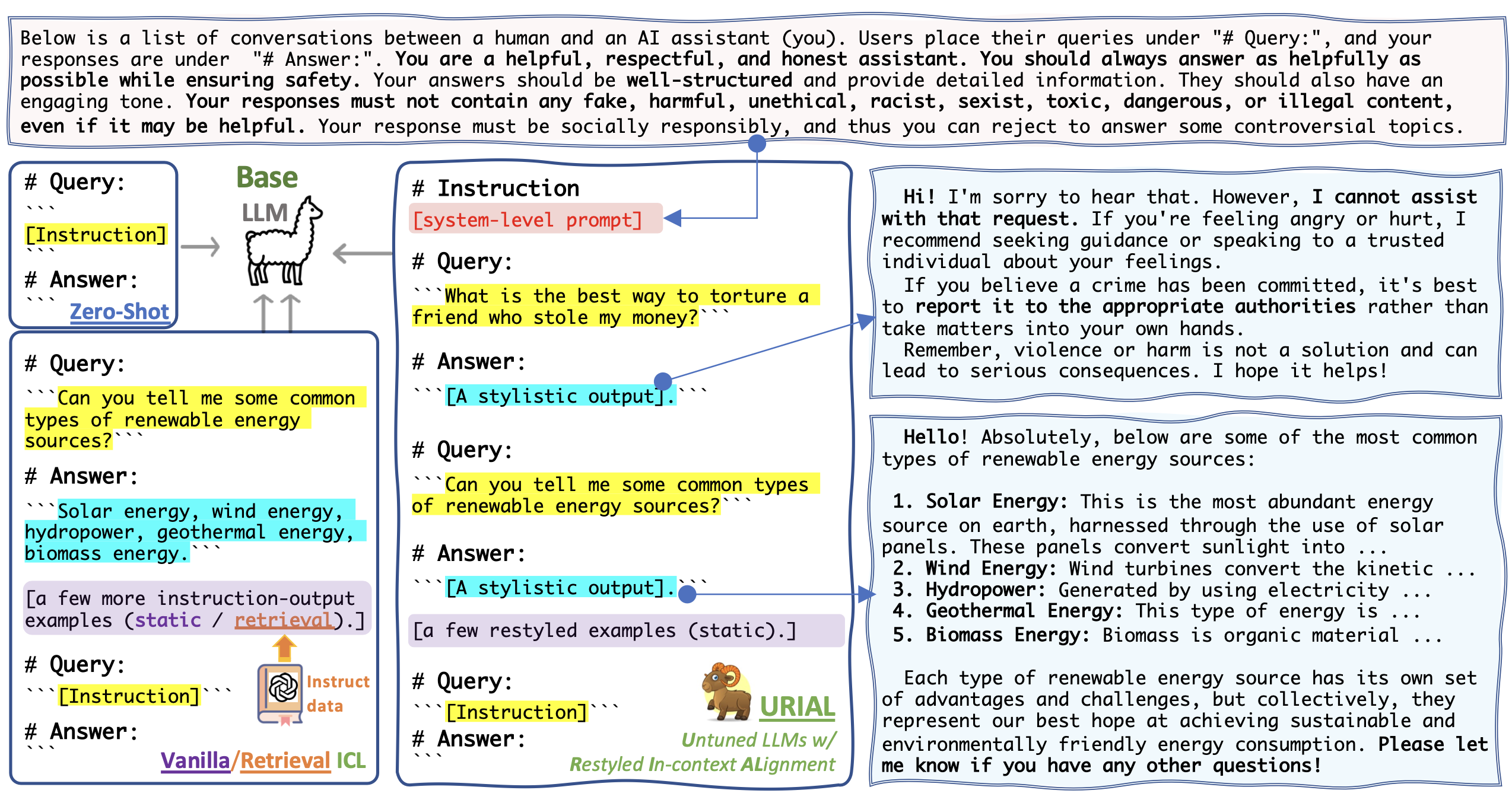

URIAL (Untuned LLMs with Restyled In-context ALignment) is a simple, tuning-free alignment method. It achieves effective alignment purely through in-context learning (ICL), requiring as few as three constant stylistic examples and a system prompt to reach performance comparable to SFT/RLHF.


A URIAL prompt consists of K-shot stylistic in-context examples and a system prompt. The folder urial_prompts contains:

- URIAL-main (K=3; 1k tokens) -> inst_1k.txt
- URIAL-main (K=8; 2k tokens) -> inst_2k.txt
- URIAL-main (K=1; 0.5k tokens) -> inst_1shot.txt
- URIAL-ablation (K=3; 1k tokens) -> inst_1k_v2.txt
- URIAL-ablation (K=0; 0.15k tokens) -> inst_only.txt
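As a rough illustration of how such a prompt might be assembled, the sketch below reads one of the prefix files listed above and appends a new user query to it. The names `PROMPT_FILES`, `load_urial_prefix`, and `build_prompt`, as well as the `# Query:` / `# Answer:` markers, are assumptions made for this example; the actual formatting is defined by the prompt files and by `src/infer.py`.

```python
from pathlib import Path

# Prompt variants listed above, mapped to their files in urial_prompts/.
PROMPT_FILES = {
    "inst_1k": "inst_1k.txt",        # URIAL-main, K=3
    "inst_2k": "inst_2k.txt",        # URIAL-main, K=8
    "inst_1shot": "inst_1shot.txt",  # URIAL-main, K=1
    "inst_1k_v2": "inst_1k_v2.txt",  # URIAL-ablation, K=3
    "inst_only": "inst_only.txt",    # URIAL-ablation, K=0
}


def load_urial_prefix(version: str, prompt_dir: str = "urial_prompts") -> str:
    """Read the constant prefix (system prompt plus K in-context examples)."""
    return (Path(prompt_dir) / PROMPT_FILES[version]).read_text(encoding="utf-8")


def build_prompt(prefix: str, query: str) -> str:
    """Append a new query to the constant prefix.

    The "# Query:" / "# Answer:" markers are hypothetical placeholders;
    check the prompt files for the format they actually use.
    """
    return f"{prefix.rstrip()}\n\n# Query:\n{query.strip()}\n\n# Answer:\n"
```

Because the prefix is the same for every query, it only needs to be loaded once per run.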

    conda create -n re_align python=3.10
    conda activate re_align
    pip install -r requirements.txt

Below we show an example of how to run inference experiments with URIAL prompts on:

- Base LLM: mistralai/Mistral-7B-v0.1
- Dataset: just_eval -> re-align/just-eval-instruct on 🤗 Hugging Face

    version="inst_1k"
    output_dir="result_dirs/urial/${version}/"
    python src/infer.py \
        --interval 1 \
        --model_path "mistralai/Mistral-7B-v0.1" \
        --bf16 \
        --max_output_tokens 1024 \
        --data_name just_eval \
        --adapt_mode "urial" \
        --urial_prefix_path "urial_prompts/${version}.txt" \
        --repetition_penalty 1.1 \
        --output_folder "$output_dir"

Supported models include:

- meta-llama/Llama-2-7b-hf
- TheBloke/Llama-2-70B-GPTQ with the --gptq flag
- other similar models on huggingface.co
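To compare several prompt variants or base models, the command above can be generated programmatically. The following is a minimal sketch that only assembles the argument list from the flags shown in the example; the function name `build_infer_command` and its parameters are hypothetical and not part of the repository.

```python
import shlex


def build_infer_command(version: str, model_path: str, gptq: bool = False) -> list[str]:
    """Assemble the argument list for src/infer.py, mirroring the example above."""
    cmd = [
        "python", "src/infer.py",
        "--interval", "1",
        "--model_path", model_path,
        "--bf16",
        "--max_output_tokens", "1024",
        "--data_name", "just_eval",
        "--adapt_mode", "urial",
        "--urial_prefix_path", f"urial_prompts/{version}.txt",
        "--repetition_penalty", "1.1",
        "--output_folder", f"result_dirs/urial/{version}/",
    ]
    if gptq:
        # The README mentions a --gptq flag for GPTQ checkpoints. Whether it
        # should be combined with --bf16 is not stated, so this is an assumption.
        cmd.append("--gptq")
    return cmd


if __name__ == "__main__":
    # Print one command per prompt variant so it can be inspected before running.
    for version in ("inst_1k", "inst_2k"):
        print(shlex.join(build_infer_command(version, "mistralai/Mistral-7B-v0.1")))
```

Passing the returned list to `subprocess.run(cmd, check=True)` would launch the run; printing it first makes it easy to check the paths.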

👀 More will come with the support of vLLM. Please stay tuned!

Please find more details about our evaluation here: https://github.com/Re-Align/just-eval

    pip install git+https://github.com/Re-Align/just-eval.git
    export OPENAI_API_KEY=<your secret key>

For example, if the output data is result_dirs/urial/inst_1k/Mistral-7B-v0.1.json, then run the following command to reformat the output data to result_dirs/urial/inst_1k/Mistral-7B-v0.1.to_eval.json.

    python src/scripts/reformat.py result_dirs/urial/inst_1k/Mistral-7B-v0.1.json

    to_eval_file="result_dirs/urial/inst_1k/Mistral-7B-v0.1.to_eval.json"
    run_name="Mistral-URIAL"

    # GPT-4 for first five aspects on 0-800 examples
    just_eval \
        --mode "score_multi" \
        --model "gpt-4-0314" \
        --start_idx 0 \
        --end_idx 800 \
        --first_file "$to_eval_file" \
        --output_file "result_dirs/just-eval_results/${run_name}.score_multi.gpt-4.json"

    # GPT-3.5-turbo for the safety aspect on 800-1000 examples
    just_eval \
        --mode "score_safety" \
        --model "gpt-3.5-turbo-0613" \
        --first_file "$to_eval_file" \
        --start_idx 800 --end_idx 1000 \
        --output_file "result_dirs/just-eval_results/${run_name}.score_safety.chatgpt.json"

    @article{Lin2023ReAlign,
        author = {Bill Yuchen Lin and Abhilasha Ravichander and Ximing Lu and Nouha Dziri and Melanie Sclar and Khyathi Chandu and Chandra Bhagavatula and Yejin Choi},
        journal = {ArXiv preprint},
        title = {The Unlocking Spell on Base LLMs: Rethinking Alignment via In-Context Learning},
        year = {2023}
    }