
[Performance] Data viewer can't handle large DFs #3434

Comments

|

Update: I tried viewing just a (3226,) pandas.Series and got the following error thrown back:

Error: Failure during variable extraction:
TypeError Traceback (most recent call last)
C:\ProgramData\Anaconda3\envs\DEV64\lib\json\__init__.py in dumps(obj, skipkeys, ensure_ascii, check_circular, allow_nan, cls, indent, separators, default, sort_keys, **kw)
C:\ProgramData\Anaconda3\envs\DEV64\lib\json\encoder.py in encode(self, o)
C:\ProgramData\Anaconda3\envs\DEV64\lib\json\encoder.py in iterencode(self, o, _one_shot)
C:\ProgramData\Anaconda3\envs\DEV64\lib\json\encoder.py in default(self, o)
TypeError: Object of type 'Timestamp' is not JSON serializable

So I created a DataFrame of 2 series of 700 values with a pandas.DatetimeIndex, and while I can see the values, the index is not shown, which would defeat the purpose of viewing DFs when working with time series. |
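For readers unfamiliar with this error, here is a minimal sketch of the same class of failure using only the standard library. It assumes a plain `datetime.datetime` as a stand-in for `pandas.Timestamp` (which subclasses it); the `record` dictionary and the `try_dumps` helper are hypothetical names for illustration, and this is not the extension's actual serialization code.

```python
import json
from datetime import datetime

# Stand-in for a row containing a pandas.Timestamp (assumption: plain datetime).
record = {"date": datetime(2019, 1, 2, 9, 30), "price": 101.5}


def try_dumps(obj):
    """Return the JSON string, or the error text if encoding fails."""
    try:
        return json.dumps(obj)
    except TypeError as exc:
        return f"TypeError: {exc}"


# Without a fallback, the encoder rejects the datetime value.
failed = try_dumps(record)

# Passing default=str tells the encoder to stringify anything it cannot handle.
encoded = json.dumps(record, default=str)

print(failed)
print(encoded)
```

Using `default=str` is one common workaround; whether the string it produces matches what pandas displays for the same value is a separate question.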

|

Need to investigate. |

|

@FranciscoRZ do you have an example piece of code you can share that reproduces the failure? At least for the DatetimeIndex, it's working fine for me. Also, what version of pandas are you using? |

|

For the huge number of columns, I don't think we'll be able to support it for a while. The control we're using virtualizes only rows, not columns, so it adds all 3000 columns to the DOM and the Node.js process runs out of memory. @FranciscoRZ, I think we're going to have to limit the number of columns displayed. Here's the code I used to repro the first issue: |

|

@rchiodo, thanks for the quick response (and sorry for the late reply, I'm guessing we're in different time zones 😅).

To reproduce the problem with large DFs, I used the following code:

--> double click df in variable explorer

From that, I reproduced the problem with the DatetimeIndex as follows:

--> double click df2 in variable explorer

Here's what I get:

Sorry I can't be of more help, I really have no experience with or knowledge of web development technologies.

Also, while working yesterday I noticed that as my variable environment grew, the variable explorer started to flicker more and more, and took a while to reload. Is it re-evaluating all variables each time a variable is defined? If so, that seems like a really resource-intensive process. I haven't yet come across performance issues I can pin on this, but maybe a "refresh" button in the variable explorer would be more user-friendly and resource-conscious? |

|

Thanks for the code. That helps. I'm confused about the second bug, though: is it no longer showing 'TypeError: Object of type 'Timestamp' is not JSON serializable'? So that part is fixed or no longer reproducing?

Thanks for the feedback on the refresh too. You can disable refreshing by collapsing the variable explorer, but yes, we do refresh every variable after every cell. It works like a debugger: every time you step, it updates all of the variables. We should do some perf testing to see if it interferes with actually executing cells. Adding @IanMatthewHuff for an FYI. |

|

Sorry, I forgot to specify that one.

I know some pandas attributes are not serializable (I'm pretty sure |

|

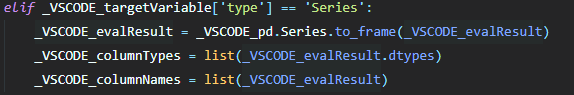

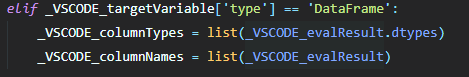

Lastly, I've been playing around with the date type to see if this is a pandas related problem. It's interesting that in the case of So I took a quick look at the source code. I'm guessing that the Python side of the data viewer's magic happens in Anyways, hope this helps. |

|

Yes it does help. Thanks. I'm going to split out the dataframe index and timestamp problems. They're different from the too many columns not working. Actually there's already another bug for this: I'm going to fix the timestamp problem there too. It has to do with timestamps/datetimes having custom string formatting and pandas not using the same values as str() does. |

|

You're right, best to keep them separate. Thanks, and good luck! |

|

@FranciscoRZ the timestamp/index column problem is now fixed. You can try it out if you like from our insiders build. The column virtualization (or limitation) is going to take a little longer though. |

|

Awesome, I will! Best regards, Francisco |

|

I just submitted a fix for the column virtualization. Please feel free to try it out in our next insiders build. It should support any number of columns and rows, but it will ask if you're sure you want to open the view if there are more than 1000 columns. More than 1000 columns causes the initial bring-up to take a while, and fetching the data can take longer too (it has to turn the rows into a JSON string in order to send them to our UI, which is a function of how VS Code works). A 1000 x 10000 DF takes me about 5 minutes to load. However, it also now supports filtering with expressions on numeric columns. Example: |
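As a rough illustration of two behaviours described in this comment (the confirmation prompt above 1000 columns, and rows being turned into JSON strings before they reach the UI), here is a small standard-library sketch. It is not the extension's code and not the filter example mentioned above: `COLUMN_WARNING_THRESHOLD`, `needs_confirmation`, `rows_to_json_chunks`, and the chunk size are all hypothetical names and values chosen for illustration; only the 1000-column figure comes from the comment.

```python
import json

# The 1000-column figure is from the comment; the constant name is hypothetical.
COLUMN_WARNING_THRESHOLD = 1000


def needs_confirmation(column_count, threshold=COLUMN_WARNING_THRESHOLD):
    """Return True when the viewer should ask before opening (more than threshold columns)."""
    return column_count > threshold


def rows_to_json_chunks(rows, chunk_size=100):
    """Yield JSON strings, each holding at most chunk_size rows."""
    for start in range(0, len(rows), chunk_size):
        yield json.dumps(rows[start:start + chunk_size])


# 250 small rows stand in for a DataFrame's values.
rows = [[r * 3 + c for c in range(3)] for r in range(250)]
chunks = list(rows_to_json_chunks(rows, chunk_size=100))

print(needs_confirmation(3201), needs_confirmation(1000), len(chunks))
```

Sending rows in chunks like this keeps each JSON string small, but the total serialization cost still grows with rows times columns, which is consistent with the long load time reported for a 1000 x 10000 DF.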

|

That's really impressive, thanks a lot! Take care, Francisco |

Environment data

Expected behaviour

View large DataFrames (>1000 columns, >1000 rows) in under 1 minute

Actual behaviour

When opening large DFs (current is 709x3201) the Data Viewer stops at showing the structure with all values at 'loading ...' (current runtime 20 minutes).

Steps to reproduce:

Logs

Output for Python in the Output panel (View→Output, change the drop-down in the upper-right of the Output panel to Python)

Output from Console under the Developer Tools panel (toggle Developer Tools on under Help; turn on source maps to make any tracebacks useful by running Enable source map support for extension debugging)

I was really looking forward to these features, so thanks for getting them in there! However, when dealing with quantitative finance problems we often have very large dataframes, and it would be nice to be able to use the data viewer to explore them.

Best regards,

Francisco
