
Realtime point cloud calculation in a remote computer #6535

Comments

Intel recently published a white paper about setting up open-source Ethernet networking for RealSense cameras. Section 2.7 of the paper explains how to add a network device (a camera's IP address) in the RealSense Viewer with the Add Source button, so that the Viewer's streaming modes (including point cloud) can be used over a network. I believe that in the paper's setup, the computer with the camera attached is the remote computer, and the computer that accesses the camera data over the network is the host computer (which has the Viewer installed on it).

Thank you for your quick response. However, in my setup the two computers are not on the same LAN. They are connected over the Internet, so the bandwidth is narrow and I need to shrink each frame as much as possible. In other words, I want to calculate a point cloud from minimal data, or send a compressed point cloud itself. Is there any other way?

There is an application called Depth2Web that can package depth data for the web. It is built with JavaScript. https://github.com/js6450/depth2web Another camera-to-web system has previously been published on Intel's main GitHub (not the RealSense GitHub): The link below, meanwhile, points to a non-RealSense Intel white paper that discusses techniques for streaming high-quality data over a broadband or 5G phone connection using less data:

Thank you for the many pointers. I will read all of them carefully.

Hi @akawashiro, from what I understand, you are mostly concerned with the bandwidth limitation rather than with web technologies, so UDP or TCP/IP streaming is OK. I solved a similar problem in the following fashion:

See the explanation here or the code here (~30 lines of code).

I typically use it with 8 Mbit/s for depth plus 1 Mbit/s for color or infrared. If you don't have your own infrastructure and use a consumer internet provider, you will mostly be limited by upload bandwidth (typically orders of magnitude lower than download bandwidth). What I did is Linux-only and mostly works on Intel hardware, at least Kaby Lake on the encoding side. The repositories:

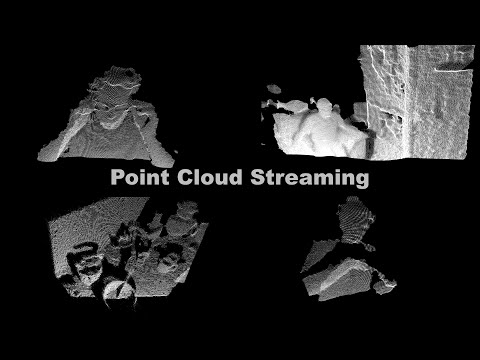

You can see it in action here:

@MartyG-RealSense wrote:

This scheme is great and lossless, but it gives compression on the order of 50-75%. That is probably still too much data for what you need.
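For a sense of scale, the following back-of-the-envelope sketch compares raw, losslessly reduced, and budgeted depth bitrates. It assumes a 640x480 depth stream at 30 fps with 16 bits per pixel (these stream settings are illustrative assumptions, not values from this thread) and reads "compression of order 50-75%" as removing 50-75% of the raw data. The function names are hypothetical.

```cpp
#include <cstdint>

// Raw bitrate of an uncompressed stream, in bits per second.
// Example (assumed) values: 640x480 pixels, 16-bit depth (Z16), 30 fps.
constexpr std::uint64_t raw_bitrate_bps(std::uint64_t width, std::uint64_t height,
                                        std::uint64_t bits_per_pixel, std::uint64_t fps) {
    return width * height * bits_per_pixel * fps;
}

// Bitrate left after a lossless scheme removes `removed_percent` of the data.
constexpr std::uint64_t after_reduction_bps(std::uint64_t raw_bps,
                                            std::uint64_t removed_percent) {
    return raw_bps * (100 - removed_percent) / 100;
}

// Compression ratio (rounded down) needed to fit `raw_bps` into `budget_bps`.
constexpr std::uint64_t required_ratio(std::uint64_t raw_bps, std::uint64_t budget_bps) {
    return raw_bps / budget_bps;
}
```

Under these assumptions the raw stream is about 147.5 Mbit/s, a 50-75% lossless reduction still leaves roughly 37-74 Mbit/s, and fitting into the 8 Mbit/s depth budget mentioned earlier needs a reduction of about 18x, which is the gap that lossy video encoding is meant to close.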

The paper is very interesting; however, typical hardware encoders don't support the 4:4:4 chroma format (every luminance sample matched with a chrominance sample, i.e. no chroma subsampling), which that encoding would need. It is an interesting scheme for software encoding, or if you are lucky enough to have a hardware encoder that supports it. Compared to my approach (HEVC Main10), it needs 10.5 bits per pixel vs 10 bits per pixel. Both approaches are quite picky about the hardware they need for hardware encoding. For software encoding, it is possible to combine GStreamer/FFmpeg with the methodology from the white paper (or, if you are lucky, use hardware encoding as well).

This paper is also quite interesting, but it looks like the authors are mostly concerned with decoding. Typically, encoding is the harder part to do in real time, so I am not certain whether the authors mean encoding offline and only serving the data in real time to the AR framework. The bandwidth requirements are also quite high (100 Mbps is assumed). Some more comments here. Kind regards

OK, some notes:

Thanks! I cut off 6 bits to make the compressed point cloud smaller. In my experiments, the more bits I cut, the smaller the compressed data became.
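The bit cutting mentioned above can be sketched as masking off the low-order bits of each 16-bit depth sample. Dropping 6 bits keeps the 10 most significant bits, which is what survives when 16-bit depth is passed to a 10-bit encoder in a P010-style layout (10 bits stored in the high bits of each 16-bit word). This illustrates the idea under that assumption; it is not the exact code used by either participant, and `drop_low_bits` and `quantization_step_m` are hypothetical helpers.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Zero the `dropped_bits` least significant bits of each 16-bit depth sample.
// Dropping 6 bits keeps the 10 most significant bits, i.e. what survives a
// P010-style "10 bits in the high part of a 16-bit word" layout (assumption).
inline std::vector<std::uint16_t> drop_low_bits(const std::vector<std::uint16_t>& depth,
                                                unsigned dropped_bits) {
    assert(dropped_bits < 16 && "dropped_bits must be in [0, 15]");
    const std::uint16_t mask = static_cast<std::uint16_t>(0xFFFFu << dropped_bits);
    std::vector<std::uint16_t> out;
    out.reserve(depth.size());
    for (std::uint16_t d : depth) {
        out.push_back(static_cast<std::uint16_t>(d & mask));
    }
    return out;
}

// Quantization step in metres after dropping bits, given the depth unit
// (metres per raw depth count) configured on the camera.
constexpr double quantization_step_m(double depth_unit_m, unsigned dropped_bits) {
    return depth_unit_m * static_cast<double>(1u << dropped_bits);
}
```

Each dropped bit doubles the quantization step: with an assumed 1 mm depth unit, dropping 6 bits gives 64 mm steps, while a finer depth unit gives smaller steps at the cost of maximum range (the 16-bit raw value spans depth_unit x 65535 metres).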

Hi @akawashiro, do you still require assistance with this case? Thanks!

No, I have enough information. Thank you, @MartyG-RealSense and @bmegli.

@akawashiro Thanks so much for the update!

Issue Description

I want to calculate a point cloud on a remote computer that is connected over a network to the computer the camera is attached to. I want to build a real-time application, so I cannot use a record-and-playback method like the one in "Serializing / Pickling frames / frame sets for remote align" #5296. Furthermore, because of the network bandwidth, I don't want to send the point cloud data itself.
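The bandwidth argument against sending the point cloud itself can be made concrete. In librealsense, `rs2::points` stores each vertex as three floats (x, y, z), i.e. 12 bytes on common platforms, whereas a Z16 depth pixel is 2 bytes, so an uncompressed point cloud with one vertex per pixel is six times the size of the depth frame it came from (texture coordinates, if sent, add more). The 640x480 resolution is an assumed example, and the helpers below are hypothetical.

```cpp
#include <cstddef>

// Bytes per element: a Z16 depth pixel vs. an (x, y, z) float vertex.
constexpr std::size_t kDepthBytesPerPixel = 2;                   // one uint16_t
constexpr std::size_t kVertexBytesPerPoint = 3 * sizeof(float);  // x, y, z

// Size of one uncompressed depth frame.
constexpr std::size_t depth_frame_bytes(std::size_t width, std::size_t height) {
    return width * height * kDepthBytesPerPixel;
}

// Size of an uncompressed point cloud with one vertex per depth pixel.
constexpr std::size_t point_cloud_bytes(std::size_t width, std::size_t height) {
    return width * height * kVertexBytesPerPoint;
}
```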

Ideally, I want to construct a point cloud only from the RGB-D data obtained with rs2::video_frame::get_data() and rs2::depth_frame::get_data(). Is there any way to do this?
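One possible approach, sketched under stated assumptions: send the raw Z16 depth buffer (plus color, if needed) for every frame, send the depth stream's intrinsics and depth scale once, and deproject every pixel on the remote side with the pinhole model. On the camera side, librealsense exposes these values through `depth.get_profile().as<rs2::video_stream_profile>().get_intrinsics()` and `rs2::depth_sensor::get_depth_scale()`, and `rs2_deproject_pixel_to_point()` in `librealsense2/rsutil.h` implements the same math including lens distortion models. The `Intrinsics` struct and `depth_to_points()` below are hypothetical stand-ins written without librealsense so the sketch is self-contained, and they ignore distortion (equivalent to `RS2_DISTORTION_NONE`).

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for the pinhole fields of rs2_intrinsics.
struct Intrinsics {
    int width, height;  // image size in pixels
    float ppx, ppy;     // principal point in pixels
    float fx, fy;       // focal lengths in pixels
};

struct Point3 {
    float x, y, z;  // metres, in the depth camera's coordinate frame
};

// Deproject a row-major Z16 depth buffer (width * height samples) into 3D points.
// depth_scale converts raw depth counts to metres (e.g. 0.001f for 1 mm units).
// Pixels with raw depth 0 (no data) are skipped; lens distortion is ignored.
std::vector<Point3> depth_to_points(const std::vector<std::uint16_t>& depth,
                                    const Intrinsics& intr, float depth_scale) {
    assert(depth.size() == static_cast<std::size_t>(intr.width) * intr.height);
    std::vector<Point3> points;
    points.reserve(depth.size());
    for (int v = 0; v < intr.height; ++v) {
        for (int u = 0; u < intr.width; ++u) {
            const std::uint16_t raw = depth[static_cast<std::size_t>(v) * intr.width + u];
            if (raw == 0) continue;  // invalid or missing depth
            const float z = raw * depth_scale;
            // Pinhole back-projection: x = (u - ppx) / fx * z, y = (v - ppy) / fy * z.
            points.push_back({(u - intr.ppx) / intr.fx * z,
                              (v - intr.ppy) / intr.fy * z,
                              z});
        }
    }
    return points;
}
```

On the receiving side, `depth` would be the decoded depth buffer. If the color stream has been aligned to depth on the camera side (for example with `rs2::align`, an assumption about the pipeline rather than something discussed in this thread), a color can be attached to each point by reading the color buffer at the same (u, v).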
