
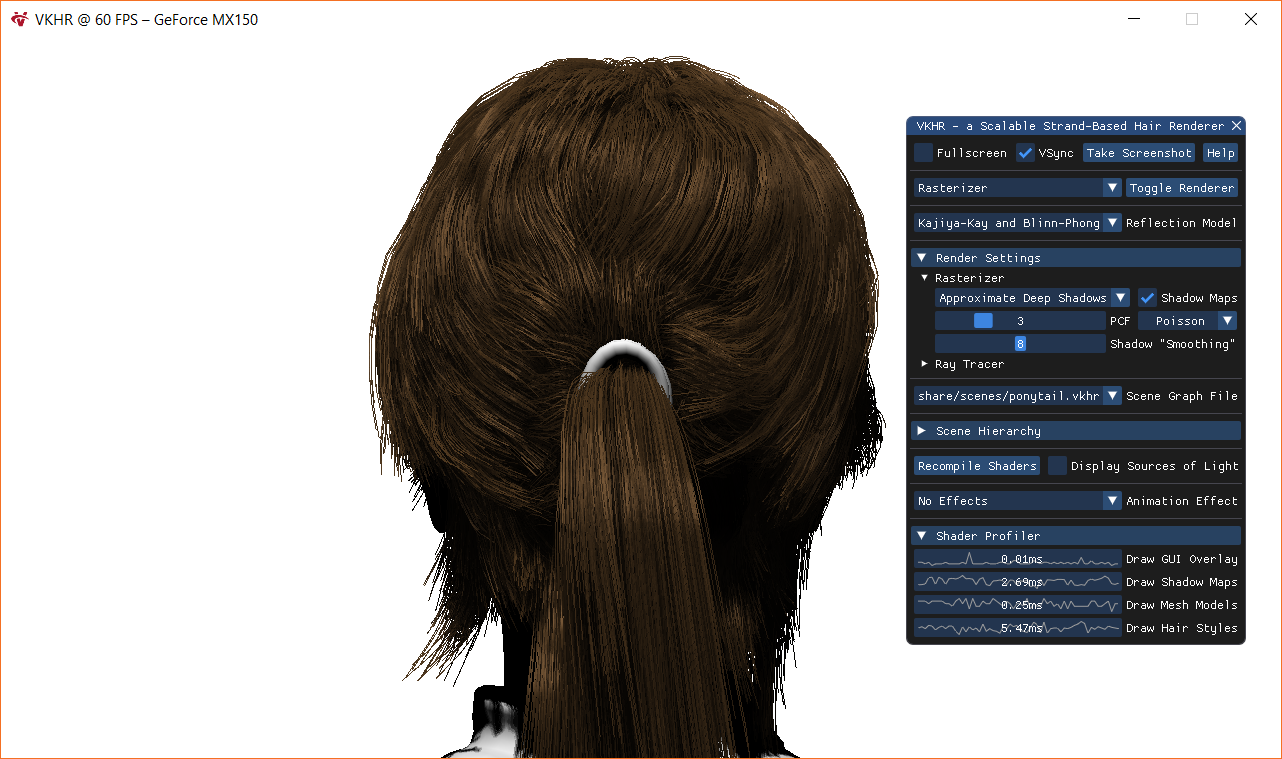

Captain's Log

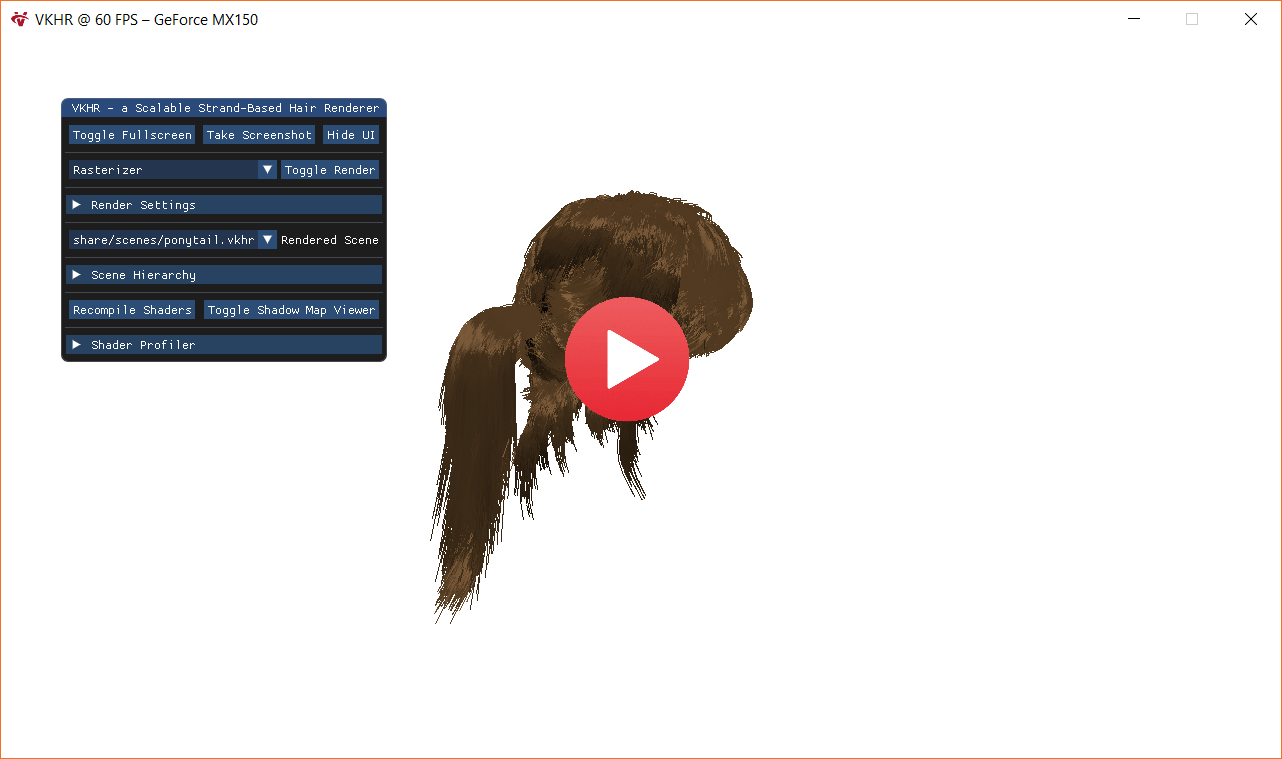

Here you'll find the current status of the project, which is updated every weekend. Below you'll find the implementation history of vkhr and my thoughts on the current and future status of the project. I also describe the rationale and approach behind my solutions, blog-post style, for future reference.

- Raymarching now approximates the strand coverage as well (smooth transition).

- It uses the strand density to decide the alpha to assign to the line fragment.

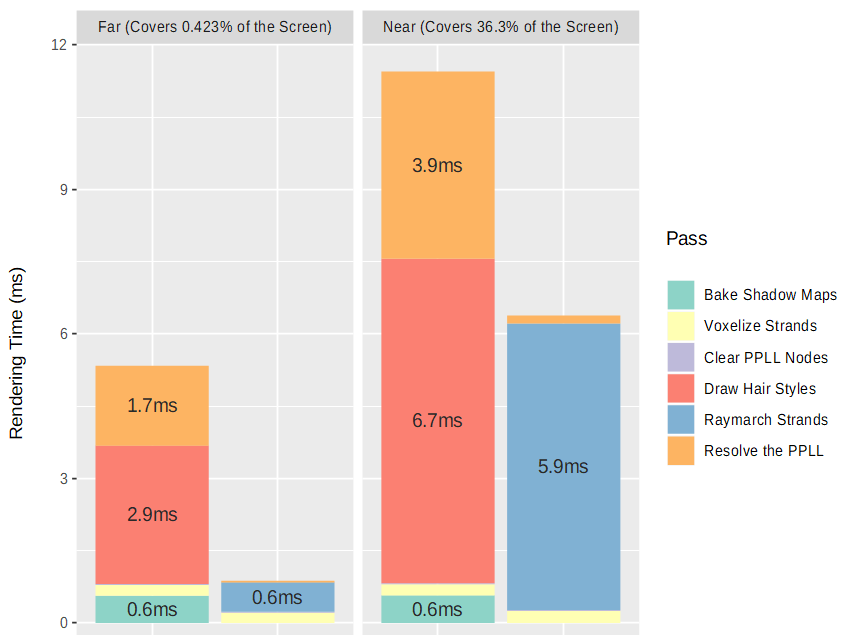

- Made the CSV format compatible with R and produced some example plots too.

- Added a semi-automatic packaging system for bundling the current `vkhr` build.

- Shuffled (only once) the strand order to quickly allow reductions for hair strands.

- Just modifying the offset, or padding, does not solve the problem generally.

- Adjusted lighting parameters. Now screenshots and printouts don't look so dark.

- Strands can now be skipped (assuming they're stored correctly) when rendering.

- This will allow us to estimate the performance scaling when benchmarking.

- Implemented a CSV exporter for gathering the frame shader timing information.

- Added the ability to take 60-frame performance snapshots for statistical analysis.

- Fixed bugs where screenshots with transparent fragments would be very wrong.

- Added the `--benchmark` flag. It automatically renders / profiles a given scenario.

- A scenario is composed of a scene (e.g. `bear.vkhr`) and its render settings.

- For a benchmark, a queue of scenarios is automatically executed (in-order).

- The queue may be built programmatically to choose ranges of parameters.

- After a scenario has finished running, the profiling data is dumped (in CSV).

- The CSV contains: profiling data and rendering parameters that were used.

- It also grabs a screenshot from the scenario, to compare visual fidelity later.

- All of this is exported to a `benchmarks` folder that can be used for analysis.


- Implemented ways to measure the number of pixels our hair covers on a screen.

- We need it for finding how the performance scales based on hair distances.

- Fixed additional swapchain recreation bugs (mostly fullscreen-related / V-Sync).

- Fixed bugs where `std::filesystem` would not be linked when using GCC 8.2.0.

- Replaced our `resolve` pass with an algorithm similar to the one used in TressFX.

- Our previous k-buffer look-alike solution needed lots of layers to converge.

- TressFX `resolve` guarantees that the closest fragments are sorted by using:

- The k-buffer that initially only contains the first k fragments in the PPLL.

- Going through the remaining PPLL and swapping closer fragments into the buffer.

- Blending the remaining fragments in the PPLL with OIT (i.e. no sorting).

- After swapping, elements in k-buffer will have the k closest fragments.

- Sort (e.g. with insertion sort) and then blend fragments in the k-buffer.

- Still fairly expensive, but doesn't need that many sorted fragments in the k-buffer.
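The selection step of such a resolve can be sketched on the CPU as follows. This is a minimal C++ sketch under our own assumptions (a `Fragment` holding a depth and a packed color, and the hypothetical names `select_k_closest` / `KBufferSelection`); it is not the TressFX HLSL or the vkhr GLSL code, and it leaves out the final sort and blend of the k-buffer.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// A fragment as stored in a per-pixel linked list (hypothetical layout).
struct Fragment {
    float depth;        // smaller = closer to the camera
    unsigned int color; // packed RGBA8 color (not interpreted here)
};

// The k closest fragments (unsorted) and the "tail" fragments that get
// blended out-of-order (OIT) instead of being sorted.
struct KBufferSelection {
    std::vector<Fragment> closest;
    std::vector<Fragment> tail;
};

// Seed the k-buffer with the first k fragments of the list; then, for every
// remaining fragment, swap it with the farthest k-buffer entry if it is
// closer. Whatever falls out of the k-buffer goes to the tail.
KBufferSelection select_k_closest(const std::vector<Fragment>& list, std::size_t k) {
    assert(k > 0);
    KBufferSelection result;
    for (const Fragment& fragment : list) {
        if (result.closest.size() < k) {
            result.closest.push_back(fragment); // first k fragments in the PPLL
            continue;
        }
        // Find the farthest fragment currently held in the k-buffer.
        std::size_t farthest = 0;
        for (std::size_t i = 1; i < result.closest.size(); ++i)
            if (result.closest[i].depth > result.closest[farthest].depth)
                farthest = i;
        if (fragment.depth < result.closest[farthest].depth) {
            result.tail.push_back(result.closest[farthest]); // evicted -> tail
            result.closest[farthest] = fragment;
        } else {
            result.tail.push_back(fragment); // too far away -> tail
        }
    }
    return result;
}
```

For example, with k = 2 and fragment depths 5, 1, 4, 2, 3, the k-buffer ends up holding the fragments at depths 1 and 2, and the other three go to the tail.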

- Removed Phone-Wire AA and instead added GPAA-like line coverage calculation.

- Previous solution didn't remove all "staircase patterns" and still needed e.g. MSAA.

- Instead, we simply find the pixel-distance between the fragment and the line.

- By rendering "thick" lines and changing alpha based on coverage we get AA.
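A sketch of the coverage idea above, assuming the fragment shader knows its pixel center plus the strand's two screen-space end points and half-width (all in pixels). The linear one-pixel falloff and the function names are our own choices for illustration, not necessarily what vkhr or GPAA do.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec2 { float x, y; };

// Distance (in pixels) from a pixel center p to the screen-space segment a-b.
float distance_to_segment(Vec2 p, Vec2 a, Vec2 b) {
    const float abx = b.x - a.x, aby = b.y - a.y;
    const float apx = p.x - a.x, apy = p.y - a.y;
    const float length_squared = abx * abx + aby * aby;
    // Project p onto the segment and clamp the projection to its end points.
    float t = length_squared > 0.0f ? (apx * abx + apy * aby) / length_squared : 0.0f;
    t = std::clamp(t, 0.0f, 1.0f);
    const float dx = apx - t * abx, dy = apy - t * aby;
    return std::sqrt(dx * dx + dy * dy);
}

// Coverage-based alpha for a "thick" line: fully opaque within the strand's
// half-width, then fading linearly to zero over one extra pixel.
float line_coverage_alpha(Vec2 pixel_center, Vec2 a, Vec2 b, float half_width) {
    assert(half_width >= 0.0f);
    const float distance = distance_to_segment(pixel_center, a, b);
    return std::clamp(1.0f - (distance - half_width), 0.0f, 1.0f);
}
```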

- Added Level of Detail (LoD) transition between the line rasterizer and raymarcher.

- Uses simple alpha blending and a `smoothstep` function to vary alpha values.

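As an illustration of the `smoothstep`-based transition, here is a small C++ sketch. Driving it with the camera distance and two hypothetical thresholds (`near_distance`, `far_distance`) is an assumption on our part; vkhr may base the transition on another metric.

```cpp
#include <cassert>

// GLSL-style smoothstep: 0 below edge0, 1 above edge1, and a cubic
// Hermite ramp (3t^2 - 2t^3) in between.
float smoothstep(float edge0, float edge1, float x) {
    assert(edge0 < edge1);
    float t = (x - edge0) / (edge1 - edge0);
    t = t < 0.0f ? 0.0f : (t > 1.0f ? 1.0f : t);
    return t * t * (3.0f - 2.0f * t);
}

// Hypothetical LoD blend weights: the rasterizer fades out and the raymarcher
// fades in as the camera distance goes from near_distance to far_distance.
struct LodWeights { float rasterizer_alpha, raymarcher_alpha; };

LodWeights lod_weights(float camera_distance, float near_distance, float far_distance) {
    const float raymarcher = smoothstep(near_distance, far_distance, camera_distance);
    return { 1.0f - raymarcher, raymarcher };
}
```

The two weights always sum to one, so the blended result never brightens or darkens during the transition.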

- Made the PCF use a wider Poisson-Disk sampler, based on pre-calculated values.

- Reduced strand coverage at the last segments to fake "sub-pixel" feeling of hair.

- Finally, properly recreated the Vulkan swapchain and added support for high DPI.

- GLFW and ImGui still don't officially support this, so I had to hack around it.

- ImGui still doesn't scale according to DPI, so I'll probably have to fix that too.

- Started adding code to reduce number of strands when loading for benchmarks.

- Milestone: all features have been implemented at this point (might need polish).

Figure: 8 sorted fragments on the left, with "MLAB" in center, and 128 sorted on the right.

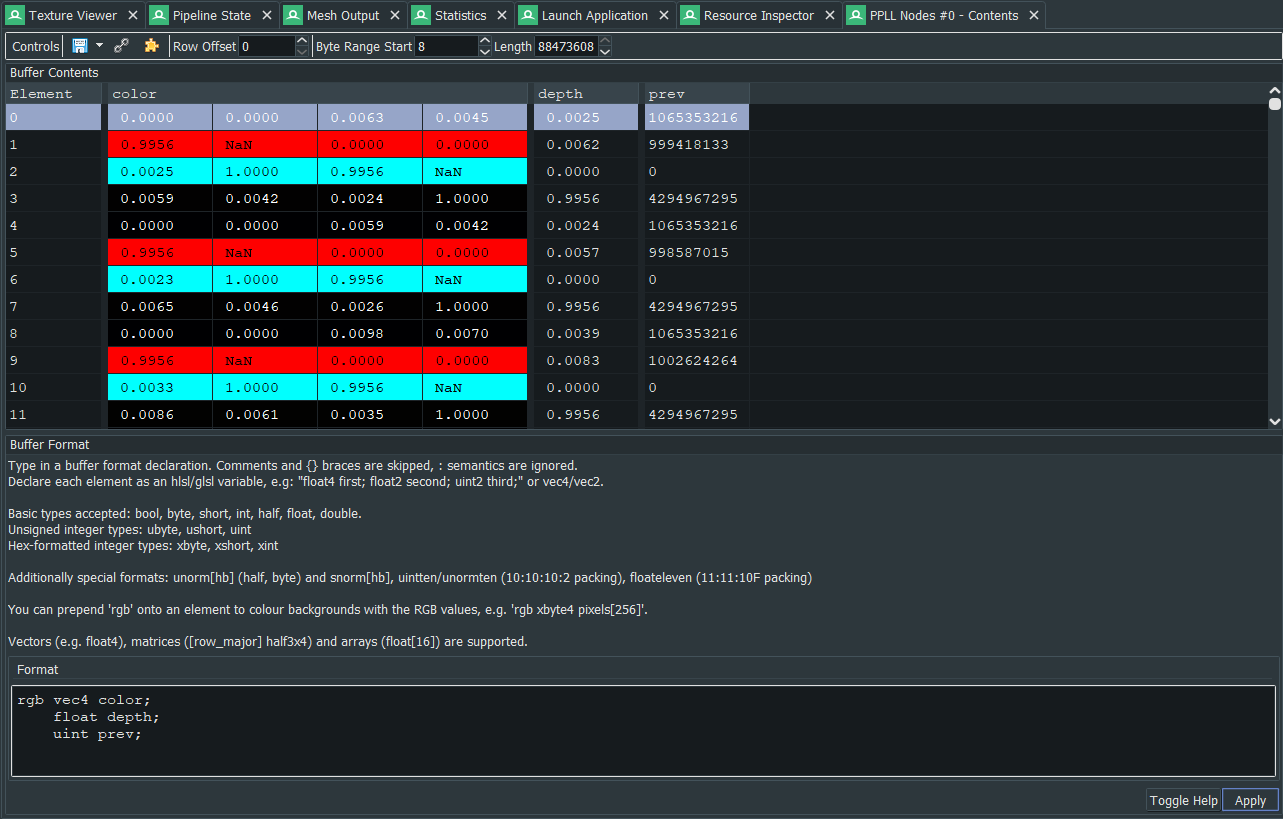

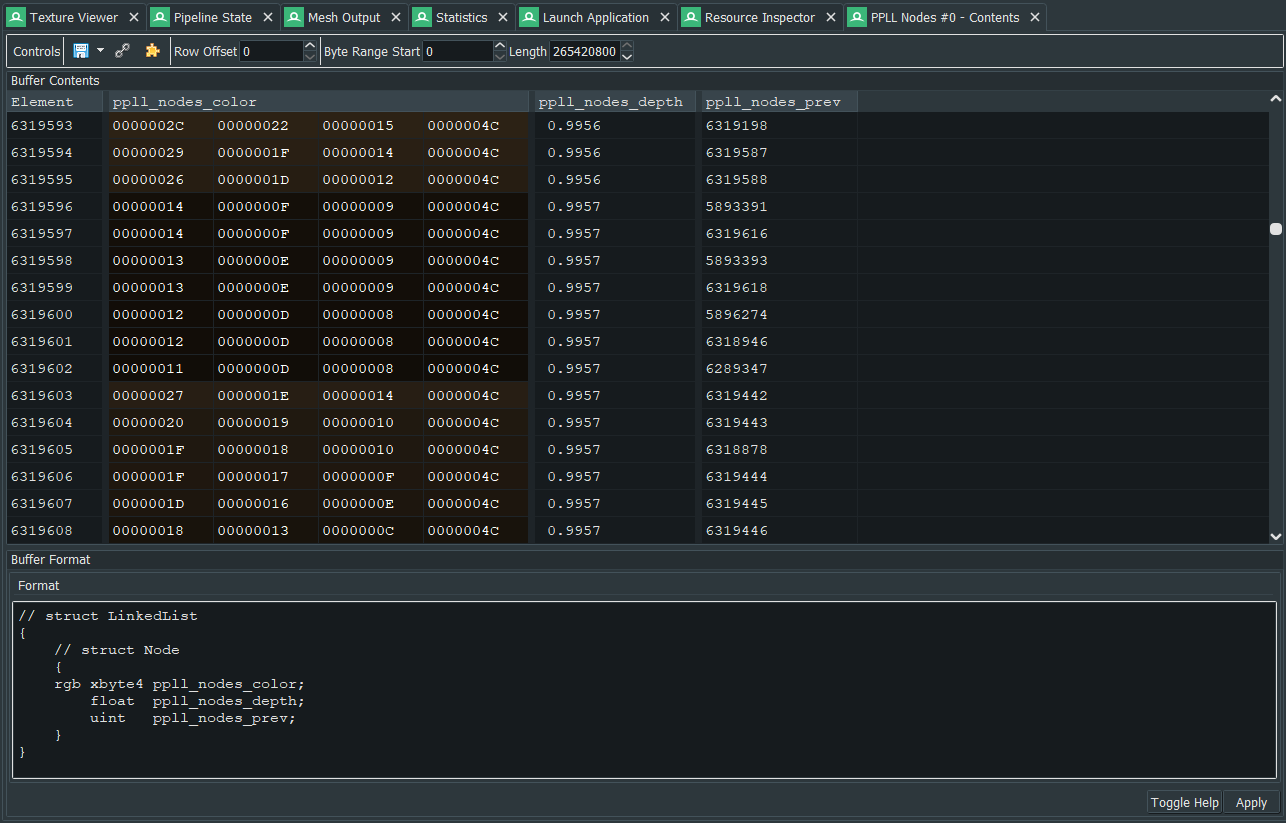

- Fixed PPLL insertions into the `ppll_nodes` structure by padding its contents.

- Turns out SSBOs also need to be aligned even with the `std430` layouts.


- Instantiated the `resolve` compute pipeline and added debug markers + timers.

- Added the current framebuffer as the resolve target and did image transitions.

- Fixed wrong image layout in the `voxelization` pass when clearing the volume.

- Modified rendering passes: depth -> voxelization -> color -> resolve -> gui.

- Also allowed us to fix validation error caused by imgui in subpass != 0.

- Needed to create compatible render passes to reuse the framebuffers.

- Needed to create the `GENERAL` image view for the resolve attachment.

- Moved `ppll_size` to a uniform, as that was getting me into alignment trouble.

- Moved `ppll_counter` to a separate SSBO (removes padding in `LinkedList`).

- Implemented the `resolve` pass (the algorithm below doesn't include the MLAB part):

- Allocate the k-sized local storage buffer for the stuff we will be sorting.

- Insert the first k nodes from `ppll_nodes` via `ppll_heads` into that buffer.

- Do a cheap insertion sort based on the depth values of those fragments.

- Alpha blend the sorted fragments with the content of the framebuffer.

- Still didn't get correct results. The problem: all fragments were being culled!

- Disabled depth writes and enabled `early_fragment_tests` to fix this :-)

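The sort-and-blend part of this resolve pass can be sketched on the CPU like this. It is a minimal sketch under our own assumptions: straight (non-premultiplied) colors, back-to-front compositing with the "over" operator, and hypothetical type names; the actual GLSL resolve may composite differently.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Rgb { float r, g, b; };

struct ResolveFragment {
    float depth; // smaller = closer to the camera
    Rgb color;   // straight (non-premultiplied) color
    float alpha;
};

// Cheap insertion sort on depth; fine for the small k used in the resolve.
void insertion_sort_by_depth(std::vector<ResolveFragment>& fragments) {
    for (std::size_t i = 1; i < fragments.size(); ++i) {
        ResolveFragment key = fragments[i];
        std::size_t j = i;
        while (j > 0 && fragments[j - 1].depth > key.depth) {
            fragments[j] = fragments[j - 1];
            --j;
        }
        fragments[j] = key;
    }
}

// Blend the sorted fragments over the framebuffer color, back to front:
// dst = src * alpha + dst * (1 - alpha).
Rgb resolve(std::vector<ResolveFragment> fragments, Rgb framebuffer) {
    insertion_sort_by_depth(fragments);
    for (std::size_t i = fragments.size(); i-- > 0;) {
        const ResolveFragment& f = fragments[i];
        assert(f.alpha >= 0.0f && f.alpha <= 1.0f);
        framebuffer.r = f.color.r * f.alpha + framebuffer.r * (1.0f - f.alpha);
        framebuffer.g = f.color.g * f.alpha + framebuffer.g * (1.0f - f.alpha);
        framebuffer.b = f.color.b * f.alpha + framebuffer.b * (1.0f - f.alpha);
    }
    return framebuffer;
}
```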

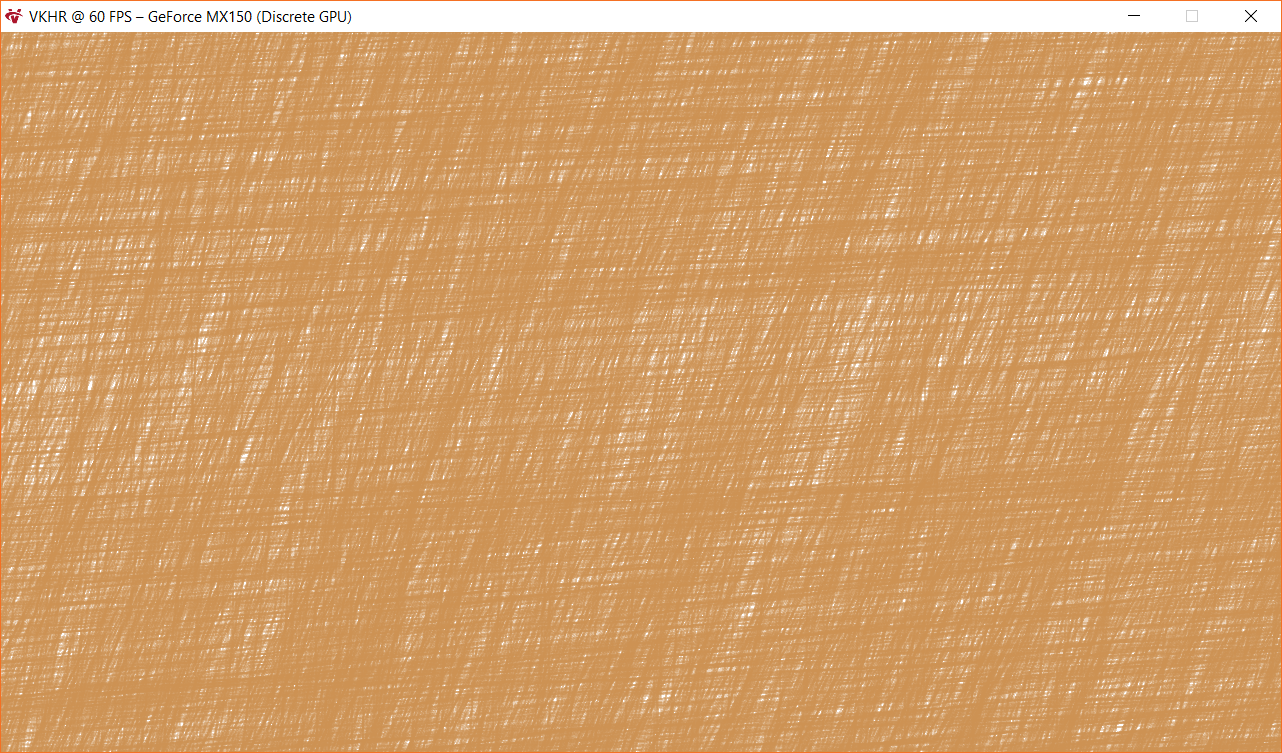

- Problem: performance is (much) more horrible than I'd expected, even with low k!

- Solution: pack our `color` into a `uint`, reducing node size to 12 bytes.

- Massive performance gain! Resolve step using 8 nodes runs in 0.4ms.

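A sketch of what the packed 12-byte node could look like, assuming 8 bits per RGBA channel with red in the lowest byte (the layout GLSL's `packUnorm4x8` produces). The C++ names below are hypothetical; in the shader itself, `packUnorm4x8` / `unpackUnorm4x8` would do the packing.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Packs a [0, 1] RGBA color into one 32-bit value, 8 bits per channel,
// with R in the lowest byte (same layout as GLSL's packUnorm4x8).
std::uint32_t pack_unorm4x8(float r, float g, float b, float a) {
    auto to_byte = [](float v) {
        v = v < 0.0f ? 0.0f : (v > 1.0f ? 1.0f : v);
        return static_cast<std::uint32_t>(std::lround(v * 255.0f));
    };
    return to_byte(r) | (to_byte(g) << 8) | (to_byte(b) << 16) | (to_byte(a) << 24);
}

// Recovers one channel (0 = R ... 3 = A) as a float in [0, 1].
float unpack_channel(std::uint32_t packed, int channel) {
    assert(channel >= 0 && channel < 4);
    return static_cast<float>((packed >> (8 * channel)) & 0xFFu) / 255.0f;
}

// A 12-byte PPLL node: packed color, depth and index of the next node,
// instead of 24 bytes with a 16-byte vec4 color.
struct PackedNode {
    std::uint32_t color;
    float depth;
    std::uint32_t next;
};
static_assert(sizeof(PackedNode) == 12, "node should be 12 bytes");
```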

- Added something similar to MLAB or the TressFX "tail shading" algorithm:

- Write fragments that aren't in the PPLLs to the "target" out-of-order.

- When resolving, it's blended with sorted ones. Need smaller k-buffer!

- Implemented the GBAA based on TressFX's `ComputeCoverage` HLSL shader:

- Still haven't gotten around to it yet since we need to have billboards.

- Better default hair parameters (e.g. a bit brighter) for the live demonstrations.

- Fixed PPLL shader code by doing an `imageAtomicExchange` with head instead.

- Added `ppll_size` for checking if we're allocating more memory than we have.

- Ensured that the `PPLL_NULL_NODE` was given when the `node` buffer overflows.

- Updated PPLL allocation scheme (24 Bytes * Width * Height * AverageNodes).
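The allocation scheme above is simple arithmetic; this sketch computes both buffer sizes, assuming a 32-bit head pointer per pixel (the helper name `ppll_sizes` is ours). At 1920x1080 with 4 nodes per pixel and 24-byte nodes it gives 8,294,400 bytes (about 7.9 MiB) for the heads and 199,065,600 bytes (about 190 MiB) for the nodes.

```cpp
#include <cassert>
#include <cstdint>

// Sizes of the PPLL buffers: one 32-bit head pointer per pixel (storage
// image), plus average_nodes nodes per pixel of node_bytes each (SSBO).
struct PpllSizes {
    std::uint64_t head_bytes;
    std::uint64_t node_bytes;
};

PpllSizes ppll_sizes(std::uint32_t width, std::uint32_t height,
                     std::uint32_t average_nodes, std::uint32_t node_bytes) {
    assert(width > 0 && height > 0 && average_nodes > 0);
    const std::uint64_t pixels = std::uint64_t{width} * height;
    return { pixels * sizeof(std::uint32_t), pixels * average_nodes * node_bytes };
}
```

Passing a 12-byte node size instead of 24 halves the node buffer.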

- Removed unnecessary `imageLoad` when inserting fragment into the linked list.

- Just used the return value from `imageAtomicExchange` to find head nodes.


- Shifted the set bindings of strand fragment shader to accommodate the PPLL.

- Added ability to clear the PPLL by using `vkCmdClearColorImage` and `vkCmdFill`.

- Need to clear both the `head` and `ppll_counter` (i.e. the atomic value).


- Started replacing framebuffer outputs with PPLL fragment insertions instead.

- Modified the existing rendering pass to follow the general PPLL procedures:

- Draw all opaque geometry (e.g. the models underneath the hair style).

- Clear the `head` per-pixel pointers to `PPLL_NULL_NODE` to reset the PPLL.

- Draw all transparent geometry (i.e. explicit hair strands) using our PPLL.

- [WiP] Resolve PPLL by sorting and blending fragments in a screen-pass.

-

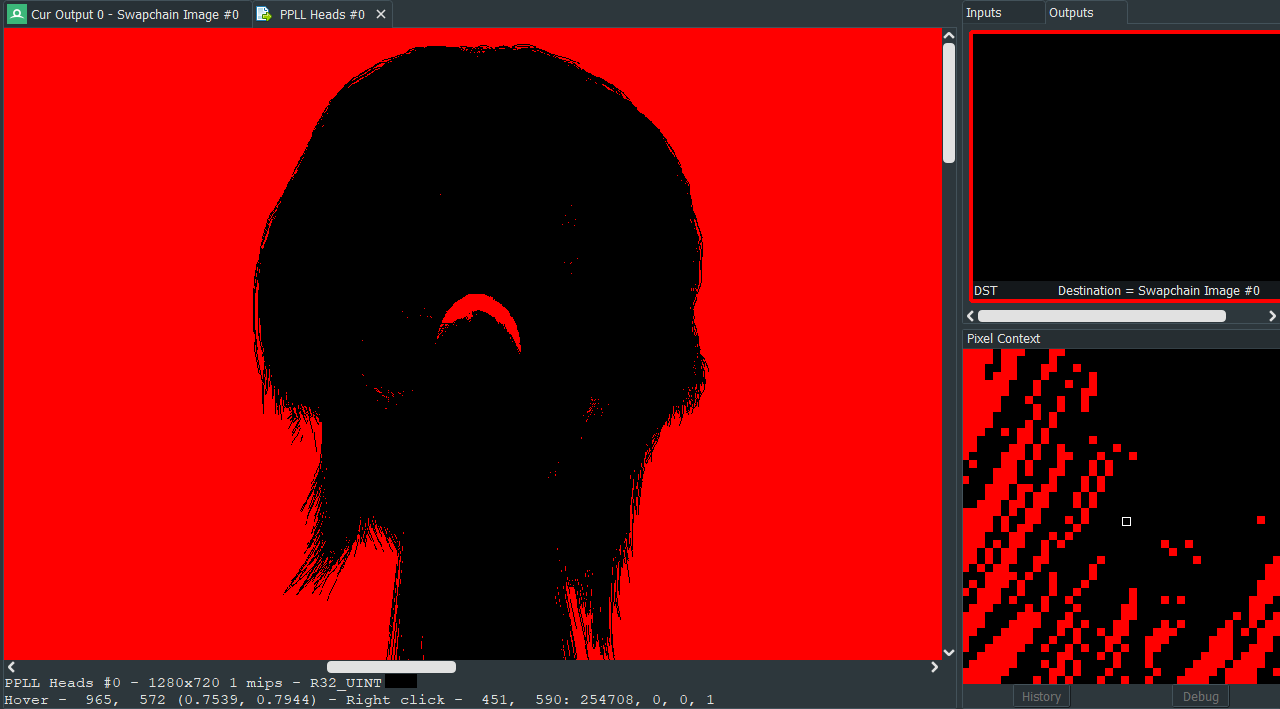

Issues: the PPLL

headslook OK butnodeshave padding/alignment issues. - Fixed Vulkan validation errors caused by adding the extra raymarch subpass.

- Added "better" shadow mapping bias values for the `ponytail` and the `bear`.

- Reorganized the shaders to make more sense when we add AA and blending.

- Replaced an unnecessary `clamp` with a `max` in the Kajiya-Kay shaders.

- Added thickness data and applied Phone-Wire AA (not based on coverage yet).

- Not surprised... looks funky as the blending doesn't happen in the right order.

- Started working on the PPLL (Per-Pixel Linked List) implementation, we've got:

- Allocation of `head` with `StorageImage` and `nodes` using `StorageBuffer`.

- Right now `head` uses 8 MiB @1080p and the `nodes` use 200 MiB where:

- We allocate "scratch" memory for 4 fragments per pixel (on average).

- Each node is 24 bytes: `color` (16 bytes), `depth` and `next` (4 bytes each).

- `next` is a pointer to the next fragment in the same pixel in the PPLL.

- Implemented the `ppll_next`, `ppll_node`, `ppll_head` and `ppll_link` helpers.

- `ppll_next` uses `atomicAdd` to choose the "free" node for allocation.

- `ppll_link` does an `atomicExchange` to link a new node to the old node.

- `ppll_head` finds the first fragment for a pixel. The user then follows `next`.


- Added a sorting algorithm for the resolve step (insertion sorting for now).

- Started working on the resolve step. Still need to create extra subpasses!

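The helpers above map closely onto CPU atomics, so here is a sketch of the same insertion and traversal with `std::atomic`, including an overflow check that drops the fragment when the node pool runs out. The struct and member names are hypothetical stand-ins for the GLSL `ppll_*` helpers; on the GPU, the head exchange would be an `imageAtomicExchange` on the head image and the counter an `atomicAdd` in an SSBO.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

constexpr std::uint32_t PPLL_NULL_NODE = 0xFFFFFFFFu; // marks "end of list"

struct Node {
    float depth;
    std::uint32_t color;
    std::uint32_t next; // index of the next node for the same pixel
};

// CPU stand-in for the GPU data: per-pixel heads, a node pool and a counter.
struct PerPixelLinkedList {
    std::vector<std::atomic<std::uint32_t>> heads;
    std::vector<Node> nodes;
    std::atomic<std::uint32_t> counter{0};

    PerPixelLinkedList(std::size_t pixels, std::size_t capacity)
        : heads(pixels), nodes(capacity) {
        for (auto& head : heads) head.store(PPLL_NULL_NODE); // the "clear" pass
    }

    // Grab a free node with an atomic add, then swap it in as the new head,
    // pointing it at the previous head. Returns false on pool overflow.
    bool insert(std::size_t pixel, float depth, std::uint32_t color) {
        const std::uint32_t index = counter.fetch_add(1);
        if (index >= nodes.size()) return false;
        nodes[index] = { depth, color, PPLL_NULL_NODE };
        nodes[index].next = heads[pixel].exchange(index);
        return true;
    }

    // Start at the head and follow `next`; only valid once all insertions
    // are done (the resolve pass runs after the geometry pass).
    std::vector<Node> fragments(std::size_t pixel) const {
        std::vector<Node> result;
        for (std::uint32_t i = heads[pixel].load(); i != PPLL_NULL_NODE; i = nodes[i].next)
            result.push_back(nodes[i]);
        return result;
    }
};
```

Because new nodes are pushed at the head, a pixel's fragments come back in reverse insertion order, which is why the resolve step has to sort them.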

- Added better keybindings. Moving sources of light can be stopped using `L`.

- Also, fixed shadows when switching between the rasterizer / raymarcher.

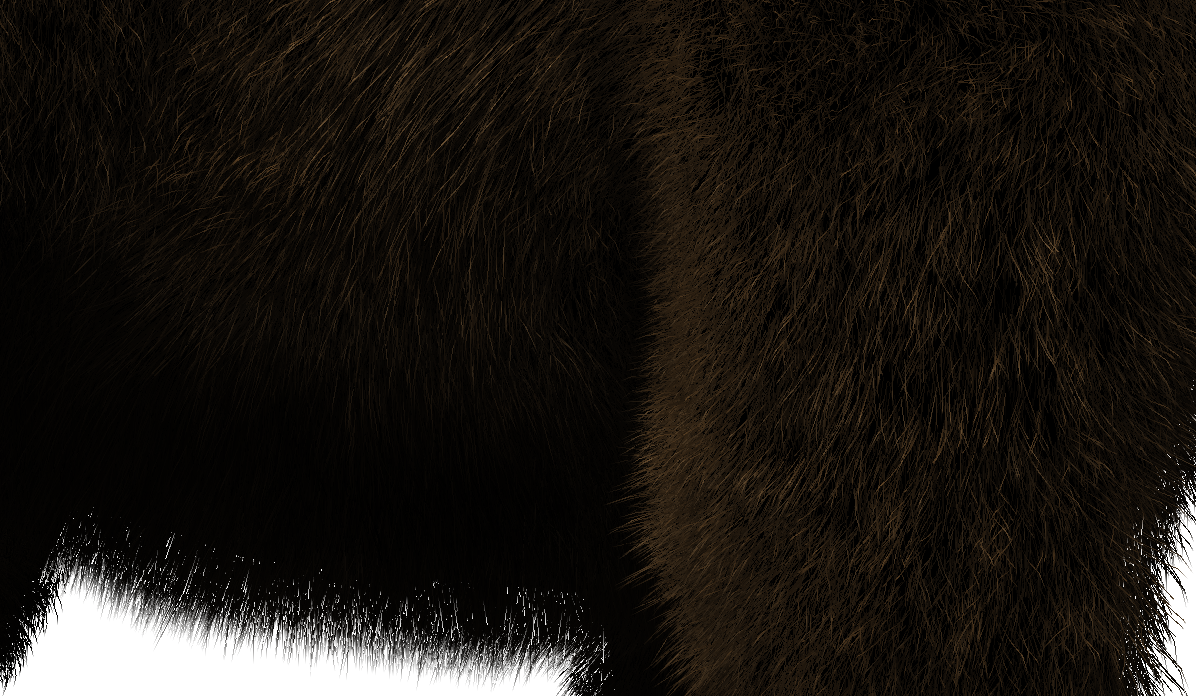

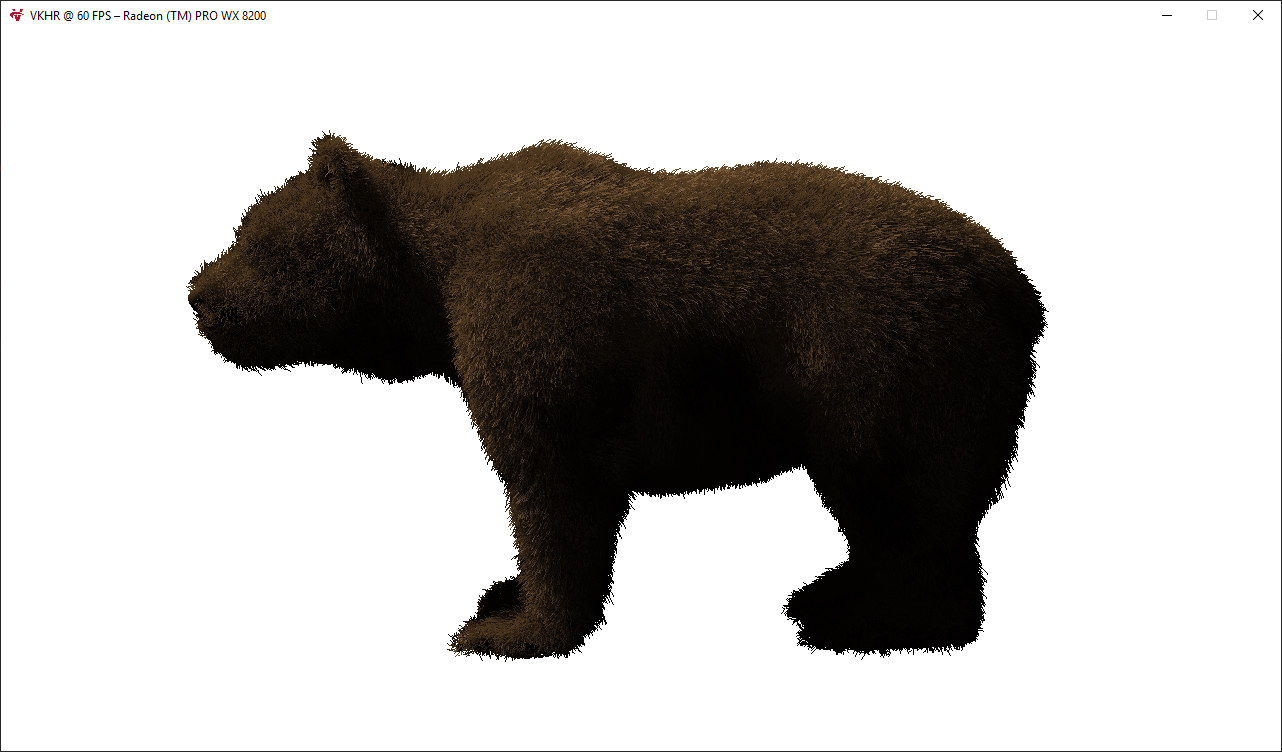

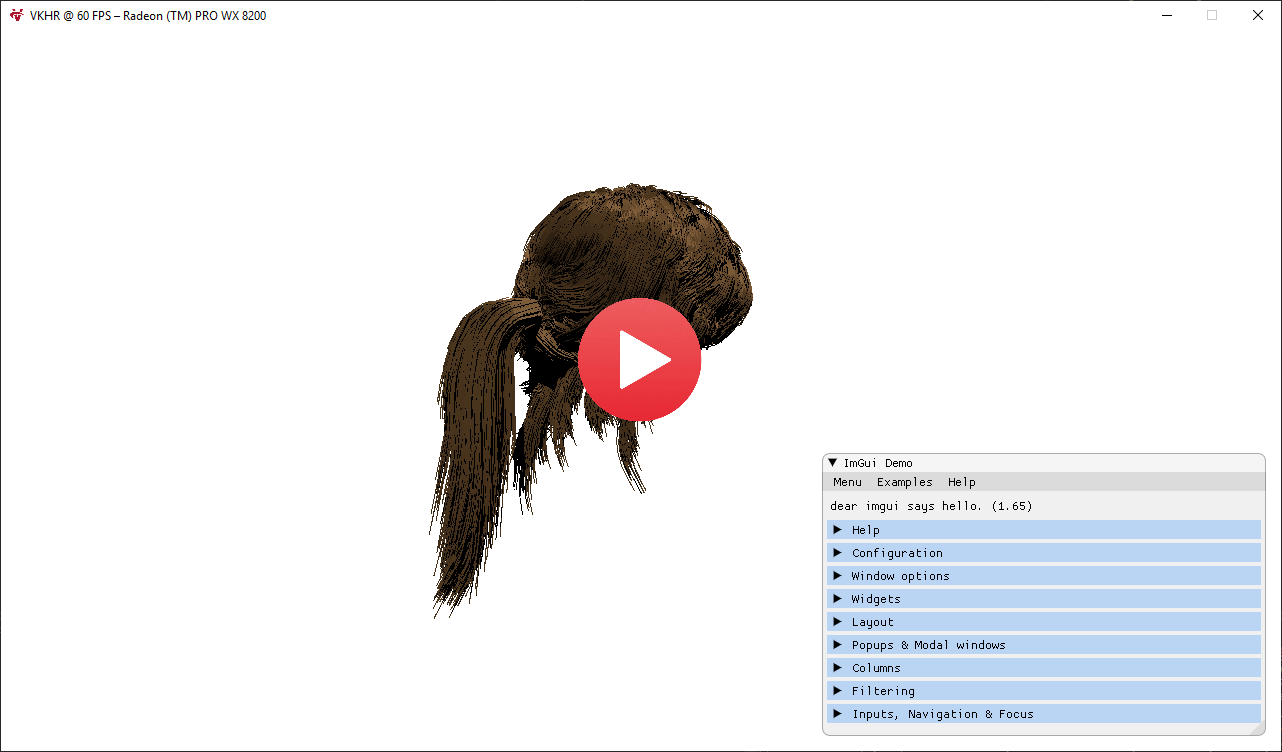

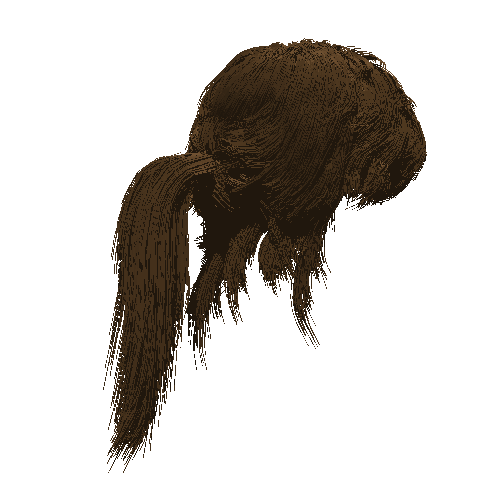

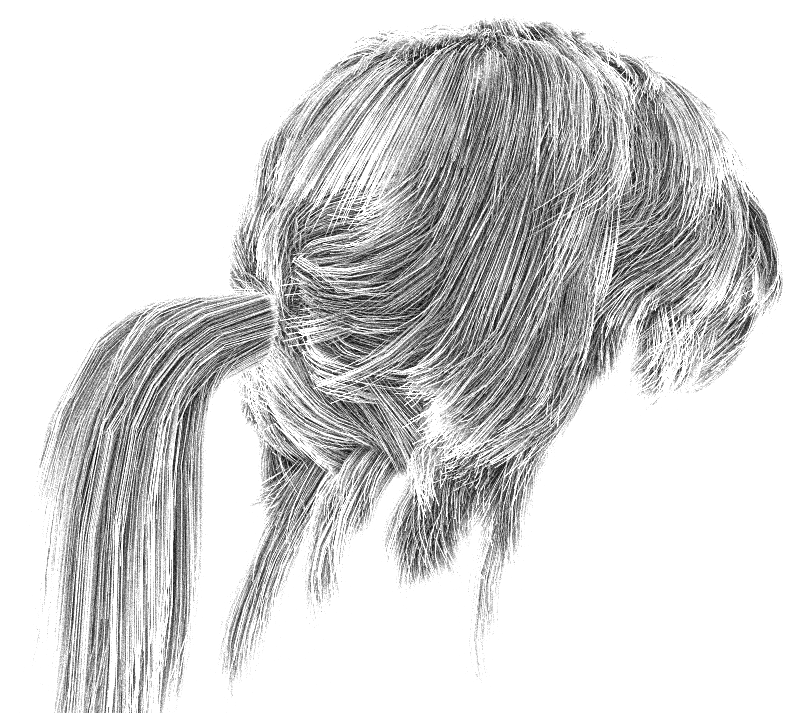

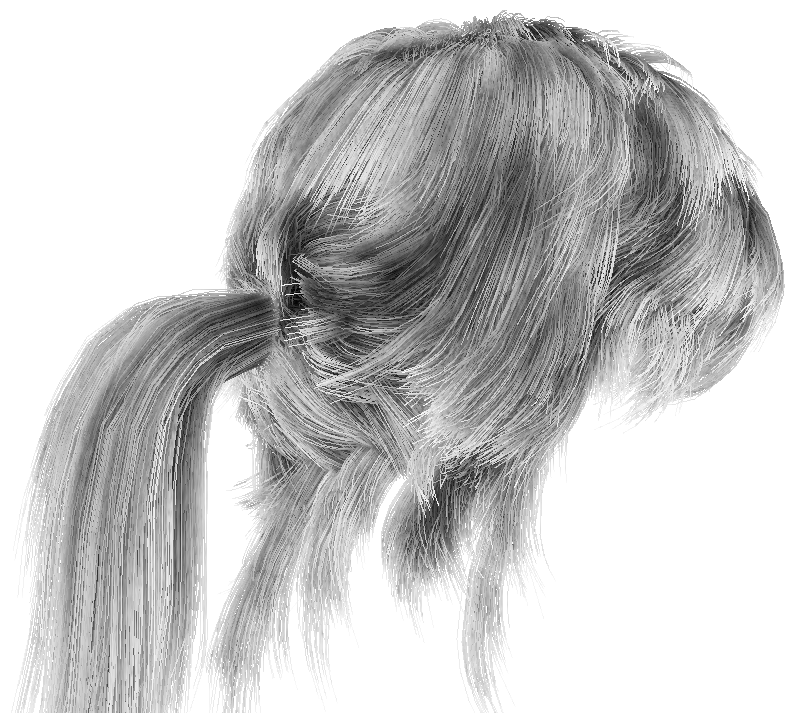

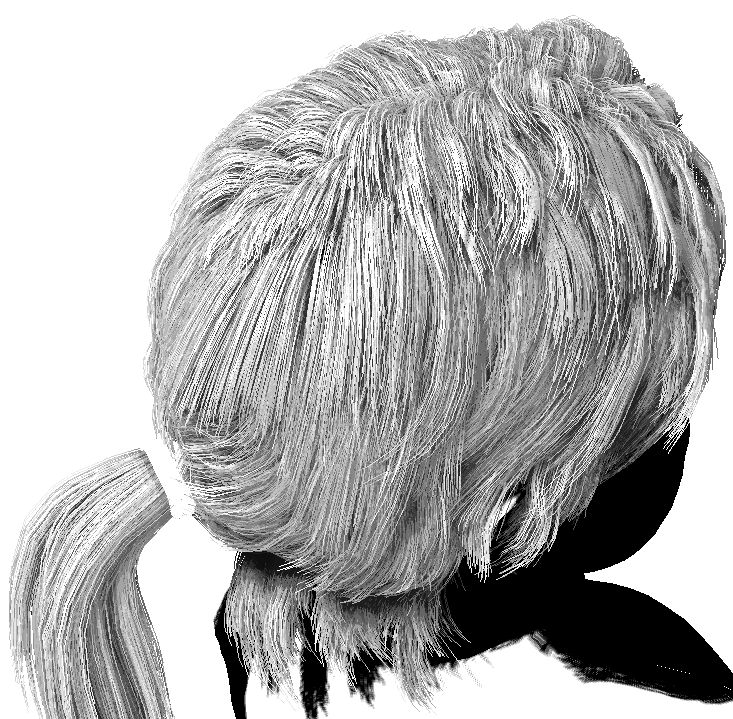

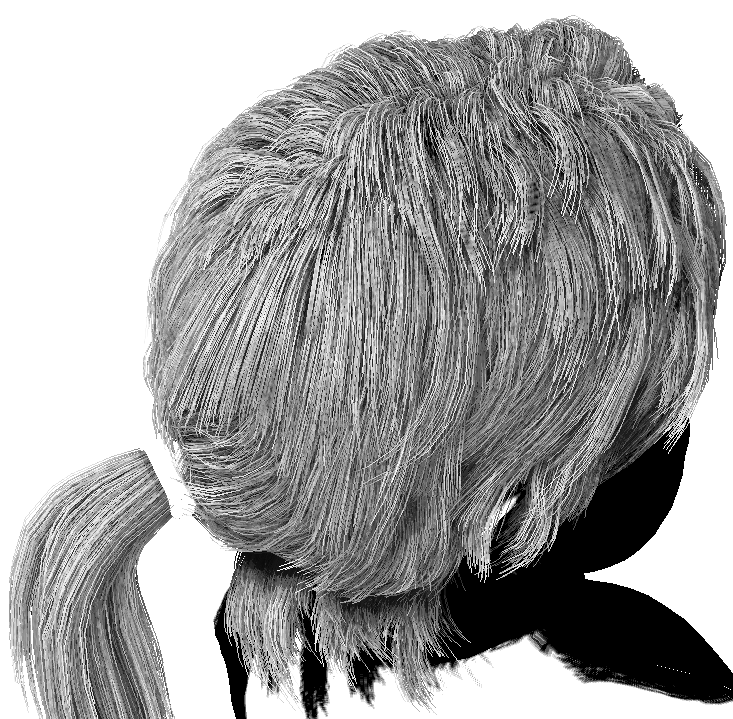

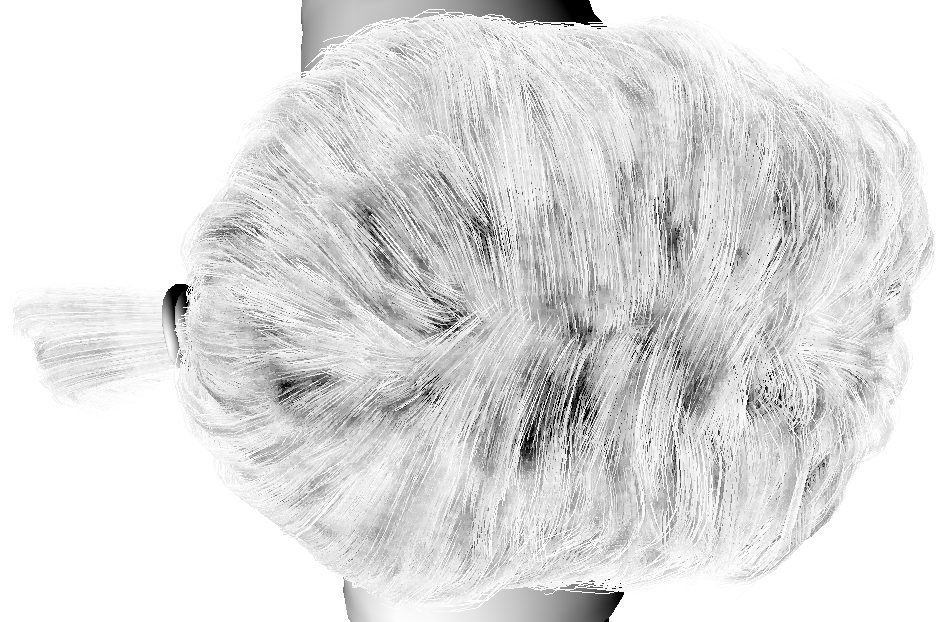

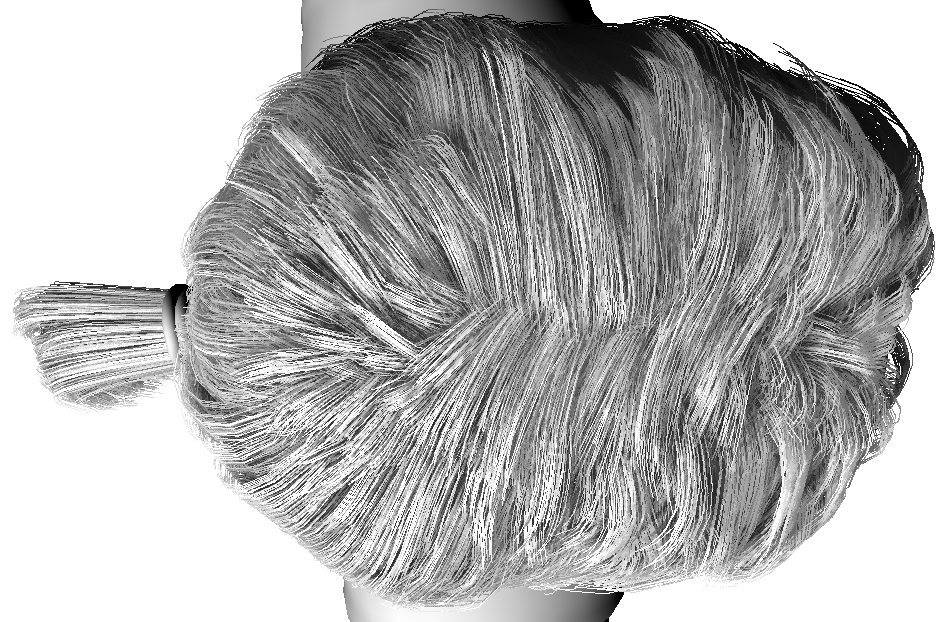

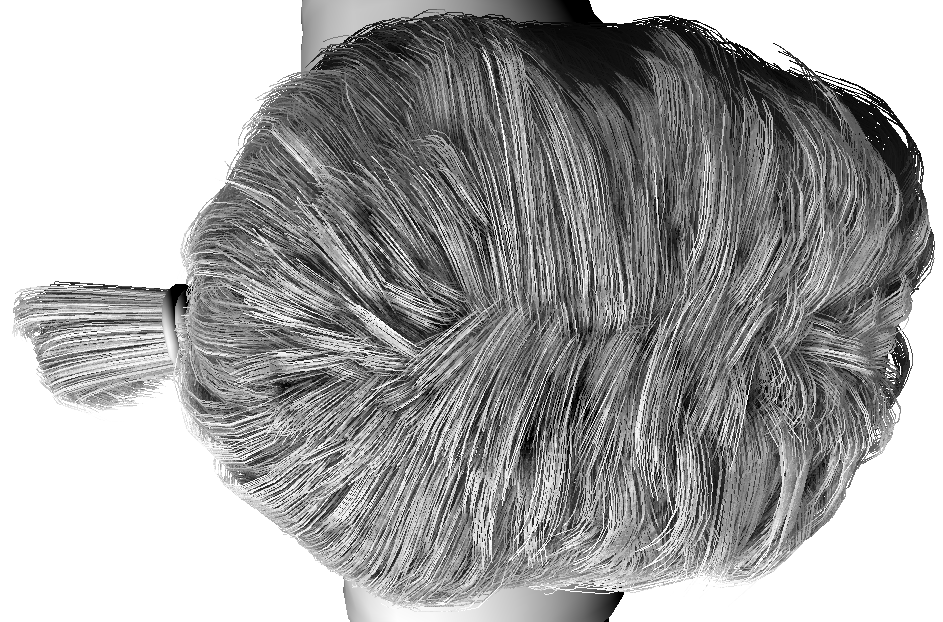

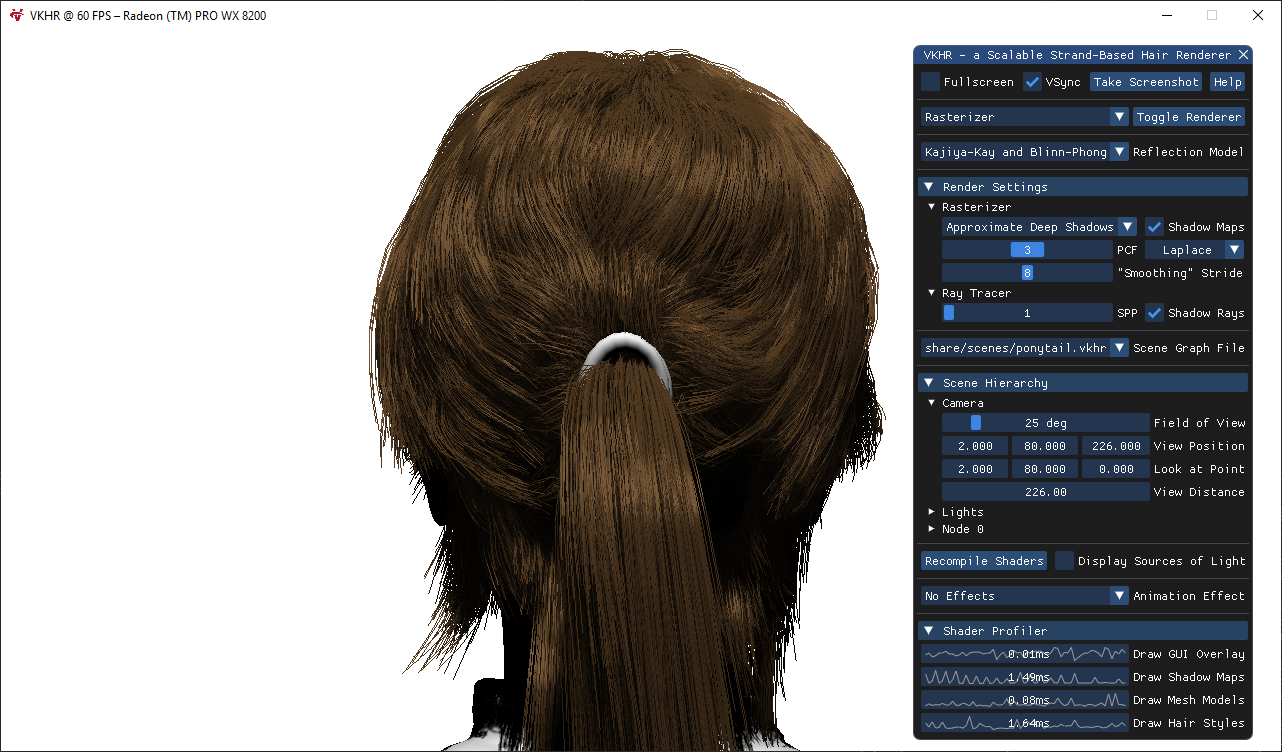

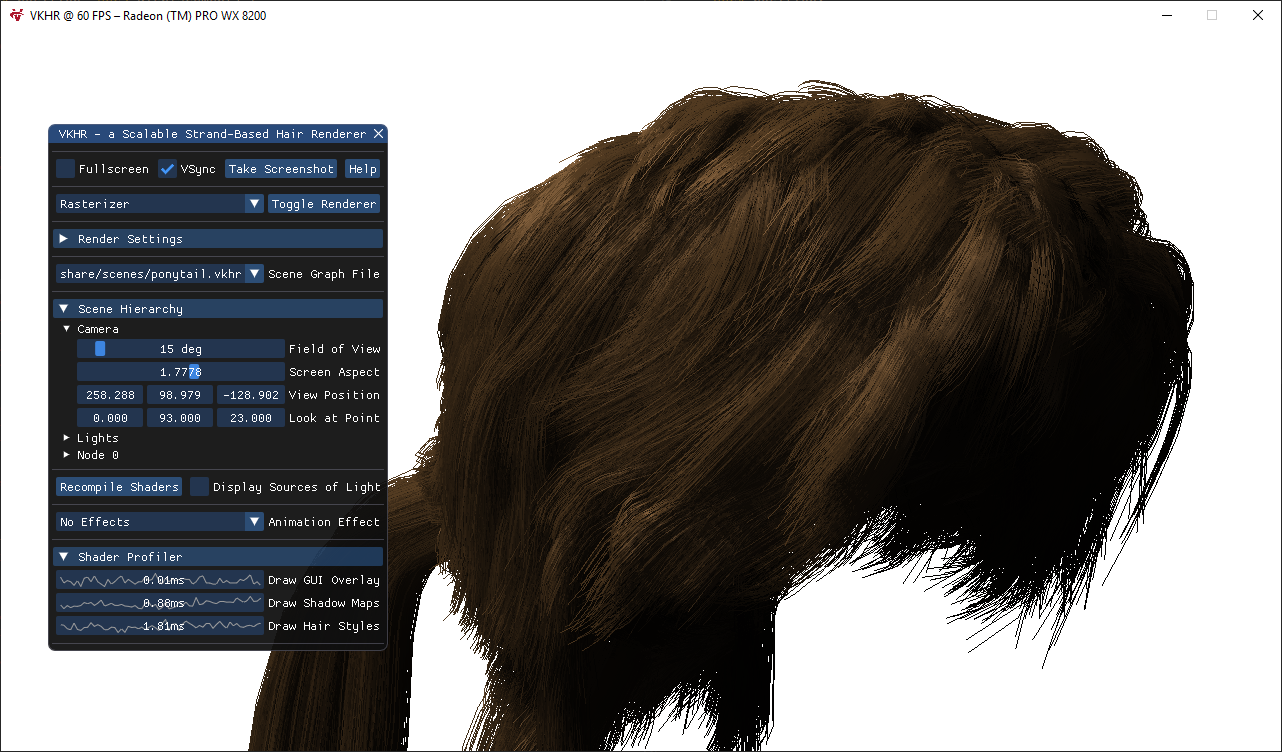

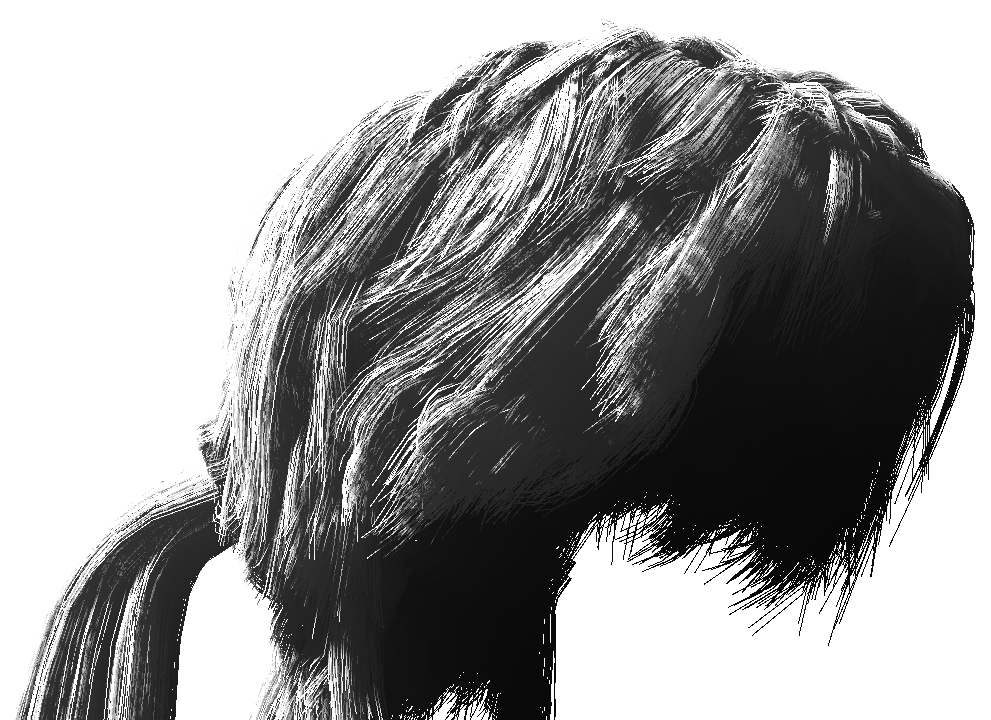

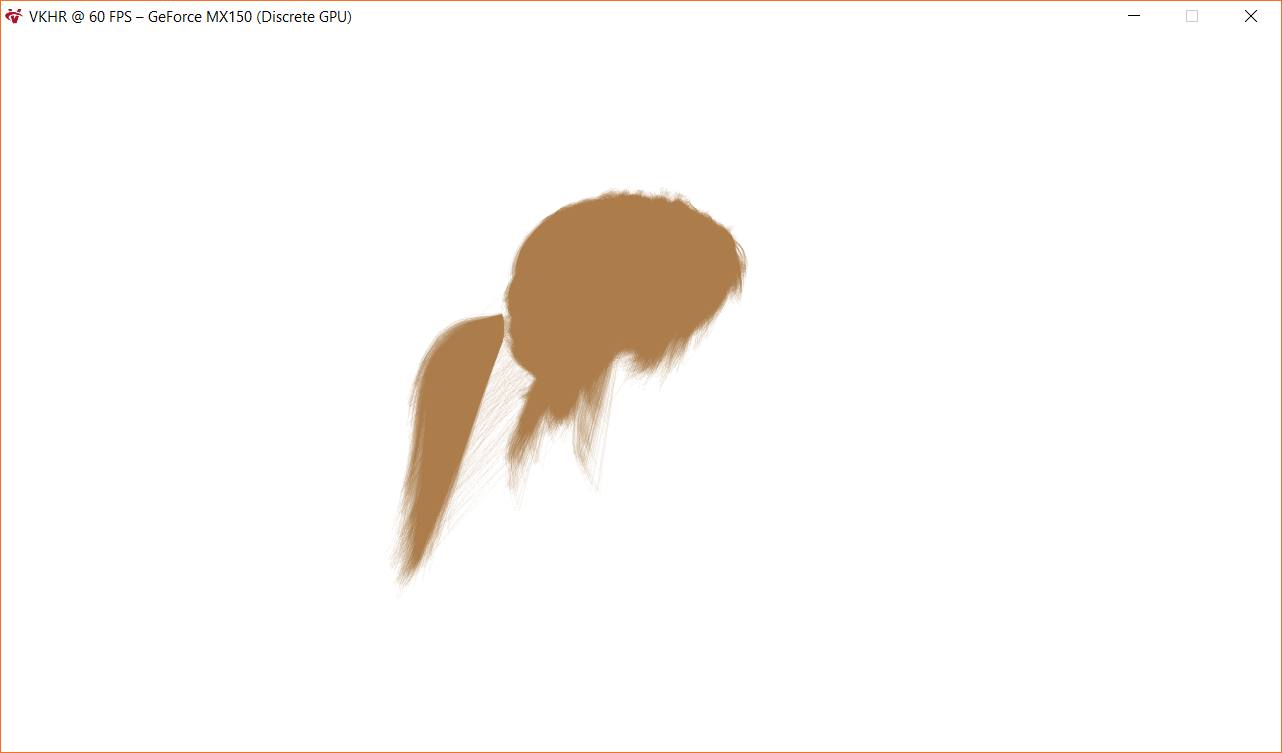

Figures: on the left we have the raymarched solution, in the center the rasterized one, and on the right the raytraced solution. You'll probably need to open the images to see some differences.

- Created a modified color rendering pass for extra volume rendering subpass.

- We need it since we need to know the current value in the depth buffer.

- Added depth buffer as a `subpassInput` to the volume rendering subpasses.

- Needed to add dependencies from subpass 0 to 1 and the right layout.

- Fixed mixed-rendering by `discard`-ing fragments that are being occluded.

- By sampling from the depth buffer and reading the raymarched depth.

- Fixed bug where Kajiya-Kay calculation would be in the wrong coordinates.

- General refactor and documentation of the shaders (and better names too).

- Added equivalent of ADSM to the raymarcher (same idea, but exact values)

- Volume Approximated Deep Shadows (VADS), I guess we should call it?

- Made it so that the isosurfaces could be "nudged" into the right directions :-)

- Added knobs to control the raymarcher parameters (isosurface, samples, ...)

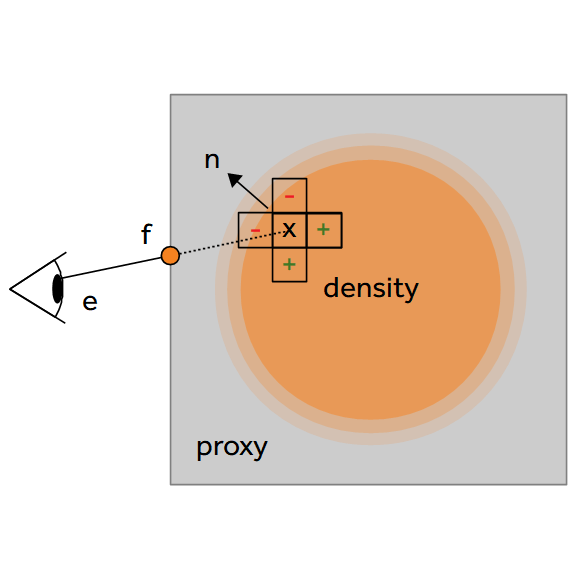

- Need to find tangent to correctly apply Kajiya-Kay in the raymarching-case:

- Either estimate tangent by using the curvatures of the volume density,

- or voxelize the tangents themselves (needs extra memory, but easier to find).

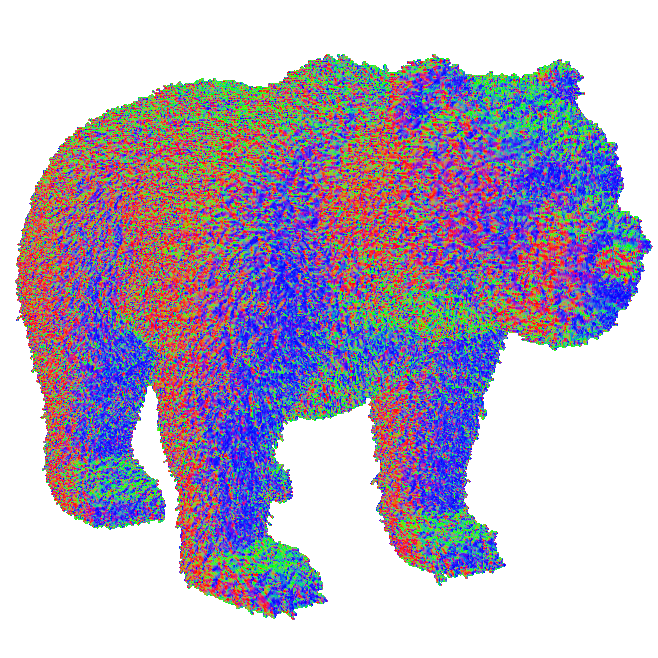

- Added an initial tangent voxelization solution with `imageAtomicAdd` again:

- Quantize the tangent, e.g. [-1,+1] -> [-128,+127] (not for all segments).

- Apply `imageAtomicAdd` to each component; density is the hair number.

- When reading the tangent, de-quantize it and divide by the samples taken.

- Tangent will not change much in every other segment --> less samples

- Added `imageAtomicAverageRGBA8`, in case we want to try that later as well.

- Performance degrades with collisions, but it will require less GPU memory.
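The quantize / accumulate / de-quantize scheme above, sketched in C++. The scale of 127 (so that -1 and +1 map symmetrically), the 32-bit per-component sums and the type names are assumptions for illustration. The result is an average, so it generally needs re-normalizing, and strands whose tangents point in opposite directions partially cancel out.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

struct Vec3 { float x, y, z; };

// [-1, +1] -> [-127, +127], symmetric around zero.
std::int32_t quantize(float v) {
    v = std::clamp(v, -1.0f, 1.0f);
    return static_cast<std::int32_t>(std::lround(v * 127.0f));
}

float dequantize(float q) { return q / 127.0f; }

// What one voxel holds after imageAtomicAdd-style accumulation: per-component
// sums of quantized tangents, plus the number of samples (the density).
struct TangentVoxel {
    std::int32_t sum[3] = {0, 0, 0};
    std::int32_t samples = 0;

    void add(Vec3 tangent) {
        sum[0] += quantize(tangent.x);
        sum[1] += quantize(tangent.y);
        sum[2] += quantize(tangent.z);
        samples += 1;
    }

    // Divide by the number of samples taken, then de-quantize.
    Vec3 average() const {
        assert(samples > 0);
        const float n = static_cast<float>(samples);
        return { dequantize(sum[0] / n), dequantize(sum[1] / n), dequantize(sum[2] / n) };
    }
};
```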

- Implemented "ground-truth" (raymarching) AO estimation using the rasterizer.

- Results aren't that different from LAO (i.e. project the AO sphere on grid).

- Added clamping factor to LAO to match raytraced Kajiya-Kay highlights better.

- This is needed since the LAO is never truly white on the volume estimate.

- Reorganized shaders, and removed `BezierDirect` since we won't be using it.

- Added `Volume` pipeline and modified Vulkan wrapper with extra functions.

- Created proxy-geometry (AABB of volume) for the volume rendering pipeline.

- Fixed memory leak where `QueryPool` didn't free dynamic timestamp memory.

- Fixed camera `position` uniform alignments (there were 2 `float`s before it...).

- Implemented a simple volume renderer (i.e. gather densities and show them).

- Added a way to find the isosurface by accumulating densities until the threshold d.

- Approximated surface normal with finite differences (optionally 2-3x filtered).

- Experimented with Lambertian / Kajiya-Kay (using normal instead of tangent).

- Tried approximating the tangents but to no avail. I have some ideas in mind.

- Started working on integrating depth buffer information in volume renderer.
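A minimal sketch of the isosurface search and finite-difference normal described above, assuming a cubic density volume addressed in voxel units with nearest-neighbour lookups for brevity (a real raymarcher would sample a filtered 3D texture). The threshold `d` follows the log; all type and function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <optional>
#include <vector>

struct Vec3 { float x, y, z; };

// A tiny dense density volume with clamp-to-edge, nearest-neighbour lookups.
struct DensityVolume {
    int size;                   // size^3 voxels
    std::vector<float> density; // x-major layout

    float at(int x, int y, int z) const {
        auto clamp = [this](int v) { return v < 0 ? 0 : (v >= size ? size - 1 : v); };
        return density[(clamp(z) * size + clamp(y)) * size + clamp(x)];
    }
    float sample(Vec3 p) const { // p in voxel units
        return at(int(std::floor(p.x)), int(std::floor(p.y)), int(std::floor(p.z)));
    }
};

// March from origin along direction (one step per iteration), accumulating
// density until it reaches the isosurface threshold d.
std::optional<Vec3> find_isosurface(const DensityVolume& v, Vec3 origin, Vec3 direction,
                                    int steps, float d) {
    float accumulated = 0.0f;
    Vec3 p = origin;
    for (int i = 0; i < steps; ++i) {
        accumulated += v.sample(p);
        if (accumulated >= d) return p;
        p = { p.x + direction.x, p.y + direction.y, p.z + direction.z };
    }
    return std::nullopt;
}

// Central differences of the density: density grows towards the inside of
// the hair volume, so the surface normal points the opposite way.
Vec3 estimate_normal(const DensityVolume& v, Vec3 p) {
    const int x = int(std::floor(p.x)), y = int(std::floor(p.y)), z = int(std::floor(p.z));
    const Vec3 g = { v.at(x + 1, y, z) - v.at(x - 1, y, z),
                     v.at(x, y + 1, z) - v.at(x, y - 1, z),
                     v.at(x, y, z + 1) - v.at(x, y, z - 1) };
    const float length = std::sqrt(g.x * g.x + g.y * g.y + g.z * g.z);
    assert(length > 0.0f);
    return { -g.x / length, -g.y / length, -g.z / length };
}
```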

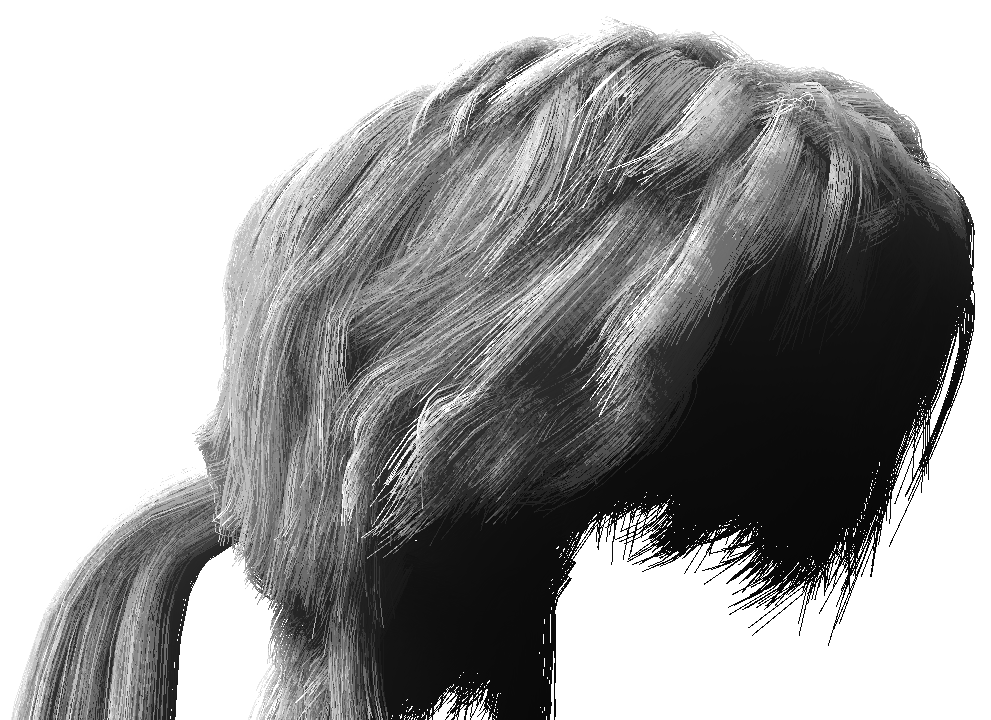

Figure: raytracer tangent interpolation using Embree now matches the rasterizer tangents.

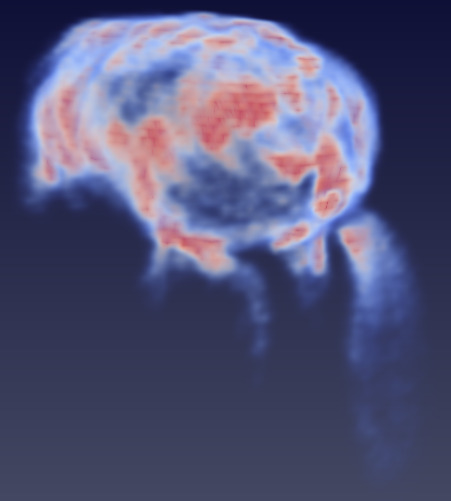

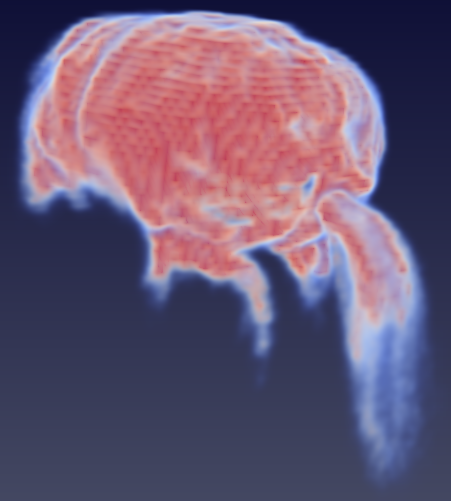

Figure: estimated AO within 2.5 units, raytraced results on the left, volume on the right.

Figure: the Kajiya-Kay and AO terms, notice how AO already improves quality by a lot!

Figure: final render result by adding the directional light source contributions as well.

- Integrated "Correlated Multi-Jittered Sampling", for faster raytracer convergence.

- Interpolated the strand tangents of Embree so that Kajiya-Kay shading can match.

- Matched the light source type of the raytracer / rasterizer to more easily compare.

- Normalized voxelized values (previously some values would be too small or dark).

- Extended raytracer with CMJ sampling and made raytracer show incremental results.

- Added possibility to limit the raytracer's AO estimation radius (as it's usually done).

- Strand thickness can now be pre-generated (right now only the tip is faded out).

- Implemented min/max filtered volume MIP-chain using `gather(volume, lambda)`.

- Will allow us to easily find locations in screen-space with low strand density.
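A CPU sketch of a min/max filtered MIP chain, assuming a cubic, power-of-two density volume. The names are hypothetical and do not reflect the signature of vkhr's `gather(volume, lambda)`; the idea is that a coarse texel with a low max guarantees low strand density in the whole region beneath it.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// One level of the chain: each texel stores the min and max of the 2x2x2
// texels beneath it in the finer level.
struct MinMaxLevel {
    std::size_t size; // size^3 texels
    std::vector<float> min, max;
};

MinMaxLevel reduce(const MinMaxLevel& level) {
    assert(level.size >= 2 && level.size % 2 == 0);
    const std::size_t n = level.size / 2;
    MinMaxLevel next{n, std::vector<float>(n * n * n), std::vector<float>(n * n * n)};
    auto index = [](std::size_t s, std::size_t x, std::size_t y, std::size_t z) {
        return (z * s + y) * s + x;
    };
    for (std::size_t z = 0; z < n; ++z)
    for (std::size_t y = 0; y < n; ++y)
    for (std::size_t x = 0; x < n; ++x) {
        float lo = level.min[index(level.size, 2 * x, 2 * y, 2 * z)];
        float hi = level.max[index(level.size, 2 * x, 2 * y, 2 * z)];
        for (std::size_t dz = 0; dz < 2; ++dz)
        for (std::size_t dy = 0; dy < 2; ++dy)
        for (std::size_t dx = 0; dx < 2; ++dx) {
            const std::size_t i = index(level.size, 2 * x + dx, 2 * y + dy, 2 * z + dz);
            lo = std::min(lo, level.min[i]);
            hi = std::max(hi, level.max[i]);
        }
        next.min[index(n, x, y, z)] = lo;
        next.max[index(n, x, y, z)] = hi;
    }
    return next;
}

// Builds the whole chain, from the full volume down to a single texel.
std::vector<MinMaxLevel> build_min_max_chain(std::size_t size, const std::vector<float>& density) {
    assert(density.size() == size * size * size);
    std::vector<MinMaxLevel> chain{ MinMaxLevel{size, density, density} };
    while (chain.back().size > 1) chain.push_back(reduce(chain.back()));
    return chain;
}
```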

- Added Local Ambient Occlusion (LAO) for volumes, i.e. project a sphere in the volume.

- Fixed bug where geometry shaders weren't included in `ShaderModule` abstraction.

- Added geometry shader for billboarding strands (line to quad). Penalty cost TBD.

- General shader refactoring (e.g. `origin` -> `volume_origin`) and documentation.

- Clear volume a bit faster by using `vkCmdClearColorImage` instead of in the kernel.

- Implemented volume AO by casting towards the sides and the corners of a cube.

- Started work on Phone-Wire AA for the hair billboards (already "works" for lines).

- Need to blend fragments with the help of e.g. a PPLL or PTLL (à la Dominik).

Figures: top-left is the raytraced AO, top-right is the visualized densities, bottom-left ADSM.

- Made density volume use the `GENERAL` layout to remove validation layer errors.

- The raytracer now traverses the scene graph nodes OK (bug when loading bear).

- Implemented the ground-truth AO calculation in the raytracer. It works like this:

- Shoot rays from the camera towards the scene, and for each hair strand hit:

- Spawn k occlusion rays from the hit towards random directions in a sphere.

- The occlusion at the strand is therefore h/k, where h is the number of hits.

- In my implementation I divide k by 2 since we're not sampling a hemisphere.
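The estimator above can be sketched like this in C++, assuming a caller-supplied `occluded(origin, direction)` callback that stands in for an Embree occlusion query (the callback and function names are hypothetical). Directions are drawn uniformly on the sphere by normalizing Gaussian samples, and h is divided by k / 2 as described, clamped to 1.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <functional>
#include <random>

struct Vec3 { float x, y, z; };

// Uniformly distributed direction on the unit sphere (normalized Gaussian).
Vec3 random_sphere_direction(std::mt19937& rng) {
    std::normal_distribution<float> gaussian(0.0f, 1.0f);
    Vec3 d;
    float length;
    do {
        d = {gaussian(rng), gaussian(rng), gaussian(rng)};
        length = std::sqrt(d.x * d.x + d.y * d.y + d.z * d.z);
    } while (length == 0.0f);
    return { d.x / length, d.y / length, d.z / length };
}

// Occlusion at a strand hit: spawn k rays in random sphere directions and
// count the h that hit something. The whole sphere is sampled (not a
// hemisphere), so h is divided by k / 2 and clamped to 1.
float ambient_occlusion(Vec3 hit_point, int k, std::mt19937& rng,
                        const std::function<bool(Vec3, Vec3)>& occluded) {
    assert(k >= 2);
    int h = 0;
    for (int i = 0; i < k; ++i)
        if (occluded(hit_point, random_sphere_direction(rng))) ++h;
    return std::min(1.0f, static_cast<float>(h) / (static_cast<float>(k) / 2.0f));
}
```

As a sanity check, a point with an infinite occluder below it (rays hit whenever they point downwards) ends up close to fully occluded, since about half of the k rays hit.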

- Added ability to apply Gaussian PCF to volumes as well (removed a lot of noise).

- Integrated raytracer into the GUI to easily compare rasterizer and raytraced AO.

- Major refactor of C++ and GLSL to make more sense (and be more "modular").

- Improved segment voxelization speed by using a DDA (Digital Differential Analyzer).
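A CPU sketch of segment voxelization with a 3D DDA, in the spirit of Amanatides and Woo's grid traversal: instead of sampling points along a segment, it steps from voxel to voxel along whichever axis reaches its next boundary first. The voxel-unit coordinates, the 32-bit counters and the function name are assumptions; the GPU version described in this log uses `imageAtomicAdd` instead.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <limits>
#include <vector>

struct Vec3 { float x, y, z; };

// Increments the count of every voxel (of a size^3 grid, voxel units) that
// the segment a-b passes through, visiting each such voxel exactly once.
void voxelize_segment(std::vector<std::uint32_t>& counts, int size, Vec3 a, Vec3 b) {
    assert(counts.size() == static_cast<std::size_t>(size) * size * size);
    const float start[3] = {a.x, a.y, a.z}, end[3] = {b.x, b.y, b.z};
    const float inf = std::numeric_limits<float>::infinity();
    int voxel[3], last[3], step[3];
    float t_max[3], t_delta[3];
    for (int i = 0; i < 3; ++i) {
        voxel[i] = static_cast<int>(std::floor(start[i]));
        last[i] = static_cast<int>(std::floor(end[i]));
        const float d = end[i] - start[i];
        step[i] = d > 0.0f ? 1 : (d < 0.0f ? -1 : 0);
        // Parametric t (0 at a, 1 at b) of the first voxel boundary on this
        // axis, and the t distance between consecutive boundaries.
        const float boundary = step[i] > 0 ? voxel[i] + 1.0f : static_cast<float>(voxel[i]);
        t_max[i] = step[i] != 0 ? (boundary - start[i]) / d : inf;
        t_delta[i] = step[i] != 0 ? std::fabs(1.0f / d) : inf;
    }
    while (true) {
        const bool inside = voxel[0] >= 0 && voxel[0] < size && voxel[1] >= 0 &&
                            voxel[1] < size && voxel[2] >= 0 && voxel[2] < size;
        if (inside) ++counts[(voxel[2] * size + voxel[1]) * size + voxel[0]];
        if (voxel[0] == last[0] && voxel[1] == last[1] && voxel[2] == last[2]) break;
        // Step along the axis whose next voxel boundary is closest.
        const int axis = t_max[0] < t_max[1] ? (t_max[0] < t_max[2] ? 0 : 2)
                                             : (t_max[1] < t_max[2] ? 1 : 2);
        if (t_max[axis] > 1.0f) break; // safety net against float round-off
        voxel[axis] += step[axis];
        t_max[axis] += t_delta[axis];
    }
}
```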

- Fixed bug where densities would not be interpolated (used `floor` when sampling).

- Removed unnecessary checks in `tex2Dproj` and "gained" 1ms (from Sasha's code).

- Generated model depth buffer for early fragment reject (for Dominik's HLSL shader).

- Added knobs for visualizing shadow maps / volume densities (useful for debugging).

- Fixed bug where (at least) the `ReduceDepthBuffer` compute pipeline failed to build.

- Added support for `image3D` (i.e. no sampler) bindings, used for the `voxelize` pass.

- General refactor and documentation of the "core" (e.g. ADSM+) vkhr GLSL shaders.

- Made it possible to transition to the `GENERAL` layout, which was convenient for volumes.

- Implemented GPU voxelization by using `imageAtomicAdd`. Timings: ~0.1ms for 256³.

- Added simple GPU raymarching for gathering densities (around 1-2ms for 15 steps).

- Improved Kajiya-Kay with coefficient from a newer paper (seems more correct now).

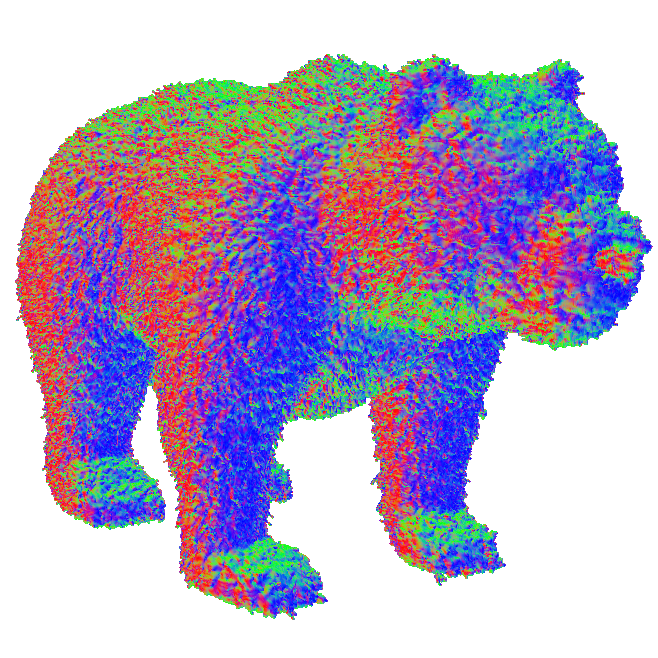

The figure above on the left shows the inverse densities of a 256³ voxelization of the ponytail using the vertex-based voxelization scheme; on the right we use the segment-based one.

Above on the left we interpret these inverse densities as an ambient occlusion term. As can be seen, dark areas imply a lot of hair strands; on the right are the ADSM contributions.

We can combine both of these terms to get the light source contribution (from the ADSM) and the ambient occlusion term (from the volume densities), giving a better approximation.

This gives better shaded results than using plain ADSM, as can be seen above: on the left we have ADSM shadows, and on the right we use ADSM + AO, giving less "plain" results.

- Uploaded volume parameters (bounds, resolution) to the GPU via a uniform buffer.

- Hair style parameters (color, strand radius and shininess) are not hard-coded now.

- Refactored way to upload uniform buffer to be more flexible (hopefully final now).

- Changed Vulkan abstraction to properly (and more easily) support volume images.

- Added ability to update storage buffers (previously assumed only single uploads).

- Added functions to sample volume textures from the shader (from `volume.glsl`).

- Fixed problems with incorrect coordinate-system translation (world ---> volume).
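A sketch of the world-to-volume translation, assuming the volume is described by the AABB's minimum corner, its extent and the grid resolution. The `VolumeParameters` struct and its fields are hypothetical, not vkhr's uniform layout.

```cpp
#include <cassert>

struct Vec3 { float x, y, z; };

// Hypothetical volume description: the hair style's AABB and grid resolution.
struct VolumeParameters {
    Vec3 origin;     // minimum corner of the AABB, in world space
    Vec3 size;       // extent of the AABB, in world space
    Vec3 resolution; // number of voxels along each axis
};

// World space -> normalized texture coordinates in [0, 1] (for sampling).
Vec3 world_to_texture(const VolumeParameters& v, Vec3 p) {
    assert(v.size.x > 0.0f && v.size.y > 0.0f && v.size.z > 0.0f);
    return { (p.x - v.origin.x) / v.size.x,
             (p.y - v.origin.y) / v.size.y,
             (p.z - v.origin.z) / v.size.z };
}

// World space -> voxel space (e.g. for image loads/stores during voxelization).
Vec3 world_to_voxel(const VolumeParameters& v, Vec3 p) {
    const Vec3 uvw = world_to_texture(v, p);
    return { uvw.x * v.resolution.x, uvw.y * v.resolution.y, uvw.z * v.resolution.z };
}
```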

- Interpreted strand inverse densities as ambient occlusion terms (see result above).

Figures: the top row shows a 64³ voxelization of the ponytail, and a 256³ voxelization in the bottom row. The left column uses a voxelization scheme which finds the number of vertices in a voxel, while the one on the right finds the number of segments passing through a voxel.

The vertex voxelization scheme takes around 15ms on the CPU (single-threaded), while the curve voxelization scheme takes 4x longer. There is still room for improvement in both, I think.

Cold colors: few vertices/segments passing through the voxel; warmer colors: many of them.

Each voxel uses 8 bits, so a 256³ voxelization takes 16 MiB of memory, and 1024³ --> 1 GiB max.
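A sketch of the vertex-based scheme, assuming strand vertices in world space and an AABB around the hair style; counts are kept in 8 bits as described, saturating at 255. The function name is hypothetical. The `static_assert`s double-check the memory figures quoted above.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Vec3 { float x, y, z; };

// 8 bits per voxel: 256^3 voxels = 16 MiB and 1024^3 voxels = 1 GiB.
static_assert(256ull * 256 * 256 == 16ull * 1024 * 1024, "256^3 bytes = 16 MiB");
static_assert(1024ull * 1024 * 1024 == 1ull << 30, "1024^3 bytes = 1 GiB");

// Counts how many strand vertices fall into each voxel of a resolution^3
// grid spanning the AABB [lo, hi]; 8-bit counts saturate at 255.
std::vector<std::uint8_t> voxelize_vertices(const std::vector<Vec3>& vertices,
                                            Vec3 lo, Vec3 hi, int resolution) {
    assert(resolution > 0 && hi.x > lo.x && hi.y > lo.y && hi.z > lo.z);
    std::vector<std::uint8_t> voxels(static_cast<std::size_t>(resolution) * resolution * resolution, 0);
    auto cell = [resolution](float p, float low, float high) {
        const int i = static_cast<int>((p - low) / (high - low) * resolution);
        return std::clamp(i, 0, resolution - 1); // vertices on the max face go in the last voxel
    };
    for (const Vec3& v : vertices) {
        const std::size_t index =
            (static_cast<std::size_t>(cell(v.z, lo.z, hi.z)) * resolution + cell(v.y, lo.y, hi.y)) * resolution
            + cell(v.x, lo.x, hi.x);
        if (voxels[index] < 255) ++voxels[index];
    }
    return voxels;
}
```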

- Generated correct AABBs (only the bounding sphere was OK in the previous version).

- Implemented a fast hair voxelization scheme (currently single-threaded on the CPU).

- Implemented an alternative high-quality hair voxelization scheme for e.g. comparison.

- Extended Vulkan abstraction to support uploading of volume/voxel textures as well.

- Started adding (but didn't finish) compute implementations of the fast voxelization.

- Started implementing a simple volume raycaster to visualize the hair style densities.

- Implemented a way to validate these densities by treating them as occlusion values.

- Fixed bugs as I discovered them; most relate to the camera and hair loading.

- Profiled using RGP, the performance seems to match those by Vulkan timestamps.

- Added AABB and bounding sphere calculation to the hair strands for voxelization.

- Implemented camera controls for panning and zooming (previously an ugly hack).

- Made shadow map parameters customizable and changeable at runtime (via GUI).

- Simple node manipulation (transformation) by introducing knobs in the imgui too.

- Made it so that strand and light properties can be changed at runtime via the imgui.

- Display statistics about the hair geometry in the GUI to more easily judge performance.

- Added the skeleton code for some sort of simulation pass in case we need it later.

- Added model loading and rasterization shader with a simple Blinn-Phong shader.

- Added example voxelization shader pipeline (which we can use for Dominik's port).

- Profiler now reflects changes to the renderer parameters (e.g. if shadow removed).

- Started working on implementing a Marschner-based PBR shading model as well.

- Linearized the shadow depth values so that they can be more easily debugged too.

- Models can now cast and receive shadows from the shadow maps (see the image).

- Fixed most annoying z-fighting. In general, a lot of bug fixes for this release too.

- Implemented conventional PCF shadow mapping for use in the model's shadows.

- Further progress with porting Dominik's compute-based strand rendering shaders.

- I've also done some reading on related work too (mostly voxelization and shading):

- "Fast Scene Voxelization and Applications" (2006)

- "Efficient Multiple Scattering in Hair Using Spherical Harmonics" (2008)

- "Real-Time Rendering of Hair Under Low-Frequency Environmental Lighting" (2012)

- "Hair Self-Shadowing and Transparency Depth Ordering Using Occupancy Maps" (2009)

- "Light Scattering from Human Hair Fibers" (2003)

- "Hair Animation and Rendering in the Nalu Demo" (2005)

- "Hair Rendering and Shading" (2004)

- "Augmented Hair in Deus Ex Universe Projects: TressFX 3.0" (2015)

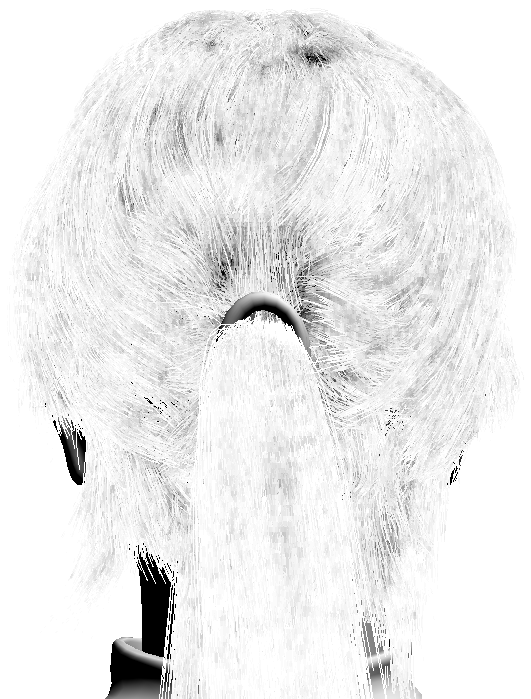

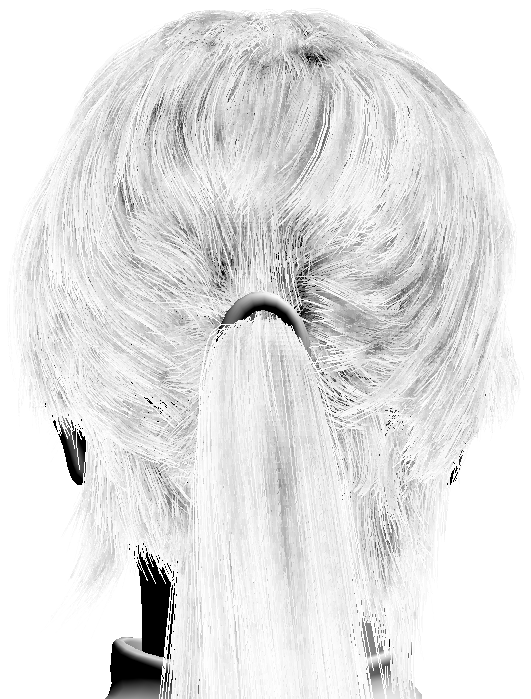

Figures: top row is vanilla ADSM with 3x3 PCF, bottom row is 8x Smooth ADSM 3x3 PCF.

On the left is Kajiya-Kay shading with shadows, on the right, their ADSM shadow values.

- Restructured the hair rendering code to accommodate the hair depth passes.

- Fixed bug where a 2nd shadow map would use a previous shadow map transform.

- Added support for push constants for the MVP matrices of the hair and shadow maps.

- Provided correct depth correction and bias matrices to eliminate the SM artifacts.

- Added ability to reload scenes on-demand, should work if we add more scenes.

- Added support for Vulkan timestamp queries by integrating with debug markers.

- Fixed bug where multiple objects with the same name would not be shown (duh).

- Added ability to re-swizzle color channels (BGR -> RGB) when saving screenshot.

- Support for vanilla shadow maps with standard PCF and Poisson-Disk sampling.

- Have been getting warnings on "unused" `sampler2D` objects, fixed it by adding...

- ... specialization constants! No warnings, and array is not over-allocated anymore.

- Added support to live-recompile the HLSL shaders too by calling `dxc` or `glslc`.

- Started writing pipeline and loading code for models (maybe leave it for later...).

- Added a bunch of still missing knobs to the imgui now (just look at that picture!).

- Calculated and stored a running history of timestamp values per-frame in ImGui.

- Visualization of timestamp history by using ImGui `PlotLines` with the averages.

- Added knobs to switch shadow on/off in both the rasterizer and the raytracer :-).
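A running history of timings can be kept in a small ring buffer; this is a sketch with a hypothetical `TimingHistory` class, not vkhr's code. Its `data()`, `size()` and `offset()` are meant to line up with the `values`, `values_count` and `values_offset` parameters of ImGui's `PlotLines`.

```cpp
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

// Fixed-size running history of per-frame GPU timings (in milliseconds).
class TimingHistory {
public:
    explicit TimingHistory(std::size_t capacity) : samples_(capacity, 0.0f) {
        assert(capacity > 0);
    }

    // Overwrites the oldest sample once the buffer is full.
    void push(float milliseconds) {
        samples_[next_] = milliseconds;
        next_ = (next_ + 1) % samples_.size();
        count_ = count_ < samples_.size() ? count_ + 1 : count_;
    }

    // Average over the samples pushed so far (unused slots are zero).
    float average() const {
        if (count_ == 0) return 0.0f;
        return std::accumulate(samples_.begin(), samples_.end(), 0.0f) / count_;
    }

    const float* data() const { return samples_.data(); }
    std::size_t size() const { return samples_.size(); }
    std::size_t offset() const { return next_; } // oldest sample once full

private:
    std::vector<float> samples_;
    std::size_t next_ = 0, count_ = 0;
};
```

Usage could then look like `ImGui::PlotLines("Frame", history.data(), int(history.size()), int(history.offset()));`, with `history.average()` shown as the overlay text.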

- Implemented and integrated full "Approximated Deep Shadow Map" shader now.

- Tried to improve a bit on that by using a smoothed variant of it with Blue-Noise.

- Made sure that IMMEDIATE (i.e. vsync off) worked on AMD hardware. Bugs fixed.

- Fullscreen switch on AMD hardware should work (maybe cross-check resolution).

- Played around a lot with the shadow mapping technique, and profiled it as well:

- Performance: 2048x2048 SM renders in ~1ms, 3x3 PCF Smoothed ADSM ~2ms.

- Target: 13k strands, 1.8M lines, Radeon Pro WX 8200, AMD TR1 16-core 3.4 GHz.

- Started plugging in the compute pipelines for each stage of Dominik's shaders.

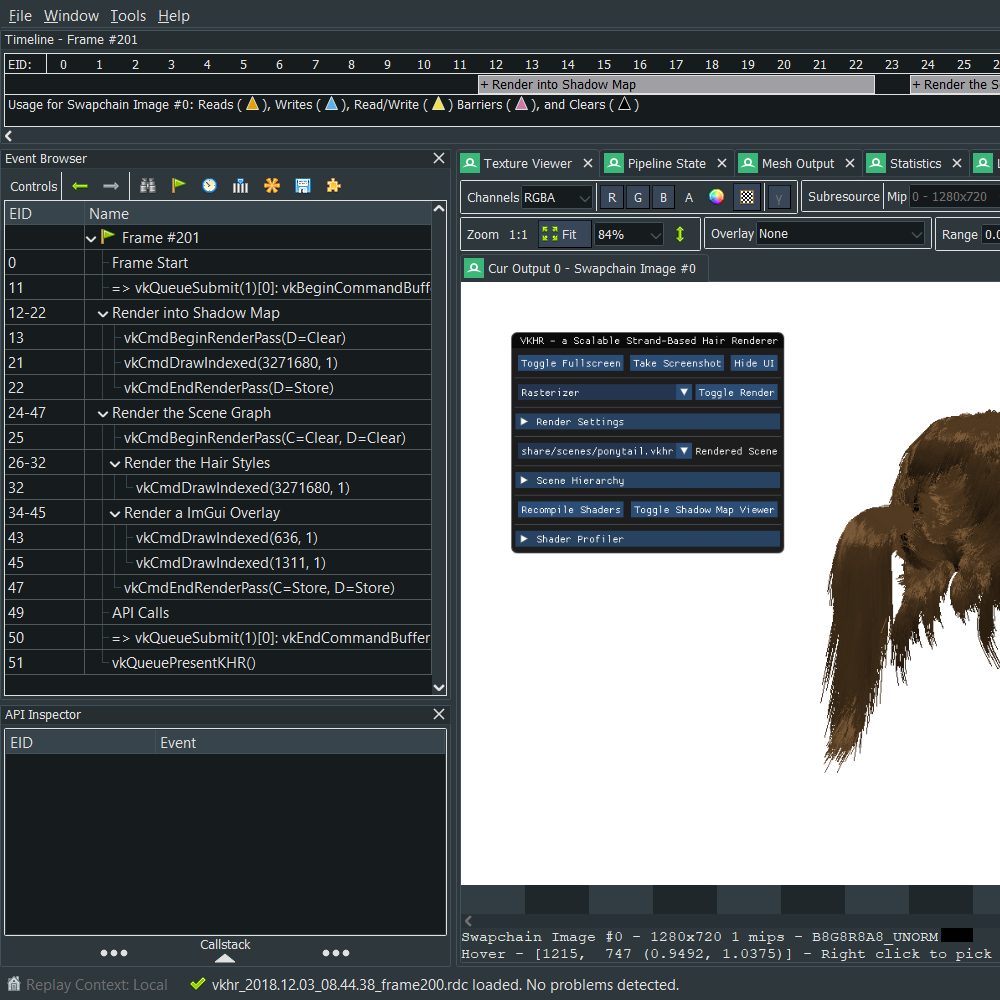

- Tested `ReduceDepthBuffer` and it seems to be doing the right thing (RenderDoc).

- Spent a lot of time writing the thesis report too, need to achieve LiU milestones.

- Added Vulkan debug markers for more understandable RenderDoc capture data.

- Live shader re-compilation now works and can be used with the `R` key or via UI.

- Fullscreen now partially works (swapchain still not recreated though, but "fixed").

- Added depth render pass and the necessary transitions for doing shadow maps.

- Fixed double buffering bugs, should be slightly more correct/better than before.

- Re-written descriptor upload code to make it more flexible for the depth passes.

- Renderer now uses light information from the scene (not hard-coded anymore).

- Renderer produces a shadow map for light source in the scene (only spotlights).

- Integrated GUI knobs using ImGui, still need to wire up the scene graph in there.

- Added and adapted Dominik's HLSL shaders to the current vkhr project structure.

- Compiled the HLSL shaders down to SPIR-V by using GLSLC (look into DXC later).

- Small issues with automatic binding assignment in e.g. `ClipCurves.hlsl`, fix this.

- Fixed some issues with the Kajiya-Kay shader, should now be more-or-less correct.

- Added support for taking screenshots. Need to look into whether blitting is supported.

- Made raytracing output more consistent with the newer Kajiya-Kay GLSL shader.

- Partially implemented "Approximated Deep Shadows" shader. Still need PCF part.

- Major refactor from `main` into `Ray_Tracer` with partial support for `SceneGraph`.

- Parsing of `SceneGraph` from a VKHR scene file, e.g. `share/scenes/ponytail.vkhr`.

- Started parsing `LightSource`, still need to fully integrate it into the `SceneGraph`.

- Parsed the `Camera` settings, and fixed issues with the raytracer's viewing plane.

- Major refactor from `main` into `Rasterizer` with partial support for `SceneGraph`.

- Split `HairStyle` into `vk::HairStyle` and `rtc::HairStyle` for API-specific stuff.

- Created a tiny wrapper for Embree `Ray`, just like in the Embree tutorials section.

- Raytracer now uses the same index buffer as the rasterizer (by adding a 2 x byte stride).

- Improved load time from 5 seconds to 0.5 seconds by pre-generating tangents.

- Hooked ImGui to feed from the GLFW key callback by chaining from `InputMap`.

- Added ability to preview raytraced result by using a staged framebuffer upload.

- Added ability to switch between renderers on the fly (using the `Tab` key for now).

- Started work on adding renderpass for the depth buffers for shadow mapping.

- Fixed arcball rotation bug, it now looks good on both rasterizer and raytracer :)

- Added arcball controls, use the left mouse button to orbit the centered camera.

- Modified tangent pre-generation to extrapolate the last tangent of the strand.
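A sketch of tangent pre-generation with the last tangent extrapolated, assuming one strand is a list of vertex positions with at least two vertices. Each vertex takes the normalized direction to the next vertex, which is one reasonable choice (central differences would be another); the names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec3 { float x, y, z; };

Vec3 normalize(Vec3 v) {
    const float length = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    assert(length > 0.0f);
    return { v.x / length, v.y / length, v.z / length };
}

// Per-vertex tangents for one strand: each vertex points towards the next
// one, and the last vertex (which has no successor) reuses the tangent of
// the final segment instead of getting a zero tangent.
std::vector<Vec3> generate_tangents(const std::vector<Vec3>& strand) {
    assert(strand.size() >= 2);
    std::vector<Vec3> tangents(strand.size());
    for (std::size_t i = 0; i + 1 < strand.size(); ++i)
        tangents[i] = normalize({ strand[i + 1].x - strand[i].x,
                                  strand[i + 1].y - strand[i].y,
                                  strand[i + 1].z - strand[i].z });
    tangents.back() = tangents[strand.size() - 2];
    return tangents;
}
```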

- Added code to generate indices for the rasterizer, this fixes the `tangent` hacks.

- Fixed troublesome race condition when fetching a camera position in raytracer.
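Generating the indices amounts to emitting one line segment per consecutive vertex pair within each strand, so that no segment bridges two strands. The sketch below assumes strands are stored back to back in one vertex buffer and drawn as a line list; the function name is hypothetical.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Line-list indices for strands stored back to back in one vertex buffer:
// a strand with n vertices contributes n - 1 segments (i, i + 1), and no
// segment connects the last vertex of a strand to the next strand.
std::vector<std::uint32_t> generate_strand_indices(const std::vector<std::uint32_t>& vertices_per_strand) {
    std::vector<std::uint32_t> indices;
    std::uint32_t first = 0; // index of the current strand's first vertex
    for (std::uint32_t count : vertices_per_strand) {
        assert(count >= 2 && "a strand needs at least one segment");
        for (std::uint32_t i = 0; i + 1 < count; ++i) {
            indices.push_back(first + i);
            indices.push_back(first + i + 1);
        }
        first += count;
    }
    return indices;
}
```

For two strands with 3 and 2 vertices this yields the indices 0 1, 1 2 and 3 4.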

- Added full support for Kajiya-Kay in raytracer, results now match the rasterizer.

- Parallelized raytracer using OpenMP, still need to do shadow casting reduction.

- Fixed bug in hair loader: some index sequences were repeated more than once.

- Made strand raytracer use Embree's built-in tangent generation via `ray.hit.Ng`.

- ImGui is now fully integrated, still need to fix so that callbacks work right as well.

- Created `vkhr::Interface` as a wrapper for the renderer's GUI, based on ImGui.

- Added fences to support submitting new command buffers (e.g. for ImGui) later.

- Started writing an Embree raytracer, a simple strand renderer. Still a lot of bugs :-(

- Finally added code to create a depth buffer and do the right transitions as well.

- Added code to handle image transitions via cmd pipeline barriers, also for depth.

- Implemented everything needed for a combined image sampler for the textures.

- Created a shader to have something like a billboard for displaying raytraced data.

- Added full Kajiya-Kay shader. Still hard-coded light position and strand colors :-(
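For reference, a sketch of one common Kajiya-Kay formulation: diffuse proportional to the sine of the angle between tangent and light, and specular as the sine between tangent and half vector raised to a shininess exponent, following the half-vector form in Scheuermann's 2004 "Hair Rendering and Shading" notes (without its directional attenuation term). This is not necessarily the exact variant or coefficients vkhr uses, and the names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

Vec3 normalize(Vec3 v) {
    const float length = std::sqrt(dot(v, v));
    assert(length > 0.0f);
    return { v.x / length, v.y / length, v.z / length };
}

struct KajiyaKay { float diffuse, specular; };

// t: unit strand tangent; l, v: unit directions towards the light and the
// eye. Diffuse = sin(t, l); specular = sin(t, h)^shininess, h = half vector.
KajiyaKay kajiya_kay(Vec3 t, Vec3 l, Vec3 v, float shininess) {
    const Vec3 h = normalize({ l.x + v.x, l.y + v.y, l.z + v.z });
    const float t_dot_l = dot(t, l), t_dot_h = dot(t, h);
    // max() guards against tiny negative values from floating-point round-off.
    const float sin_tl = std::sqrt(std::max(0.0f, 1.0f - t_dot_l * t_dot_l));
    const float sin_th = std::sqrt(std::max(0.0f, 1.0f - t_dot_h * t_dot_h));
    return { sin_tl, std::pow(sin_th, shininess) };
}
```

A strand lit and viewed perpendicular to its tangent gets full diffuse and specular, while light along the tangent gives neither.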

- Added `vkhr::LightSource` to describe light sources. Need to upload descriptor.

- Created code to generate tangents from strands since we need it for Kajiya-Kay.

- Added camera controls, and uploaded matrix transforms to the correct shaders.

- Made interface for descriptor sets/pools nicer and more high-level than before.

- Finally, added support for uploading descriptor sets via storage/uniform buffers.

- Added support for indexed drawing as well (won't be used too much for the hair)

- Vertices now go through a staging buffer instead of being readable host memory.

- Quality of life improvements to command buffers and their lifetime management.

- Abstracted away vertex buffer uploads and device memory stuff in general as well.

- A lot of progress, added uploading of camera transforms by using descriptor sets.

- Started looking into the hair strand indexing problem, still some work-in-progress.

- Fixed issues by providing a tangent of [0, 0, 0] at the end points of the hair strand.

- Pre-generated tangent for the hair strands, outputting tangent color in the shader.

- Working upload and example of rendering strands, does not work completely yet.

- Simple (not abstracted) way to upload vertex buffer information on to the device.

- Added command pool and buffer abstraction, can now submit work to the queue.

- Handling of sub- and render passes now works as expected, with a layer on top.

- Abstracted away shader module creation, and added graphics/compute pipelines.

- Added shader compilation scripts for SPIR-V based on `glslc`. Need live reload?

- Finished abstracting away swap chain, framebuffers and image view Vulkan stuff.

- Implemented logical device abstraction and queue family creation/selection too.

- Added scoring function for physical device selection based on discrete GPU type.

- Finished implementing `vkhr::Camera`, `vkhr::SceneGraph`, `vkhr::Node` for scene.

- Wrote Flatbuffers schema for converting AMD-style HAIR files to Cem Yuksel ones.

- Implemented `vkhr::ArgParse` for parsing stuff like rendering settings and scenes.

- Started sketching on `vkhr::Camera`, `vkhr::SceneGraph` and `vkhr::Node` for scene.

- Splitting renderer into `vkhr::Rasterizer` (Vulkan) and `vkhr::Raytracer` (Embree).

- Wrote a lot of wrappers for Vulkan. Set up validation layers and selected the device.

- Imported Embree to the project (that was tricky!) and linked it with the project too.

- Set up repository and `premake5` build system for Unix or MinGW and Visual Studio.

- Implemented `vkhr::HairStyle` for loading and saving Cem Yuksel-style HAIR files.

- Implemented `vkhr::Window` wrapper around the GLFW windowing system (Vulkan).

- Implemented `vkhr::InputMap` for mapping GLFW 3 input to named user bindings.

- Started (lightly) to work on the Vulkan back-end and also the `vkpp` C++ wrapper.

- Made build systems manage dependencies via submodules and assets via Git LFS.